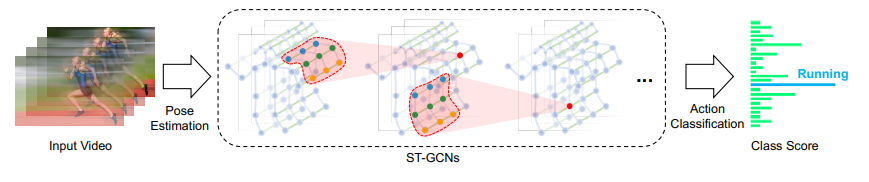

Spatial temporal graph convolutional networks for skeleton-based action recognition

Dynamics of human body skeletons convey significant information for human action recognition. Conventional approaches for modeling skeletons usually rely on hand-crafted parts or traversal rules, thus resulting in limited expressive power and difficulties of generalization. In this work, we propose a novel model of dynamic skeletons called Spatial-Temporal Graph Convolutional Networks (ST-GCN), which moves beyond the limitations of previous methods by automatically learning both the spatial and temporal patterns from data. This formulation not only leads to greater expressive power but also stronger generalization capability. On two large datasets, Kinetics and NTU-RGBD, it achieves substantial improvements over mainstream methods.

| frame sampling strategy | modality | gpus | backbone | top1 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 100 | joint | 8 | STGCN | 88.95 | 10 clips | 3.8G | 3.1M | config | ckpt | log |
| uniform 100 | bone | 8 | STGCN | 91.69 | 10 clips | 3.8G | 3.1M | config | ckpt | log |
| uniform 100 | joint-motion | 8 | STGCN | 86.90 | 10 clips | 3.8G | 3.1M | config | ckpt | log |
| uniform 100 | bone-motion | 8 | STGCN | 87.86 | 10 clips | 3.8G | 3.1M | config | ckpt | log |
| | two-stream | | | 92.12 | | | | | | |
| | four-stream | | | 92.34 | | | | | | |

| frame sampling strategy | modality | gpus | backbone | top1 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 100 | joint | 8 | STGCN | 88.11 | 10 clips | 5.7G | 3.1M | config | ckpt | log |
| uniform 100 | bone | 8 | STGCN | 88.76 | 10 clips | 5.7G | 3.1M | config | ckpt | log |
| uniform 100 | joint-motion | 8 | STGCN | 86.06 | 10 clips | 5.7G | 3.1M | config | ckpt | log |
| uniform 100 | bone-motion | 8 | STGCN | 85.49 | 10 clips | 5.7G | 3.1M | config | ckpt | log |
| | two-stream | | | 90.14 | | | | | | |
| | four-stream | | | 90.39 | | | | | | |

| frame sampling strategy | modality | gpus | backbone | top1 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 100 | joint | 8 | STGCN | 83.19 | 10 clips | 3.8G | 3.1M | config | ckpt | log |
| uniform 100 | bone | 8 | STGCN | 83.36 | 10 clips | 3.8G | 3.1M | config | ckpt | log |
| uniform 100 | joint-motion | 8 | STGCN | 78.87 | 10 clips | 3.8G | 3.1M | config | ckpt | log |
| uniform 100 | bone-motion | 8 | STGCN | 79.55 | 10 clips | 3.8G | 3.1M | config | ckpt | log |
| | two-stream | | | 84.84 | | | | | | |
| | four-stream | | | 85.23 | | | | | | |

| frame sampling strategy | modality | gpus | backbone | top1 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|
| uniform 100 | joint | 8 | STGCN | 82.15 | 10 clips | 5.7G | 3.1M | config | ckpt | log |
| uniform 100 | bone | 8 | STGCN | 84.28 | 10 clips | 5.7G | 3.1M | config | ckpt | log |
| uniform 100 | joint-motion | 8 | STGCN | 78.93 | 10 clips | 5.7G | 3.1M | config | ckpt | log |
| uniform 100 | bone-motion | 8 | STGCN | 80.02 | 10 clips | 5.7G | 3.1M | config | ckpt | log |
| | two-stream | | | 85.68 | | | | | | |
| | four-stream | | | 86.19 | | | | | | |

- The `gpus` column indicates the number of GPUs used to obtain the checkpoint. If you want to use a different number of GPUs or videos per GPU, the best way is to set `--auto-scale-lr` when calling `tools/train.py`. This parameter auto-scales the learning rate according to the actual batch size and the original batch size.
- For two-stream fusion, we use joint : bone = 1 : 1. For four-stream fusion, we use joint : joint-motion : bone : bone-motion = 2 : 1 : 2 : 1. For more details about multi-stream fusion, please refer to this tutorial.
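The fusion ratios above can be read as weights in a weighted sum of per-class scores from each stream. The following is a minimal sketch under that assumption, not the toolbox's own fusion script: the function names `fuse_scores` and `top1_accuracy` are hypothetical, and the toy scores are made-up numbers rather than real model output. Only the weight ratios come from the notes above.

```python
from typing import Dict, List

# Weight ratios taken from the notes above.
TWO_STREAM_WEIGHTS = {"joint": 1.0, "bone": 1.0}
FOUR_STREAM_WEIGHTS = {
    "joint": 2.0,
    "joint-motion": 1.0,
    "bone": 2.0,
    "bone-motion": 1.0,
}


def fuse_scores(stream_scores: Dict[str, List[List[float]]],
                weights: Dict[str, float]) -> List[List[float]]:
    """Return the weighted sum of per-class scores across streams.

    stream_scores maps a stream name to a list of score rows,
    one row per sample and one score per class (hypothetical layout).
    """
    names = list(weights)
    num_samples = len(stream_scores[names[0]])
    fused = []
    for i in range(num_samples):
        row = [0.0] * len(stream_scores[names[0]][i])
        for name in names:
            for c, score in enumerate(stream_scores[name][i]):
                row[c] += weights[name] * score
        fused.append(row)
    return fused


def top1_accuracy(scores: List[List[float]], labels: List[int]) -> float:
    """Fraction of samples whose highest-scoring class equals the label."""
    correct = 0
    for row, label in zip(scores, labels):
        predicted = max(range(len(row)), key=row.__getitem__)
        correct += int(predicted == label)
    return correct / len(labels)


# Toy data: 3 samples, 2 classes (illustrative values only).
toy_scores = {
    "joint": [[0.6, 0.4], [0.3, 0.7], [0.55, 0.45]],
    "bone": [[0.7, 0.3], [0.6, 0.4], [0.2, 0.8]],
}
toy_labels = [0, 0, 1]

two_stream = fuse_scores(toy_scores, TWO_STREAM_WEIGHTS)
print(top1_accuracy(two_stream, toy_labels))
```

In practice the score rows would come from the per-stream test results rather than hand-written lists; the four-stream case works the same way once all four streams are present in `stream_scores`.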

You can use the following command to train a model.

    python tools/train.py ${CONFIG_FILE} [optional arguments]

Example: train the STGCN model on the NTU60-2D dataset deterministically, with periodic validation.

    python tools/train.py configs/skeleton/stgcn/stgcn_8xb16-joint-u100-80e_ntu60-xsub-keypoint-2d.py \
        --seed 0 --deterministic

For more details, you can refer to the Training part in the Training and Test Tutorial.
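When training with a different number of GPUs or videos per GPU, the `--auto-scale-lr` note earlier says the learning rate is adjusted from the actual and original batch sizes. A minimal sketch of that arithmetic follows, assuming the adjustment is linear in the batch size; that rule is an assumption here, not something this page states. The helper `scale_lr` and the base learning rate are hypothetical, and the original batch size of 8 x 16 is read from the `8xb16` part of the config filename.

```python
def scale_lr(base_lr: float, base_batch_size: int,
             num_gpus: int, videos_per_gpu: int) -> float:
    """Scale the learning rate linearly with the actual total batch size.

    Assumption: new_lr = base_lr * actual_batch / base_batch.
    """
    actual_batch_size = num_gpus * videos_per_gpu
    return base_lr * actual_batch_size / base_batch_size


# Hypothetical base learning rate; check the config file for the real value.
BASE_LR = 0.1
# 8 GPUs x 16 videos per GPU, as suggested by "8xb16" in the config name.
BASE_BATCH_SIZE = 8 * 16

# Training on 4 GPUs with 16 videos each halves the total batch size,
# so under linear scaling the learning rate is halved as well.
lr_4_gpus = scale_lr(BASE_LR, BASE_BATCH_SIZE, num_gpus=4, videos_per_gpu=16)
print(lr_4_gpus)
```

With the flag set, this adjustment is applied by the training script itself, so the sketch is only meant to show what value to expect.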

You can use the following command to test a model.

    python tools/test.py ${CONFIG_FILE} ${CHECKPOINT_FILE} [optional arguments]

Example: test the STGCN model on the NTU60-2D dataset and dump the result to a pickle file.

    python tools/test.py configs/skeleton/stgcn/stgcn_8xb16-joint-u100-80e_ntu60-xsub-keypoint-2d.py \
        checkpoints/SOME_CHECKPOINT.pth --dump result.pkl

For more details, you can refer to the Test part in the Training and Test Tutorial.

    @inproceedings{yan2018spatial,
      title={Spatial temporal graph convolutional networks for skeleton-based action recognition},
      author={Yan, Sijie and Xiong, Yuanjun and Lin, Dahua},
      booktitle={Thirty-second AAAI conference on artificial intelligence},
      year={2018}
    }