
[RFC] Use zram-swap to save memory on 32 MiB devices #1692

In some places zram is discouraged, e.g. on devices with only 4 MB of flash (https://openwrt.org/docs/guide-user/additional-software/saving_space#excluding_packages). I haven't tested zram on OpenWrt-based devices yet, though.

According to https://openwrt.org/packages/table/start?dataflt%5BName_pkg-dependencies*%7E%5D=zram we're talking about 15 KB of flash space.

Including missing dependencies (kmod-lib-lz4, swap-utils, block-mount, libssp, possibly more I overlooked), I count about 90 KB for the default zram-swap solution. It might be possible to get by with less, but I haven't looked into it in detail.

Unfortunately I don't have any 8/32 MiB devices deployed, or I would test it. @skorpy2009 As you're unable to put your opinion into words, I assume you're just a negative person.

I have deployed zram-swap on a few devices as well and did see positive effects on runtime behavior. Given that it needs quite a bit of space, I am not sure it should be on by default. How do you feel about building a package so that there is a choice whether to include it or not?

@christf Can't we just add

@CodeFetch Can this be done via site.mk for the ar71xx-tiny target?

@Adorfer Yes, I think so, but I haven't tested it: But I'm not sure whether it makes sense for the tiny target, as flash memory is scarce there. I think it makes more sense for 8/32 MiB devices... Actually I really dislike the idea of compressing RAM, but as a last resort it might be reasonable.

I am currently testing this package. I would like to use the existing respondd / yanic / influxdb / grafana setup to get reliable data.

|

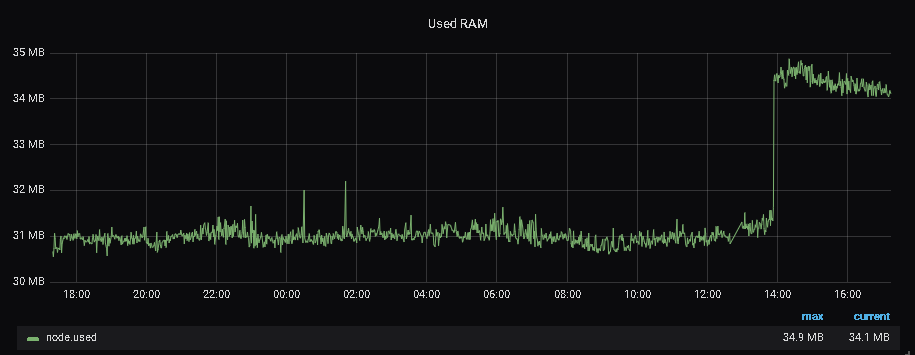

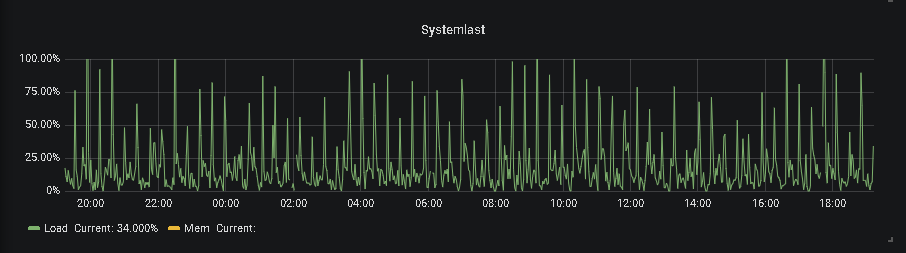

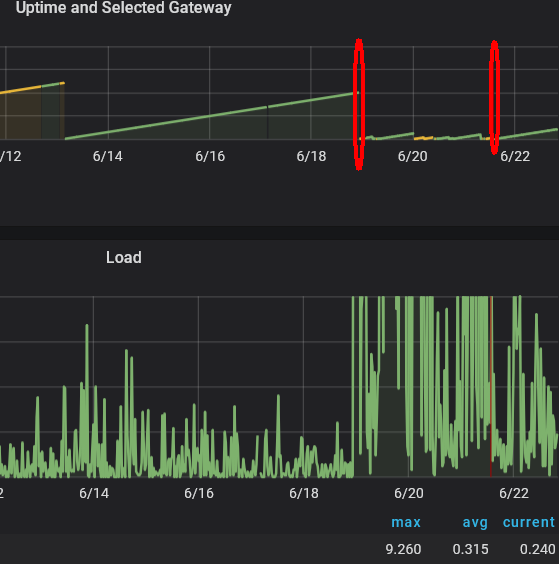

I've applied the update to an Ubiquiti Nanostation M XW (Update at 2pm): Load has increased which might be caused by compression. I will test another device with less RAM. |

From these metrics I understand that zram had the opposite of the expected effect on 64 MB devices? The following question(s) could be: even if metrics are not looking good:

|

|

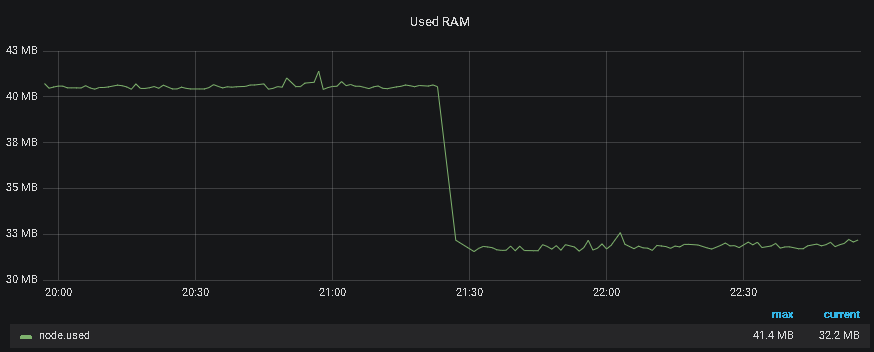

TP-Link TL-WR841N/ND v8 (update ~6pm) This node has four mesh partners, (88%, 77%, 73% and 2%) and no clients. @Adorfer Maybe Zram allocates the space in RAM (like a ballooning device). This would mean, only data that needs to swap will be compressed (which is fine). This would also confirm why 4/32 devices look better. |

Another Ubiquiti Nanostation M XW with non-zram firmware: Same total RAM, less used, no swap.

Same device, but RAM usage settled down.

The output of

The diagrams should be fine then:

How did you apply zram? Just via site.mk or by patching gluon/target-files?

I've used the site.mk packages approach.

TP-Link TL-WR842ND v2 (8 MB flash, 32 MB RAM): Let's say this saves us roughly 8% (2.1 MB) of memory. Does that sound like it's worth it? What result would we expect from zram-swap to make use of it?
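As a rough sanity check on the "8% (2.1M)" figure: the two numbers come from the comment above, while reading "2.1M" as 2.1 MB and treating M and MiB alike are assumptions. If 2.1 MB is 8%, the base would be about 26 MB rather than the nominal 32 MiB, which would fit the 8% being measured against the memory the kernel reports as usable; that reading is a guess, not something stated in the thread.

```python
# Back-of-envelope check of the "8% (2.1M)" figure quoted above.
# Assumptions: "2.1M" means 2.1 MB, and 32 is the nominal RAM in MiB;
# M and MiB are treated alike here, which is fine for a rough estimate.
SAVED_MB = 2.1
CLAIMED_FRACTION = 0.08
NOMINAL_RAM = 32.0


def implied_total(saved: float, fraction: float) -> float:
    """Total that `saved` would have to be `fraction` of."""
    return saved / fraction


print(f"8% of what? {implied_total(SAVED_MB, CLAIMED_FRACTION):.2f} MB")
print(f"share of nominal RAM: {100 * SAVED_MB / NOMINAL_RAM:.1f}%")
# 8% of what? 26.25 MB
# share of nominal RAM: 6.6%
```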

Evaluating those free/MemAvailable/load values "as long as it's non-critical" is one metric. I will try it out on some nodes facing regular "reboots after high load" (OOM and whatever else is happening).
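For anyone who wants to look at these values outside the respondd/Grafana pipeline, a minimal sketch of turning a captured `/proc/meminfo` dump into used-RAM and used-swap figures. `MemTotal`, `MemAvailable`, `SwapTotal` and `SwapFree` are standard Linux fields reported in kB; the sample values below are made up for illustration and are not measurements from this thread. The script is meant to run on a workstation against a dump copied from a node (e.g. the output of `cat /proc/meminfo`), since the nodes themselves normally don't ship Python.

```python
# Sketch: derive memory/swap figures from a captured /proc/meminfo dump.
# Values in /proc/meminfo are reported in kB. SAMPLE is hypothetical data.
SAMPLE = """\
MemTotal:          27000 kB
MemFree:            1500 kB
MemAvailable:       6000 kB
SwapTotal:         13500 kB
SwapFree:          11000 kB
"""


def parse_meminfo(text: str) -> dict[str, int]:
    """Map each /proc/meminfo field name to its numeric value (kB)."""
    fields: dict[str, int] = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            fields[key.strip()] = int(parts[0])
    return fields


def summarize(fields: dict[str, int]) -> dict[str, float]:
    """Share of RAM in use (judged by MemAvailable) and swap in use."""
    total = fields["MemTotal"]
    avail = fields["MemAvailable"]
    swap_used = fields.get("SwapTotal", 0) - fields.get("SwapFree", 0)
    return {
        "mem_used_pct": 100.0 * (total - avail) / total,
        "mem_avail_pct": 100.0 * avail / total,
        "swap_used_kb": float(swap_used),
    }


if __name__ == "__main__":
    # Replace SAMPLE with the contents of a dump copied off a node.
    print(summarize(parse_meminfo(SAMPLE)))
```

Using `MemAvailable` rather than `MemFree` avoids counting reclaimable page cache as "used", which matters here because evicted SquashFS pages are exactly what causes the thrashing discussed in this thread.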

When I tested the SquashFS thrashing situation, it sometimes came down to 0.5 MB.

@Adorfer So it's still bad, but better with zram-swap? Can you post a link to the Grafana page? I can't tell how high the load is, as the graph is cut off.

|

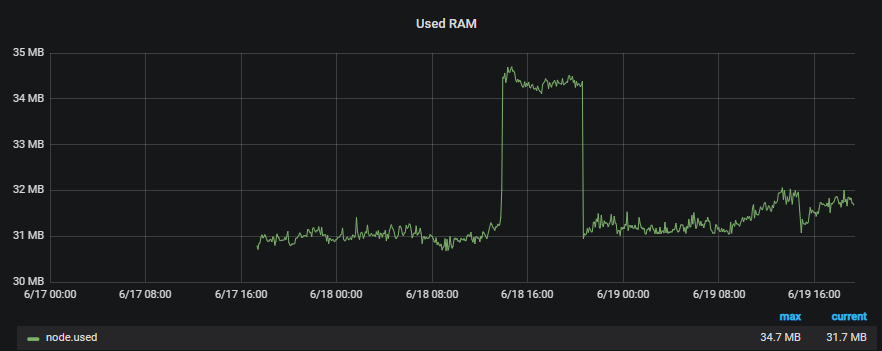

https://map.eulenfunk.de/stats/d/000000004/node-byid?orgId=1&var-nodeid=60e327c6f834&from=1559832353091&to=1561228144453 |

@mweinelt I think you interpreted it wrong. Until June 19 they were running v2016, on

I agree, the time frame has to be changed to see the difference (but this comment does NOT address the actual impact).

The reason why this should mitigate the load issue is that the load issue is a thrashing issue: on a page fault, the data has to be read from flash and the LZMA block containing it in SquashFS has to be decompressed. Decompressing RAM is bad too (but it's swap, and thus holds infrequently used pages), while reading from flash and decompressing big LZMA blocks is worse. Thus I recommend enabling this package for at least all devices with 32 MiB RAM. As swap is only used when memory runs short or to hold infrequently used pages, and because zram-swap is very fast compared to a hard drive, I'd even go further and say: it should not hurt to enable it on all devices. As you can see here, the load has decreased from an average of 1.8 (peak 9.3) without zram-swap to an average of 0.4 (peak 2.2) with zram-swap enabled.
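Put as relative numbers, the load figures quoted in the comment above work out as follows (only the four values from the comment are used; nothing else is measured here):

```python
# Figures quoted above: load without vs. with zram-swap.
without_zram = {"average": 1.8, "peak": 9.3}
with_zram = {"average": 0.4, "peak": 2.2}


def reduction_pct(old: float, new: float) -> float:
    """Relative drop from `old` to `new`, in percent."""
    return 100.0 * (old - new) / old


for key in without_zram:
    pct = reduction_pct(without_zram[key], with_zram[key])
    print(f"{key}: {pct:.0f}% lower")
# average: 78% lower
# peak: 76% lower
```

In other words, both average and peak load dropped to roughly a quarter of their previous values on that node.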

My interpretation of this is that a buffer is getting filled because of many packets: the slab or slub cache needs more space and frees pages, and in the meantime pages need to be reread, which causes high load. I guess it's the slab that needs space, because the traffic goes down. Likely many small packets... Thus there is still a thrashing problem, but it's better with zram-swap, as the router can recover from it. Edit: Another topic: we should consider decreasing the ag71xx NAPI weight and ring buffer size. This might help in such situations, too. Better to drop packets than to thrash, as dropping will trigger fq_codel on client devices that support it and throttle the rate. But what I can see from this: 32 MiB is not enough in the long run, and domains need to get smaller. zram-swap is a quick fix for getting the network back into a state where the needed steps can be taken.
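The argument that rereading evicted pages from SquashFS is worse than swapping them in from zram comes down to how much data has to be decompressed per fault. A toy model of that difference, not a benchmark: it ignores the flash read itself, the 256 KiB SquashFS block size is a hypothetical parameter, and zlib stands in for LZ4 (the compressor pulled in via kmod-lib-lz4), which is not in Python's standard library.

```python
import lzma
import zlib

PAGE = 4096            # typical Linux page size on these devices
BLOCK = 64 * PAGE      # hypothetical SquashFS block size: 256 KiB
PAGES_PER_BLOCK = BLOCK // PAGE


def make_data(n_pages: int) -> bytes:
    """Deterministic, compressible filler standing in for file contents."""
    line = b"gluon respondd batman-adv fastd zram squashfs\n"
    raw = line * (n_pages * PAGE // len(line) + 1)
    return raw[: n_pages * PAGE]


data = make_data(PAGES_PER_BLOCK)

# SquashFS-like: the whole block is one LZMA stream, so getting any
# single page back means decompressing the entire block.
squash_block = lzma.compress(data)


def read_page_from_block(index: int) -> tuple[bytes, int]:
    full = lzma.decompress(squash_block)
    page = full[index * PAGE:(index + 1) * PAGE]
    return page, len(full)  # (page, bytes decompressed)


# zram-like: each page is compressed on its own, so a swap-in
# only has to decompress that one page.
zram_pages = [zlib.compress(data[i * PAGE:(i + 1) * PAGE])
              for i in range(PAGES_PER_BLOCK)]


def read_page_from_zram(index: int) -> tuple[bytes, int]:
    page = zlib.decompress(zram_pages[index])
    return page, len(page)  # (page, bytes decompressed)


if __name__ == "__main__":
    _, squash_cost = read_page_from_block(10)
    _, zram_cost = read_page_from_zram(10)
    print(f"SquashFS-like fault: {squash_cost} bytes decompressed")  # 262144
    print(f"zram-like swap-in:   {zram_cost} bytes decompressed")    # 4096
```

Under these assumptions every SquashFS page fault decompresses 64 times as much data as a zram swap-in, before even counting the slower flash read.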

A little off-topic, but this might be helpful for others who want to test this:

If there were some flag in targets to enable this by default on all 32 MB RAM devices via site.mk, that would be great.

So we change the default then? It'd have my vote.

@mweinelt @NeoRaider ?

FYI:

ACK for 4/32, for 8/32, or NACK? I can live with either decision, but I lean toward 8/32.

@rotanid ACK for 8/32, NACK for 4/32, as flash space is precious there (and the target is deprecated anyway).

It just means it should not be a default for 4/32 MB devices in Gluon (and I agree with that). People can still select it manually in their firmware builds.

Based on this list, I have

Merged #1819, closing.

According to tests, devices with little RAM benefit considerably from RAM compression (freifunk-gluon/gluon#1692). Since there is not always room on 4 MB flash devices, upstream only added it for 8 MB devices with little RAM (freifunk-gluon/gluon#1819). Since we currently have room, we added it for the 4 MB devices.

Has anyone tested it yet? This might mitigate #1243 further.
