
Enable buffering of WebCodecs Encoded Chunks for playback with MSE - aka "MSE for WebCodecs" or "MSE4WC" #184

Comments

Hm. I think MSE was designed to support use cases like DASH and HLS. If you are doing real-time streaming, I would have thought that the WebRTC infrastructure may be more appropriate?

WebRTC has its own overhead: you need to go through the process of setting up a STUN/TURN infrastructure, and then resort to the hacky solution of making it think your stream is a media source (a webcam). When it comes to real-time video on other platforms, you're able to access the decoders at the lowest level. You shouldn't have to overcomplicate the solution to a "simple" problem.

Mozilla opened a similar bug to investigate this problem (https://bugzilla.mozilla.org/show_bug.cgi?id=1325491). You would still need to wrap the data in a container of some kind, because the plain raw data doesn't provide sufficient information to properly display those frames. I do believe that we can make MSE more real-time friendly. However, I'm not convinced that using raw data will help much here. The overhead required to wrap the content in an MP4 or WebM container is rather low.
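To put a rough number on "rather low", here is a minimal sketch that counts the box bytes of a single fMP4 media fragment (moof plus the mdat header) for one track. It assumes a minimal layout: mfhd, a tfhd using default-base-is-moof with no optional fields, a version-1 tfdt, and a trun with a data offset plus a chosen number of 4-byte per-sample fields. The init segment (ftyp/moov) is sent once and is not counted, and real muxers may write more fields. The function name and parameters are illustrative only.

```typescript
// Rough byte count of the container overhead for one fMP4 media fragment
// (moof + mdat header), under an assumed minimal ISO BMFF box layout.
// Sample payload bytes are excluded: only the boxing cost is counted.

const BOX_HEADER = 8; // 32-bit size + 32-bit type (no 64-bit largesize)
const FULL_BOX_HEADER = BOX_HEADER + 4; // + 8-bit version + 24-bit flags

function fragmentOverheadBytes(
  samplesPerFragment: number,
  perSampleFields: number, // 4-byte trun fields per sample (size, duration, flags, cts offset)
): number {
  const mfhd = FULL_BOX_HEADER + 4; // sequence_number
  const tfhd = FULL_BOX_HEADER + 4; // track_ID; default-base-is-moof adds no fields
  const tfdt = FULL_BOX_HEADER + 8; // version 1: 64-bit baseMediaDecodeTime
  const trun =
    FULL_BOX_HEADER +
    4 + // sample_count
    4 + // data_offset (data-offset-present flag set)
    4 * perSampleFields * samplesPerFragment;
  const traf = BOX_HEADER + tfhd + tfdt + trun;
  const moof = BOX_HEADER + mfhd + traf;
  const mdatHeader = BOX_HEADER;
  return moof + mdatHeader;
}

// One frame per fragment vs. 30 frames per fragment, size-only trun entries.
console.log(fragmentOverheadBytes(1, 1)); // 100
console.log(30 * fragmentOverheadBytes(1, 1)); // 3000 (30 single-frame fragments)
console.log(fragmentOverheadBytes(30, 1)); // 216 (one 30-frame fragment)
```

Under these assumptions, boxing every frame individually at 60 fps costs about 6,000 bytes/s (48 kbit/s), which is small next to a video stream of a few Mbit/s.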

In solutions I've created outside the web, I've only used raw data to achieve 60 FPS real-time video, so I can't speak much to container-format solutions. The benefit of MSE is hardware acceleration. However, in my efforts to get real-time streaming working via MSE, delays often show up due to the I-frame delay present when sending fragmented MP4s. A workaround is to send frames individually as soon as they are captured, which is less than ideal since each one has to be boxed and every millisecond counts. If you have any suggestions for an approach using the standard we currently have, I'd appreciate fresh eyes.

I think you're making too many assumptions about how MSE implementations work internally. Sending raw frames instead of having them muxed in an MP4 container would make zero difference to decoding speed, or to the ability to use hardware rather than software decoding. Both would be identical. The only thing you would save with raw frames is the time it takes to demux an MP4, which is barely relevant compared with the processing required to decode a frame. Using individual frames in a fragmented MP4 instead of multiple frames per fragment would also make no difference in practice: whether you package 30 frames in a single MP4 fragment or use 30 fragments of 1 frame each, the latency would be the same (as far as the first decoded sample is concerned).

BTW, the hardware decoder in Windows might be instructed to enable low-delay mode (…). Also, I recall my experience with the DXVA H.264 decoder: it produced output with a reasonably small delay in terms of additional input data. It does require some processing time because, for example, it is multithreaded internally and some synchronization is involved; however, that time is much shorter than many additional input frames' worth of payload data.

CODECAPI_AVLowLatencyMode is only available on Windows 8 and later (and a service pack is required). We also had to disable it because it easily caused crashes (see https://bugzilla.mozilla.org/show_bug.cgi?id=1205083). FWIW, even with CODECAPI_AVLowLatencyMode and H.264, the latency is around 10 frames (the number of inputs for which MF_E_TRANSFORM_NEED_MORE_INPUT is still returned). If you dispute that the latency is that high without it, it may be worth trying it yourself first.

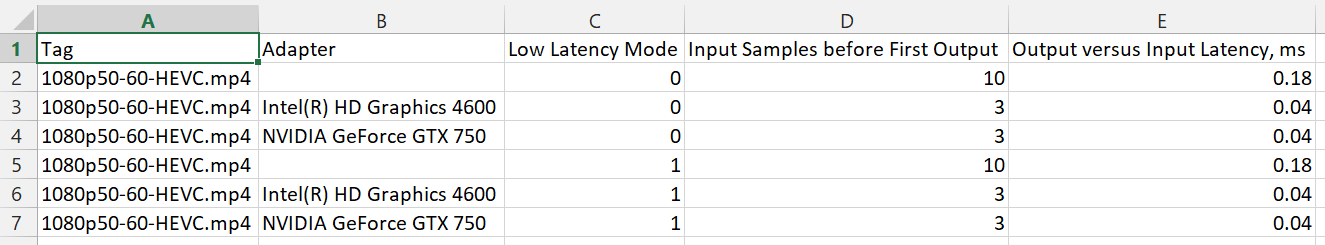

I finally had a chance to check the decoder output on Windows 10, and whether low-latency mode has an effect. As I assumed, the decoder MFT does not need 10+ frames of input before output is produced. Indeed, in default mode there is some latency, and you keep feeding input before output is available. In low-latency mode it's "one in, one out", and it works great. Let me make this absolutely clear: in low-latency mode, one calls IMFTransform.ProcessInput, and the following ProcessOutput call delivers a decoded frame instead of returning MF_E_TRANSFORM_NEED_MORE_INPUT. It is quite possible that it had issues in the past. But it does work now, and low-latency mode has great value for near-real-time video apps.
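The difference between "one in, one out" and the buffered default mode can be illustrated with a toy model. This is not the Media Foundation API: the `ToyDecoder` class and `inputsBeforeFirstOutput` helper below are hypothetical. The model simply holds back a fixed number of frames (`lag`) before `processOutput()` returns anything, mimicking an MFT that answers MF_E_TRANSFORM_NEED_MORE_INPUT while its internal pipeline fills.

```typescript
// Toy model (hypothetical, not the Media Foundation API) of a decoder that
// withholds output until `lag` extra inputs are queued, the way an MFT
// returns MF_E_TRANSFORM_NEED_MORE_INPUT while its pipeline is filling.

const NEED_MORE_INPUT = "MF_E_TRANSFORM_NEED_MORE_INPUT";

class ToyDecoder {
  private readonly queue: number[] = [];
  private readonly lag: number;

  constructor(lag: number) {
    this.lag = lag;
  }

  processInput(frameIndex: number): void {
    this.queue.push(frameIndex);
  }

  // Returns the next "decoded" frame index, or NEED_MORE_INPUT.
  processOutput(): number | typeof NEED_MORE_INPUT {
    if (this.queue.length > this.lag) {
      return this.queue.shift()!;
    }
    return NEED_MORE_INPUT;
  }
}

// Feed frames one at a time and count how many inputs went in before the
// first decoded frame came out (-1 if none within totalFrames inputs).
function inputsBeforeFirstOutput(lag: number, totalFrames: number): number {
  const decoder = new ToyDecoder(lag);
  for (let i = 0; i < totalFrames; i++) {
    decoder.processInput(i);
    if (decoder.processOutput() !== NEED_MORE_INPUT) {
      return i + 1;
    }
  }
  return -1;
}

console.log(inputsBeforeFirstOutput(0, 30)); // 1: "one in, one out"
console.log(inputsBeforeFirstOutput(10, 30)); // 11: ~10 frames of latency
```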

@roman380

@Andrey-M-C Three frames behind with DXVA2-enabled decoding.

@roman380 Thanks for the response! I see the same pattern. If you set CODECAPI_AVDecNumWorkerThreads to 1 for the software decoder, then you'll be 4 frames behind, since only one decoder thread will be spawned instead of the default four. Is there any way to get clarification from Microsoft about the absence of a low-latency mode in the HEVC MFT?

@Andrey-M-C I agree that the decoder lacks flexibility, and low-delay mode does not even appear to be available. In particular, a sequence of key frames only still results in a 9-frame latency with the software decoder, which suggests the latency is there by design (?). The best place to ask for a comment from MS (other than opening an issue with support directly) that I am aware of is the MSDN Forums; however, responses there are still slow and infrequent.
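To translate the "frames behind" figures in this exchange into wall-clock delay, the added latency is frames divided by frame rate. A minimal sketch, assuming a constant frame rate (the 30 and 60 fps values are examples, not figures from the thread):

```typescript
// Added delay, in milliseconds, when a decoder runs `framesBehind` frames
// behind its input at a constant frame rate.
function latencyMs(framesBehind: number, fps: number): number {
  return (framesBehind * 1000) / fps;
}

// Frame counts mentioned in this thread: DXVA2 (3), single-threaded software
// decoder (4), key-frame-only stream on the software decoder (9), and ~10.
for (const frames of [3, 4, 9, 10]) {
  console.log(
    `${frames} frames: ${latencyMs(frames, 30).toFixed(1)} ms @30fps, ` +
      `${latencyMs(frames, 60).toFixed(1)} ms @60fps`,
  );
}
```

At 60 fps each frame of decoder lag costs about 16.7 ms, so the 9-frame case adds 150 ms.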

I think this issue merits a slight re-framing (pun intended):

I propose this issue be refocused to target the latter.

We've actually managed to "trick" Chrome and Firefox into decoding in ultra-low-latency mode. Of course, gaps in data are still a potential issue, but in the linked example there is only 7 ms of delay between the host and the client. So at least we know it's possible.

Can you provide any details on how you tricked Chrome and Firefox into hardware-decoding H.264 fast enough to allow less than 7 ms of total presentation latency? I would like to try reproducing your results. Was that just for decoding, or total end-to-end including encoding, network transport, decoding, and Windows desktop rendering/composition? Thanks.

With the right content, the Windows WMF H.264 decoder may have no latency. That mode is enabled by default in Chrome, though the Microsoft documentation states that it's not supposed to work with content that has B-frames.

Documentation quote: "B slices/frames can be present as long as they do not introduce any frame re-ordering in the encoder." |

Almost all YouTube content has B-frames that require reordering, as most B-frames do. And yet Chrome always enables low-latency mode, and it obviously works. Edit: oh, I just noticed that the comment about B-frames relates to the encoder only. We disabled it in Firefox because it caused crashes with some versions of Windows 8.
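Why reordering matters for latency can be shown with a short sketch. Given frames in decode order, each labelled by its presentation index, the hypothetical `reorderDelay` function below computes how many frames a decoder must hold back to emit them in presentation order. It is an illustrative model of reorder delay only; the GOP patterns are examples, not taken from any particular encoder.

```typescript
// Minimum output delay (in frames) caused by reordering: frame k can be
// shown only once every frame with presentation index <= k has been decoded.
// `decodeOrder[i]` is the presentation index of the i-th decoded frame;
// the indices are assumed to be a permutation of 0..n-1.
function reorderDelay(decodeOrder: number[]): number {
  const n = decodeOrder.length;
  const decodePos = new Array<number>(n);
  decodeOrder.forEach((pts, i) => {
    decodePos[pts] = i;
  });

  let readyAt = 0; // decode step by which frames 0..k are all available
  let maxDelay = 0;
  for (let k = 0; k < n; k++) {
    readyAt = Math.max(readyAt, decodePos[k]);
    maxDelay = Math.max(maxDelay, readyAt - k);
  }
  return maxDelay;
}

console.log(reorderDelay([0, 1, 2, 3, 4])); // 0: I P P P P, no reordering
console.log(reorderDelay([0, 3, 1, 2, 6, 4, 5])); // 1: I P B B P B B
console.log(reorderDelay([0, 4, 2, 1, 3, 8, 6, 5, 7])); // 2: hierarchical B-frames
```

With no reordering the delay is zero, which is consistent with the documented condition that B slices/frames are acceptable as long as they do not introduce frame reordering.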

This bug, "Enable low-latency decoding on Windows 10 and later", suggests that we might finally have

With the advent of the WebCodecs API, there are now possibilities I'm looking into around supporting a "WebCodecs" bytestream format for use in MSE, where encoded chunks and configurations (if not also decoded chunks) might be bufferable via new bytestream/MSE feature support. That work seems best tracked by this issue.

I'm picking up API shape exploration for buffering WebCodecs encoded chunks, as at least a partial solution for this spec issue. The experimental prototype implementation in Chromium will similarly be tracked by https://crbug.com/1144908.

I have created an explainer for supporting buffering of containerless WebCodecs encoded media chunks with MSE, for low-latency buffering and seekable playback. Please take a look: https://github.com/wolenetz/mse-for-webcodecs/blob/main/explainer.md

Stubs new MSE methods and overloads that, when fully implemented in later changes, would allow:

1. use of WebCodecs decoder configs as addSourceBuffer() and changeType() arguments (in lieu of parsing initialization segments from a container bytestream), and
2. buffering of WebCodecs encoded chunks via appendEncodedChunks() (in lieu of parsing media segments from a container bytestream).

Much of the complexity of this initial change is in the coordination of the IDL bindings generator to achieve disambiguated overload resolution, primarily to keep the exposed API simple (only 1 actual new method name is added, corresponding to bullet 2, above), using two approaches:

* Dictionary of Dictionaries: SourceBufferConfig wraps either a WebCodecs audio or video decoder configuration. Without such a distinct new type wrapping them, unioning or overloading would fail to resolve.
* Unions, with caveats: the new appendEncodedChunks method takes either sequences of audio or video chunks, or single audio or video chunks, all in a single argument of IDL union type.
  Caveat: "sequence<A> or sequence<V>" cannot be disambiguated by the bindings, so sequence<A or V> is used in this change. Regardless, the eventual implementation would need to validate that all in the sequence are either A or all are V (along with the usual validation that appended chunks or frames also appear to use the most recent SourceBufferConfig).
  Caveat: The bindings generator requires help when generated union type identifiers are too long for some platforms. This change adds a seventh case to the existing hard-coded lists of names that need shortening with the generator.

I2P: https://groups.google.com/a/chromium.org/g/blink-dev/c/bejy1nmoWmU/m/CQ90X3j5BQAJ
TAG early-design review request: w3ctag/design-reviews#576
Explainer: https://github.com/wolenetz/mse-for-webcodecs/blob/main/explainer.md
MSE spec bug: w3c/media-source#184

BUG=1144908
Change-Id: Ibc8bd806fe1790ae74fe5ce86865cdfebcdc3096
Reviewed-on: https://chromium-review.googlesource.com/c/chromium/src/+/2515199
Commit-Queue: Matthew Wolenetz <wolenetz@chromium.org>
Reviewed-by: Dale Curtis <dalecurtis@chromium.org>
Reviewed-by: Kentaro Hara <haraken@chromium.org>
Reviewed-by: Dan Sanders <sandersd@chromium.org>
Reviewed-by: Chrome Cunningham <chcunningham@chromium.org>
Cr-Commit-Position: refs/heads/master@{#830837}
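The validation described in the caveats above (a SourceBufferConfig carries exactly one of an audio or a video decoder config, and a sequence passed to appendEncodedChunks must be all-audio or all-video, matching the most recent config) can be sketched in plain TypeScript. The types below are simplified, hypothetical stand-ins, not the WebCodecs or MSE IDL: the `audioConfig`/`videoConfig` member names are assumed to mirror the prototype's SourceBufferConfig, the `kind` discriminator replaces the real distinction between EncodedAudioChunk and EncodedVideoChunk, and `configKind`/`validateAppend` are illustrative helpers.

```typescript
// Hypothetical stand-ins for the WebCodecs/MSE types; only the fields
// needed for the checks below are modelled.
interface AudioDecoderConfigLike {
  codec: string;
  sampleRate: number;
  numberOfChannels: number;
}
interface VideoDecoderConfigLike {
  codec: string;
}

// Assumed shape: exactly one of audioConfig / videoConfig must be present.
interface SourceBufferConfigLike {
  audioConfig?: AudioDecoderConfigLike;
  videoConfig?: VideoDecoderConfigLike;
}

type ChunkLike =
  | { kind: "audio"; timestamp: number; data: Uint8Array }
  | { kind: "video"; timestamp: number; data: Uint8Array };

function configKind(config: SourceBufferConfigLike): "audio" | "video" {
  const hasAudio = config.audioConfig !== undefined;
  const hasVideo = config.videoConfig !== undefined;
  if (hasAudio === hasVideo) {
    throw new TypeError("SourceBufferConfig needs exactly one of audioConfig or videoConfig");
  }
  return hasAudio ? "audio" : "video";
}

// Because the bindings accept sequence<A or V>, every chunk must be checked:
// all chunks must share one kind, and that kind must match the current config.
function validateAppend(
  currentConfig: SourceBufferConfigLike,
  chunks: ChunkLike | ChunkLike[],
): void {
  const expected = configKind(currentConfig);
  const list = Array.isArray(chunks) ? chunks : [chunks];
  for (const chunk of list) {
    if (chunk.kind !== expected) {
      throw new TypeError(`expected ${expected} chunks, got ${chunk.kind}`);
    }
  }
}

const cfg: SourceBufferConfigLike = { videoConfig: { codec: "avc1.42E01E" } };
const v = { kind: "video" as const, timestamp: 0, data: new Uint8Array(0) };
const a = { kind: "audio" as const, timestamp: 0, data: new Uint8Array(0) };
validateAppend(cfg, [v, v]); // passes
try {
  validateAppend(cfg, [v, a]); // mixed sequence is rejected
} catch (e) {
  console.log((e as Error).message); // "expected video chunks, got audio"
}
```

Checking each chunk against the config's kind enforces both rules at once: a homogeneous sequence of the wrong kind and a mixed sequence are rejected by the same comparison.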

I intend to transition the Chromium experimental implementation into origin trials to obtain further feedback on the ergonomics and usability of this feature. Some example use cases include simplifying and improving the performance of transmuxing HLS-TS into fMP4 for buffering with MSE, and low-latency streaming with a seekable buffer. Please reach out to me (wolenetz@google.com) or post here if you are considering using this feature, and if you might want to participate in the origin trial.

* Updates MediaSource and SourceBuffer sections' IDL to reference the new methods and types.
* Updates SOTD substantives list format and content to include this feature.

See w3c#184 for the spec issue tracking this feature's addition.

(Remove this set of lines during eventual squash and merge:)

* Adds placeholder notes including references to the WebCodecs spec to let the updated IDLs' references to definitions from that spec succeed. Upcoming commits will remove the placeholders and include exposition on the behavior of the updated IDL, possibly also refactoring reused steps into subalgorithms.

MSE-for-WebCodecs feature specification [1] needs to normatively reference these concepts.

[1] w3c/media-source#184

The Chromium experimental implementation is currently in origin trials (as of M95). Note that there are some short-term bugs I'm working to fix in the Chromium prototype, hopefully in time for the M96 milestone:

As mentioned in mozilla/standards-positions#582, "Work on this in Chrome and in spec is currently stalled. We're looking for potential users of this API. If you are aware of users or use cases that could benefit from this work, please share if you can. Otherwise, this spec feature may not progress beyond the current preliminary experimental implementation in Chrome and unmerged spec PR."


As of Chrome 120.0.6074.0+, the prototype API now supports EME. The speculative IDL can be seen at w3c/webcodecs#41 (comment). Does anyone have opinions on

This seems really interesting for A/V-synchronized playback without having to containerize WebCodecs encoded chunks. Are there any MSE player samples that use WebCodecs-encoded A/V chunks?

It seems rather counterintuitive for the API to force boxing of video frames. When building real-time interactive applications like web-based remote desktop, low latency is key, and MSE forces a lot of overhead.

Ideally, allowing raw H.264-encoded frames to be passed to the hardware-accelerated decoder and pushed into a video element would solve these issues.
