-

Notifications

You must be signed in to change notification settings - Fork 77

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Spread execution of unit tests over time #95

Comments

|

Good idea! How would the spreading work? What would the criteria be for setting these times? Remember that you can set a separate cycle-time for the unit tests. There might even be a way to ignore cycle-overruns (not create them at all) for specific tasks? This is also related to #54. |

|

I made a first implementation which works as follows. I added a function Example usage: I think running it on a longer cycle time will only go so far. You bump into the limit again when you add more tests. Also you need to reserve another core for this longer cycle time I think? I'm not sure if there is a way to ignore cycle overruns. As I mentioned the reason I opened this issue is that I was having problems with the timers. My first solution was to just delay all unit tests until my normal code had initialized, since the normal code of my project is quite computationally intensive in the first few cycles. However, this didn't work because apparently Relating this to #54, I could also work on this. Implementation could be done similar to the delayed test. For example add another method Finally maybe we could make What do you think about it? |

|

Hi @Roald87! Thanks for all your great input/suggestions and I'm really sorry for late response. We've had like three minor build of TwinCAT since you wrote this issue initially. Shame on me 🙈

I've been looking at how other unit-testing frameworks do this, and although it's not fair to do a comparison of "IT"-unit testing frameworks such as JUnit, there still seem to be some need for this in here as well. JUnit recently added support: Obviously I'm afraid to break any existing TcUnit users functionality. If anyone downloads the next TcUnit-release and re-runs their existing tests, they should produce the exact same result, so this needs to be taken into consideration. @Roald87, let me know your thoughts. As I've had numerous people e-mail me over this now, it seems to be important :-) |

|

Hi @sagatowski! No problem. I know it is difficult to work on a project when you have a job and a life next to it :p. Glad to help and support the project when I can! Can you maybe clarify something first? Are people requesting ordered tests or spreading tests? My goal was to spread unit tests over time, to prevent failures because of cycle time exceeds. You are focusing on test order. Of course with my suggested implementation there will be a certain order in the tests, but that is more a side effect than the goal. As you also rightfully note that there should not be any order in the tests. Won't implementing ordered test only encourage "wrong" unit test writing? |

|

Hi @Roald87! The requested feature is to purely be able to spread the execution of the tests. I've had a few requests now where users want to use TcUnit on cheap PLCs. So you are entirely correct, tests should not be dependable on each other or the running order, but in this case it's just a side effect of achieving the goal of spreading the execution (test of course still should be written in such a way that the outcome of one test should not affect the outcome of another test). |

Not sure if I understand this. The

Make a new FB: Until now I have been using my I just thought of an alternative. What about adding a method And then |

But if you were to do a delayed test, wouldn't you want it to be delayed long enough so that all previous tests would be finished? Wasn't the idea to make sure that one test could run, finished, and then the next one etc in a sequence? (so that we wouldn't get exceed overruns?) Or do we possibly talk about apples and pears here? :-)

Wouldn't this still require to have a special TEST()-method? The thing here is that we don't want any code to run inside of a test unless it's time for it to run. Wouldn't that still require us to somehow define sequence order of tests?

Yes, exactly this is what I want to avoid, that you should not have to care about how much time any previous (or following) tests took.

But wouldn't that mean that the tests would still run parallell with each other (but only those one defined in spread_test()?) The use case that people have been asking about is to solve the problem that they get exceed overruns, because all tests are run in parallell, and they want to be able to run them in sequence (one after the other, so that it's possible to do tests on a target CPU and now have timers that are timing out because of overruns). I just want to make sure we have the same goal :-) |

Maybe I was thinking of a simpler approach. Of course running al your tests in sequence would be the safest bet that there are no exceed overruns. I think that my suggestion with

Yeah probably. I think that this approach would only enable test suites to run in sequence, not the tests themselves. |

|

Hi guys, I would like to join the discussion. My idea: Only the test suits are executed one after the other I would write 2 new FUN. This function calls a new method in Here the For loop is replaced and the counter is incremented when the TestSuit is finished. 2.) This function calls a new method in The same implementation as 1.) only that before increasing the counter of the testsuits a time x is waited. So the time between two TestSuits can be set. This is to get possibly more time for the evaluation of the ADSBuffer. If now the TestSuits run one after the other, the evaluation must be adapted to this process. This new FB will only be called from the 2 new Methodes With such an extension it should be possible to have the program downward compatible When switching between parallel and sequence processing only The testsuits run according to the instantiation order. Would this be a possibility that could be considered? |

|

@Beidendorfer I think the suggestion with I'll look into this as I personally need this type of functionality for a project I use myself. |

|

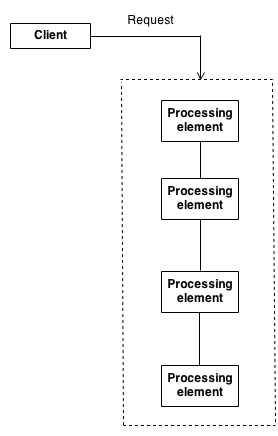

Hello, I thought maybe the chain of responsibility pattern could help out in this matter in order to streamline the tests atomically one by one. |

|

@Beidendorfer Gahhh this was a much harder problem to solve than I initially thought. I'll hopefully have something commited today or latest tomorrow for test. Initially I will include the RUN_IN_SEQUENCE(), but for release 1.2 of TcUnit I would like to have something where it's possible to run individiual tests in a sequence/ordered as well. |

When the code is pushed I'll have a look at it, I'm curious which implementation it has become. For the ordering I could imagine For the sequence I could imagine, that first the The programmers are responsible for the correct order iOrderID's. I can't tell how huge the work is to adjust the reporting to the new order. Another question: Is it possible to run |

|

You don't need any special FB_TestSuite_Ordered. The only difference for the developer is to call For running the tests, and specifying the order, I thought the best thing is to do it the same way as test suites, that is calling the tests in the order you want to run them. In this way the developer doesn't need to:

|

If the order of the test in Or is there a

Maybe we should lock that internally, that only one can run and otherwise abort and give out an error message. I am very glad that this extension |

This executes the test suites one after the other (as opposed to RUN() which executes all test suites in parallel). For more details, see #95. Also changed method access modifiers for all methods in TcUnit so that none is left with the default (PUBLIC). Incremented version of TcUnit to 1.2.0.0.

|

Added the RUN_IN_SEQUENCE(). Please see commit d152c95 for details. |

If we have two test suites: Then all tests in

Yes I think we should also add the TEST_ORDERED to this.

This should give maximum flexibility of how to run the tests, without being too confusing for the user.

good idea!

me too 😃 |

|

For the upcoming release of TcUnit 1.2.0.0 I've written some instructions of this new functionality in the FAQ of TcUnit. Please review and comment if you think something is unclear/missing: |

|

Hi I have made the first tests. First of all, thanks @sagatowski for the implementation. I ran some worst-case tests. After approx. 2000 messages TC Unit stops without making an evaluation, no error message, no indication whether TCUnit is still working. When I increase the ADS memory For performance reasons I want to keep the memory small To achieve this, I started the 10 TestSuits one after the other with a delay.(10s) Now I can not only start the individual tests 1 after another but also distribute them over a period of time (cycles). My implementation: pass this to changed Code now it waits a Time between one Test Suite and another. What is your opinion? Is it just a special case or interesting for everyone? I think it is a combination with @Roald87 idea. |

|

@Beidendorfer Although I find it a very special use case with over 10 test suites with over 200 test cases in each, I also like the idea that this makes it possible to run tests on less capable machines (such as the CX8100-series or the new CX7000-series) that most likely will have even less capable ADS routers. I wish the problem with too many ADS-messages didn't exist, but with this reality and the fact that there probably are people using tiny/low performant PLCs just to run their tests (especially in a CI/CD toolchain with TcUnit-Runner), I think yours and @Roald87 suggestion is great. Could you do a PR with your code? Thanks for this suggestion! Edit: Thanks for putting your time and effort into doing this investigation and test. It's highly appreciated. |

|

@Roald87 would this solution ( Time to stop coding and prepare for some new years celebrations. Happy new 2021 to you @Roald87 @Beidendorfer @Aliazzzz ! |

|

I think the |

…sue #95. Merged changes from @Beidendorfer that allows RUN_IN_SEQUENCE() to have a time that defines how long the time will be between test suites and that solves issue #128. Reverted changes where <LineId> was introduced and TwinCAT project files were updated to 4024 (whilst it should use 4022).

|

I've just made a commit that includes the TEST_ORDERED. See 26f40b4 for all details. |

|

I've also created three example TwinCAT projects (+ describing documentation) for some examples of how to run test suites and tests in sequence. I think we are getting close to closing this issue. |

|

I like the idea of TEST_ORDERED to allow the developer to limit the number of concurrent tests occurring in a TestSuite, and RUN_IN_SEQUENCE to limit the number of concurrent TestSuites to 1. Can I suggest an enhancement? Have a MAX_CONCURRENT_TEST_SUITES parameter (input to RUN_IN_SEQUENCE or even RUN) to allow multiple suites to run concurrently. This would allow a developer to speed up the testing run, but still allow them to limit resource usage. |

|

I tried I think @RodneyRichardson has a good suggestion by allowing multiple concurrent test suites to run. Maybe an even better (or additional) solution would be to have a

Some other suggestions:

|

|

@RodneyRichardson Thanks for the suggestion. This is possible to do today, but only with TESTS, and not TEST-SUITES. The question that arises is though how we prioritize the test-suites? If you only want two test-suites to be executed at the same time, how do we know which ones should go first? Declaration decides? More parameters? @Roald87, do you mean there is a bug or is it just a general notation that it doesn't work for your usecase? If a test takes too long time, why don't you run it as a All these suggestions make me realize that I will most likely remove the Regarding the point with initial delay for the test-suites, I think this is what Again, 1.2 is not yet released so things can still change. |

|

It didn't work for my use case. Currently I have some custom methods, which delay tests by a certain number of cycles. I got this to work with some trail and error. I wanted to see if I also could run the tests by converting to v1.2 and running all tests in sequence using I could probably optimize the tests by using a mix of

I meant an initial delay for all the unit tests to start. |

|

So if you changed your Why is an initial delay to start all test suites necessary? |

I think so yes.

There is a lot of cpu intensive initialization going on in this project. So it is better to wait with the unit tests. |

I see, never thought of this use case. I guess one option would be to add a parameter where you specify how long TcUnit should wait before it executes the tests, would that solve that particular problem? Edit: All of this makes me really curious of how you and other are using TcUnit. Should have a "TcUnit users group"-day ;-) |

That would solve it indeed. I also once tried to put a timer around

That would be an online meeting then, but big online meetings are not really a success usually. Even with people you know. But it would be nice to exchange ideas. |

|

I consider this issue to be solved. |

|

These changes have been made that are related to this issue.

|

When a lot of unit tests or calculation intensive tests have to be executed, the task cycle time can get exceeded. If the cycle times exceeds, unit tests can fail which make use of timers.

Maybe it is a good idea to somehow enable the spreading of the execution of unit tests?

The text was updated successfully, but these errors were encountered: