-

Notifications

You must be signed in to change notification settings - Fork 13.6k

Run translation and LLVM in parallel when compiling with multiple CGUs #43506

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

r? @arielb1 (rust_highfive has picked a reviewer for you, use r? to override) |

|

Pre-assigning @alexcrichton for review, so he can already start reading |

|

🎊 |

|

As a future thought for how |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looking great!

The general framework of who's running what when seemed a little confusing to follow, but I think a comment would go a long way towards helping that.

src/librustc_trans/back/write.rs

Outdated

| if let Ok(token) = token { | ||

| tokens.push(token); | ||

| } else { | ||

| shared_emitter.fatal("failed to acquire jobserver token"); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could the error be emitted here as well?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What do you mean? Just panic?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Oh just something like:

match token {

Ok(token) => tokens.push(token),

Err(e) => shared_emitter.fatal(&format!("failed to acquire jobserver token: {}", e)),

}There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ah ok, yes.

src/librustc_trans/back/write.rs

Outdated

| } | ||

|

|

||

| Message::TranslationDone { llvm_work_item, is_last } => { | ||

| work_items.insert(0, llvm_work_item); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How come this is inserted on the front?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No reason. I changed that during a debugging session. I'll switch it back to a push.

src/librustc_trans/back/write.rs

Outdated

| assert_eq!(trans_worker_state, TransWorkerState::LLVMing); | ||

| trans_worker_state = TransWorkerState::Idle; | ||

| } else { | ||

| drop(tokens.pop()); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think we discussed this awhile ago, but should we perhaps not drop the token here? If we greedily hold on to our tokens then that means we can more quickly finish this compilation, which in theory may be desirable to reduce overall memory usage?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, good idea. The truncate above should take care of dropping the Token if we actually don't need it.

src/librustc_trans/back/write.rs

Outdated

| } | ||

| } | ||

| } else { | ||

| match trans_worker_state { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm currently finding the logic here sort of hard to follow in terms of what this "trans worker" is doing. Could you be sure to add a comment with a high-level architecture of what the relationship is between this coordinator thread, the main translation thread, and the worker codegen threads?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ok so to see if I understand this:

- The documentation above refers to a "translation worker"

- This trans worker seems to represent the "ephemeral token" that we inherently have to run work from a jobserver

- The literal thread representing the trans worker can change I think? Sometimes it's the literally the thread doing translation, sometimes it's a spawned worker here to translate an existing module.

- If we happen to reach 4 translated codegen units but not codegen'd codegen units then we request the translating main thread to stop, and continue its work with a freshly spawned thread to codegen a module.

Does that sound roughly right?

Ah I see now there's also a crucial piece where when any codegen thread finishes we consider it "trans worker" thread now available again. This means after any codegen thread finishes we may be candidate to start translation of another unit of work I think, right?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah I see now there's also a crucial piece where when any codegen thread finishes we consider it "trans worker" thread now available again. This means after any codegen thread finishes we may be candidate to start translation of another unit of work I think, right?

No, but it's an excellent idea :D

d2cdb54 to

b6c9e69

Compare

|

Docs look great! So one overall meta-comment as well now. I'm having some difficulty articulating this but I'm slightly worried about a situation like:

I haven't convinced myself this is possible and I think that the various handling makes it ok? I've found the interaction between all these threads and the implicit token a little difficult to follow, but does this make sense to you? Can you think of a case where we accidentally starve the translation thread due to our heuristic? |

b6c9e69 to

dbaee99

Compare

So with the latest change, the main thread will be free again as soon as the first LLVM worker is done (thanks to your suggestion). It's still possible to run into a LLVM work shortage the way you describe though. I just adapted the strategy to estimate the cost of a LLVM WorkItem. Maybe the main thread should always start the cheapest one available, so it can get back to translating sooner, if needed? I don't think this is much of a problem though (with no data to back this claim up in any way |

|

Thinking about this a bit more, I think this is my mental model for what's happening: on each turn of the loop we'll have N slots of work to fill up depending on what's currently running and what amount of tokens we have. Given the choice of whether to translate a new unit or codegen an existing unit it seems fine to have a heuristic. Whenever something happens though it'll turn the loop and cause everything to start over. I think that's roughly what's implemented right now, but I think that it means that we should consider the translation thread idle as soon as we've acquired a new token? That way if the translation thread was blocked and we get a token it should get unblocked? (which I don't think happens today?) I may not be following the code quite right though...

Neat! |

Yes, that makes sense. It's a variation of considering the translation thread idle when a package is finished (another way of getting an additional token). |

dbaee99 to

29989ef

Compare

…free up the main thread for translation.

…rough a channel instead of upfront.

…compilation process.

|

Thanks, @kennytm. Compiling with LLVM 3.7 right now... |

|

Can't reproduce with LLVM 3.7 either |

3b2af87 to

b8d4413

Compare

|

I pushed another change that should give a sensible error message at a likely point of failure. Also adapted the scheduler heuristic slightly. Let's see. |

|

New error, excellent |

…t being available in memory.

|

@bors r=alexcrichton OK, passes travis now. |

|

📌 Commit 6468cad has been approved by |

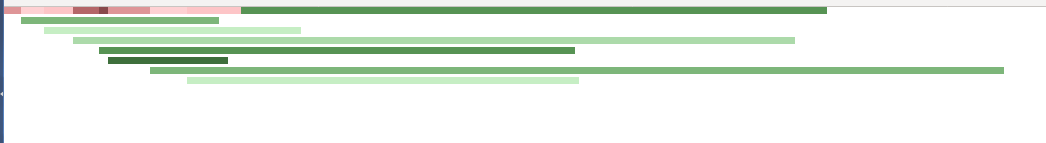

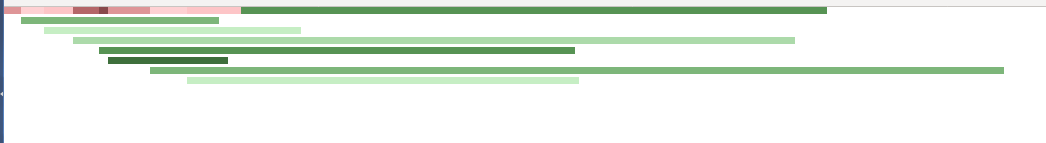

Run translation and LLVM in parallel when compiling with multiple CGUs This is still a work in progress but the bulk of the implementation is done, so I thought it would be good to get it in front of more eyes. This PR makes the compiler start running LLVM while translation is still in progress, effectively allowing for more parallelism towards the end of the compilation pipeline. It also allows the main thread to switch between either translation or running LLVM, which allows to reduce peak memory usage since not all LLVM module have to be kept in memory until linking. This is especially good for incr. comp. but it works just as well when running with `-Ccodegen-units=N`. In order to help tuning and debugging the work scheduler, the PR adds the `-Ztrans-time-graph` flag which spits out html files that show how work packages where scheduled:  (red is translation, green is llvm) One side effect here is that `-Ztime-passes` might show something not quite correct because trans and LLVM are not strictly separated anymore. I plan to have some special handling there that will try to produce useful output. One open question is how to determine whether the trans-thread should switch to intermediate LLVM processing. TODO: - [x] Restore `-Z time-passes` output for LLVM. - [x] Update documentation, esp. for work package scheduling. - [x] Tune the scheduling algorithm. cc @alexcrichton @rust-lang/compiler

|

☀️ Test successful - status-appveyor, status-travis |

…hton Don't unwrap work item results as the panic trace is useless Fixes #43402 now there's no multithreaded panic printouts Also update a comment -------- Likely regressed in #43506, where the code was changed to panic in worker threads on error. Unwrapping gives zero extra information since the stack trace is so short, so we may as well just surface that there was an error and exit the thread properly. Because there are then no multithreaded printouts, I think it should mean the output of the test for #26199 is deterministic and not interleaved (thanks to @philipc #43402 (comment) for a hint). Sadly the output is now: ``` thread '<unnamed>' panicked at 'aborting due to worker thread panic', src/librustc_trans/back/write.rs:1643:20 note: Run with `RUST_BACKTRACE=1` for a backtrace. error: could not write output to : No such file or directory error: aborting due to previous error ``` but it's an improvement over the multi-panic situation before. r? @alexcrichton

Changelog:

Version 1.21.0 (2017-10-12)

==========================

Language

--------

- [You can now use static references for literals.][43838]

Example:

```rust

fn main() {

let x: &'static u32 = &0;

}

```

- [Relaxed path syntax. Optional `::` before `<` is now allowed in all contexts.][43540]

Example:

```rust

my_macro!(Vec<i32>::new); // Always worked

my_macro!(Vec::<i32>::new); // Now works

```

Compiler

--------

- [Upgraded jemalloc to 4.5.0][43911]

- [Enabled unwinding panics on Redox][43917]

- [Now runs LLVM in parallel during translation phase.][43506]

This should reduce peak memory usage.

Libraries

---------

- [Generate builtin impls for `Clone` for all arrays and tuples that

are `T: Clone`][43690]

- [`Stdin`, `Stdout`, and `Stderr` now implement `AsRawFd`.][43459]

- [`Rc` and `Arc` now implement `From<&[T]> where T: Clone`, `From<str>`,

`From<String>`, `From<Box<T>> where T: ?Sized`, and `From<Vec<T>>`.][42565]

Stabilized APIs

---------------

[`std::mem::discriminant`]

Cargo

-----

- [You can now call `cargo install` with multiple package names][cargo/4216]

- [Cargo commands inside a virtual workspace will now implicitly

pass `--all`][cargo/4335]

- [Added a `[patch]` section to `Cargo.toml` to handle

prepublication dependencies][cargo/4123] [RFC 1969]

- [`include` & `exclude` fields in `Cargo.toml` now accept gitignore

like patterns][cargo/4270]

- [Added the `--all-targets` option][cargo/4400]

- [Using required dependencies as a feature is now deprecated and emits

a warning][cargo/4364]

Misc

----

- [Cargo docs are moving][43916]

to [doc.rust-lang.org/cargo](https://doc.rust-lang.org/cargo)

- [The rustdoc book is now available][43863]

at [doc.rust-lang.org/rustdoc](https://doc.rust-lang.org/rustdoc)

- [Added a preview of RLS has been made available through rustup][44204]

Install with `rustup component add rls-preview`

- [`std::os` documentation for Unix, Linux, and Windows now appears on doc.rust-lang.org][43348]

Previously only showed `std::os::unix`.

Compatibility Notes

-------------------

- [Changes in method matching against higher-ranked types][43880] This may cause

breakage in subtyping corner cases. [A more in-depth explanation is available.][info/43880]

- [rustc's JSON error output's byte position start at top of file.][42973]

Was previously relative to the rustc's internal `CodeMap` struct which

required the unstable library `libsyntax` to correctly use.

- [`unused_results` lint no longer ignores booleans][43728]

[42565]: rust-lang/rust#42565

[42973]: rust-lang/rust#42973

[43348]: rust-lang/rust#43348

[43459]: rust-lang/rust#43459

[43506]: rust-lang/rust#43506

[43540]: rust-lang/rust#43540

[43690]: rust-lang/rust#43690

[43728]: rust-lang/rust#43728

[43838]: rust-lang/rust#43838

[43863]: rust-lang/rust#43863

[43880]: rust-lang/rust#43880

[43911]: rust-lang/rust#43911

[43916]: rust-lang/rust#43916

[43917]: rust-lang/rust#43917

[44204]: rust-lang/rust#44204

[cargo/4123]: rust-lang/cargo#4123

[cargo/4216]: rust-lang/cargo#4216

[cargo/4270]: rust-lang/cargo#4270

[cargo/4335]: rust-lang/cargo#4335

[cargo/4364]: rust-lang/cargo#4364

[cargo/4400]: rust-lang/cargo#4400

[RFC 1969]: rust-lang/rfcs#1969

[info/43880]: rust-lang/rust#44224 (comment)

[`std::mem::discriminant`]: https://doc.rust-lang.org/std/mem/fn.discriminant.html

…ster fix faulty comment after rust-lang#43506 there is no fixed number of request sent.

…ster fix faulty comment after rust-lang#43506 there is no fixed number of request sent.

…ster fix faulty comment after rust-lang#43506 there is no fixed number of request sent.

…ster fix faulty comment after rust-lang#43506 there is no fixed number of request sent.

This is still a work in progress but the bulk of the implementation is done, so I thought it would be good to get it in front of more eyes.

This PR makes the compiler start running LLVM while translation is still in progress, effectively allowing for more parallelism towards the end of the compilation pipeline. It also allows the main thread to switch between either translation or running LLVM, which allows to reduce peak memory usage since not all LLVM module have to be kept in memory until linking. This is especially good for incr. comp. but it works just as well when running with

-Ccodegen-units=N.In order to help tuning and debugging the work scheduler, the PR adds the

-Ztrans-time-graphflag which spits out html files that show how work packages where scheduled:(red is translation, green is llvm)

One side effect here is that

-Ztime-passesmight show something not quite correct because trans and LLVM are not strictly separated anymore. I plan to have some special handling there that will try to produce useful output.One open question is how to determine whether the trans-thread should switch to intermediate LLVM processing.

TODO:

-Z time-passesoutput for LLVM.cc @alexcrichton @rust-lang/compiler