
drainer/: Reduce memory usage (#735) #737



LGTM


LGTM

DNM for now. This PR had better be merged after the release-2.1 version.

Did you run it in the case where there is lag? In that case the memory usage will not stay below 1G. You may also need to change the input buffer size in pkg/loader.

…czli/drainer/reduceMemoryUsage

…ip binlog by previous test case

…czli/drainer/reduceMemoryUsage

…czli/drainer/reduceMemoryUsage

…ichunzhu/tidb-binlog into czli/drainer/reduceMemoryUsage

@suzaku PTAL

DNM now, I will add some unit tests about

Please complete it today, @lichunzhu.

… when block at cond.Wait() or input is closed. Add one unit test to test whether txnManager can handle a txn whose size is bigger than maxCacheSize

/run-all-tests tidb=release-3.0 tikv=release-3.0 pd=release-3.0

@GregoryIan PTAL

pkg/loader/load_test.go

Outdated

    select {
    case input <- txn:
    case <-time.After(50 * time.Microsecond):
        c.Fatal("txnManager gets blocked while receiving txns")


If the 5th txn gets blocked sending to input, why didn't this test fail?


The 5th txn was picked from input but can't be added to txnManager.cacheChan. Although it gets blocked at cond.Wait(), it has still been picked out of input. By the way, we can't know a txn's size until it has been picked out of input.


The check c.Assert(output, check.HasLen, 4) can make sure it's not added.
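To make the behaviour described in this thread concrete, here is a minimal sketch of a size-bounded forwarder built on sync.Cond. It is not the PR's txnManager: the names item, boundedCache, newBoundedCache, and the 1024-slot output buffer are assumptions made for illustration. It shows that the 5th item has already left input by the time the goroutine blocks at cond.Wait(), so the send succeeds while the output still holds only 4 items.

```go
package main

import (
	"fmt"
	"sync"
)

// item is a hypothetical stand-in for a cached txn; only its size matters here.
type item struct {
	id   int
	size int64
}

// boundedCache is an illustrative sketch, not the PR's txnManager.
type boundedCache struct {
	mu      sync.Mutex
	cond    *sync.Cond
	cached  int64 // total size of items forwarded but not yet popped
	maxSize int64
	input   <-chan item
}

func newBoundedCache(input <-chan item, maxSize int64) *boundedCache {
	b := &boundedCache{input: input, maxSize: maxSize}
	b.cond = sync.NewCond(&b.mu)
	return b
}

// run forwards items from input to the returned channel, pausing while the
// outstanding size would exceed maxSize.
func (b *boundedCache) run() <-chan item {
	output := make(chan item, 1024)
	go func() {
		defer close(output)
		for it := range b.input {
			// The item has already left input here; its size is only known now.
			b.mu.Lock()
			// An oversized item is still admitted once nothing else is cached.
			for b.cached > 0 && b.cached+it.size > b.maxSize {
				b.cond.Wait()
			}
			b.cached += it.size
			b.mu.Unlock()
			output <- it
		}
	}()
	return output
}

// pop releases the size accounted for it and wakes the forwarding goroutine.
func (b *boundedCache) pop(it item) {
	b.mu.Lock()
	b.cached -= it.size
	b.mu.Unlock()
	b.cond.Signal()
}

func main() {
	input := make(chan item) // unbuffered: a send returns once the item is picked up
	b := newBoundedCache(input, 4)
	output := b.run()

	for i := 1; i <= 5; i++ {
		input <- item{id: i, size: 1} // the 5th send still returns
	}
	fmt.Println("items in output:", len(output)) // the 5th item is held back

	b.pop(<-output) // free one slot; the 5th item can now be forwarded
	for i := 0; i < 4; i++ {
		fmt.Println("got item", (<-output).id)
	}
	close(input)
}
```

The wait condition is checked in a loop under the mutex, which is the usual way to use sync.Cond; the `b.cached > 0` guard is one possible way to let a single item larger than the limit through instead of blocking forever.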

pkg/loader/load_test.go

Outdated

    case t := <-output:
        txnManager.pop(t)
        c.Assert(t, check.DeepEquals, txn)
    case <-time.After(50 * time.Microsecond):


No need to wait? Just use default, because if we can't get a txn at this point, something must be wrong.


default can be used here.

pkg/loader/load_test.go

Outdated

    output := txnManager.run()
    select {
    case input <- txnSmall:
    case <-time.After(50 * time.Microsecond):


Use default?


txnManager.run() starts a new goroutine that begins watching the input channel, which takes some time. Using default here may cause a spurious failure.
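The difference between the two select forms can be sketched as follows, assuming an unbuffered input channel. The names startConsumer, trySendNow, trySendWithin, and readyTimeout are hypothetical and not taken from the PR. A select with default succeeds only if a receiver is already parked on the channel, so right after the goroutine is launched its result depends on scheduling; a timeout gives the goroutine time to reach its receive.

```go
package main

import (
	"fmt"
	"time"
)

// readyTimeout is an arbitrary, generous bound chosen for this sketch.
const readyTimeout = time.Second

// startConsumer mimics the shape of a run() method: it launches a goroutine
// that receives from input and forwards to the returned channel.
func startConsumer(input <-chan int) <-chan int {
	output := make(chan int, 16)
	go func() {
		defer close(output)
		for v := range input {
			output <- v
		}
	}()
	return output
}

// trySendNow succeeds only if a receiver is already waiting on input.
func trySendNow(input chan<- int, v int) bool {
	select {
	case input <- v:
		return true
	default:
		return false
	}
}

// trySendWithin gives the receiving goroutine up to readyTimeout to become ready.
func trySendWithin(input chan<- int, v int) bool {
	select {
	case input <- v:
		return true
	case <-time.After(readyTimeout):
		return false
	}
}

func main() {
	input := make(chan int) // unbuffered
	output := startConsumer(input)

	// The result of the immediate send depends on whether the consumer
	// goroutine has already reached its receive.
	fmt.Println("immediate send ok:", trySendNow(input, 1))
	fmt.Println("send within timeout ok:", trySendWithin(input, 2))

	close(input)
	for v := range output {
		fmt.Println("received", v)
	}
}
```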

/run-unit-tests

pkg/loader/load_test.go

Outdated

    @@ -413,13 +413,13 @@ func (s *txnManagerSuite) TestRunTxnManager(c *check.C) {
         case t := <-output:
             txnManager.pop(t)
             c.Assert(t, check.DeepEquals, txn)
    -    case <-time.After(50 * time.Microsecond):
    +    default:
             c.Fatal("Fail to pick txn from txnManager")
         }


    select {
    case t := <-output:
    case <-time.After...
    }

This pattern appears a lot in this test. Is it possible to extract it as a helper function to simplify the test?
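One possible shape for such helpers is sketched below. The names txn, waitTimeout, recvWithin, and sendWithin are hypothetical, and the helpers return a bool so the sketch stays self-contained; in the real gocheck suite they would more likely take the *check.C and call c.Fatal on timeout.

```go
package main

import (
	"fmt"
	"time"
)

// txn is a hypothetical stand-in for the loader's transaction type.
type txn struct {
	ID int
}

// waitTimeout is an arbitrary bound chosen for this sketch.
const waitTimeout = 50 * time.Millisecond

// recvWithin waits up to waitTimeout for a value on ch.
// ok is false if nothing arrives in time or ch is closed.
func recvWithin(ch <-chan *txn) (t *txn, ok bool) {
	select {
	case t, ok = <-ch:
		return t, ok
	case <-time.After(waitTimeout):
		return nil, false
	}
}

// sendWithin tries to send t on ch, giving up after waitTimeout.
func sendWithin(ch chan<- *txn, t *txn) bool {
	select {
	case ch <- t:
		return true
	case <-time.After(waitTimeout):
		return false
	}
}

func main() {
	ch := make(chan *txn, 1)
	fmt.Println("sent:", sendWithin(ch, &txn{ID: 1}))
	if t, ok := recvWithin(ch); ok {
		fmt.Println("received txn", t.ID)
	}
	_, ok := recvWithin(ch) // channel is empty now, so this times out
	fmt.Println("second receive ok:", ok)
}
```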

@suzaku PTAL

/run-unit-tests

Rest LGTM

Co-Authored-By: satoru <satorulogic@gmail.com>

/run-unit-tests

/run-all-tests tidb=release-3.0 tikv=release-3.0 pd=release-3.0


LGTM

/run-integration-tests tidb=release-3.0 tikv=release-3.0 pd=release-3.0

What problem does this PR solve?

Cherry pick of #735.

TOOL-1480

The current drainer uses buffered channels to cache some instances, which may cause OOM when it encounters binlogs that consume too much memory.

What is changed and how it works?

Add txnManager in loader to manage cached txn memory usage. Without txnManager, the performance of loader will be affected.

To check the effectiveness, we ran two tests.

In the following:

v1 means 097db42, which is the newest master branch.

v2 means 1e51514, which is the current branch.

In v1, maxBinlogItemCount=512

In v2, maxBinlogItemCount=0

TEST 1: Small binlogs, to validate the efficiency of drainer

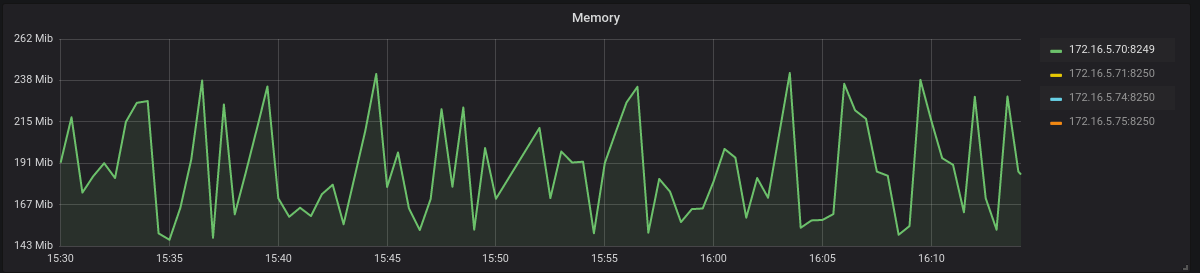

I put the tests of v1 and v2 in one picture: 15:30 ~ 15:50 is the test of v1, while 15:53 ~ 16:13 is the test of v2. As we can see, the memory usage and drainer events of both branches are similar.

drainer event:

memory:

execution time:

TEST 2: Upstream data with each binlog near 60M, to validate the memory usage of drainer

For v1, and for situations where we set the loader.input, loader.success, and dsyncer.success buffers to 1024, drainer will hit an OOM problem. The memory usage rises to more than 100G.

For v2, the memory usage is around 2G. The drainer event for DDL is around 2k and around 14k for DMLs.
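A back-of-the-envelope estimate helps explain why a count-based buffer is risky with large binlogs. The sketch below is not a measurement from this PR: worstCaseBufferedBytes is a hypothetical helper, and it assumes that every slot of a full channel pins a distinct item of about 60 MiB.

```go
package main

import "fmt"

// worstCaseBufferedBytes estimates the memory pinned by full buffered
// channels, assuming every slot references a distinct item of itemBytes.
func worstCaseBufferedBytes(channels, capacity int, itemBytes int64) int64 {
	return int64(channels) * int64(capacity) * itemBytes
}

func main() {
	const mib = int64(1) << 20
	// One channel with 1024 slots, each holding an item of roughly 60 MiB.
	perChannel := worstCaseBufferedBytes(1, 1024, 60*mib)
	fmt.Printf("one full channel: %d GiB\n", perChannel>>30)
}
```

Under those assumptions a single full channel already accounts for 60 GiB, which is why bounding the cache by total size rather than by item count keeps memory flat regardless of binlog size.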

drainer event:

memory:

execution time:

Check List

Tests

Code changes

Side effects

Related changes

tidb-ansible repository