
Commit 6e82f38 (0 parents): 15 changed files with 1,148 additions and 0 deletions.


`.gitignore` (new file, `@@ -0,0 +1,16 @@`):

```
.DS_Store
.remote-sync.json
.sync-config.cson

*__pycache__*
*.pyc
*.png
*.model
*.ipynb_checkpoints
*.ipynb
*.c
*.so
*.o
*.jpg

faster_rcnn_models
```


README (new file, `@@ -0,0 +1,50 @@`):

# Faster R-CNN

This is an experimental implementation of Faster R-CNN in Chainer, based on Ross Girshick's [py-faster-rcnn code](https://github.com/rbgirshick/py-faster-rcnn).

## Requirements

- Python 2.7.6+, 3.4.3+, 3.5.1+
- [Chainer](https://github.com/pfnet/chainer) 1.9.1+
- NumPy 1.9, 1.10, 1.11
- Cython 0.23+
- OpenCV 2.9+, 3.1+

## Inference

### 1. Download the pre-trained model

```
wget https://www.dropbox.com/s/2fadbs9q50igar8/VGG16_faster_rcnn_final.model?dl=0
mv VGG16_faster_rcnn_final.model?dl=0 VGG16_faster_rcnn_final.model
```

### 2. Build the Cython extensions

```
cd lib
python setup.py build_ext -i
cd ..
```

|

|

||

| ### 3. Use forward.py | ||

|

|

||

| ``` | ||

| wget http://vision.cs.utexas.edu/voc/VOC2007_test/JPEGImages/004545.jpg | ||

| python forward.py --img_fn 004545.jpg --gpu 0 | ||

| ``` | ||

|

|

||

| `--gpu 0` turns on GPU. When you turn off GPU, use `--gpu -1` or remove `--gpu` option. | ||

|

|

||

| To use forward.py with CPU, you have to apply this diff due to a known bug in Chainer: <https://github.com/pfnet/chainer/pull/1273> | ||

|

|

||

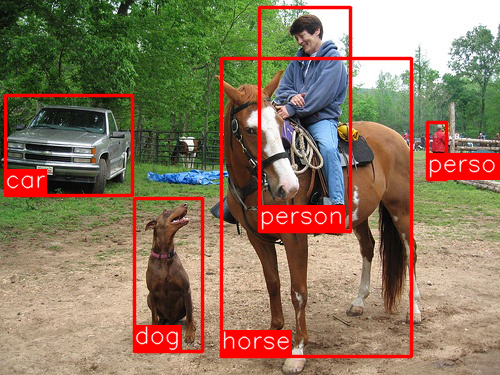

|  | ||

|

|

||

| ## Training | ||

|

|

||

| will be updated soon | ||

|

|

||

| ## Framework | ||

|

|

||

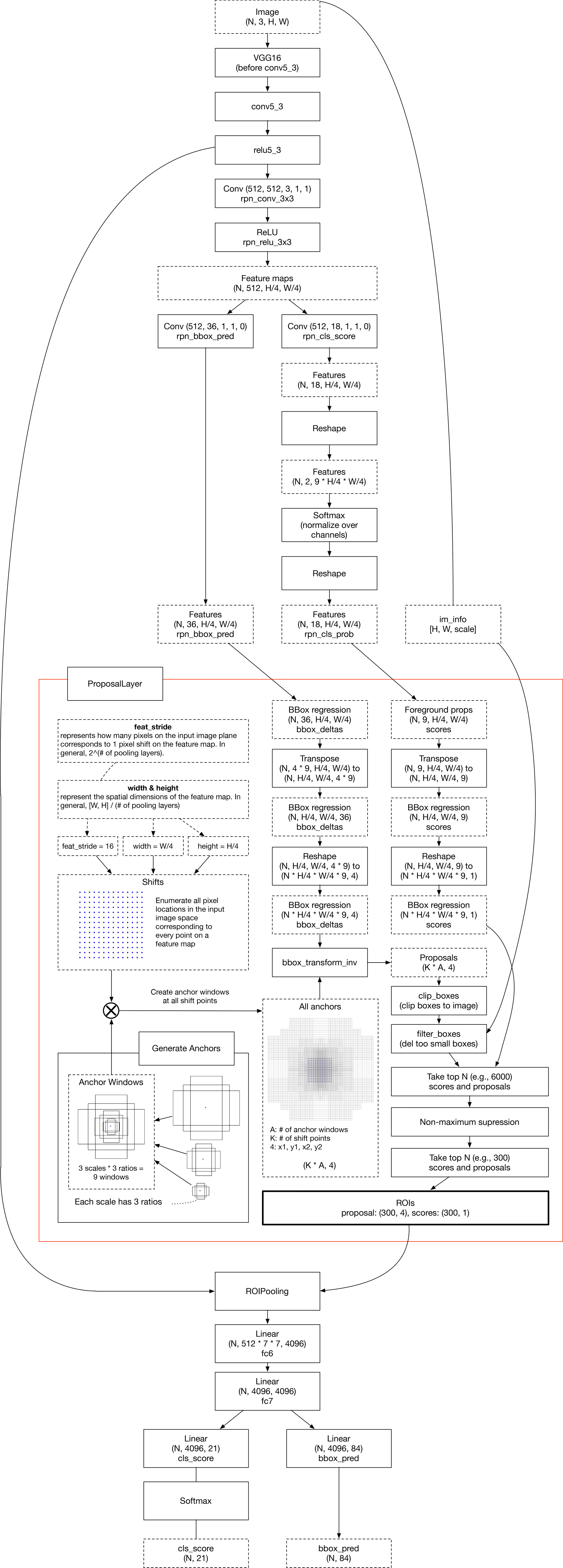

|  |


`forward.py` (new file, `@@ -0,0 +1,94 @@`):

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-

from chainer import serializers
from lib.cpu_nms import cpu_nms as nms
from lib.models.faster_rcnn import FasterRCNN

import argparse
import chainer
import cv2 as cv
import numpy as np

# PASCAL VOC class names; index 0 is the background class
CLASSES = ('__background__',
           'aeroplane', 'bicycle', 'bird', 'boat',
           'bottle', 'bus', 'car', 'cat', 'chair',
           'cow', 'diningtable', 'dog', 'horse',
           'motorbike', 'person', 'pottedplant',
           'sheep', 'sofa', 'train', 'tvmonitor')
# Per-channel means in BGR order (cv.imread returns BGR images)
PIXEL_MEANS = np.array([[[102.9801, 115.9465, 122.7717]]])


def get_model():
    model = FasterRCNN()
    model.train = False  # switch the model to inference mode
    serializers.load_npz('VGG16_faster_rcnn_final.model', model)

    return model


def img_preprocessing(orig_img, pixel_means, max_size=1000, scale=600):
    # Subtract the mean, then resize so the shorter side is `scale` pixels
    # without letting the longer side exceed `max_size`
    img = orig_img.astype(np.float32, copy=True)
    img -= pixel_means
    im_size_min = np.min(img.shape[0:2])
    im_size_max = np.max(img.shape[0:2])
    im_scale = float(scale) / float(im_size_min)
    if np.round(im_scale * im_size_max) > max_size:
        im_scale = float(max_size) / float(im_size_max)
    img = cv.resize(img, None, None, fx=im_scale, fy=im_scale,
                    interpolation=cv.INTER_LINEAR)

    # HWC -> CHW for Chainer
    return img.transpose([2, 0, 1]).astype(np.float32), im_scale


def draw_result(out, im_scale, clss, bbox, nms_thresh, conf):
    CV_AA = 16  # anti-aliased line type (same value as cv2.LINE_AA)
    for cls_id in range(1, len(CLASSES)):  # skip the background class
        _cls = clss[:, cls_id][:, np.newaxis]
        _bbx = bbox[:, cls_id * 4: (cls_id + 1) * 4]
        dets = np.hstack((_bbx, _cls))  # rows of [x1, y1, x2, y2, score]
        keep = nms(dets, nms_thresh)
        dets = dets[keep, :]

        # Draw only detections whose score is at least `conf`
        inds = np.where(dets[:, -1] >= conf)[0]
        for i in inds:
            x1, y1, x2, y2 = map(int, dets[i, :4])
            cv.rectangle(out, (x1, y1), (x2, y2), (0, 0, 255), 2, CV_AA)
            ret, baseline = cv.getTextSize(
                CLASSES[cls_id], cv.FONT_HERSHEY_SIMPLEX, 0.8, 1)
            cv.rectangle(out, (x1, y2 - ret[1] - baseline),
                         (x1 + ret[0], y2), (0, 0, 255), -1)
            cv.putText(out, CLASSES[cls_id], (x1, y2 - baseline),
                       cv.FONT_HERSHEY_SIMPLEX, 0.8, (255, 255, 255), 1, CV_AA)

    return out


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--img_fn', type=str, default='sample.jpg')
    parser.add_argument('--out_fn', type=str, default='result.jpg')
    parser.add_argument('--nms_thresh', type=float, default=0.3)
    parser.add_argument('--conf', type=float, default=0.8)
    parser.add_argument('--gpu', type=int, default=-1)
    args = parser.parse_args()

    # Use the GPU only if CUDA is available and a device id >= 0 was given
    use_gpu = chainer.cuda.available and args.gpu >= 0
    xp = chainer.cuda.cupy if use_gpu else np
    model = get_model()
    if use_gpu:
        model.to_gpu(args.gpu)

    orig_image = cv.imread(args.img_fn)
    if orig_image is None:
        raise IOError('Could not read image: {}'.format(args.img_fn))
    img, im_scale = img_preprocessing(orig_image, PIXEL_MEANS)
    img = np.expand_dims(img, axis=0)
    img = chainer.Variable(xp.asarray(img, dtype=np.float32), volatile=True)
    h, w = img.data.shape[2:]
    cls_score, bbox_pred = model(img, np.array([[h, w, im_scale]]))
    cls_score = cls_score.data

    # Move outputs back to host memory before NMS and drawing
    if use_gpu:
        cls_score = chainer.cuda.cupy.asnumpy(cls_score)
        bbox_pred = chainer.cuda.cupy.asnumpy(bbox_pred)
    result = draw_result(orig_image, im_scale, cls_score, bbox_pred,
                         args.nms_thresh, args.conf)
    cv.imwrite(args.out_fn, result)
```
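`img_preprocessing` resizes the image so that its shorter side becomes `scale` (600) pixels, unless that would push the longer side past `max_size` (1000), in which case the longer side is capped instead. The scale arithmetic can be checked on its own without OpenCV; `compute_im_scale` is a hypothetical helper that mirrors those lines, not part of the repository.

```python
def compute_im_scale(height, width, scale=600, max_size=1000):
    """Resize factor chosen by img_preprocessing (hypothetical helper).

    The shorter side is scaled to `scale` pixels; if the rounded longer
    side would then exceed `max_size`, the longer side is capped instead.
    """
    size_min = min(height, width)
    size_max = max(height, width)
    im_scale = float(scale) / float(size_min)
    if round(im_scale * size_max) > max_size:
        im_scale = float(max_size) / float(size_max)
    return im_scale


# A 375x500 image: 600 / 375 = 1.6, and 1.6 * 500 = 800 <= 1000
print(compute_im_scale(375, 500))
# A wide 300x1200 image: 600 / 300 = 2.0 would give 2400 > 1000,
# so the longer side is capped at 1000 instead
print(compute_im_scale(300, 1200))
```

Capping the longer side keeps memory bounded for panoramic inputs, at the cost of a shorter side smaller than 600 pixels.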

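`draw_result` removes duplicate detections per class with `cpu_nms`, a compiled Cython extension under `lib`. Assuming it implements the usual greedy non-maximum suppression from py-faster-rcnn (keep the highest-scoring box, drop the remaining boxes whose IoU with it exceeds `nms_thresh`, repeat), a pure-NumPy equivalent can be sketched as follows. `py_nms` is a hypothetical name, and widths use the `+ 1` inclusive-pixel convention.

```python
import numpy as np


def py_nms(dets, thresh):
    """Greedy non-maximum suppression (hypothetical pure-NumPy helper).

    dets: (N, 5) array of [x1, y1, x2, y2, score].
    Returns indices of kept boxes, highest score first.
    """
    x1, y1, x2, y2 = dets[:, 0], dets[:, 1], dets[:, 2], dets[:, 3]
    scores = dets[:, 4]
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)
    order = scores.argsort()[::-1]  # indices by descending score

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        # Intersection of the current best box with all remaining boxes
        xx1 = np.maximum(x1[i], x1[order[1:]])
        yy1 = np.maximum(y1[i], y1[order[1:]])
        xx2 = np.minimum(x2[i], x2[order[1:]])
        yy2 = np.minimum(y2[i], y2[order[1:]])
        w = np.maximum(0.0, xx2 - xx1 + 1)
        h = np.maximum(0.0, yy2 - yy1 + 1)
        inter = w * h
        iou = inter / (areas[i] + areas[order[1:]] - inter)
        # Keep only boxes that overlap the current one by at most `thresh`
        order = order[1:][iou <= thresh]
    return keep


dets = np.array([
    [10, 10, 50, 50, 0.9],
    [12, 12, 52, 52, 0.8],     # heavily overlaps the first box
    [100, 100, 140, 140, 0.7],
], dtype=np.float32)
print(py_nms(dets, 0.3))  # -> [0, 2]
```

With `nms_thresh=0.3` (the `forward.py` default), the second box, whose IoU with the first is about 0.83, is suppressed, while the disjoint third box survives.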
Empty file.

Empty file.


New file defining `AnchorTarget` (`@@ -0,0 +1,217 @@`):

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-

# Modified by:
# Copyright (c) 2016 Shunta Saito

# Original work by:
# --------------------------------------------------------
# Faster R-CNN
# Copyright (c) 2015 Microsoft
# Licensed under The MIT License [see LICENSE for details]
# Written by Ross Girshick and Sean Bell
# https://github.com/rbgirshick/py-faster-rcnn
# --------------------------------------------------------

import chainer
import numpy as np
from generate_anchors import generate_anchors
from utils.cython_bbox import bbox_overlaps
from fast_rcnn.bbox_transform import bbox_transform


class AnchorTarget(object):
    """Assign RPN classification labels and regression targets to anchors.

    Args:
        feat_stride (int): Distance in input-image pixels between adjacent
            cells of the feature map on which anchors are placed.
    """

    RPN_NEGATIVE_OVERLAP = 0.3
    RPN_POSITIVE_OVERLAP = 0.7
    RPN_CLOBBER_POSITIVES = False
    RPN_FG_FRACTION = 0.5
    RPN_BATCHSIZE = 256
    RPN_BBOX_INSIDE_WEIGHTS = (1.0, 1.0, 1.0, 1.0)
    RPN_POSITIVE_WEIGHT = -1.0

    def __init__(self, feat_stride=16):
        self.feat_stride = feat_stride
        self.anchors = generate_anchors()
        self.n_anchors = self.anchors.shape[0]
        self.allowed_border = 0

    def __call__(self, x, gt_boxes, im_info):
        # x: feature map (1, C, height, width); im_info: (image height, width)
        height, width = x.data.shape[2:]

        # Offsets of every feature-map cell in input-image coordinates
        shift_x = np.arange(0, width) * self.feat_stride
        shift_y = np.arange(0, height) * self.feat_stride
        shift_x, shift_y = np.meshgrid(shift_x, shift_y)
        shifts = np.vstack((shift_x.ravel(), shift_y.ravel(),
                            shift_x.ravel(), shift_y.ravel())).transpose()

        # Add A base anchors to K shifts -> (K * A, 4) anchors
        A = self.n_anchors
        K = shifts.shape[0]
        all_anchors = (self.anchors.reshape((1, A, 4)) +
                       shifts.reshape((1, K, 4)).transpose((1, 0, 2)))
        all_anchors = all_anchors.reshape((K * A, 4))
        total_anchors = int(K * A)

        # only keep anchors inside the image
        inds_inside = np.where(
            (all_anchors[:, 0] >= -self.allowed_border) &
            (all_anchors[:, 1] >= -self.allowed_border) &
            (all_anchors[:, 2] < im_info[1] + self.allowed_border) &  # width
            (all_anchors[:, 3] < im_info[0] + self.allowed_border)    # height
        )[0]

        # keep only inside anchors
        anchors = all_anchors[inds_inside, :]

        # label: 1 is positive, 0 is negative, -1 is don't care
        labels = np.empty((len(inds_inside), ), dtype=np.float32)
        labels.fill(-1)

        # overlaps between the anchors and the gt boxes
        # overlaps (ex, gt)
        overlaps = bbox_overlaps(
            np.ascontiguousarray(anchors, dtype=np.float64),
            np.ascontiguousarray(gt_boxes, dtype=np.float64))
        argmax_overlaps = overlaps.argmax(axis=1)
        max_overlaps = overlaps[np.arange(len(inds_inside)), argmax_overlaps]
        gt_argmax_overlaps = overlaps.argmax(axis=0)
        gt_max_overlaps = overlaps[gt_argmax_overlaps,
                                   np.arange(overlaps.shape[1])]
        gt_argmax_overlaps = np.where(overlaps == gt_max_overlaps)[0]

        if not self.RPN_CLOBBER_POSITIVES:
            # assign bg labels first so that positive labels can clobber them
            labels[max_overlaps < self.RPN_NEGATIVE_OVERLAP] = 0

        # fg label: for each gt, anchor with highest overlap
        labels[gt_argmax_overlaps] = 1

        # fg label: above threshold IOU
        labels[max_overlaps >= self.RPN_POSITIVE_OVERLAP] = 1

        if self.RPN_CLOBBER_POSITIVES:
            # assign bg labels last so that negative labels can clobber
            # positives
            labels[max_overlaps < self.RPN_NEGATIVE_OVERLAP] = 0

        # subsample positive labels if we have too many
        num_fg = int(self.RPN_FG_FRACTION * self.RPN_BATCHSIZE)
        fg_inds = np.where(labels == 1)[0]
        if len(fg_inds) > num_fg:
            disable_inds = np.random.choice(
                fg_inds, size=(len(fg_inds) - num_fg), replace=False)
            labels[disable_inds] = -1

        # subsample negative labels if we have too many
        num_bg = self.RPN_BATCHSIZE - np.sum(labels == 1)
        bg_inds = np.where(labels == 0)[0]
        if len(bg_inds) > num_bg:
            disable_inds = np.random.choice(
                bg_inds, size=(len(bg_inds) - num_bg), replace=False)
            labels[disable_inds] = -1
            # print "was %s inds, disabling %s, now %s inds" % (
            #     len(bg_inds), len(disable_inds), np.sum(labels == 0))

        bbox_targets = np.zeros((len(inds_inside), 4), dtype=np.float32)
        bbox_targets = _compute_targets(anchors, gt_boxes[argmax_overlaps, :])

        bbox_inside_weights = np.zeros((len(inds_inside), 4), dtype=np.float32)
        bbox_inside_weights[labels == 1, :] = np.array(
            self.RPN_BBOX_INSIDE_WEIGHTS)

        bbox_outside_weights = np.zeros(
            (len(inds_inside), 4), dtype=np.float32)
        if self.RPN_POSITIVE_WEIGHT < 0:
            # uniform weighting of examples (given non-uniform sampling)
            num_examples = np.sum(labels >= 0)
            positive_weights = np.ones((1, 4)) * 1.0 / num_examples
            negative_weights = np.ones((1, 4)) * 1.0 / num_examples
        else:
            assert ((self.RPN_POSITIVE_WEIGHT > 0) &
                    (self.RPN_POSITIVE_WEIGHT < 1))
            positive_weights = (self.RPN_POSITIVE_WEIGHT /
                                np.sum(labels == 1))
            negative_weights = ((1.0 - self.RPN_POSITIVE_WEIGHT) /
                                np.sum(labels == 0))
        bbox_outside_weights[labels == 1, :] = positive_weights
        bbox_outside_weights[labels == 0, :] = negative_weights

        # map up to original set of anchors
        labels = _unmap(labels, total_anchors, inds_inside, fill=-1)
        bbox_targets = _unmap(bbox_targets, total_anchors, inds_inside, fill=0)
        bbox_inside_weights = _unmap(
            bbox_inside_weights, total_anchors, inds_inside, fill=0)
        bbox_outside_weights = _unmap(
            bbox_outside_weights, total_anchors, inds_inside, fill=0)

        # labels
        labels = labels.reshape((1, height, width, A)).transpose(0, 3, 1, 2)

        # bbox_targets
        bbox_targets = bbox_targets \
            .reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)

        # bbox_inside_weights
        bbox_inside_weights = bbox_inside_weights \
            .reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)
        assert bbox_inside_weights.shape[2] == height
        assert bbox_inside_weights.shape[3] == width

        # bbox_outside_weights
        bbox_outside_weights = bbox_outside_weights \
            .reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)
        assert bbox_outside_weights.shape[2] == height
        assert bbox_outside_weights.shape[3] == width

        return labels, bbox_targets, bbox_inside_weights, bbox_outside_weights


def _unmap(data, count, inds, fill=0):
    """Unmap a subset of items (data) back to the original set of items
    (of size count)."""
    if len(data.shape) == 1:
        ret = np.empty((count, ), dtype=np.float32)
        ret.fill(fill)
        ret[inds] = data
    else:
        ret = np.empty((count, ) + data.shape[1:], dtype=np.float32)
        ret.fill(fill)
        ret[inds, :] = data
    return ret


def _compute_targets(ex_rois, gt_rois):
    """Compute bounding-box regression targets for an image."""

    assert ex_rois.shape[0] == gt_rois.shape[0]
    assert ex_rois.shape[1] == 4
    assert gt_rois.shape[1] == 5

    return bbox_transform(ex_rois, gt_rois[:, :4]).astype(
        np.float32, copy=False)


if __name__ == '__main__':
    height, width = 480, 640
    x = chainer.Variable(np.ones((1, 3, height, width), dtype=np.float32))
    gt_boxes = np.array([
        [100, 100, 150, 150, 0],
        [250, 250, 300, 350, 1]
    ], dtype=np.int32)
    im_info = np.array([height, width], dtype=np.int32)

    anchor_target = AnchorTarget()
    labels, bbox_targets, bbox_inside_weights, bbox_outside_weights = \
        anchor_target(x, gt_boxes, im_info)

    print(labels.shape)
    print(bbox_targets.shape)
    print(bbox_inside_weights.shape)
    print(bbox_outside_weights.shape)

    from skimage import io
    io.imsave('a.png', (labels[0, 0] == 0).astype(np.uint8) * 255)
```
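`AnchorTarget.__call__` first tiles the `A` base anchors over all `K = height * width` feature-map cells, `feat_stride` pixels apart, and then keeps only anchors lying entirely inside the image. The broadcasting step can be sketched in isolation; the two base anchors below are made up for illustration (the class takes its anchors from `generate_anchors()`), and `shifted_anchors` and `inside_indices` are hypothetical helper names.

```python
import numpy as np


def shifted_anchors(base_anchors, feat_h, feat_w, feat_stride=16):
    """Tile base anchors over a feat_h x feat_w grid (hypothetical helper)."""
    shift_x = np.arange(0, feat_w) * feat_stride
    shift_y = np.arange(0, feat_h) * feat_stride
    shift_x, shift_y = np.meshgrid(shift_x, shift_y)
    # One (dx, dy, dx, dy) row per feature-map cell, in row-major order
    shifts = np.vstack((shift_x.ravel(), shift_y.ravel(),
                        shift_x.ravel(), shift_y.ravel())).transpose()
    A = base_anchors.shape[0]
    K = shifts.shape[0]
    # (1, A, 4) + (K, 1, 4) broadcasts to (K, A, 4)
    all_anchors = (base_anchors.reshape((1, A, 4)) +
                   shifts.reshape((1, K, 4)).transpose((1, 0, 2)))
    return all_anchors.reshape((K * A, 4))


def inside_indices(anchors, im_h, im_w, allowed_border=0):
    """Indices of anchors lying inside an im_h x im_w image."""
    return np.where(
        (anchors[:, 0] >= -allowed_border) &
        (anchors[:, 1] >= -allowed_border) &
        (anchors[:, 2] < im_w + allowed_border) &
        (anchors[:, 3] < im_h + allowed_border))[0]


# Two illustrative base anchors, both centred on the first 16x16 cell
base = np.array([[0, 0, 15, 15],
                 [-8, -8, 23, 23]], dtype=np.float64)
anchors = shifted_anchors(base, feat_h=2, feat_w=3)
print(anchors.shape)
print(inside_indices(anchors, im_h=32, im_w=48))
```

Row `k * A + a` is base anchor `a` shifted to cell `k`, which is why the class can later reshape per-anchor arrays to `(1, height, width, A)`.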

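Labels are derived from the anchor/ground-truth IoU matrix, which the class computes with the Cython `bbox_overlaps`. The sketch below pairs a NumPy IoU (hypothetical `iou_matrix`, assuming the same `+ 1` inclusive-pixel convention as py-faster-rcnn) with the default labelling order (`RPN_CLOBBER_POSITIVES = False`): background below 0.3 first, then every anchor that ties for a ground truth's best IoU, then anything at or above 0.7 as foreground. `assign_labels` is also hypothetical, and the fg/bg subsampling to `RPN_BATCHSIZE` is left out.

```python
import numpy as np


def iou_matrix(boxes, gt):
    """(N, K) IoU between boxes (N, 4) and gt (K, 4); hypothetical helper."""
    ix1 = np.maximum(boxes[:, None, 0], gt[None, :, 0])
    iy1 = np.maximum(boxes[:, None, 1], gt[None, :, 1])
    ix2 = np.minimum(boxes[:, None, 2], gt[None, :, 2])
    iy2 = np.minimum(boxes[:, None, 3], gt[None, :, 3])
    iw = np.maximum(ix2 - ix1 + 1, 0)
    ih = np.maximum(iy2 - iy1 + 1, 0)
    inter = iw * ih
    area_b = (boxes[:, 2] - boxes[:, 0] + 1) * (boxes[:, 3] - boxes[:, 1] + 1)
    area_g = (gt[:, 2] - gt[:, 0] + 1) * (gt[:, 3] - gt[:, 1] + 1)
    return inter / (area_b[:, None] + area_g[None, :] - inter)


def assign_labels(anchors, gt_boxes, neg_thresh=0.3, pos_thresh=0.7):
    """1 = foreground, 0 = background, -1 = ignored (no subsampling)."""
    overlaps = iou_matrix(anchors, gt_boxes)
    max_overlaps = overlaps.max(axis=1)
    gt_max_overlaps = overlaps.max(axis=0)
    # Every anchor that ties for the best IoU with some gt box
    gt_argmax = np.where(overlaps == gt_max_overlaps)[0]

    labels = np.full(len(anchors), -1, dtype=np.float32)
    labels[max_overlaps < neg_thresh] = 0   # background first,
    labels[gt_argmax] = 1                   # then best anchor(s) per gt,
    labels[max_overlaps >= pos_thresh] = 1  # then high-IoU anchors
    return labels


anchors = np.array([[0, 0, 15, 15],
                    [4, 4, 19, 19],
                    [40, 40, 55, 55]], dtype=np.float64)
gt = np.array([[2, 2, 17, 17]], dtype=np.float64)
print(assign_labels(anchors, gt))
```

The first two anchors reach an IoU of only about 0.62 with the ground truth, below `RPN_POSITIVE_OVERLAP`, yet both become foreground because they tie for that ground truth's best overlap; the distant third anchor becomes background.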
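`_compute_targets` hands the anchors and their matched ground-truth boxes to `bbox_transform`, whose source is not part of this excerpt. Assuming it uses py-faster-rcnn's parameterization (centre offsets divided by the anchor's width or height, plus log ratios of widths and heights), the encoding can be sketched as below. `encode_targets`, `decode_targets` and `_centres` are hypothetical names; `decode_targets` is written here as an exact inverse for checking and is not the repository's own decoding function.

```python
import numpy as np


def _centres(boxes):
    """Widths, heights and centres using the +1 inclusive-pixel convention."""
    w = boxes[:, 2] - boxes[:, 0] + 1.0
    h = boxes[:, 3] - boxes[:, 1] + 1.0
    return w, h, boxes[:, 0] + 0.5 * w, boxes[:, 1] + 0.5 * h


def encode_targets(ex_rois, gt_rois):
    """(dx, dy, dw, dh) that move each anchor onto its ground-truth box."""
    ex_w, ex_h, ex_cx, ex_cy = _centres(ex_rois)
    gt_w, gt_h, gt_cx, gt_cy = _centres(gt_rois)
    return np.vstack(((gt_cx - ex_cx) / ex_w,
                      (gt_cy - ex_cy) / ex_h,
                      np.log(gt_w / ex_w),
                      np.log(gt_h / ex_h))).transpose()


def decode_targets(ex_rois, deltas):
    """Exact inverse of encode_targets (hypothetical, for checking)."""
    w, h, cx, cy = _centres(ex_rois)
    pcx = deltas[:, 0] * w + cx
    pcy = deltas[:, 1] * h + cy
    pw = np.exp(deltas[:, 2]) * w
    ph = np.exp(deltas[:, 3]) * h
    return np.vstack((pcx - 0.5 * pw, pcy - 0.5 * ph,
                      pcx + 0.5 * pw - 1.0, pcy + 0.5 * ph - 1.0)).transpose()


anchor = np.array([[0, 0, 15, 15]], dtype=np.float64)
gt = np.array([[8, 0, 23, 31]], dtype=np.float64)
targets = encode_targets(anchor, gt)
print(targets)                          # dx = dy = 0.5, dw = 0, dh = log 2
print(decode_targets(anchor, targets))  # recovers the gt box
```

Normalizing offsets by the anchor size and using log ratios keeps the regression targets roughly scale-invariant, so small and large anchors produce targets of similar magnitude.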