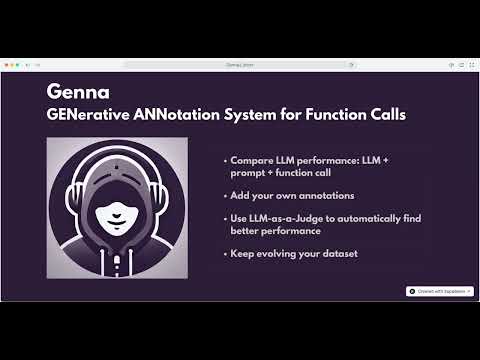

Genna (GENerative ANNotation) is an LLM-centric text annotation system designed for efficient text annotation and for comparing AI model outputs. Genna makes it easy to try out different LLMs, prompts, and temperature settings, and to test how well structured calls scale.

- 🤖 AI Model Integration: Configure multiple AI models as annotators, with support for OpenAI, Anthropic, and Ollama

- 📊 Custom Dataset Management: Evolve your dataset by adding and removing files while keeping track of metrics

- 📋 Human Annotation Interface:

  - Interactive annotation with thumbs up/down feedback

  - Real-time disagreement detection between AI models

  - Expandable annotation view for each row

  - Dark mode support for comfortable viewing

- 🔍 Smart Filtering:

  - Filter by content across any column

  - Show only rows with AI model disagreements

  - Category-based multi-filtering

- 📈 Annotation Analysis:

  - Visual indicators for model agreements/disagreements

  - Summary view showing differences between model outputs

  - Quick toggle to show/hide all annotations

- Create an environment and install the requirements:

conda create --name genna python

conda activate genna

pip install -r requirements.txt

- Start the application:

python app.py

- Create a new project

- Upload your CSV file

- Configure column settings:

  - Show: Columns to display

  - Label: Columns to annotate

  - Content: Columns containing text to annotate

  - Filter: Category columns for filtering
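The four column roles above can be pictured as a small mapping from role to column names. The following is a minimal sketch only: the `COLUMN_ROLES` dict layout, the helper names, and the sample CSV are all hypothetical illustrations, not Genna's actual settings format.

```python
import csv
import io

# Hypothetical role-to-columns mapping; the role names mirror the settings
# above, but this layout is an assumption, not Genna's real settings schema.
COLUMN_ROLES = {
    "show": ["id", "text", "topic"],
    "content": ["text"],
    "label": ["sentiment"],
    "filter": ["topic"],
}


def validate_roles(header, roles):
    """Return the configured columns that are missing from the CSV header."""
    known = set(header)
    return sorted({col for cols in roles.values() for col in cols} - known)


def content_of(row, roles):
    """Join the content columns of one row into the text to annotate."""
    return "\n".join(row[col] for col in roles["content"])


# Made-up sample data standing in for an uploaded CSV file.
sample_csv = (
    "id,text,topic,sentiment\n"
    "1,Great battery life,hardware,\n"
    "2,App crashes on login,software,\n"
)
reader = csv.DictReader(io.StringIO(sample_csv))
missing = validate_roles(reader.fieldnames, COLUMN_ROLES)
rows = list(reader)
first_text = content_of(rows[0], COLUMN_ROLES)
```

Checking the roles against the CSV header before annotating catches a misspelled column name early, before any model calls are made.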

- Go to project settings

- Click "Add New Model"

- Configure the annotator:

  - Give it a unique name

  - Select the AI model

  - Set temperature

  - Write base prompt

  - Configure label prompts

- Save the annotator
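To make the annotator settings above concrete, here is a rough sketch of how a name, model, temperature, base prompt, and label prompts might fit together. The `AnnotatorConfig` class, its field names, and the prompt layout are assumptions for illustration; Genna's internal representation may differ.

```python
from dataclasses import dataclass, field


@dataclass
class AnnotatorConfig:
    """Hypothetical container for the annotator settings listed above."""

    name: str
    model: str
    temperature: float
    base_prompt: str
    # Maps a label column to the instruction for that label (assumed layout).
    label_prompts: dict = field(default_factory=dict)

    def __post_init__(self):
        if not self.name.strip():
            raise ValueError("annotator name must not be empty")
        if self.temperature < 0:
            raise ValueError("temperature must be non-negative")

    def build_prompt(self, content: str) -> str:
        """Combine the base prompt, per-label instructions, and the text."""
        label_lines = [
            f"- {label}: {instruction}"
            for label, instruction in self.label_prompts.items()
        ]
        return "\n".join(
            [self.base_prompt, "Labels:", *label_lines, "Text:", content]
        )


annotator = AnnotatorConfig(
    name="sentiment-low-temp",
    model="example-model",  # placeholder, not a real model identifier
    temperature=0.0,
    base_prompt="You are annotating customer reviews.",
    label_prompts={"sentiment": "Answer positive, negative, or neutral."},
)
prompt = annotator.build_prompt("Great battery life")
```

Keeping the base prompt separate from the per-label instructions makes it straightforward to create two annotators that differ only in temperature or in a single label prompt, which is the kind of comparison Genna is built for.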

- Select a file to annotate

- Use the expand/collapse buttons to view annotations

- Review AI model outputs

- Provide feedback using thumbs up/down

- Use filters to focus on specific content:

  - Text search in any column

  - Show only rows with model disagreements

  - Category-based filtering

- Toggle dark mode for comfortable viewing

- Use bulk actions to show/hide all annotations
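The disagreement filter and text search described above could work along the following lines. This is a sketch under assumptions: the row layout (`data` plus `annotations`) and both function names are hypothetical, and exact equality is used as the disagreement test, which may be stricter or looser than what Genna does.

```python
def has_disagreement(annotations):
    """True if the annotators' outputs for one row are not all identical.

    `annotations` maps an annotator name to that annotator's label values.
    """
    outputs = list(annotations.values())
    return any(output != outputs[0] for output in outputs[1:])


def matches_text(data, query):
    """Case-insensitive substring search across every column of a row."""
    needle = query.lower()
    return any(needle in str(value).lower() for value in data.values())


# Made-up rows: source columns under "data", model outputs under "annotations".
rows = [
    {
        "data": {"text": "Great battery life", "topic": "hardware"},
        "annotations": {
            "model_a": {"sentiment": "positive"},
            "model_b": {"sentiment": "positive"},
        },
    },
    {
        "data": {"text": "It works, I guess", "topic": "hardware"},
        "annotations": {
            "model_a": {"sentiment": "neutral"},
            "model_b": {"sentiment": "negative"},
        },
    },
]

disputed = [row for row in rows if has_disagreement(row["annotations"])]
battery_rows = [row for row in rows if matches_text(row["data"], "BATTERY")]
```

Combining both predicates narrows a large file down to the rows where human feedback is most useful: those that mention a topic of interest and on which the models disagree.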

- Create a judge model the same way you create an annotator

- When running the judge:

  - Click "Annotate" on the judge

  - Select two annotators to compare

  - The judge evaluates their annotations

  - Results appear in the scores section

- Files are automatically organized by project

- Annotations are stored separately from source files

- Easy deletion of files when needed

- Category-based filtering for better organization

- Create and manage multiple annotation projects

- Upload CSV files to projects

- Configure which columns to show, label, use as content, or filter

- Set project objectives and annotation guidelines

- Configure AI models (like GPT-3, GPT-4) as annotators or judges

- Customize model parameters:

  - Temperature for controlling randomness

  - Custom prompts for different annotation tasks

  - Label types (text, boolean, numeric)
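Because labels can be text, boolean, or numeric, it can help to check a model's structured output against the declared types before storing it. The sketch below illustrates one way to do that; the `LABEL_TYPES` mapping and both helper functions are assumptions, not part of Genna's documented API.

```python
# Label types named above; the mapping to Python types is an assumption.
LABEL_TYPES = {"text": str, "boolean": bool, "numeric": (int, float)}


def check_label(value, label_type):
    """Return True if `value` is acceptable for the declared label type."""
    expected = LABEL_TYPES[label_type]
    # bool is a subclass of int in Python, so reject it for numeric labels.
    if label_type == "numeric" and isinstance(value, bool):
        return False
    return isinstance(value, expected)


def invalid_labels(labels, schema):
    """List the label names that are missing or have the wrong type."""
    return [
        name
        for name, label_type in schema.items()
        if name not in labels or not check_label(labels[name], label_type)
    ]


schema = {"summary": "text", "is_spam": "boolean", "score": "numeric"}
good = {"summary": "short note", "is_spam": False, "score": 5}
bad = {"summary": "short note", "is_spam": "no", "score": True}

good_errors = invalid_labels(good, schema)
bad_errors = invalid_labels(bad, schema)
```

Here `bad` fails on two labels: `is_spam` is a string rather than a boolean, and `score` is a boolean rather than a number.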

- Annotators

  - AI models configured to annotate text

  - Each annotator has a unique name and configuration

  - Can handle multiple label types per annotation

  - Annotations are stored separately from source data

- Real-time visual feedback for model agreements/disagreements

- Interactive thumbs up/down interface for manual annotation

- Annotation Interface

  - Expandable rows showing all model annotations

  - Summary row indicating differences between model outputs

  - Dark mode support for reduced eye strain

  - Quick filters to show only rows with disagreements

  - Bulk show/hide annotations across all rows

- Judges

  - Special AI models that evaluate annotations

  - Compare annotations from two different annotators

  - Selected dynamically at annotation time

  - Help evaluate annotation quality and consistency

- Filter data using category columns

- Multi-select filtering capabilities

- Organize and manage annotations by categories

- Quick text search within any column
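Multi-select category filtering can be sketched as follows. The semantics here are an assumption chosen for illustration: values selected within one column are alternatives (OR), while different columns must all match (AND). The function name and sample rows are hypothetical.

```python
def filter_by_categories(rows, selections):
    """Keep rows that match the selected values of every filter column.

    `selections` maps a filter column to the set of accepted values;
    an empty set means "no restriction" for that column.
    """

    def keep(row):
        return all(
            not allowed or row.get(column) in allowed
            for column, allowed in selections.items()
        )

    return [row for row in rows if keep(row)]


# Made-up rows with two category columns.
rows = [
    {"id": "1", "topic": "hardware", "region": "EU"},
    {"id": "2", "topic": "software", "region": "US"},
    {"id": "3", "topic": "billing", "region": "EU"},
]

selected = filter_by_categories(
    rows, {"topic": {"hardware", "billing"}, "region": {"EU"}}
)
selected_ids = [row["id"] for row in selected]
```

Treating an empty selection as "no restriction" means clearing a filter in the interface brings back all rows for that column instead of hiding everything.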

project_name/
├── data/
│   ├── source_files/
│   │   └── uploaded_csv_files
│   └── annotations/
│       └── annotator_results
├── settings/
│   └── project_settings.json
└── models/
    └── model_configurations
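For orientation, the layout above can be reproduced with a few lines of `pathlib`. This is only a sketch of the directory skeleton: `create_project_layout` is a hypothetical helper, and the contents written to `project_settings.json` are a placeholder because the real settings schema is not documented here.

```python
import json
import tempfile
from pathlib import Path


def create_project_layout(root, project_name):
    """Create the directory skeleton shown above and return the project path."""
    project = Path(root) / project_name
    for sub in ("data/source_files", "data/annotations", "settings", "models"):
        (project / sub).mkdir(parents=True, exist_ok=True)
    settings_file = project / "settings" / "project_settings.json"
    if not settings_file.exists():
        # Placeholder contents; the real settings schema is an unknown here.
        settings_file.write_text(json.dumps({"name": project_name}, indent=2))
    return project


# Build the layout in a throwaway directory and list what was created.
with tempfile.TemporaryDirectory() as tmp:
    project = create_project_layout(tmp, "demo_project")
    created = sorted(
        path.relative_to(project).as_posix() for path in project.rglob("*")
    )
```

Keeping `source_files` and `annotations` in separate directories is what allows a source file to be re-annotated or deleted without touching the other side.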

Annotations are stored in a structured format:

{
  "annotator_id": "unique_id",
  "timestamp": "ISO_timestamp",
  "labels": {
    "column1": "value1",
    "column2": true,
    "column3": 5
  }
}

Judges compare annotations by:

- Analyzing both annotators' results

- Evaluating consistency and quality

- Providing numerical scores

- Highlighting discrepancies
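The record structure and the comparison steps above can be illustrated with a small stand-in. In Genna the judge is an AI model; the sketch below swaps in a plain exact-match comparison so that the numerical score and the list of discrepancies are easy to follow. Both `make_record` and `compare_records` are hypothetical helpers, and the scoring rule (share of labels that match) is an assumption.

```python
from datetime import datetime, timezone


def make_record(annotator_id, labels):
    """Build an annotation record in the structure shown above."""
    return {
        "annotator_id": annotator_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "labels": dict(labels),
    }


def compare_records(first, second):
    """Return (agreement score in [0, 1], names of labels that differ)."""
    names = sorted(set(first["labels"]) | set(second["labels"]))
    # Sentinel so a label present on only one side counts as a difference.
    missing = object()
    differing = [
        name
        for name in names
        if first["labels"].get(name, missing)
        != second["labels"].get(name, missing)
    ]
    score = 1.0 if not names else 1 - len(differing) / len(names)
    return score, differing


a = make_record("annotator_a", {"column1": "value1", "column2": True, "column3": 5})
b = make_record("annotator_b", {"column1": "value1", "column2": False, "column3": 5})
score, differing = compare_records(a, b)
```

In this example the two annotators agree on two of three labels, so the score is two thirds and `differing` contains only `column2`. An LLM judge can go further than exact matching, for example by treating two differently worded text labels as equivalent.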

- Project Setup

  - Clear project objectives

  - Well-defined annotation guidelines

  - Consistent label schemas

- Model Configuration

  - Descriptive model names

  - Clear, specific prompts

  - Appropriate temperature settings

- Annotation Review

  - Use the disagreement filter to focus on problematic cases

  - Review all model outputs before providing feedback

  - Pay attention to the summary indicators

  - Evaluate regularly using judges

- Data Management

  - Back up project data regularly

  - Keep category organization clear

  - Clean up unused files periodically

  - Use filters effectively to manage large datasets