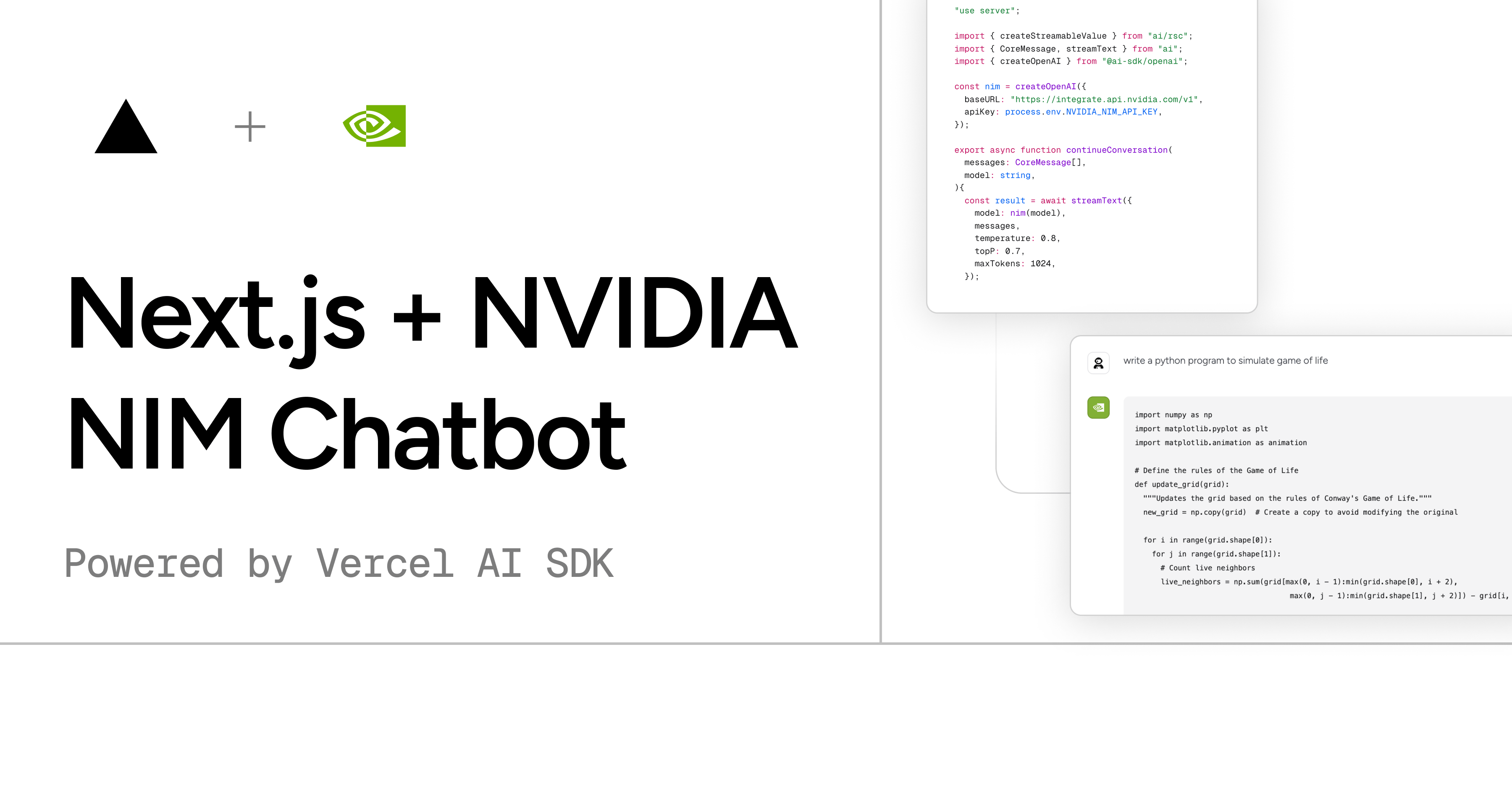

An open-source AI chatbot app template built with Next.js, the Vercel AI SDK and NVIDIA NIM.

- Next.js 14 App Router
- React Server Components (RSCs) for better performance
- NVIDIA NIM API for inference
- Vercel AI SDK for streaming chat responses
- shadcn/ui for UI components
- Tailwind CSS for styling and design
- Custom rate limiter for server actions
- Sonner for beautiful toast notifications
- Vercel OG for Open Graph images

This template uses the NVIDIA NIM API to fetch the models and run inference. The Vercel AI SDK streams the responses from the server to the client in real time.
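The Vercel AI SDK handles the streaming protocol for you, but it can help to see what a streamed completion looks like on the wire. The sketch below assumes NIM follows the OpenAI-compatible streaming format: server-sent events whose `data:` lines carry JSON with the new text in `choices[0].delta.content`, ending with `data: [DONE]`. The `extractDeltas` helper is hypothetical and is not part of the template or the SDK.

```typescript
// Hypothetical helper: pulls text deltas out of a buffer of SSE lines,
// assuming the OpenAI-compatible chat completions streaming format.
interface ChunkPayload {
  choices?: { delta?: { content?: string } }[];
}

function extractDeltas(sse: string): { text: string[]; done: boolean } {
  const text: string[] = [];
  let done = false;
  for (const rawLine of sse.split("\n")) {
    const line = rawLine.trim();
    // Only "data:" fields carry payloads; skip blank lines and other fields.
    if (!line.startsWith("data:")) continue;
    const data = line.slice("data:".length).trim();
    // The sentinel marks the end of the stream.
    if (data === "[DONE]") {
      done = true;
      break;
    }
    const payload = JSON.parse(data) as ChunkPayload;
    const delta = payload.choices?.[0]?.delta?.content;
    if (delta) text.push(delta);
  }
  return { text, done };
}
```

This sketch assumes the buffer contains only complete lines; a real network stream can split a line across chunks, which is one of the details the SDK takes care of.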

NVIDIA NIM gives every new user 1,000 free credits, so I've implemented a custom rate limiter to keep users from exceeding that limit. The rate limiter allows 10 requests per hour per IP address. You can change these settings in the `ratelimit.ts` file when deploying your own version of this template.
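The template's own limiter lives in `ratelimit.ts`; what follows is only a minimal sketch of the same idea, a fixed-window counter keyed by IP address that allows 10 requests per hour. It assumes a single long-running server process with in-memory state, and the names `FixedWindowRateLimiter` and `check` are hypothetical. On serverless platforms such as Vercel, in-memory state is not shared between instances, so a shared store would be needed there.

```typescript
// Minimal fixed-window rate limiter (hypothetical; not the template's ratelimit.ts).
// State lives in memory, so it is per-process and resets on restart.
interface RateWindow {
  count: number;   // requests seen in the current window
  resetAt: number; // epoch ms when the current window ends
}

class FixedWindowRateLimiter {
  private readonly windows = new Map<string, RateWindow>();
  private readonly limit: number;    // max requests per window
  private readonly windowMs: number; // window length in milliseconds

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Records a request for `key` and reports whether it is allowed.
  check(key: string, now: number = Date.now()): { allowed: boolean; remaining: number } {
    let w = this.windows.get(key);
    // Start a fresh window if this key is new or its window has expired.
    if (w === undefined || now >= w.resetAt) {
      w = { count: 0, resetAt: now + this.windowMs };
      this.windows.set(key, w);
    }
    if (w.count >= this.limit) {
      return { allowed: false, remaining: 0 };
    }
    w.count += 1;
    return { allowed: true, remaining: this.limit - w.count };
  }
}

// 10 requests per hour per IP address, matching the limit described above.
const limiter = new FixedWindowRateLimiter(10, 60 * 60 * 1000);
```

A server action would call `limiter.check(ip)` before forwarding the request to NIM and return an error when `allowed` is false. Expired entries are only overwritten, never evicted, so a longer-lived version would also prune old keys.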

I've only included text-to-text models in this template. You can easily add more models by following the instructions in the NVIDIA NIM documentation.
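One way to keep the model list in a single place is a small registry that both the model picker and the server action read. This is a hypothetical sketch: the `ModelOption` shape, the `MODELS` array, the `resolveModel` helper, and the `vendor/model` ID format are assumptions (check the exact IDs in the NVIDIA NIM catalog), and the template may organize its models differently.

```typescript
// Hypothetical model registry; IDs assume NIM's "vendor/model" naming.
interface ModelOption {
  id: string;       // model ID sent to the NIM API
  provider: string; // label shown in the model picker
}

const MODELS: readonly ModelOption[] = [
  { id: "google/gemma-2-9b-it", provider: "Google" },
  { id: "meta/llama3-8b-instruct", provider: "Meta" },
  { id: "nvidia/llama3-chatqa-1.5-8b", provider: "NVIDIA" },
  { id: "ibm/granite-8b-code-instruct", provider: "IBM" },
];

// Rejects IDs that are not in the registry, so a client cannot
// request an arbitrary (and possibly unsupported) model.
function resolveModel(id: string): ModelOption {
  const model = MODELS.find((m) => m.id === id);
  if (!model) {
    throw new Error(`Unsupported model: ${id}`);
  }
  return model;
}
```

With this layout, adding another text-to-text model is a one-line change to `MODELS`.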

The models available in this template are:

Google

- gemma-2b
- gemma-2-9b-it
- gemma-2-27b-it

Meta

- llama3-8b-instruct
- llama3-70b-instruct

NVIDIA

- llama3-chatqa-1.5-8b
- llama3-chatqa-1.5-70b
- nemotron-4-340b-instruct

IBM

- granite-8b-code-instruct
- granite-34b-code-instruct

Mistral AI models and many other models available via NVIDIA NIM don't currently work with the Vercel AI SDK, so I've excluded them from this template. However, you can still use them with the NVIDIA NIM API directly.
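Calling the API directly only takes a plain HTTP request. The sketch below just builds that request; it assumes NIM's OpenAI-compatible chat completions endpoint at `https://integrate.api.nvidia.com/v1/chat/completions` with Bearer-token authentication, so verify both against the NVIDIA NIM documentation. `buildNimChatRequest` and `NimRequest` are hypothetical names, and the model ID used in testing is only illustrative.

```typescript
// Hypothetical helper that builds a non-streaming chat completion request
// for NIM's OpenAI-compatible API (the endpoint URL is an assumption).
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

interface NimRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

const NIM_CHAT_URL = "https://integrate.api.nvidia.com/v1/chat/completions";

function buildNimChatRequest(apiKey: string, model: string, messages: ChatMessage[]): NimRequest {
  return {
    url: NIM_CHAT_URL,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    // Request the full response at once instead of a stream.
    body: JSON.stringify({ model, messages, stream: false }),
  };
}
```

From server code, the result could be sent with `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })`, keeping the API key off the client.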

You can deploy your own version of this template with Vercel by clicking the button below.

First, create a `.env.local` file in the root of the project using the environment variables defined in `.env.example`. Make sure not to commit your `.env.local` file to the repository.

```bash
NVIDIA_NIM_API_KEY=
```

To get an NVIDIA NIM API key, you need to sign up on the NVIDIA NIM website.
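Next.js loads `.env.local` into `process.env` automatically, but a missing key otherwise only surfaces as a failed API call. A minimal sketch of failing fast instead; `requireEnv` is a hypothetical helper, not necessarily something the template includes. It takes the environment as a parameter so it can be tested without touching the real process environment.

```typescript
// Hypothetical helper: returns a required environment variable or throws
// a descriptive error, instead of sending an empty key to the API.
type Env = Record<string, string | undefined>;

function requireEnv(env: Env, name: string): string {
  const value = env[name]?.trim();
  if (!value) {
    throw new Error(`Missing ${name}. Add it to .env.local (see .env.example).`);
  }
  return value;
}
```

In server code this would be called as `requireEnv(process.env, "NVIDIA_NIM_API_KEY")`.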

Then clone the repository and install the dependencies. This project uses Bun as the package manager.

```bash
bun install
```

Run the development server:

```bash
bun dev
```

The app should now be running at http://localhost:3000.

Contributions are welcome! Feel free to open an issue or submit a pull request if you have any ideas or suggestions.

I don't know what to put here. I'm not a lawyer. Use this template however you want. It's open-source and free to use.