This repo includes the source code of the paper: "Cascade residual learning: A two-stage convolutional neural network for stereo matching" by J. Pang, W. Sun, J.S. Ren, C. Yang and Q. Yan. Please cite our paper if you find this repo useful for your work:

@inproceedings{pang2017cascade,

title={Cascade residual learning: A two-stage convolutional neural network for stereo matching},

author={Pang, Jiahao and Sun, Wenxiu and Ren, Jimmy SJ and Yang, Chengxi and Yan, Qiong},

booktitle = {ICCV Workshop on Geometry Meets Deep Learning},

month = {Oct},

year = {2017}

}

- MATLAB (Our scripts has been tested on MATLAB R2015a)

- Download our trained model through this MEGA link or this Baiduyun link

- The KITTI Stereo 2015 dataset from the KITTI website

- Compile our modified Caffe and its MATLAB interface (matcaffe), our work uses the Remap layer (both

remap_layer.cppandremap_layer.hpp) from the repo of "View Synthesis by Appearance Flow" for warping. - Put the downloaded model "crl.caffemodel" and the "kitti_test" folder of the KITTI dataset in the "crl-release/models/crl" folder.

- Run

test_kitti.min the "crl-release/models/crl" folder for testing, our model definitiondeploy_kitti.prototxtis also in this folder. - Check out the newly generated folder

disp_0and you should see the results.

We do not provide the code for training. For training, we need an in-house differentiable interpolation layer developed by our company, SenseTime Group Limited. To make the code publically available, we have replaced the interpolation layer to the downsample layer of DispNet. Since the backward pass of downsample layer is not implemented, the code provided in this repo cannot be applied for training.

On the other hand, using the downsample layer provided in DispNet does not affect the performance of the network. In fact, the network definition deploy_kitti.prototxt (with downsample layer) can produced a D1-all error of 2.67% on the KITTI stereo 2015 leaderboard, exactly the same as our original CRL with the in-house interpolation layer.

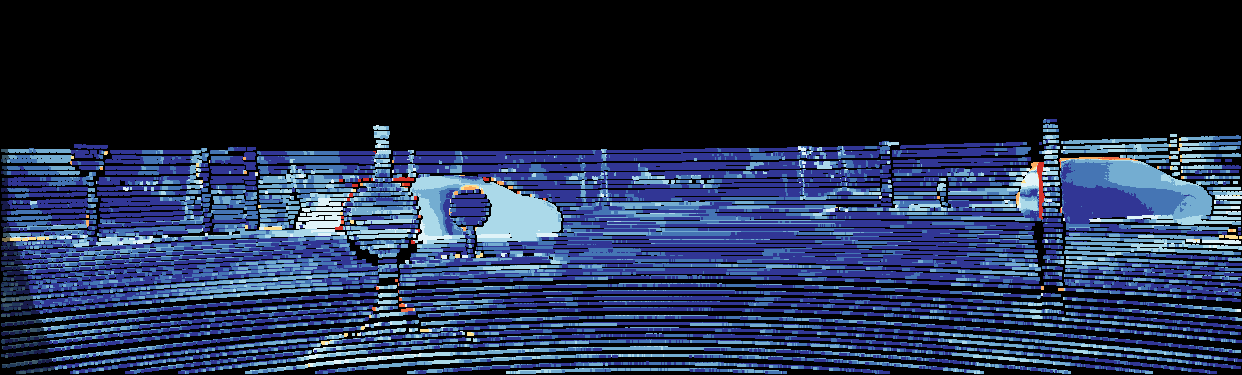

For your information, this is a group of results taken from the evaluation page of KITTI. To browse for more results, please click this link.