Closed

Labels

FrozenDueToAge, NeedsInvestigation (Someone must examine and confirm this is a valid issue and not a duplicate of an existing one.)

Description

What version of Go are you using (go version)?

$ go version 1.15.4-1.15.7

Does this issue reproduce with the latest release?

1.15.7

What operating system and processor architecture are you using (go env)?

go env Output

$ go env
GO111MODULE=""
GOARCH="amd64"
GOBIN=""
GOCACHE="/root/.cache/go-build"
GOENV="/root/.config/go/env"
GOEXE=""
GOFLAGS=""
GOHOSTARCH="amd64"
GOHOSTOS="linux"
GOINSECURE=""
GOMODCACHE="/home/test/go/pkg/mod"
GONOPROXY=""
GONOSUMDB=""
GOOS="linux"
GOPATH="/home/test/go/"
GOPRIVATE=""
GOPROXY="https://proxy.golang.org,direct"
GOROOT="/usr/lib/go"
GOSUMDB="sum.golang.org"
GOTMPDIR=""
GOTOOLDIR="/usr/lib/go/pkg/tool/linux_amd64"
GCCGO="gccgo"
AR="ar"
CC="gcc"
CXX="g++"
CGO_ENABLED="1"
GOMOD=""
CGO_CFLAGS="-I/home/db2inst2/sqllib/include"
CGO_CPPFLAGS=""
CGO_CXXFLAGS="-g -O2"
CGO_FFLAGS="-g -O2"
CGO_LDFLAGS="-L/home/db2inst2/sqllib/lib"
PKG_CONFIG="pkg-config"
GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build439006933=/tmp/go-build -gno-record-gcc-switches"

What did you do?

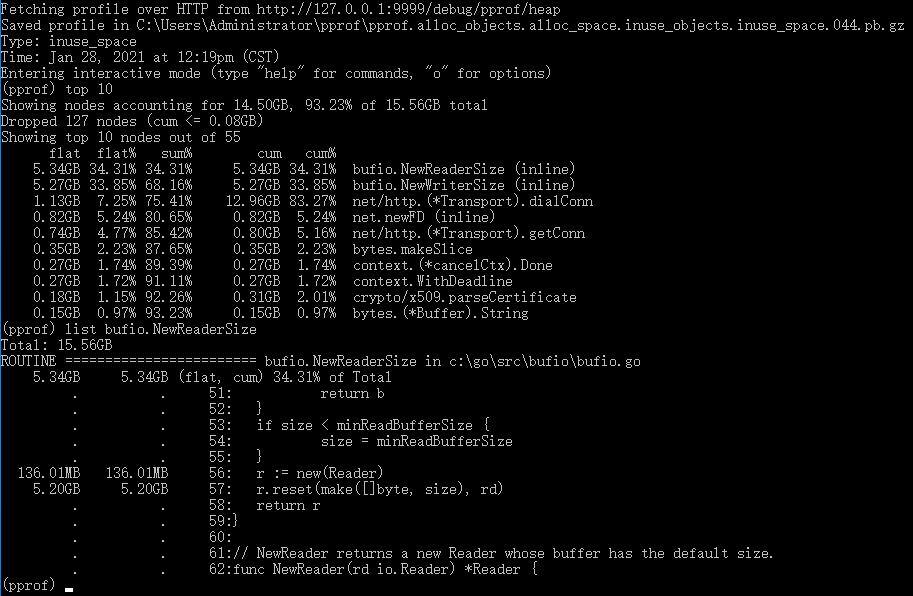

I use one shared "http.Transport" object for every "http.Client" HTTP request. After the program has been running for a long time, its memory usage keeps growing. A heap profile taken with pprof shows the following:

10602: 43425792 [70321: 288034816] @ 0x4e4047 0x4e3fef 0x4e278d 0x1d1961

# 0x4e4046 bufio.NewWriterSize+0xc26 c:/go/src/bufio/bufio.go:578

# 0x4e3fee net/http.(*Transport).dialConn+0xbce c:/go/src/net/http/transport.go:1706

# 0x4e278c net/http.(*Transport).dialConnFor+0xcc c:/go/src/net/http/transport.go:1421

My code looks like this:

// Shared transport, created once and reused by every client.
common.HttpTransport = &http.Transport{
    TLSClientConfig:     &tls.Config{InsecureSkipVerify: true},
    MaxIdleConns:        100,
    MaxIdleConnsPerHost: 100,
    IdleConnTimeout:     60 * time.Second,
    DisableKeepAlives:   false,
    Dial: func(netw, addr string) (net.Conn, error) {
        deadline := time.Now().Add(90 * time.Second)
        c, err := net.DialTimeout(netw, addr, time.Second*45)
        if err != nil {
            return nil, err
        }
        // Absolute deadline: all I/O on this connection fails 90s after dialing.
        c.SetDeadline(deadline)
        return c, nil
    },
    ForceAttemptHTTP2:     true,
    TLSHandshakeTimeout:   12 * time.Second,
    ResponseHeaderTimeout: 12 * time.Second,
    ExpectContinueTimeout: 1 * time.Second,
    DisableCompression:    true,
}

// Per-request code: a new client is built on top of the shared transport.
var (
    client *http.Client
)
client = &http.Client{
    Transport: common.HttpTransport,
}
client.Timeout = time.Duration(50) * time.Second

req, err := http.NewRequest("GET", URL, nil)
if err != nil {
    return false
}
req.Header.Add("Accept", "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8")

resp, err := client.Do(req)
if err != nil {
    if resp != nil {
        if resp.Body != nil {
            io.Copy(ioutil.Discard, resp.Body)
            resp.Body.Close()
        }
    }
    return false
}
// Drain and close the body so the connection can be reused.
defer func() {
    io.Copy(ioutil.Discard, resp.Body)
    resp.Body.Close()
}()

What did you expect to see?

What did you see instead?

10602: 43425792 [70321: 288034816] @ 0x4e4047 0x4e3fef 0x4e278d 0x1d1961

# 0x4e4046 bufio.NewWriterSize+0xc26 c:/go/src/bufio/bufio.go:578

# 0x4e3fee net/http.(*Transport).dialConn+0xbce c:/go/src/net/http/transport.go:1706

# 0x4e278c net/http.(*Transport).dialConnFor+0xcc c:/go/src/net/http/transport.go:1421

fatal error: runtime: out of memory

runtime stack:

runtime.throw(0x8a2950, 0x16)

/usr/lib/go/src/runtime/panic.go:1116 +0x72

runtime.sysMap(0xc53c000000, 0x4000000, 0x13956d8)

/usr/lib/go/src/runtime/mem_linux.go:169 +0xc6

runtime.(*mheap).sysAlloc(0xe752e0, 0x400000, 0x7fffffffffff, 0x9d1048)

/usr/lib/go/src/runtime/malloc.go:727 +0x1e5

runtime.(*mheap).grow(0xe752e0, 0x1, 0x0)

/usr/lib/go/src/runtime/mheap.go:1344 +0x85

runtime.(*mheap).allocSpan(0xe752e0, 0x1, 0x7ff1aaf31100, 0x13956e8, 0x7ff1aa8e2220)

/usr/lib/go/src/runtime/mheap.go:1160 +0x6b6

runtime.(*mheap).alloc.func1()

/usr/lib/go/src/runtime/mheap.go:907 +0x65

runtime.systemstack(0x0)

/usr/lib/go/src/runtime/asm_amd64.s:370 +0x66

runtime.mstart()

/usr/lib/go/src/runtime/proc.go:1116

goroutine 41707067 [running]:

runtime.systemstack_switch()

/usr/lib/go/src/runtime/asm_amd64.s:330 fp=0xc35abd11c0 sp=0xc35abd11b8 pc=0x46c600

runtime.(*mheap).alloc(0xe752e0, 0x1, 0xc53b7f0111, 0x9)

/usr/lib/go/src/runtime/mheap.go:901 +0x85 fp=0xc35abd1210 sp=0xc35abd11c0 pc=0x42ac25

runtime.(*mcentral).grow(0xe86898, 0x0)

/usr/lib/go/src/runtime/mcentral.go:506 +0x7a fp=0xc35abd1258 sp=0xc35abd1210 pc=0x41bfba

runtime.(*mcentral).cacheSpan(0xe86898, 0x7ff1aa8e2330)

/usr/lib/go/src/runtime/mcentral.go:177 +0x3e5 fp=0xc35abd12d0 sp=0xc35abd1258 pc=0x41bd45

runtime.(*mcache).refill(0x7ff26091a9b8, 0x11)

/usr/lib/go/src/runtime/mcache.go:142 +0xa5 fp=0xc35abd12f0 sp=0xc35abd12d0 pc=0x41b6e5

runtime.(*mcache).nextFree(0x7ff26091a9b8, 0xc53b7fd911, 0x12, 0x4785a5, 0x6)

/usr/lib/go/src/runtime/malloc.go:880 +0x8d fp=0xc35abd1328 sp=0xc35abd12f0 pc=0x41080d

runtime.mallocgc(0x70, 0x7f42a0, 0x881301, 0xc43a2647e0)

/usr/lib/go/src/runtime/malloc.go:1061 +0x834 fp=0xc35abd13c8 sp=0xc35abd1328 pc=0x4111f4

runtime.makeslice(0x7f42a0, 0x9, 0xd, 0xc53b7fda20)

/usr/lib/go/src/runtime/slice.go:98 +0x6c fp=0xc35abd13f8 sp=0xc35abd13c8 pc=0x45000c

math/big.nat.make(...)

/usr/lib/go/src/math/big/nat.go:69

math/big.nat.divLarge(0x0, 0x0, 0x0, 0xc53b7fda20, 0x11, 0x16, 0xc53b7fda20, 0x11, 0x16, 0xc00f869680, ...)

/usr/lib/go/src/math/big/nat.go:722 +0x48d fp=0xc35abd14e0 sp=0xc35abd13f8 pc=0x554f4d

math/big.nat.div(0x0, 0x0, 0x0, 0xc53b7fda20, 0x11, 0x16, 0xc53b7fda20, 0x11, 0x16, 0xc00f869680, ...)

/usr/lib/go/src/math/big/nat.go:672 +0x410 fp=0xc35abd15b0 sp=0xc35abd14e0 pc=0x554890

math/big.(*Int).QuoRem(0xc35abd16b0, 0xc35abd17f8, 0xc00f829d40, 0xc35abd17f8, 0xc35abd16b0, 0xc35abd1818)

/usr/lib/go/src/math/big/int.go:239 +0xbf fp=0xc35abd1650 sp=0xc35abd15b0 pc=0x54bdff

math/big.(*Int).Mod(0xc35abd17f8, 0xc35abd17f8, 0xc00f829d40, 0xc35abd17f8)

/usr/lib/go/src/math/big/int.go:270 +0x113 fp=0xc35abd1700 sp=0xc35abd1650 pc=0x54c0f3

crypto/elliptic.(*CurveParams).doubleJacobian(0xc00f8763c0, 0xc53b7b5e00, 0xc53b7b5de0, 0xc53b7b5e20, 0xc53b7b5e00, 0xc53b7b5de0, 0xc53b7b5e20)

/usr/lib/go/src/crypto/elliptic/elliptic.go:206 +0x110 fp=0xc35abd1848 sp=0xc35abd1700 pc=0x584150

crypto/elliptic.(*CurveParams).ScalarMult(0xc00f8763c0, 0xc00f829e00, 0xc00f829e40, 0xc2d540bcc0, 0x42, 0x42, 0xb, 0xffffffffffffffff)

/usr/lib/go/src/crypto/elliptic/elliptic.go:266 +0x15d fp=0xc35abd1948 sp=0xc35abd1848 pc=0x584bfd

crypto/elliptic.(*CurveParams).ScalarBaseMult(0xc00f8763c0, 0xc2d540bcc0, 0x42, 0x42, 0x42, 0x42)

/usr/lib/go/src/crypto/elliptic/elliptic.go:278 +0x5b fp=0xc35abd1998 sp=0xc35abd1948 pc=0x584d3b

crypto/elliptic.GenerateKey(0x925dc0, 0xc00f8763c0, 0x91a580, 0xc00007e570, 0x0, 0x709897009fe066ce, 0x4, 0x7ff26091a9b8, 0x9b90c3e467e2ca96, 0x979870ce66e09f, ...)

/usr/lib/go/src/crypto/elliptic/elliptic.go:308 +0x2a8 fp=0xc35abd1a50 sp=0xc35abd1998 pc=0x585028

crypto/tls.generateECDHEParameters(0x91a580, 0xc00007e570, 0xc00f870019, 0x1, 0x58, 0x0, 0x0)

/usr/lib/go/src/crypto/tls/key_schedule.go:132 +0x330 fp=0xc35abd1b10 sp=0xc35abd1a50 pc=0x5f9210

crypto/tls.(*clientHandshakeStateTLS13).processHelloRetryRequest(0xc35abd1df0, 0x0, 0x0)

/usr/lib/go/src/crypto/tls/handshake_client_tls13.go:227 +0x29d fp=0xc35abd1c00 sp=0xc35abd1b10 pc=0x5e8cdd

crypto/tls.(*clientHandshakeStateTLS13).handshake(0xc35abd1df0, 0xc2d94eed50, 0x4)

/usr/lib/go/src/crypto/tls/handshake_client_tls13.go:65 +0x38d fp=0xc35abd1c50 sp=0xc35abd1c00 pc=0x5e848d

crypto/tls.(*Conn).clientHandshake(0xc52563aa80, 0x0, 0x0)

/usr/lib/go/src/crypto/tls/handshake_client.go:209 +0x66b fp=0xc35abd1ee0 sp=0xc35abd1c50 pc=0x5e202b

crypto/tls.(*Conn).clientHandshake-fm(0xc373492f00, 0x912ff0)

/usr/lib/go/src/crypto/tls/handshake_client.go:136 +0x2a fp=0xc35abd1f08 sp=0xc35abd1ee0 pc=0x60d9aa

crypto/tls.(*Conn).Handshake(0xc52563aa80, 0x0, 0x0)

/usr/lib/go/src/crypto/tls/conn.go:1362 +0xc9 fp=0xc35abd1f78 sp=0xc35abd1f08 pc=0x5e0229

net/http.(*persistConn).addTLS.func2(0x0, 0xc52563aa80, 0xc525a8b310, 0xc525a926c0)

/usr/lib/go/src/net/http/transport.go:1509 +0x45 fp=0xc35abd1fc0 sp=0xc35abd1f78 pc=0x6dd745

runtime.goexit()

/usr/lib/go/src/runtime/asm_amd64.s:1374 +0x1 fp=0xc35abd1fc8 sp=0xc35abd1fc0 pc=0x46e3e1

created by net/http.(*persistConn).addTLS

/usr/lib/go/src/net/http/transport.go:1505 +0x177
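
The heap profile attributes the retained memory to the write buffer that dialConn allocates for each new connection, so one useful check is whether the shared transport is actually reusing connections or dialing a new one per request. The following is a minimal sketch of such a check, assuming a local httptest server stands in for the real endpoint. The helper names fetchReused and checkReuse are hypothetical, and the transport here is deliberately simpler than the one in the report:

```go
package main

import (
	"fmt"
	"io"
	"io/ioutil"
	"net/http"
	"net/http/httptest"
	"net/http/httptrace"
)

// fetchReused performs one GET through client and reports whether the
// transport handed out a previously used connection for it.
func fetchReused(client *http.Client, url string) (bool, error) {
	var reused bool
	trace := &httptrace.ClientTrace{
		GotConn: func(info httptrace.GotConnInfo) { reused = info.Reused },
	}
	req, err := http.NewRequest("GET", url, nil)
	if err != nil {
		return false, err
	}
	req = req.WithContext(httptrace.WithClientTrace(req.Context(), trace))
	resp, err := client.Do(req)
	if err != nil {
		return false, err
	}
	defer resp.Body.Close()
	// Drain the body so the connection can return to the idle pool.
	if _, err := io.Copy(ioutil.Discard, resp.Body); err != nil {
		return false, err
	}
	return reused, nil
}

// checkReuse sends two sequential requests over one shared transport to a
// throwaway local server and reports the reuse flag seen by each request.
func checkReuse() (first, second bool, err error) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "ok")
	}))
	defer srv.Close()

	tr := &http.Transport{MaxIdleConnsPerHost: 100}
	defer tr.CloseIdleConnections()
	client := &http.Client{Transport: tr}

	if first, err = fetchReused(client, srv.URL); err != nil {
		return false, false, err
	}
	second, err = fetchReused(client, srv.URL)
	return first, second, err
}

func main() {
	first, second, err := checkReuse()
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Printf("first reused=%v, second reused=%v\n", first, second)
}
```

Attaching the same GotConn hook to the real requests would show how often the production transport falls back to dialing a fresh connection.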
