FIWARE Real-time Processing (Spark).postman_collection.json


{

"info": {

"_postman_id": "c5e9ec44-1e49-4561-8b40-9c8636838f08",

"name": "FIWARE Real-time Processing (Spark)",

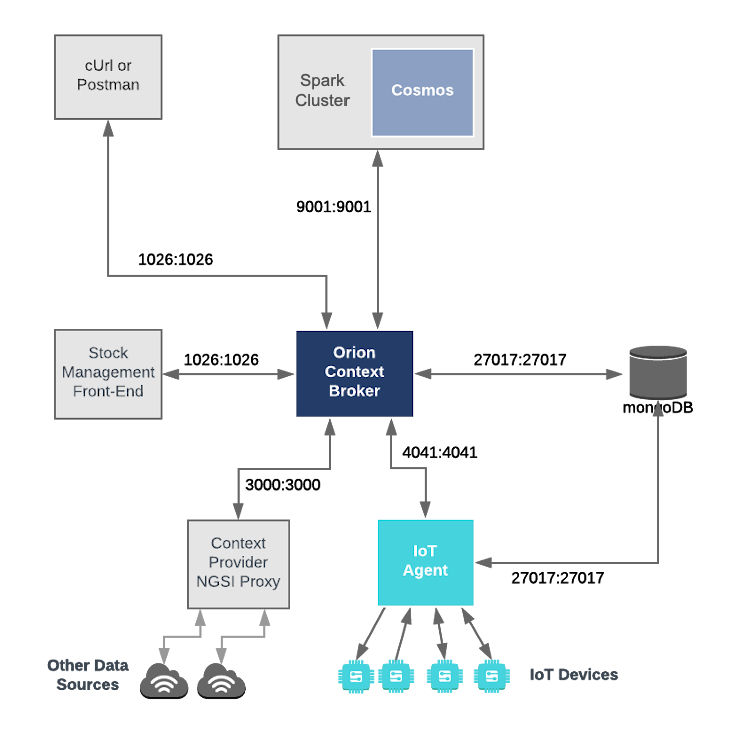

"description": "[](https://github.com/FIWARE/catalogue/blob/master/processing/README.md)\n[](https://fiware-ges.github.io/orion/api/v2/stable/)\n\nThis tutorial is an introduction to the [FIWARE Cosmos Orion Spark Connector](http://fiware-cosmos-spark.rtfd.io), which\nenables easier Big Data analysis over context, integrated with one of the most popular BigData platforms:\n[Apache Spark](https://spark.apache.org/). Apache Spark is a framework and distributed processing engine for stateful\ncomputations over unbounded and bounded data streams. Spark has been designed to run in all common cluster environments,\nperform computations at in-memory speed and at any scale.\n\nThe `docker-compose` files for this tutorial can be found on GitHub: \n\n [https://github.com/FIWARE/tutorials.Big-Data-Spark](https://github.com/FIWARE/tutorials.Big-Data-Spark)\n\n# Real-time Processing and Big Data Analysis\n\n> \"You have to find what sparks a light in you so that you in your own way can illuminate the world.\"\n>\n> — Oprah Winfrey\n\nSmart solutions based on FIWARE are architecturally designed around microservices. They are therefore are designed to\nscale-up from simple applications (such as the Supermarket tutorial) through to city-wide installations base on a large\narray of IoT sensors and other context data providers.\n\nThe massive amount of data involved eventually becomes too much for a single machine to analyse, process and store, and\ntherefore the work must be delegated to additional distributed services. These distributed systems form the basis of\nso-called **Big Data Analysis**. The distribution of tasks allows developers to be able to extract insights from huge\ndata sets which would be too complex to be dealt with using traditional methods. 
and uncover hidden patterns and\ncorrelations.\n\nAs we have seen, context data is core to any Smart Solution, and the Context Broker is able to monitor changes of state\nand raise [subscription events](https://github.com/Fiware/tutorials.Subscriptions) as the context changes. For smaller\ninstallations, each subscription event can be processed one-by-one by a single receiving endpoint, however as the system\ngrows, another technique will be required to avoid overwhelming the listener, potentially blocking resources and missing\nupdates.\n\n**Apache Spark** is an open-source distributed general-purpose cluster-computing framework. It provides an interface for\nprogramming entire clusters with implicit data parallelism and fault tolerance. The **Cosmos Spark** connector allows\ndevelopers write custom business logic to listen for context data subscription events and then process the flow of the\ncontext data. Spark is able to delegate these actions to other workers where they will be acted upon either in\nsequentially or in parallel as required. The data flow processing itself can be arbitrarily complex.\n\nObviously, in reality, our existing Supermarket scenario is far too small to require the use of a Big Data solution, but\nwill serve as a basis for demonstrating the type of real-time processing which may be required in a larger solution\nwhich is processing a continuous stream of context-data events.\n\n# Architecture\n\nThis application builds on the components and dummy IoT devices created in\n[previous tutorials](https://github.com/FIWARE/tutorials.IoT-Agent/). 
It will make use of three FIWARE components - the\n[Orion Context Broker](https://fiware-orion.readthedocs.io/en/latest/), the\n[IoT Agent for Ultralight 2.0](https://fiware-iotagent-ul.readthedocs.io/en/latest/), and the\n[Cosmos Orion Spark Connector](https://fiware-cosmos-spark.readthedocs.io/en/latest/) for connecting Orion to an\n[Apache Spark cluster](https://spark.apache.org/docs/latest/cluster-overview.html). The Spark cluster itself will\nconsist of a single **Cluster Manager** _master_ to coordinate execution and some **Worker Nodes** _worker_ to execute\nthe tasks.\n\nBoth the Orion Context Broker and the IoT Agent rely on open source [MongoDB](https://www.mongodb.com/) technology to\npersist the information they hold. We will also be using the dummy IoT devices created in the\n[previous tutorial](https://github.com/FIWARE/tutorials.IoT-Agent/).\n\nTherefore the overall architecture will consist of the following elements:\n\n- Two **FIWARE Generic Enablers** as independent microservices:\n - The FIWARE [Orion Context Broker](https://fiware-orion.readthedocs.io/en/latest/) which will receive requests\n using [NGSI](https://fiware.github.io/specifications/OpenAPI/ngsiv2)\n - The FIWARE [IoT Agent for Ultralight 2.0](https://fiware-iotagent-ul.readthedocs.io/en/latest/) which will\n receive northbound measurements from the dummy IoT devices in\n [Ultralight 2.0](https://fiware-iotagent-ul.readthedocs.io/en/latest/usermanual/index.html#user-programmers-manual)\n format and convert them to [NGSI](https://fiware.github.io/specifications/OpenAPI/ngsiv2) requests for the\n context broker to alter the state of the context entities\n- An [Apache Spark cluster](https://spark.apache.org/docs/latest/cluster-overview.html) consisting of a single\n **Cluster Manager** and **Worker Nodes**\n - The FIWARE [Cosmos Orion Spark Connector](https://fiware-cosmos-spark.readthedocs.io/en/latest/) will be\n deployed as part of the dataflow which will subscribe to 
context changes and perform operations on them in\n real-time\n- One [MongoDB](https://www.mongodb.com/) **database** :\n - Used by the **Orion Context Broker** to hold context data information such as data entities, subscriptions and\n registrations\n - Used by the **IoT Agent** to hold device information such as device URLs and Keys\n- Three **Context Providers**:\n - A webserver acting as a set of [dummy IoT devices](https://github.com/FIWARE/tutorials.IoT-Sensors) using the\n [Ultralight 2.0](https://fiware-iotagent-ul.readthedocs.io/en/latest/usermanual/index.html#user-programmers-manual)\n protocol running over HTTP.\n - The **Stock Management Frontend** is not used in this tutorial. It does the following:\n - Display store information and allow users to interact with the dummy IoT devices\n - Show which products can be bought at each store\n - Allow users to \"buy\" products and reduce the stock count.\n - The **Context Provider NGSI** proxy is not used in this tutorial. It does the following:\n - receives requests using [NGSI](https://fiware.github.io/specifications/OpenAPI/ngsiv2)\n - makes requests to publicly available data sources using their own APIs in a proprietary format\n - returns context data back to the Orion Context Broker in\n [NGSI](https://fiware.github.io/specifications/OpenAPI/ngsiv2) format.\n\nThe overall architecture can be seen below:\n\n\n\n## Spark Cluster Configuration\n\n```yaml\nspark-master:\n image: bde2020/spark-master:2.4.5-hadoop2.7\n container_name: spark-master\n expose:\n - \"8080\"\n - \"9001\"\n ports:\n - \"8080:8080\"\n - \"7077:7077\"\n - \"9001:9001\"\n environment:\n - INIT_DAEMON_STEP=setup_spark\n - \"constraint:node==spark-master\"\n```\n\n```yaml\nspark-worker-1:\n image: bde2020/spark-worker:2.4.5-hadoop2.7\n container_name: spark-worker-1\n depends_on:\n - spark-master\n ports:\n - \"8081:8081\"\n environment:\n - \"SPARK_MASTER=spark://spark-master:7077\"\n - \"constraint:node==spark-master\"\n```\n\nThe 
`spark-master` container is listening on the following ports:\n\n- Port `8080` is exposed so we can see the web frontend of the Apache Spark-Master Dashboard.\n- Port `7077` is used for internal communications.\n\nThe `spark-worker-1` container is listening on two ports:\n\n- Port `9001` is exposed so that the installation can receive context data subscriptions.\n- Port `8081` is exposed so we can see the web frontend of the Apache Spark-Worker-1 Dashboard.\n\n# Prerequisites\n\n## Docker and Docker Compose\n\nTo keep things simple, all components will be run using [Docker](https://www.docker.com). **Docker** is a container\ntechnology which allows different components to be isolated into their respective environments.\n\n- To install Docker on Windows follow the instructions [here](https://docs.docker.com/docker-for-windows/)\n- To install Docker on Mac follow the instructions [here](https://docs.docker.com/docker-for-mac/)\n- To install Docker on Linux follow the instructions [here](https://docs.docker.com/install/)\n\n**Docker Compose** is a tool for defining and running multi-container Docker applications. A series of\n[YAML files](https://github.com/FIWARE/tutorials.Big-Data-Spark/tree/master/docker-compose) are used to configure the\nrequired services for the application. This means all container services can be brought up in a single command. Docker\nCompose is installed by default as part of Docker for Windows and Docker for Mac; however, Linux users will need to\nfollow the instructions found [here](https://docs.docker.com/compose/install/).\n\nYou can check your current **Docker** and **Docker Compose** versions using the following commands:\n\n```console\ndocker-compose -v\ndocker version\n```\n\nPlease ensure that you are using Docker version 18.03 or higher and Docker Compose 1.21 or higher and upgrade if\nnecessary.\n\n## Maven\n\n[Apache Maven](https://maven.apache.org/download.cgi) is a software project management and comprehension tool. 
Based on\nthe concept of a project object model (POM), Maven can manage a project's build, reporting and documentation from a\ncentral piece of information. We will use Maven to define and download our dependencies and to build and package our\ncode into a JAR file.\n\n## Cygwin for Windows\n\nWe will start up our services using a simple Bash script. Windows users should download [cygwin](http://www.cygwin.com/)\nto provide command-line functionality similar to a Linux distribution on Windows.\n\n# Start Up\n\nBefore you start, you should ensure that you have obtained or built the necessary Docker images locally. Please clone\nthe repository and create the necessary images by running the commands shown below. Note that you might need to run some\nof the commands as a privileged user:\n\n```console\ngit clone https://github.com/ging/fiware-cosmos-orion-spark-connector-tutorial.git\ncd fiware-cosmos-orion-spark-connector-tutorial\n./services create\n```\n\nThis command will also import seed data from the previous tutorials and provision the dummy IoT sensors on startup.\n\nTo start the system, run the following command:\n\n```console\n./services start\n```\n\n> :information_source: **Note:** If you want to clean up and start over again, you can do so with the following command:\n>\n> ```console\n> ./services stop\n> ```",

"schema": "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"

},

"item": [

{

"name": "Receiving context data and performing operations",

"item": [

{

"name": "Orion - Subscribe to Context Changes",

"request": {

"method": "POST",

"header": [

{

"key": "Content-Type",

"value": "application/json"

},

{

"key": "fiware-service",

"value": "openiot"

},

{

"key": "fiware-servicepath",

"value": "/"

}

],

"body": {

"mode": "raw",

"raw": "{\n \"description\": \"Notify Spark of all context changes\",\n \"subject\": {\n \"entities\": [\n {\n \"idPattern\": \".*\"\n }\n ]\n },\n \"notification\": {\n \"http\": {\n \"url\": \"http://spark-worker-1:9001\"\n }\n }\n}"

},

"url": {

"raw": "http://{{orion}}/v2/subscriptions/",

"protocol": "http",

"host": [

"{{orion}}"

],

"path": [

"v2",

"subscriptions",

""

]

},

"description": "Once a dynamic context system is up and running (that is, once we have deployed the `Logger` job in the Spark cluster), we need to\ninform **Spark** of changes in context.\n\nThis is done by making a POST request to the `/v2/subscriptions` endpoint of the Orion Context Broker.\n\n- The `fiware-service` and `fiware-servicepath` headers are used to filter the subscription to only listen to\n measurements from the attached IoT Sensors, since they were provisioned using these settings.\n\n- The notification `url` must match the one our Spark program is listening on.\n\n- The optional `throttling` value defines the rate at which changes are sampled.\n"

},

"response": []

},

{

"name": "Orion - Check Subscription is working",

"request": {

"method": "GET",

"header": [

{

"key": "fiware-service",

"value": "openiot"

},

{

"key": "fiware-servicepath",

"value": "/"

}

],

"url": {

"raw": "http://{{orion}}/v2/subscriptions/",

"protocol": "http",

"host": [

"{{orion}}"

],

"path": [

"v2",

"subscriptions",

""

]

},

"description": "If a subscription has been created, we can check to see if it is firing by making a GET request to the\n`/v2/subscriptions` endpoint.\n\nWithin the `notification` section of the response, you can see several additional `attributes` which describe the health\nof the subscription.\n\nIf the criteria of the subscription have been met, `timesSent` should be greater than `0`. A zero value would indicate\nthat the `subject` of the subscription is incorrect or the subscription has been created with the wrong `fiware-servicepath`\nor `fiware-service` header.\n\nThe `lastNotification` should be a recent timestamp - if this is not the case, then the devices are not regularly\nsending data. Remember to unlock the **Smart Door** and switch on the **Smart Lamp**.\n\nThe `lastSuccess` should match the `lastNotification` date - if this is not the case then **Cosmos** is not receiving\nthe subscription properly. Check that the hostname and port are correct.\n\nFinally, check that the `status` of the subscription is `active` - an expired subscription will not fire."

},

"response": []

}

],

"description": "According to the [Apache Spark documentation](https://spark.apache.org/documentation.html), Spark Streaming is an\nextension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data\nstreams. Data can be ingested from many sources like Kafka, Flume, Kinesis, or TCP sockets, and can be processed using\ncomplex algorithms expressed with high-level functions like map, reduce, join and window. Finally, processed data can be\npushed out to filesystems, databases, and live dashboards. In fact, you can apply Spark’s machine learning and graph\nprocessing algorithms on data streams.\n\n\n\nInternally, it works as follows. Spark Streaming receives live input data streams and divides the data into batches,\nwhich are then processed by the Spark engine to generate the final stream of results in batches.\n\n\n\nThis means that to create a streaming data flow we must supply the following:\n\n- A mechanism for reading Context data as a **Source Operator**\n- Business logic to define the transform operations\n- A mechanism for pushing Context data back to the context broker as a **Sink Operator**\n\nThe **Cosmos Spark** connector - `orion.spark.connector-1.2.1.jar` offers both **Source** and **Sink** operators. It\ntherefore only remains to write the necessary Scala code to connect the streaming dataflow pipeline operations together.\nThe processing code can be compiled into a JAR file which can be uploaded to the Spark cluster. 
Two examples will be\ndetailed below. All the source code for this tutorial can be found within the\n[cosmos-examples](https://github.com/ging/fiware-cosmos-orion-spark-connector-tutorial/tree/master/cosmos-examples)\ndirectory.\n\nFurther Spark processing examples can be found on\n[Spark Connector Examples](https://fiware-cosmos-spark-examples.readthedocs.io/).\n\n### Compiling a JAR file for Spark\n\nAn existing `pom.xml` file has been created which holds the necessary prerequisites to build the examples JAR file.\n\nIn order to use the Orion Spark Connector we first need to manually install the connector JAR as an artifact using\nMaven:\n\n```console\ncd cosmos-examples\ncurl -LO https://github.com/ging/fiware-cosmos-orion-spark-connector/releases/download/FIWARE_7.9/orion.spark.connector-1.2.1.jar\nmvn install:install-file \\\n -Dfile=./orion.spark.connector-1.2.1.jar \\\n -DgroupId=org.fiware.cosmos \\\n -DartifactId=orion.spark.connector \\\n -Dversion=1.2.1 \\\n -Dpackaging=jar\n```\n\nThereafter the source code can be compiled by running the `mvn package` command within the same directory\n(`cosmos-examples`):\n\n```console\nmvn package\n```\n\nA new JAR file called `cosmos-examples-1.2.1.jar` will be created within the `cosmos-examples/target` directory.\n\n### Generating a stream of Context Data\n\nFor the purpose of this tutorial, we must be monitoring a system in which the context is periodically being updated. The\ndummy IoT Sensors can be used to do this. Open the device monitor page at `http://localhost:3000/device/monitor` and\nunlock a **Smart Door** and switch on a **Smart Lamp**. This can be done by selecting the appropriate command from\nthe drop-down list and pressing the `send` button. 
The stream of measurements coming from the devices can then be seen\non the same page:\n\n\n\n## Logger - Reading Context Data Streams\n\nThe first example makes use of the `OrionReceiver` operator in order to receive notifications from the Orion Context\nBroker. Specifically, the example counts the number of notifications that each type of device sends in one minute. You can\nfind the source code of the example in\n[org/fiware/cosmos/tutorial/Logger.scala](https://github.com/ging/fiware-cosmos-orion-spark-connector-tutorial/blob/master/cosmos-examples/src/main/scala/org/fiware/cosmos/tutorial/Logger.scala)\n\n### Logger - Installing the JAR\n\nRestart the containers if necessary, then access the worker container:\n\n```console\ndocker exec -it spark-worker-1 /bin/bash\n```\n\nThen run the following command to run the generated JAR package in the Spark cluster:\n\n```console\n/spark/bin/spark-submit \\\n--class org.fiware.cosmos.tutorial.Logger \\\n--master spark://spark-master:7077 \\\n--deploy-mode client /home/cosmos-examples/target/cosmos-examples-1.2.1.jar \\\n--conf \"spark.driver.extraJavaOptions=-Dlog4jspark.root.logger=WARN,console\"\n```\n",

"event": [

{

"listen": "prerequest",

"script": {

"id": "0477a1da-a1f5-42f3-a91e-fc90c4ed139a",

"type": "text/javascript",

"exec": [

""

]

}

},

{

"listen": "test",

"script": {

"id": "121ba677-9693-4482-89b3-2b30dc08fbf8",

"type": "text/javascript",

"exec": [

""

]

}

}

],

"protocolProfileBehavior": {}

},

{

"name": "Logger - Checking the Output",

"item": [],

"description": "Leave the subscription running for **one minute**. Then, the output on the console on which you ran the Spark job will\nlook similar to the following:\n\n```text\nSensor(Bell,3)\nSensor(Door,4)\nSensor(Lamp,7)\nSensor(Motion,6)\n```\n\n### Logger - Analyzing the Code\n\n```scala\npackage org.fiware.cosmos.tutorial\n\nimport org.apache.spark.SparkConf\nimport org.apache.spark.streaming.{Seconds, StreamingContext}\nimport org.fiware.cosmos.orion.spark.connector.OrionReceiver\n\nobject Logger{\n\n def main(args: Array[String]): Unit = {\n\n val conf = new SparkConf().setAppName(\"Logger\")\n val ssc = new StreamingContext(conf, Seconds(60))\n // Create Orion Receiver. Receive notifications on port 9001\n val eventStream = ssc.receiverStream(new OrionReceiver(9001))\n\n // Process event stream\n val processedDataStream= eventStream\n .flatMap(event => event.entities)\n .map(ent => {\n new Sensor(ent.`type`)\n })\n .countByValue()\n .window(Seconds(60))\n\n processedDataStream.print()\n\n ssc.start()\n ssc.awaitTermination()\n }\n case class Sensor(device: String)\n}\n```\n\nThe first lines of the program are aimed at importing the necessary dependencies, including the connector. The next step\nis to create an instance of the `OrionReceiver` using the class provided by the connector and to add it to the\n`StreamingContext` provided by Spark.\n\nThe `OrionReceiver` constructor accepts a port number (`9001`) as a parameter. This port is used to listen for the\nsubscription notifications coming from Orion, which are converted to a `DStream` of `NgsiEvent` objects. The definition of\nthese objects can be found within the\n[Orion-Spark Connector documentation](https://github.com/ging/fiware-cosmos-orion-spark-connector/blob/master/README.md#orionreceiver).\n\nThe stream processing consists of five separate steps. The first step (`flatMap()`) is performed in order to put\ntogether the entity objects of all the NGSI Events received in a period of time. 
Thereafter the code iterates over them\n(with the `map()` operation) and extracts the desired attributes. In this case, we are interested in the sensor `type`\n(`Door`, `Motion`, `Bell` or `Lamp`).\n\nWithin each iteration, we create a custom object with the property we need: the sensor `type`. For this purpose, we can\ndefine a case class as shown:\n\n```scala\ncase class Sensor(device: String)\n```\n\nThereafter we can count the created objects by the type of device (`countByValue()`) and perform operations such as\n`window()` on them.\n\nAfter the processing, the results are output to the console:\n\n```scala\nprocessedDataStream.print()\n```\n",

"protocolProfileBehavior": {}

},

{

"name": "Receiving context data, performing operations and persisting context data",

"item": [

{

"name": "Orion - Subscribe to Context Changes",

"request": {

"method": "POST",

"header": [

{

"key": "Content-Type",

"value": "application/json"

},

{

"key": "fiware-service",

"value": "openiot"

},

{

"key": "fiware-servicepath",

"value": "/"

}

],

"body": {

"mode": "raw",

"raw": "{\n \"description\": \"Notify Spark of all motion sensor changes\",\n \"subject\": {\n \"entities\": [\n {\n \"idPattern\": \"Motion.*\"\n }\n ]\n },\n \"notification\": {\n \"http\": {\n \"url\": \"http://spark-worker-1:9001\"\n }\n }\n}"

},

"url": {

"raw": "http://{{orion}}/v2/subscriptions/",

"protocol": "http",

"host": [

"{{orion}}"

],

"path": [

"v2",

"subscriptions",

""

]

},

"description": "If the previous example has not been run, a new subscription will need to be set up. A narrower subscription can be set\nup to only trigger a notification when a motion sensor detects movement.\n\n> **Note:** If the previous subscription already exists, this step, which creates a second, narrower Motion-only subscription,\n> is unnecessary. There is a filter within the business logic of the Scala task itself."

},

"response": []

},

{

"name": "Orion - Check Subscription is working",

"request": {

"method": "GET",

"header": [

{

"key": "fiware-service",

"value": "openiot"

},

{

"key": "fiware-servicepath",

"value": "/"

}

],

"url": {

"raw": "http://{{orion}}/v2/subscriptions/",

"protocol": "http",

"host": [

"{{orion}}"

],

"path": [

"v2",

"subscriptions",

""

]

},

"description": "If a subscription has been created, you can check to see if it is firing by making a GET\nrequest to the `/v2/subscriptions` endpoint.\n\nWithin the `notification` section of the response, you can see several additional `attributes` which describe the health of the subscription.\n\nIf the criteria of the subscription have been met, `timesSent` should be greater than `0`.\nA zero value would indicate that the `subject` of the subscription is incorrect or the subscription\nhas been created with the wrong `fiware-servicepath` or `fiware-service` header.\n\nThe `lastNotification` should be a recent timestamp - if this is not the case, then the devices\nare not regularly sending data. Remember to unlock the **Smart Door** and switch on the **Smart Lamp**.\n\nThe `lastSuccess` should match the `lastNotification` date - if this is not the case\nthen **Cosmos** is not receiving the subscription properly. Check that the hostname\nand port are correct.\n\nFinally, check that the `status` of the subscription is `active` - an expired subscription\nwill not fire."

},

"response": []

},

{

"name": "Orion - Delete Subscription",

"request": {

"method": "DELETE",

"header": [

{

"key": "fiware-service",

"value": "openiot"

},

{

"key": "fiware-servicepath",

"value": "/"

}

],

"body": {

"mode": "raw",

"raw": ""

},

"url": {

"raw": "http://{{orion}}/v2/subscriptions/{{subscriptionId}}",

"protocol": "http",

"host": [

"{{orion}}"

],

"path": [

"v2",

"subscriptions",

"{{subscriptionId}}"

]

},

"description": "If a subscription is no longer needed, it can be removed by making a DELETE\nrequest to the `/v2/subscriptions/{{subscriptionId}}` endpoint.\n\nThe `subscriptionId` collection variable must be set to the `id` of the subscription to delete. The `id` of each\nsubscription is shown in the response to a GET request to the `/v2/subscriptions` endpoint."

},

"response": []

}

],

"description": "The second example switches on a lamp when its motion sensor detects movement.\n\nThe dataflow stream uses the `OrionReceiver` operator in order to receive notifications and filters the input to only\nrespond to motion sensors and then uses the `OrionSink` to push processed context back to the Context Broker. You can\nfind the source code of the example in\n[org/fiware/cosmos/tutorial/Feedback.scala](https://github.com/ging/fiware-cosmos-orion-spark-connector-tutorial/blob/master/cosmos-examples/src/main/scala/org/fiware/cosmos/tutorial/Feedback.scala)\n\n### Feedback Loop - Installing the JAR\n\n```console\n/spark/bin/spark-submit \\\n--class org.fiware.cosmos.tutorial.Feedback \\\n--master spark://spark-master:7077 \\\n--deploy-mode client /home/cosmos-examples/target/cosmos-examples-1.2.1.jar \\\n--conf \"spark.driver.extraJavaOptions=-Dlog4jspark.root.logger=WARN,console\"\n```",

"event": [

{

"listen": "prerequest",

"script": {

"id": "304ca99a-e4d0-42b1-bb12-c622a653d9af",

"type": "text/javascript",

"exec": [

""

]

}

},

{

"listen": "test",

"script": {

"id": "cb7aca3f-0b0a-4afa-850b-af050c4240f7",

"type": "text/javascript",

"exec": [

""

]

}

}

],

"protocolProfileBehavior": {}

},

{

"name": "Feedback Loop - Checking the Output",

"item": [],

"description": "Go to `http://localhost:3000/device/monitor`\n\nWithin any Store, unlock the door and wait. Once the door opens and the Motion sensor is triggered, the lamp will switch\non directly.\n\n### Feedback Loop - Analyzing the Code\n\n```scala\npackage org.fiware.cosmos.tutorial\n\nimport org.apache.spark.SparkConf\nimport org.apache.spark.streaming.{Seconds, StreamingContext}\nimport org.fiware.cosmos.orion.spark.connector._\n\nobject Feedback {\n final val CONTENT_TYPE = ContentType.JSON\n final val METHOD = HTTPMethod.PATCH\n final val CONTENT = \"{\\n \\\"on\\\": {\\n \\\"type\\\" : \\\"command\\\",\\n \\\"value\\\" : \\\"\\\"\\n }\\n}\"\n final val HEADERS = Map(\"fiware-service\" -> \"openiot\",\"fiware-servicepath\" -> \"/\",\"Accept\" -> \"*/*\")\n\n def main(args: Array[String]): Unit = {\n\n val conf = new SparkConf().setAppName(\"Feedback\")\n val ssc = new StreamingContext(conf, Seconds(10))\n // Create Orion Receiver. Receive notifications on port 9001\n val eventStream = ssc.receiverStream(new OrionReceiver(9001))\n\n // Process event stream\n val processedDataStream = eventStream\n .flatMap(event => event.entities)\n .filter(entity=>(entity.attrs(\"count\").value == \"1\"))\n .map(entity=> new Sensor(entity.id))\n .window(Seconds(10))\n\n val sinkStream= processedDataStream.map(sensor => {\n val url=\"http://localhost:1026/v2/entities/Lamp:\"+sensor.id.takeRight(3)+\"/attrs\"\n OrionSinkObject(CONTENT,url,CONTENT_TYPE,METHOD,HEADERS)\n })\n // Add Orion Sink\n OrionSink.addSink( sinkStream )\n\n // Print the processed results to the console\n processedDataStream.print()\n ssc.start()\n\n ssc.awaitTermination()\n }\n\n case class Sensor(id: String)\n}\n```\n\nAs you can see, it is similar to the previous example. 
The main difference is that it writes the processed data back to\nthe Context Broker through the **`OrionSink`**.\n\nThe arguments of the **`OrionSinkObject`** are:\n\n- **Message**: `\"{\\n \\\"on\\\": {\\n \\\"type\\\" : \\\"command\\\",\\n \\\"value\\\" : \\\"\\\"\\n }\\n}\"`. We send the `on` command.\n- **URL**: `\"http://localhost:1026/v2/entities/Lamp:\"+sensor.id.takeRight(3)+\"/attrs\"`. `takeRight(3)` gets the number of\n the room, for example `001`.\n- **Content Type**: `ContentType.JSON`.\n- **HTTP Method**: `HTTPMethod.PATCH`.\n- **Headers**: `Map(\"fiware-service\" -> \"openiot\",\"fiware-servicepath\" -> \"/\",\"Accept\" -> \"*/*\")`. Optional parameter.\n We add the headers we need in the HTTP Request.",

"event": [

{

"listen": "prerequest",

"script": {

"id": "c38a5871-639b-4f83-8326-2d0c6e247a87",

"type": "text/javascript",

"exec": [

""

]

}

},

{

"listen": "test",

"script": {

"id": "7c1b3690-4a40-419d-9e4f-dd8f3acde622",

"type": "text/javascript",

"exec": [

""

]

}

}

],

"protocolProfileBehavior": {}

}

],

"event": [

{

"listen": "prerequest",

"script": {

"id": "e3ee3d45-9b02-4548-b269-b1d13f810c9f",

"type": "text/javascript",

"exec": [

""

]

}

},

{

"listen": "test",

"script": {

"id": "b18ca78d-898a-4efc-97cd-95e8072bee01",

"type": "text/javascript",

"exec": [

""

]

}

}

],

"variable": [

{

"id": "016189d7-34ff-4413-953e-15ea05c4842d",

"key": "orion",

"value": "localhost:1026"

},

{

"id": "798ae722-6f27-4ccb-8ce4-eb184528e4ec",

"key": "subscriptionId",

"value": "5e134a0c924f6d7d27b63844"

}

],

"protocolProfileBehavior": {}

}