Add SimpleAuth implementation #106

|

Yes, I'll work with the person who raised the issue in EG. Currently, my Hadoop cluster is unavailable (not sure why) so I can't do much. I'll post back here. |

|

Hi Dmitry, this change doesn't seem to work. It only sends the user.name param when preparing the request, and nothing changes when I call a method such as ResourceManager.cluster_information. By the way, I tested with the change below and it works, but I don't know whether there are side effects. |

|

Hm, I wonder why it says "in the first browser interaction" then. Based on the suggested change, it would send it all the time. Either way, can you confirm whether this works for some other endpoints too? UPD: perhaps it's because we don't save some kind of session header. I wonder what kind of header it is. Could you also print the keys in the response? |
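For readers following along: Hadoop's simple (pseudo) HTTP authentication takes the user from a `user.name` query-string parameter. A minimal sketch of adding that parameter to a URL, using only the standard library, might look like the following. The helper name `with_user_name` and the host `rm.example` are made up for illustration and are not part of this PR.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_user_name(url, username):
    """Return `url` with a single user.name query parameter set to `username`.

    Hypothetical helper for illustration only; not code from this PR.
    """
    parts = urlsplit(url)
    # Keep existing parameters, dropping any previous user.name value.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "user.name"
    ]
    query.append(("user.name", username))
    return urlunsplit(parts._replace(query=urlencode(query)))


if __name__ == "__main__":
    print(with_user_name("http://rm.example:8088/ws/v1/cluster/info", "alice"))
```

Sending the parameter on every request, as the suggested change does, is the simplest option; sending it once and reusing whatever the server hands back requires keeping that state somewhere between requests.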

|

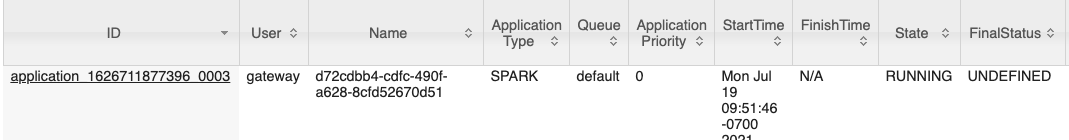

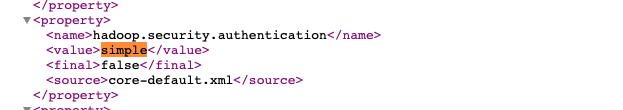

I deployed both this package and a version of EnterpriseGateway (EG) that creates a SimpleAuth for its access to the YARN RM. The code attempts to honor the KERNEL_USERNAME conveyed to the EG kernel start request. If no value is provided, the current username is used (which will be the user in which the EG server process is running). In my case, EG is running as user If I convey a KERNEL_USERNAME of and the launch succeeds. However, when looking at the status of the job in YARN, I see the job is under user There are a couple of mentions of impersonation on jupyter-server/enterprise_gateway#979 and that clearly is not happening. Is this approach actually supposed to impersonate the referenced user? In my case, user 'alice' is not an actual user, just a name. If this is working as intended, I really don't see what value it provides over not specifying an auth instance at all. I just realized I need to set |

|

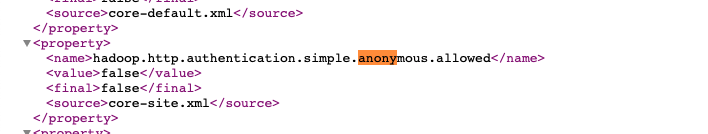

After disabling anonymous access, I get 401's when using either the process user ( |

|

@kevin-bates Indeed, that makes sense: session headers/cookies are not shared between requests (I didn't add logic for that, assuming that Hadoop would do the work for me), and I think that's the root cause. I would appreciate it if you could collect the debugging information I mentioned above, namely the list of header/cookie keys that have to be saved in the Auth object and passed along. |
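As a rough illustration of the kind of debugging information being asked for, the sketch below lists the cookie names found in a `Set-Cookie` header using the standard library's `http.cookies`. The header value shown is an invented sample shaped like a `hadoop.auth` cookie, not output captured from a real cluster, and `cookie_names` is a hypothetical helper.

```python
from http.cookies import SimpleCookie


def cookie_names(set_cookie_header):
    """Return the cookie names present in a Set-Cookie header value.

    Hypothetical helper for illustration only.
    """
    jar = SimpleCookie()
    jar.load(set_cookie_header)
    return list(jar.keys())


# Invented sample value; a real cluster's cookie will differ.
SAMPLE_HEADER = 'hadoop.auth="u=alice&p=alice&t=simple&e=1626700000000&s=abc="; Path=/; HttpOnly'

if __name__ == "__main__":
    print(cookie_names(SAMPLE_HEADER))
```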

|

Here's the updated code in the exception handler in

    if response.status_code != 200:
        log.warning("Failed to access RM '{url}' - HTTP Code '{status}', continuing...".format(url=url, status=response.status_code))
        log.warning(response.headers.keys())
        cookiejar = response.cookies
        for cookie in cookiejar:
            log.warning(cookie.name)
        return False

and corresponding output... |

|

@kevin-bates give updated code another try, see my commit |

|

Thanks Dmitry. Looks like we get about the same thing. However, it dawned on me that the "response" you wanted to debug is the response you just updated. Previously, I was logging the response that is returned in I went ahead and moved the debug statements into the Here's how the method looks...

    def __call__(self, request):
        if not self.auth_done:
            _session = requests.Session()
            r = _session.get(request.url, params={"user.name": self.username})
            r.raise_for_status()
            self.auth_token = _session.cookies.get_dict()['hadoop.auth']
            self.auth_done = True
            log.warning(r.headers.keys())
            cookiejar = r.cookies
            for cookie in cookiejar:
                log.warning(cookie.name)
        else:
            r.cookies.set("hadoop.auth", self.auth_token)
        return request

FYI, I will be unavailable for the rest of Monday (July 19). |

|

Might have figured the problem, another commit... |

|

I removed the other debug logging. Looks like we don't always have a |

... without losing them from session

|

I didn't realize it was a PreparedRequest; that use case should now be covered too. |
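To make the PreparedRequest point concrete: a `requests` auth callable receives a `PreparedRequest`, which exposes headers rather than a public cookie jar, so a cached cookie has to be written into the `Cookie` header. The following is only a sketch under that assumption and is not the code merged in this PR; `CachedCookieAuth`, `fetch_hadoop_auth_token`, and the injectable `fetch_token` argument are hypothetical names introduced here so the caching logic can be exercised without a cluster.

```python
import requests
from requests.auth import AuthBase


def fetch_hadoop_auth_token(url, username):
    """Request `url` once with user.name and return the hadoop.auth cookie value.

    Hypothetical helper; requires a reachable endpoint that sets the cookie.
    """
    with requests.Session() as session:
        response = session.get(url, params={"user.name": username})
        response.raise_for_status()
        token = session.cookies.get("hadoop.auth")
    if token is None:
        raise ValueError("server did not set a hadoop.auth cookie")
    return token


class CachedCookieAuth(AuthBase):
    """Illustrative auth object: fetch the cookie once, then reuse it."""

    def __init__(self, username, fetch_token=fetch_hadoop_auth_token):
        self.username = username
        self._fetch_token = fetch_token  # callable(url, username) -> cookie value
        self.auth_token = None

    def __call__(self, request):
        # `request` is a PreparedRequest here.
        if self.auth_token is None:
            self.auth_token = self._fetch_token(request.url, self.username)
        cookie = "hadoop.auth={}".format(self.auth_token)
        # Preserve any Cookie header that was already prepared.
        existing = request.headers.get("Cookie")
        request.headers["Cookie"] = "{}; {}".format(existing, cookie) if existing else cookie
        return request
```

Passing the fetch function in as an argument is a design choice for this sketch only: it lets a test substitute a stub and confirm that the token is fetched once and then reused on later requests.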

|

I tested the change on EG 2.5.0 with an Aliyun EMR cluster, and it worked with both anonymous access and Kerberos disabled. |

|

I have added default user |

|

The latest set of changes appears to work - thank you! I'm still not clear on how the user identity is actually used, as it all seems to work even when providing a non-existent user name. Is it more about just including a value, primarily for auditing purposes? Since SimpleAuth appears to work even when anonymous is allowed, I'm inclined to make this the default behavior in EG. |

Thank you Dmitry!

|

You're right on this. I think it was meant to be called SimpleNoAuth :) Either way, merging this in. |

|

Reminder to include an example of usage in the README before we release a new version of this package. |

Fix #105

@kevin-bates I would appreciate some assistance with testing, as I don't have such configuration of cluster available.