This repository contains material from the third Udacity DRL project and the DDPG-pendulum coding exercise.

In this project, we use MADDPG to train multiple agents in two types of environment.
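For reference, the standard MADDPG update is summarised below. It is a sketch of the usual formulation (decentralised actors, one centralised critic per agent), not a transcription of this repository's code, which is discussed in the report. The notation is assumed here: $N$ agents, local observations $o_i$, joint observation $\mathbf{x} = (o_1, \ldots, o_N)$, deterministic actors $\mu_i$ with parameters $\theta_i$, critics $Q_i$ with parameters $\phi_i$, target networks marked with a prime, discount $\gamma$, soft-update rate $\tau$ and replay buffer $\mathcal{D}$.

$$
y_i = r_i + \gamma \, Q_i'\big(\mathbf{x}', a_1', \ldots, a_N'\big)\Big|_{a_j' = \mu_j'(o_j')}
$$

$$
\mathcal{L}(\phi_i) = \mathbb{E}_{(\mathbf{x}, a_1, \ldots, a_N, r_i, \mathbf{x}') \sim \mathcal{D}}\Big[\big(Q_i(\mathbf{x}, a_1, \ldots, a_N) - y_i\big)^2\Big]
$$

$$
\nabla_{\theta_i} J(\mu_i) = \mathbb{E}_{\mathbf{x}, a_1, \ldots, a_N \sim \mathcal{D}}\Big[\nabla_{\theta_i} \mu_i(o_i) \, \nabla_{a_i} Q_i(\mathbf{x}, a_1, \ldots, a_N)\big|_{a_i = \mu_i(o_i)}\Big]
$$

$$
\theta_i' \leftarrow \tau \theta_i + (1 - \tau)\,\theta_i', \qquad \phi_i' \leftarrow \tau \phi_i + (1 - \tau)\,\phi_i'
$$

Each critic sees every agent's observation and action, while each actor acts only on its own observation, so execution stays decentralised. The soft target update is the same one used in single-agent DDPG (as in the DDPG-pendulum exercise); implementations commonly also multiply the bootstrap term in $y_i$ by $(1 - d_i)$ so that terminal transitions do not bootstrap.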

First, Tennis:

In this environment, two agents control rackets to bounce a ball over a net. If an agent hits the ball over the net, it receives a reward of

The observation space consists of

Second, Soccer:

In this discrete control environment, four agents compete in a

An environment is considered solved when each agent obtains an average score of at least +0.5 over 100 consecutive episodes.
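The solved criterion can be checked incrementally during training. The sketch below is a hypothetical helper (`SolvedTracker` is not part of this repository) that keeps a window of the last 100 total episode scores for each agent and reports the environment as solved once every agent's window average reaches +0.5, following the per-agent wording above.

```python
from collections import deque
from typing import Deque, List, Sequence


class SolvedTracker:
    """Hypothetical helper: tracks per-agent episode scores and checks whether
    every agent's mean over the last `window` episodes reaches `target`."""

    def __init__(self, n_agents: int, window: int = 100, target: float = 0.5) -> None:
        self.window = window
        self.target = target
        # One bounded deque per agent; the oldest episode drops out automatically.
        self.history: List[Deque[float]] = [deque(maxlen=window) for _ in range(n_agents)]

    def add_episode(self, scores: Sequence[float]) -> None:
        """Record each agent's total (undiscounted) reward for one episode."""
        if len(scores) != len(self.history):
            raise ValueError("expected exactly one score per agent")
        for agent_history, score in zip(self.history, scores):
            agent_history.append(float(score))

    def averages(self) -> List[float]:
        """Mean score of each agent over the episodes currently in its window."""
        return [sum(h) / len(h) if h else 0.0 for h in self.history]

    def solved(self) -> bool:
        """True once every window is full and every agent meets the target."""
        full = all(len(h) == self.window for h in self.history)
        return full and all(avg >= self.target for avg in self.averages())
```

In a training loop, call `tracker.add_episode(episode_scores)` after each episode and stop (or checkpoint) when `tracker.solved()` returns `True`. Note that Udacity's original Tennis criterion averages the per-episode maximum over the two agents rather than each agent separately; that variant would store `max(scores)` in a single window instead.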

To set up your Python environment to run the code in this repository, follow the instructions below.

- Create (and activate) a new environment with Python 3.9.

  - Linux or Mac:

        conda create --name drlnd python=3.9
        source activate drlnd

  - Windows:

        conda create --name drlnd python=3.9
        activate drlnd

- Follow the instructions on the PyTorch web page to install PyTorch and its dependencies (PIL, NumPy, ...). For example, on Windows with CUDA 11.6:

      conda install pytorch torchvision torchaudio cudatoolkit=11.6 -c pytorch -c conda-forge

- Follow the instructions in this repository to perform a minimal install of OpenAI Gym.

  - Install the Box2D environment group by following the instructions here:

        pip install gym[box2d]

- Follow the instructions in the third Udacity DRL project to get the environment.

-

Clone the repository, and navigate to the

python/folder. Then, install several dependencies.

git clone https://github.com/eljandoubi/MADDPG-for-Collaboration-and-Competition.git

cd MADDPG-for-Collaboration-and-Competition/python

pip install .- Create an IPython kernel for the

drlndenvironment.

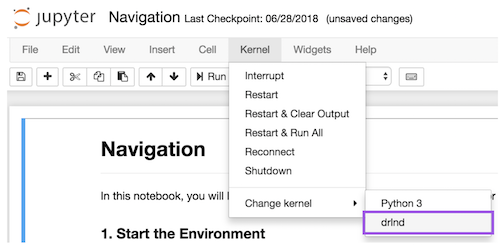

python -m ipykernel install --user --name drlnd --display-name "drlnd"- Before running code in a notebook, change the kernel to match the

drlndenvironment by using the drop-downKernelmenu.
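Once these steps are done, a quick check from a notebook cell running on the `drlnd` kernel can confirm that the packages are importable. The sketch below is a hypothetical helper, not part of the repository. It assumes the import names `torch`, `gym`, `Box2D` (installed by `gym[box2d]` through `box2d-py`) and `ipykernel`, and it also assumes that `pip install .` in `python/` provides Udacity's `unityagents` package.

```python
import importlib.util
import sys
from typing import Dict, Tuple


def module_available(name: str) -> bool:
    """True if `name` can be located on the import path (it is not imported)."""
    return importlib.util.find_spec(name) is not None


def check_setup(expected_python: Tuple[int, int] = (3, 9)) -> Dict[str, bool]:
    """Summarise which parts of the setup steps appear to be in place."""
    report = {
        "python_version_ok": tuple(sys.version_info[:2]) == tuple(expected_python),
        "torch": module_available("torch"),
        "gym": module_available("gym"),
        # box2d-py, pulled in by gym[box2d], is imported as `Box2D`.
        "Box2D": module_available("Box2D"),
        "ipykernel": module_available("ipykernel"),
        # Assumption: `pip install .` in python/ installs the `unityagents`
        # package, as in Udacity's DRL repository.
        "unityagents": module_available("unityagents"),
    }
    cuda = False
    if report["torch"]:
        try:
            import torch  # imported only when it is actually installed
            cuda = bool(torch.cuda.is_available())
        except Exception:  # a broken install should not crash the check
            cuda = False
    report["cuda"] = cuda
    return report


if __name__ == "__main__":
    for item, ok in check_setup().items():
        print(f"{item:18s} {'yes' if ok else 'no'}")
```

`find_spec` only locates modules, so the check stays fast; `torch` is imported solely to ask whether CUDA is available.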

You can train agents on the Tennis environment and/or run inference with them:

- Run the training and/or inference cells of `Tennis.ipynb`.

The pre-trained models with the highest score are stored in `Tennis_checkpoint`.

The same applies to Soccer, but its current checkpoint is not the best-scoring one.

The implementation and results are discussed in the report.