-

Notifications

You must be signed in to change notification settings - Fork 4.3k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Enable torch.autocast with ZeRO #6993

Open

tohtana

wants to merge

62

commits into

master

Choose a base branch

from

tohtana/support_autocast

base: master

Could not load branches

Branch not found: {{ refName }}

Loading

Could not load tags

Nothing to show

Loading

Are you sure you want to change the base?

Some commits from the old base branch may be removed from the timeline,

and old review comments may become outdated.

Open

Conversation

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

Fix #6772 --------- Co-authored-by: Logan Adams <114770087+loadams@users.noreply.github.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

…#6967) - Issues with nv-sd updates, will follow up with a subsequent PR Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

NVIDIA Blackwell GPU generation has number 10. The SM code and architecture should be `100`, but the current code generates `1.`, because it expects a 2 characters string. This change modifies the logic to consider it as a string that contains a `.`, hence splits the string and uses the array of strings. Signed-off-by: Fabien Dupont <fdupont@redhat.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Olatunji Ruwase <olruwase@microsoft.com> Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Fabien Dupont <fdupont@redhat.com> Co-authored-by: Fabien Dupont <fabiendupont@fabiendupont.fr> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

1. update intel oneAPI basekit to 2025.0 2. update torch/ipex/oneccl to 2.5 Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Same as [this PR](#6922). [affeb88](affeb88) I noticed the CI updated the DCO check recently. Using the suggested rebase method for sign-off would reintroduce many conflicts, so I opted for a squash merge with sign-off instead. thanks: ) Signed-off-by: inkcherry <mingzhi.liu@intel.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Those files have code that gets run when importing them, so in systems that doesn't support triton but have triton installed this causes issues. In general, I think it is better to import triton when it is installed and supported. Signed-off-by: Omar Elayan <oelayan@habana.ai> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Logan Adams <loadams@microsoft.com> Co-authored-by: Stas Bekman <stas00@users.noreply.github.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Fix #7014 Avoid naming collision on `partition()` --------- Signed-off-by: Olatunji Ruwase <olruwase@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Fix typos Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

BUGFIX for Apple Silicon hostname #6497 --------- Signed-off-by: Fabien Dupont <fdupont@redhat.com> Signed-off-by: Olatunji Ruwase <olruwase@microsoft.com> Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: inkcherry <mingzhi.liu@intel.com> Signed-off-by: Roman Fitzjalen <romaactor@gmail.com> Co-authored-by: Logan Adams <114770087+loadams@users.noreply.github.com> Co-authored-by: Fabien Dupont <fabiendupont@fabiendupont.fr> Co-authored-by: Olatunji Ruwase <olruwase@microsoft.com> Co-authored-by: Liangliang Ma <1906710196@qq.com> Co-authored-by: inkcherry <mingzhi.liu@intel.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

- Update existing workflows that use cu121 to cu124. Note, this means that where we download torch latest, we will now be getting torch 2.6 rather than the torch latest 2.5 provided with cuda 12.1. - Note, nv-nightly is failing in master currently due to unrelated errors, so this could be ignored in this PR (nv-nightly tested locally, where it passes with 12.1 and it also passes with 12.4). --------- Signed-off-by: Fabien Dupont <fdupont@redhat.com> Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Olatunji Ruwase <olruwase@microsoft.com> Signed-off-by: inkcherry <mingzhi.liu@intel.com> Signed-off-by: Omar Elayan <oelayan@habana.ai> Co-authored-by: Fabien Dupont <fabiendupont@fabiendupont.fr> Co-authored-by: Olatunji Ruwase <olruwase@microsoft.com> Co-authored-by: Liangliang Ma <1906710196@qq.com> Co-authored-by: inkcherry <mingzhi.liu@intel.com> Co-authored-by: Omar Elayan <142979319+oelayan7@users.noreply.github.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

This change is required to successfully build fp_quantizer extension on ROCm. --------- Co-authored-by: Logan Adams <114770087+loadams@users.noreply.github.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Fix #7029 - Add Chinese blog for deepspeed windows - Fix format in README.md Co-authored-by: Logan Adams <114770087+loadams@users.noreply.github.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Adding compile support for AIO library on AMD GPUs. --------- Co-authored-by: Olatunji Ruwase <olruwase@microsoft.com> Co-authored-by: Logan Adams <114770087+loadams@users.noreply.github.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Update CUDA compute capability for cross compile according to wiki page. https://en.wikipedia.org/wiki/CUDA#GPUs_supported --------- Signed-off-by: Hongwei <hongweichen@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Propagate API change. Signed-off-by: Olatunji Ruwase <olruwase@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

@fukun07 and I discovered a bug when using the `offload_states` and `reload_states` APIs of the Zero3 optimizer. When using grouped parameters (for example, in weight decay or grouped lr scenarios), the order of the parameters mapping in `reload_states` ([here](https://github.com/deepspeedai/DeepSpeed/blob/14b3cce4aaedac69120d386953e2b4cae8c2cf2c/deepspeed/runtime/zero/stage3.py#L2953)) does not correspond with the initialization of `self.lp_param_buffer` ([here](https://github.com/deepspeedai/DeepSpeed/blob/14b3cce4aaedac69120d386953e2b4cae8c2cf2c/deepspeed/runtime/zero/stage3.py#L731)), which leads to misaligned parameter loading. This issue was overlooked by the corresponding unit tests ([here](https://github.com/deepspeedai/DeepSpeed/blob/master/tests/unit/runtime/zero/test_offload_states.py)), so we fixed the bug in our PR and added the corresponding unit tests. --------- Signed-off-by: Wei Wu <wuwei211x@gmail.com> Co-authored-by: Masahiro Tanaka <81312776+tohtana@users.noreply.github.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Following changes in Pytorch trace rules , my previous PR to avoid graph breaks caused by logger is no longer relevant. So instead I've added this functionality to torch dynamo - pytorch/pytorch@16ea0dd This commit allows the user to config torch to ignore logger methods and avoid associated graph breaks. To enable ignore logger methods - os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1" To ignore logger methods except for a specific method / methods (for example, info and isEnabledFor) - os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1" and os.environ["LOGGER_METHODS_TO_EXCLUDE_FROM_DISABLE"] = "info, isEnabledFor" Signed-off-by: ShellyNR <shelly.nahir@live.biu.ac.il> Co-authored-by: snahir <snahir@habana.ai> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

The partition tensor doesn't need to move to the current device when meta load is used. Signed-off-by: Lai, Yejing <yejing.lai@intel.com> Co-authored-by: Olatunji Ruwase <olruwase@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

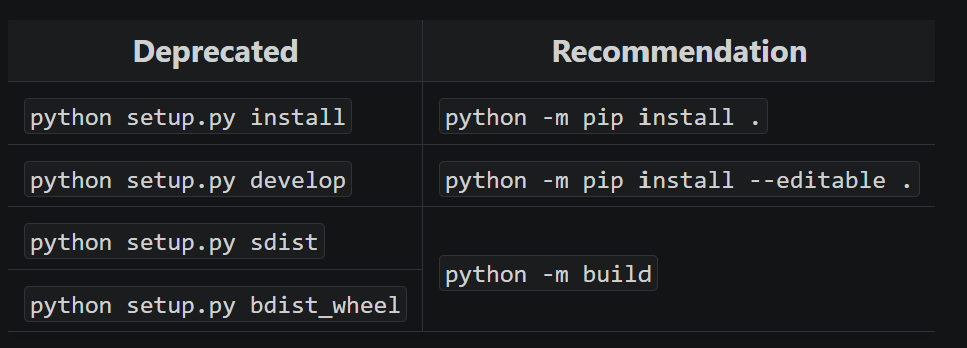

…t` (#7069) With future changes coming to pip/python/etc, we need to modify to no longer call `python setup.py ...` and replace it instead: https://packaging.python.org/en/latest/guides/modernize-setup-py-project/#should-setup-py-be-deleted  This means we need to install the build package which is added here as well. Additionally, we pass the `--sdist` flag to only build the sdist rather than the wheel as well here. --------- Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Add deepseekv3 autotp. Signed-off-by: Lai, Yejing <yejing.lai@intel.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Fixes: #7082 --------- Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Latest transformers causes failures when cpu-torch-latest test, so we pin it for now to unblock other PRs. --------- Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Co-authored-by: Logan Adams <114770087+loadams@users.noreply.github.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

…/runner (#7086) Signed-off-by: Logan Adams <loadams@microsoft.com> Co-authored-by: Olatunji Ruwase <olruwase@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

These jobs haven't been run in a long time and were originally used when compatibility with torch <2 was more important. Signed-off-by: Logan Adams <loadams@microsoft.com> Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

453cc16 to

f2b89ec

Compare

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Signed-off-by: Masahiro Tanaka <mtanaka@microsoft.com>

Sign up for free

to join this conversation on GitHub.

Already have an account?

Sign in to comment

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

DeepSpeed supports mixed precision training, but the behavior is different from

torch.autocast. DeepSpeed maintains parameters and gradients both in FP32 and a lower precision (FP16/BF16) (NVIDIA Apex AMP style) and computes all modules in the lower precision whiletorch.autocastmaintains parameters in FP32 but computes only certain operators in the lower precision.This leads to differences in:

torch.autocastneeds downcast in forward/backwardtorch.autocasthas a list of modules that can safely be computed in lower precision. Some precision-sensitive operators (e.g. softmax) are computed in FP32.To align DeepSpeed's behavior with

torch.autocastwhen necessary, this PR adds the integration withtorch.autocastwith ZeRO. Here is an examples of the configuration.Each configuration works as follows:

enabled: Enable the integration withtorch.autocastif this is set toTrue. You don't need to calltorch.autocastin your code. The grad scaler is also applied in the DeepSpeed optimizer.dtype: lower precision dtype passed totorch.autocast. Gradients for allreduce (reduce-scatter) and parameters for allgather (only for ZeRO3) oflower_precision_safe_modulesare also downcasted to this dtype.lower_precision_safe_modules: Downcast for allreduce (reduce-scatter) and allgather (ZeRO3) are applied only to modules specified in this list. (The precision for PyTorch operators in forward/backward followstorch.autocast's policy, not this list.) You can set names of classes with their packages. If you don't set this item, DeepSpeed uses the default list:[torch.nn.Linear, torch.nn.Conv1d, torch.nn.Conv2d, torch.nn.Conv3d].Note that we only maintain FP32 parameters with this feature enabled. For consistency, you cannot enable

fp16orbf16in DeepSpeed config.