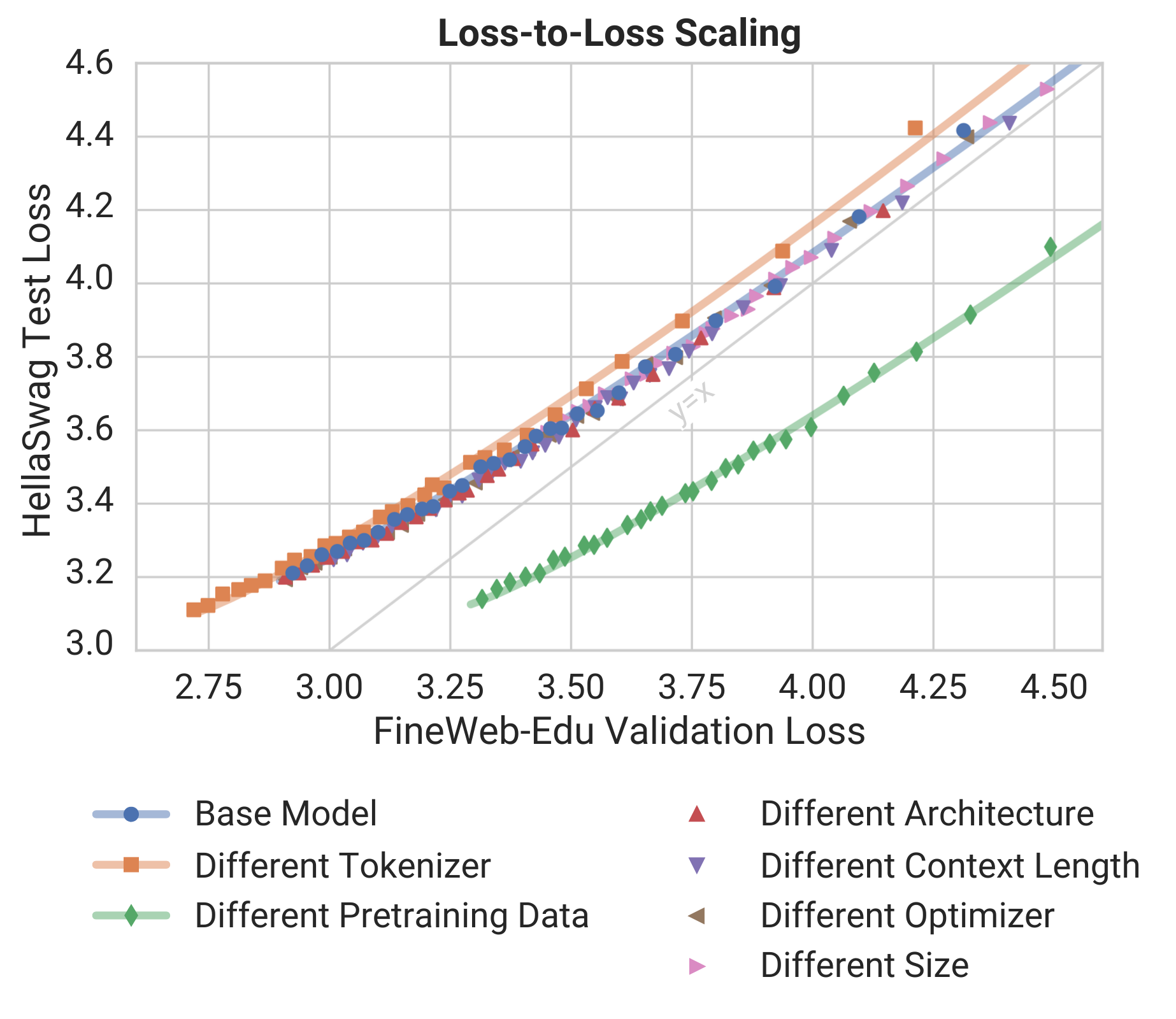

Official code to reproduce the results and data presented in the paper LLMs on the Line: Data Determines Loss-To-Loss Scaling Laws.

main.py: Main evaluation script for huggingface modelsconfig.py: Configuration handling and argument parsingutils.py: Utility classes and functionsmodels.yaml: Model configurationstasks.yaml: Task configurations with few-shot settingsconfig/: Configuration files for different modelsdata/: Contains a csv file with all evaluation resultslingua: Contains the lingua-huggingface repository with all the changes and config files to train / evaluate models from scratch

- Load required CUDA modules:

module purge

module load cuda/11.7- Initialize and update submodules:

git submodule update --init --recursive- Create and activate virtual environment:

python3.10 -m venv ~/.llm_line

source ~/.llm_line/bin/activate- Install dependencies: Basic requirements:

- Python 3.10

- CUDA 11.7

- CUDNN 8.4.1

Then install the rest of the dependencies:

pip install --upgrade pip

pip install -r requirements.txtSpecifies models to evaluate with their configurations:

models:

- name: "model/name"

cls: "hf" # lm_eval argument

batch_size: 8

device: "cuda"Defines tasks and their few-shot settings:

task_configs:

commonsense_qa:

shots: [0, 5, 10] # Will evaluate with 0, 5, and 10 shotsBasic usage:

python main.pyWith custom paths:

python main.py \

--models_yaml path/to/models.yaml \

--tasks_yaml path/to/tasks.yaml \

--results_dir custom_results_dir--models_yaml: Path to models configuration file (default: 'models.yaml')--tasks_yaml: Path to tasks configuration file (default: 'tasks.yaml')--results_dir: Directory to store evaluation results (default: 'results')

If you encounter CUDA-related errors:

- Ensure you have the correct CUDA modules loaded (cuda/11.7, cudnn/8.4.1-cu11.6)

- Try cleaning the virtual environment and reinstalling:

rm -rf ~/.llm_line

python3.10 -m venv ~/.llm_line

source ~/.llm_line/bin/activate

pip install --upgrade pip

pip install -r requirements.txtWhen adding new arguments to model configurations, several files need to be updated to ensure proper handling:

-

Model YAML Files (

models.yaml, etc.):models: - name: "model/name" revision: "step-123" # New argument cls: "hf" batch_size: 8 device: "cuda"

-

Configuration Class (

config.py):- Add the new field to

ModelConfigclass - Update

from_dictmethod if special handling is needed

@dataclass class ModelConfig: name: str revision: Optional[str] = None # New field

- Add the new field to

-

Filename Generation (

config.py):- Update

format_model_infoto include new args in filenames

def format_model_info(model_config): filename = f"name={model_config.name}" if model_config.revision: filename += f"__checkpoint={model_config.revision}"

- Update

-

Metadata Storage (

config.py):- Update

get_model_metadatato include new args in results

def get_model_metadata(model_config): metadata = { "model_name": model_config.name, # Add new fields "checkpoint": model_config.revision if model_config.revision else None }

- Update

-

Model Loading (

main.py):- Update model loading logic if the new argument affects how models are loaded

model_args = f"pretrained={model_config.name}" if model_config.revision: model_args += f",revision={model_config.revision}"

This ensures that:

- New arguments are properly parsed from YAML

- Arguments are included in result filenames for tracking

- Metadata in result files includes new information

- Model loading uses new arguments correctly