Stackuchin is a CLI tool developed by Rungutan, designed to automatically create, update and delete AWS CloudFormation stacks in multiple AWS accounts and regions at the same time.

This CLI has been designed for:

- versioning AWS CloudFormation parameters in Git

- deploying to multiple AWS accounts or AWS regions, either in PARALLEL or SEQUENTIALLY

- sending notifications with AWS errors to Slack channels, based on the operation

- supporting create, update and delete commands

- running either manually or through a pipeline definition in your CI/CD system

- supporting parent/child stacks

- supporting NoEcho parameters

- supporting resource tagging at stack level

- supporting unattended deployments (through a CI/CD system)

- supporting both JSON and YAML AWS CloudFormation templates

And this is just the tip of the iceberg...

Unfortunately, it cannot parse the short-form syntax of intrinsic functions (YAML tags such as !If or !Sub) in YAML templates.

In short, if your AWS CloudFormation templates written in YAML use something like !If, you have to rewrite them to use the respective full form, Fn::If.
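To find what needs rewriting, a quick scan of the template text is usually enough. The sketch below is a hypothetical helper (not part of Stackuchin) that lists every short-form tag in a YAML template together with its full-form key. It follows the CloudFormation convention that !Ref and !Condition become Ref and Condition, while the other functions take the Fn:: prefix; the comment stripping is deliberately crude.

```python
import re

# Short-form intrinsic function tags recognised by this sketch.
SHORT_FORMS = {
    "Base64", "Cidr", "FindInMap", "GetAtt", "GetAZs", "ImportValue",
    "Join", "Select", "Split", "Sub", "Transform",
    "And", "Equals", "If", "Not", "Or", "Ref", "Condition",
}

# "!" followed by a tag name, e.g. "!If" or "!GetAtt".
TAG_RE = re.compile(r"!([A-Za-z0-9]+)\b")


def full_form(name: str) -> str:
    """Return the full-form key for a short-form tag name."""
    return name if name in ("Ref", "Condition") else f"Fn::{name}"


def find_short_forms(template_text: str) -> list[tuple[int, str, str]]:
    """List (line number, short form, full form) for each short-form tag found."""
    hits = []
    for lineno, line in enumerate(template_text.splitlines(), start=1):
        code = line.split("#", 1)[0]  # crude: drop anything after a "#"
        for match in TAG_RE.finditer(code):
            name = match.group(1)
            if name in SHORT_FORMS:
                hits.append((lineno, f"!{name}", full_form(name)))
    return hits
```

The helper only reports; nested tags (for example a !Ref inside an !If list) still have to be rewritten by hand as nested mappings such as {"Ref": "AWS::NoValue"}.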

At Rungutan, to support global concurrency for load testing and to ensure high availability, we have on average around 200 stacks deployed in each of the 15 regions our platform currently supports.

In short, yes, we use Stackuchin to handle updates for around 3000 AWS CloudFormation stacks.

And no, we're not exaggerating or inflating the numbers :-)

If being able to Git-version all your stacks AND their stack parameters isn't enough on its own, then consider that:

- your developers can now manage AWS CloudFormation stacks themselves, WITHOUT needing any "write" IAM permissions

- you can use CI/CD for automated deployments

- you can use pull requests to review parameter/stack changes

```shell
pip install stackuchin
```

- Check the overall help menu

```
$ stackuchin help
usage: stackuchin <command> [<args>]

To see help text, you can run:

    stackuchin help
    stackuchin version
    stackuchin create --help
    stackuchin delete --help
    stackuchin update --help
    stackuchin pipeline --help

CLI tool to automatically create, update and delete AWS CloudFormation stacks in multiple AWS accounts and regions at the same time

positional arguments:
  command     Command to run

optional arguments:
  -h, --help  show this help message and exit
```

- Check the help menu for a specific command

```
$ stackuchin create --help
usage: stackuchin [-h] [--stack_file STACK_FILE] --stack_name STACK_NAME [--secret Parameter=Value] [--slack_webhook SLACK_WEBHOOK] [--s3_bucket S3_BUCKET] [--s3_prefix S3_PREFIX] [-p PROFILE]

Create command system

optional arguments:
  -h, --help            show this help message and exit
  --stack_file STACK_FILE
                        The YAML file which contains your stack definitions.
                        Defaults to "./cloudformation-stacks.yaml" if not specified.
  --stack_name STACK_NAME
                        The stack that you wish to create
  --secret Parameter=Value
                        Argument used to specify values for NoEcho parameters in your stack
  --slack_webhook SLACK_WEBHOOK
                        Argument used to overwrite environment variable STACKUCHIN_SLACK.
                        If argument is specified, any notifications will be sent to this URL.
                        If not specified, the script will check for env var STACKUCHIN_SLACK.
                        If neither argument nor environment variable is specified, then no notifications will be sent.
  --s3_bucket S3_BUCKET
                        Argument used to overwrite environment variable STACKUCHIN_BUCKET_NAME.
                        If argument is specified, then the template is first uploaded here before used in the stack.
                        If not specified, the script will check for env var STACKUCHIN_BUCKET_NAME.
                        If neither argument nor environment variable is specified, then the script will attempt to feed the template directly to the AWS API call, however, due to AWS CloudFormation API call limitations, you might end up with a bigger template in byte size than the max value allowed by AWS.
                        Details here -> https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/cloudformation-limits.html
  --s3_prefix S3_PREFIX
                        Argument used to overwrite environment variable STACKUCHIN_BUCKET_PREFIX.
                        The bucket prefix path to be used when the S3 bucket is defined.
  -p PROFILE, --profile PROFILE
                        The AWS profile you'll be using.
                        If not specified, the "default" profile will be used.
                        If no profiles are defined, then the default AWS credential mechanism starts.
```
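The precedence described in this help text (command-line argument first, then the matching STACKUCHIN_* environment variable, otherwise nothing) fits in a few lines. This is a minimal Python sketch of the documented behavior, not Stackuchin's source; resolve_setting and resolve_all are hypothetical names, and treating an empty environment variable as unset is an assumption.

```python
import os
from typing import Optional


def resolve_setting(cli_value: Optional[str], env_var: str) -> Optional[str]:
    """Return the CLI argument if given, else the environment variable, else None."""
    if cli_value:
        return cli_value
    # Assumption: an empty environment variable counts as "not specified".
    return os.environ.get(env_var) or None


def resolve_all(slack_webhook: Optional[str] = None,
                s3_bucket: Optional[str] = None,
                s3_prefix: Optional[str] = None) -> dict:
    """Resolve the three overridable settings listed in the help output."""
    return {
        "slack_webhook": resolve_setting(slack_webhook, "STACKUCHIN_SLACK"),
        "s3_bucket": resolve_setting(s3_bucket, "STACKUCHIN_BUCKET_NAME"),
        "s3_prefix": resolve_setting(s3_prefix, "STACKUCHIN_BUCKET_PREFIX"),
    }
```

A None webhook then simply means "send no notifications", and a None bucket means "pass the template inline".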

The logic of the app is simple:

- Specify the operation that you want to perform

- Specify the file which contains the parameters for your stack

- (optional) Add any secrets (aka NoEcho parameters) that your stack might need
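As a rough illustration of how these inputs come together, here is a minimal Python sketch (not Stackuchin's actual code) that assembles the Parameters list of a CloudFormation create/update call from the stack file values and any secrets. It assumes the parameter names declared in the template are already known; parse_secret and build_parameters are hypothetical names. The ParameterKey, ParameterValue and UsePreviousValue fields are the ones the CloudFormation API uses, and the fallbacks mirror the notes further down (UsePreviousValue on updates, the template's Default on creates).

```python
from typing import Optional


def parse_secret(arg: str) -> tuple[str, str]:
    """Split a --secret style "Parameter=Value" string on the first '='."""
    key, sep, value = arg.partition("=")
    if not sep or not key:
        raise ValueError(f"expected Parameter=Value, got {arg!r}")
    return key, value


def build_parameters(
    template_params: list[str],
    file_params: dict[str, str],
    secrets: Optional[dict[str, str]] = None,
    is_create: bool = False,
) -> list[dict]:
    """Assemble the Parameters list for a CloudFormation create/update call.

    template_params: parameter names declared in the template (assumed known).
    file_params:     the stack's Parameters mapping from the stack file.
    secrets:         NoEcho values supplied via --secret or pipeline secrets.
    """
    values = {**file_params, **(secrets or {})}
    parameters = []
    for key in template_params:
        if key in values:
            parameters.append({"ParameterKey": key, "ParameterValue": str(values[key])})
        elif not is_create:
            # Not given on an update: keep the value the stack already has.
            parameters.append({"ParameterKey": key, "UsePreviousValue": True})
        # Not given on a create: leave it out so the template's Default is used.
    return parameters
```

For example, parse_secret("DbPassword=s3cr3t") yields a pair that can be passed as secrets={"DbPassword": "s3cr3t"}, so the secret never has to appear in the versioned stack file.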

Here's the most basic definition of a "stack file":

```yaml
your-first-stack:
  Account: 123112321123
  Region: us-east-1
  Template: cloudformation-template.yaml
  # All parameters except NoEcho.
  Parameters:
    paramA: valA
  Tags:
    Environment: UTILITIES
    Team: DevOps
    MaintainerEmail: support@rungutan.com
    MaintainerTeam: Rungutan

another-stack-name:
  Account: 123112321123
  Region: us-east-1
  Template: some-folder/cloudformation-some-other-template.yaml
  # Stack without readable parameters.
  Parameters: {}
  Tags:
    Environment: UTILITIES
    Team: DevOps
    MaintainerEmail: support@rungutan.com
    MaintainerTeam: Rungutan

stack-different-account-assume-role:
  Account: 456445654456
  Region: us-east-1
  Template: some-folder/cloudformation-some-other-template.yaml
  AssumedRoleArn: arn:aws:iam::456445654456:role/SomeRoleThatCanBeAssumed
  # Stack without readable parameters.
  Parameters: {}
  Tags:
    Environment: UTILITIES
    Team: DevOps
    MaintainerEmail: support@rungutan.com
    MaintainerTeam: Rungutan
```

To run several operations in one go, describe them in a pipeline file and pass it to the pipeline command:

```shell
cat > input.yaml <<EOL
pipeline:
  update:
    - stack_name: TestUpdateStack
  delete:
    - stack_name: TestDeleteStack
  create:
    - stack_name: TestCreateStack
      secrets:
        - Name: SomeSecretName
          Value: SomeSecretValue
EOL

stackuchin pipeline --pipeline_file input.yaml
```
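A pipeline file like this groups stacks by operation, and the ordering rules for "sequential" and "parallel" pipelines are spelled out in the notes further down. The Python sketch below illustrates those rules; it is not Stackuchin's implementation. It assumes the pipeline YAML has already been loaded into a dict (for example with PyYAML's yaml.safe_load), that run_operation is a hypothetical callback doing the actual AWS work, and that a missing pipeline_type means "sequential" (the document does not state the default).

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Order used by "sequential" pipelines, whatever order the file lists them in.
SEQUENTIAL_ORDER = ("create", "update", "delete")


def pipeline_jobs(pipeline: dict) -> list[tuple[str, str]]:
    """Flatten a parsed pipeline file into (operation, stack_name) jobs."""
    spec = pipeline["pipeline"]
    return [
        (operation, item["stack_name"])
        for operation in SEQUENTIAL_ORDER
        for item in (spec.get(operation) or [])
    ]


def run_pipeline(pipeline: dict, run_operation: Callable[[str, str], None]) -> None:
    """Run all jobs one by one, or all at once when pipeline_type is "parallel"."""
    jobs = pipeline_jobs(pipeline)
    # Assumption: a missing pipeline_type is treated as "sequential".
    if pipeline["pipeline"].get("pipeline_type", "sequential") == "parallel":
        with ThreadPoolExecutor() as pool:
            futures = [pool.submit(run_operation, op, name) for op, name in jobs]
            for future in futures:
                future.result()  # wait for each job; re-raises its exception if it failed
    else:
        for op, name in jobs:
            run_operation(op, name)
```

Fed the input.yaml above, the sequential branch calls create for TestCreateStack, then update for TestUpdateStack, then delete for TestDeleteStack, even though the file lists update first.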

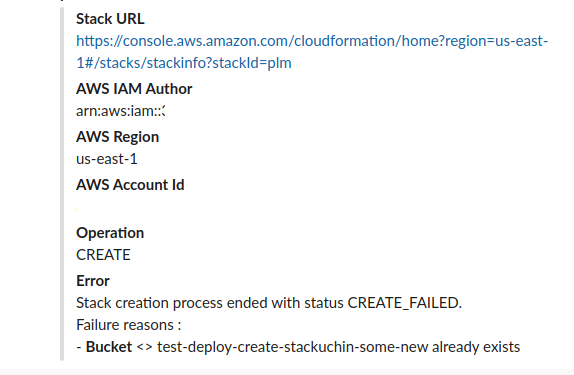

Use the environment variable STACKUCHIN_SLACK or the argument --slack_webhook to specify a Slack incoming webhook to push your alerts.

You get ALL notifications with PROPER MESSAGES, so you don't need to open your AWS Console to find out what needs fixing.
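Slack incoming webhooks accept an HTTP POST whose JSON body carries a "text" field. The standard-library sketch below shows that mechanism; it is not how Stackuchin formats its own messages, and build_slack_request, notify and the example message are hypothetical. Returning False when no webhook is configured mirrors the "no notifications will be sent" rule from the help text.

```python
import json
import urllib.request
from typing import Optional


def build_slack_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build a POST request for a Slack incoming webhook with a plain-text message."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def notify(webhook_url: Optional[str], text: str) -> bool:
    """Send the message if a webhook is configured; return whether Slack accepted it."""
    if not webhook_url:
        return False  # neither --slack_webhook nor STACKUCHIN_SLACK: stay silent
    request = build_slack_request(webhook_url, text)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status == 200
```

Something like notify(os.environ.get("STACKUCHIN_SLACK"), "UPDATE failed for My-First-Stack") would then post to the configured channel.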


Here's a sample pipeline that uses our official Docker image to run Stackuchin in GitLab CI/CD:

```yaml
image: rungutancommunity/stackuchin:latest

stages:
  - deploy_updates

variables:
  AWS_DEFAULT_REGION: us-east-1
  STACKUCHIN_SLACK: https://hooks.slack.com/services/some_slack_webhook
  STACKUCHIN_BUCKET_NAME: some-deployment-bucket-in-us-east-1
  STACKUCHIN_BUCKET_PREFIX: some/prefix/this/is/optional

deploy_updates:
  only:
    refs:
      - master
  stage: deploy_updates
  script:
    - |
      cat > pipeline.yaml <<EOF
      pipeline:
        pipeline_type: parallel
        update:
          - stack_name: My-First-Stack
          - stack_name: My-Second-Stack
      EOF
    - stackuchin pipeline --stack_file stack_file.yaml --pipeline_file pipeline.yaml
```

- If you don't specify a value for a specific stack parameter, then the script will automatically:

  - Set "UsePreviousValue" to "True" (as per the AWS documentation), which works just fine for UPDATE and DELETE commands, but of course won't work for CREATE commands

  - If the parameter was not previously defined (e.g. you're running a CREATE command), use the "Default" value of the parameter as defined in your stack's CloudFormation template (again as per the AWS documentation)

- Using secret (NoEcho) parameters

  Defining them as regular parameters in the stack file would defeat their purpose of being secret, since that file is meant to be versioned in Git.

  Instead, specify them through the --secret argument for the create, update and delete commands, or through the secrets property in pipelines.

- If the pipeline is "sequential", operations are executed in a fixed order. You can specify one, two or all three of the operation types mentioned above, but regardless of the order in which they appear in the pipeline file, the script always processes them as:

  - CREATE

  - UPDATE

  - DELETE

- If the pipeline is "parallel" (pipeline_type: parallel, as in the GitLab example above), ALL operations are executed at the same time.

- Due to AWS CloudFormation limits, a template cannot be passed inline as a string in the API call if it exceeds a certain size in bytes.

  https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/cloudformation-limits.html

  For that reason, you can use the --s3_bucket / --s3_prefix arguments (or their environment variable equivalents, STACKUCHIN_BUCKET_NAME / STACKUCHIN_BUCKET_PREFIX) to specify an intermediate location where the CloudFormation template is uploaded before it is used in the API call.

  The command works without these values, but using them is recommended so that you don't run into avoidable errors.
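The choice between an inline template and an S3-hosted one can be sketched as follows. This is an illustration, not Stackuchin's logic: the 51,200-byte inline TemplateBody limit is the value on the AWS quotas page linked above at the time of writing (check it before relying on it), the virtual-hosted S3 URL format is an assumption, the actual upload (for example with boto3's put_object) is omitted, and raising an error instead of attempting the call is a design choice of this sketch.

```python
from typing import Optional

# Inline TemplateBody limit documented by AWS at the time of writing.
MAX_TEMPLATE_BODY_BYTES = 51_200


def fits_inline(template_text: str) -> bool:
    """True if the template, encoded as UTF-8, is within the inline limit."""
    return len(template_text.encode("utf-8")) <= MAX_TEMPLATE_BODY_BYTES


def template_argument(template_text: str,
                      bucket: Optional[str] = None,
                      prefix: Optional[str] = None,
                      key: str = "template.yaml") -> dict:
    """Return either a TemplateURL or a TemplateBody argument for the API call."""
    if bucket:
        object_key = f"{prefix.strip('/')}/{key}" if prefix else key
        # Assumption: the template was already uploaded to this bucket/key.
        return {"TemplateURL": f"https://{bucket}.s3.amazonaws.com/{object_key}"}
    if not fits_inline(template_text):
        raise ValueError(
            "template exceeds the inline TemplateBody limit; "
            "set --s3_bucket or STACKUCHIN_BUCKET_NAME"
        )
    return {"TemplateBody": template_text}
```

Measuring the UTF-8 byte length rather than the character count matters here, because the limit is expressed in bytes and templates may contain non-ASCII descriptions.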