This repository contains code, experiments, and writing aimed at developing a perceptually lossless audio codec that is sparse, interpretable, and easy to manipulate. The work is currently focused on "natural" sounds and leverages knowledge about physics, acoustics, and human perception to remove perceptually irrelevant or redundant information.

The basis of modern-day lossy audio codecs is a model that slices audio into fixed-size, fixed-rate "frames" or "windows". This is true even of cutting-edge "neural" audio codecs such as Descript's Audio Codec. While this model is convenient, flexible, and generally able to represent the universe of possible sounds, the representation it produces isn't easy to understand or manipulate, and it is not the way humans conceptualize sound. Humans in natural settings typically perceive sound as a combination of distinct streams or sound sources sharing some physical space, although modern music can sometimes deviate from this pattern.

This small model attempts to decompose audio featuring acoustic instruments into the following components:

- Some maximum number of small (16-dimensional) event vectors, representing individual audio events

- Times at which each event occurs

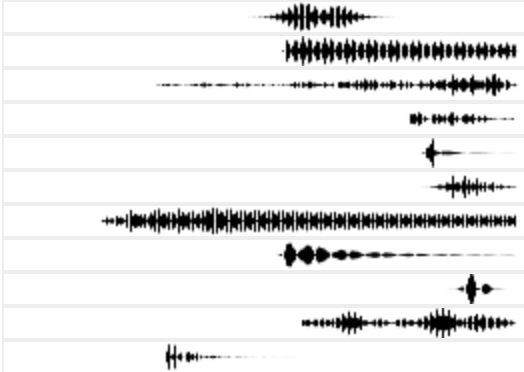
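To make the event-based representation concrete, here is a minimal NumPy sketch of how a set of event vectors and their onset times could be rendered back to audio. The decoder that turns a 16-dimensional vector into a waveform is not described here, so `toy_decode` (a fixed random projection shaped by an exponential decay) is a hypothetical stand-in; `render_events` and the 512-sample event length are likewise illustrative assumptions.

```python
import numpy as np

EVENT_DIM = 16  # event vectors are 16-dimensional, per the description above


def render_events(event_vectors, onset_samples, decode, total_samples):
    """Mix decoded events into one signal.

    event_vectors: (n_events, EVENT_DIM) array, one vector per audio event
    onset_samples: (n_events,) sample offsets at which each event starts
    decode: hypothetical callable mapping one event vector to a 1-D waveform
    """
    output = np.zeros(total_samples)
    for vector, onset in zip(event_vectors, onset_samples):
        onset = int(onset)
        if onset >= total_samples:
            continue  # event starts after the end of the segment
        waveform = decode(vector)
        end = min(onset + len(waveform), total_samples)
        # overlapping events simply add, like independent sources in a room
        output[onset:end] += waveform[: end - onset]
    return output


# Hypothetical stand-in decoder: a fixed random linear map to 512 samples,
# shaped by an exponential decay. It is NOT the model's actual decoder.
rng = np.random.default_rng(0)
projection = rng.normal(size=(EVENT_DIM, 512))
decay = np.exp(-np.linspace(0.0, 8.0, 512))


def toy_decode(vector):
    return (vector @ projection) * decay


events = rng.normal(size=(4, EVENT_DIM))
onsets = np.array([0, 1000, 2500, 7900])
audio = render_events(events, onsets, toy_decode, total_samples=8192)
```

Because events are summed at their onsets, moving, removing, or re-timing a sound touches only one vector or one time value, which is the kind of manipulation a frame-based representation makes difficult.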

In this work, we apply a Gaussian Splatting-like approach to audio to produce a lossy, sparse, interpretable, and manipulable representation of audio. We use a source-excitation model for each audio "atom", implemented by convolving a burst of band-limited noise with a variable-length "resonance" built from a number of exponentially decaying harmonics, meant to mimic the resonance of physical objects. Envelopes are built in both the time and frequency domains using gamma and/or Gaussian distributions. Sixty-four atoms are randomly initialized and then fitted to a short segment of audio over 3000 iterations, using a loss computed at multiple STFT resolutions. A second, weighted loss term encourages a sparse solution with few active atoms. Complete code for the experiment can be found on GitHub. Training segments come from the MusicNet dataset.
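The atom model and objective described above can be sketched in NumPy as follows. This is an illustrative approximation, not the repository's implementation: the sample rate, the Gaussian frequency-domain shaping of the noise, the gamma time envelope, the `decay * k` per-harmonic damping, the three STFT window sizes, and the L1 penalty on atom amplitudes are all assumptions made for this example. It only evaluates the loss; fitting 64 atoms over 3000 iterations would additionally need gradients from an autodiff framework.

```python
import numpy as np

SAMPLE_RATE = 22050  # assumption; the experiment's actual sample rate may differ


def gamma_envelope(n, shape, scale):
    """Gamma-distribution-shaped amplitude envelope (shape >= 1), peak of 1."""
    t = np.arange(n, dtype=float)
    env = t ** (shape - 1.0) * np.exp(-t / scale)
    return env / env.max()


def band_limited_noise(n, center_hz, width_hz, rng, sr=SAMPLE_RATE):
    """White noise shaped by a Gaussian envelope in the frequency domain."""
    spectrum = np.fft.rfft(rng.normal(size=n))
    freqs = np.fft.rfftfreq(n, d=1.0 / sr)
    spectrum *= np.exp(-0.5 * ((freqs - center_hz) / width_hz) ** 2)
    return np.fft.irfft(spectrum, n=n)


def resonance(n, f0, n_harmonics, decay, sr=SAMPLE_RATE):
    """Sum of exponentially decaying harmonics of f0; higher harmonics decay faster."""
    t = np.arange(n) / sr
    out = np.zeros(n)
    for k in range(1, n_harmonics + 1):
        if k * f0 >= sr / 2:
            break  # stop at the Nyquist frequency
        out += np.sin(2 * np.pi * k * f0 * t) * np.exp(-decay * k * t)
    return out


def make_atom(n, rng, f0=220.0, n_harmonics=8, decay=6.0,
              noise_hz=2000.0, noise_width_hz=500.0, env_shape=2.0, env_scale=50.0):
    """Excitation (enveloped, band-limited noise burst) convolved with a resonance."""
    excitation = band_limited_noise(n, noise_hz, noise_width_hz, rng)
    excitation *= gamma_envelope(n, env_shape, env_scale)
    return np.convolve(excitation, resonance(n, f0, n_harmonics, decay))[:n]


def stft_magnitude(x, window_size):
    """Magnitude STFT with a Hann window and 75% overlap."""
    hop = window_size // 4
    window = np.hanning(window_size)
    frames = np.array([x[i:i + window_size] * window
                       for i in range(0, len(x) - window_size + 1, hop)])
    return np.abs(np.fft.rfft(frames, axis=-1))


def multires_stft_loss(x, y, window_sizes=(256, 512, 1024)):
    """Mean absolute spectral difference, summed over several STFT resolutions."""
    return sum(np.mean(np.abs(stft_magnitude(x, w) - stft_magnitude(y, w)))
               for w in window_sizes)


def total_loss(rendered, target, amplitudes, sparsity_weight=0.01):
    """Reconstruction loss plus an L1 penalty that pushes unused atoms toward zero."""
    return (multires_stft_loss(rendered, target)
            + sparsity_weight * np.sum(np.abs(amplitudes)))


rng = np.random.default_rng(0)
n = 4096
atoms = np.array([make_atom(n, rng, f0=f) for f in (220.0, 330.0)])
amplitudes = np.array([1.0, 0.5])
rendered = amplitudes @ atoms
target = make_atom(n, rng, f0=220.0)
loss = total_loss(rendered, target, amplitudes)
```

The L1 term is one common way to encourage sparsity: it adds a constant pull toward zero on every amplitude, so atoms that do not reduce the spectral loss enough are driven to silence.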

If our decoder is parameter-less (i.e., only the encoded representation is needed to produce audio) and the path from decoder to loss function is fully differentiable, then we can use gradient descent to find the best encoded representation for a given piece of audio. The Gaussian/Gamma Splatting experiment is an example of this: audio is defined as a set of events/parameters passed to a fixed synthesizer.
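A toy illustration of the idea, assuming a linear "synthesizer" made of fixed sinusoids so that the gradient can be written by hand: the only thing being optimized is the encoded representation `params`. `decode`, `encode_by_overfitting`, and the chosen frequencies are hypothetical; a realistic synthesizer would be nonlinear, and its gradients would come from automatic differentiation.

```python
import numpy as np


def decode(params, basis):
    """A fixed, parameter-less decoder: the audio depends only on `params`."""
    return params @ basis


def encode_by_overfitting(target, basis, steps=200, learning_rate=0.5):
    """Gradient descent on the encoded representation, not on any model weights."""
    params = np.zeros(basis.shape[0])
    n = len(target)
    for _ in range(steps):
        residual = decode(params, basis) - target
        # analytic gradient of mean((decode(params) - target) ** 2) w.r.t. params
        grad = (2.0 / n) * (basis @ residual)
        params -= learning_rate * grad
    return params


# Toy synthesizer: sinusoids completing a whole number of cycles in the segment
n = 1024
t = np.arange(n) / n
basis = np.array([np.sin(2 * np.pi * k * t) for k in (3, 7, 12, 20)])
true_params = np.array([0.8, -0.3, 0.5, 0.1])
target = decode(true_params, basis)
recovered = encode_by_overfitting(target, basis)
```

Because these sinusoids are orthogonal over the segment, the loss surface is a simple bowl and a learning rate of 0.5 halves the error on every step; real synthesizers produce far less friendly loss landscapes.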

This work takes a slightly different approach to extracting a sparse audio representation, but ties into the Overfitting as Encoder idea. We attempt to decompose a single musical audio signal into two distinct components:

- A state-space model representing the resonances, or transfer function, of the system as a whole, including the instrument and the room in which it was played

- A sparse control signal, representing the ways in which energy is injected into the system
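A minimal sketch of these two components, assuming a discrete-time linear state-space model with a scalar input and output: the state matrix `A` holds damped resonances (standing in for the instrument and room), and a mostly-zero control signal injects energy at a few instants. The matrices below are hand-built and hypothetical, not a fitted model.

```python
import numpy as np


def damped_rotation(freq_hz, decay, sample_rate):
    """2x2 state matrix for one damped resonance (poles at decay * e^{±iθ})."""
    theta = 2 * np.pi * freq_hz / sample_rate
    c, s = np.cos(theta), np.sin(theta)
    return decay * np.array([[c, -s], [s, c]])


def run_state_space(A, B, C, D, control):
    """Simulate x[t+1] = A x[t] + B u[t], y[t] = C x[t] + D u[t] for scalar u, y."""
    state = np.zeros(A.shape[0])
    output = np.zeros(len(control))
    for t, u in enumerate(control):
        output[t] = C @ state + D * u
        state = A @ state + B * u
    return output


sample_rate = 8000
# Hypothetical "instrument + room": two resonances stacked block-diagonally
A = np.zeros((4, 4))
A[:2, :2] = damped_rotation(220.0, 0.995, sample_rate)
A[2:, 2:] = damped_rotation(330.0, 0.995, sample_rate)
B = np.ones(4)  # how injected energy enters each resonance
C = np.ones(4)  # how each resonance contributes to the output
D = 0.0         # no direct path from control to output

# Sparse control signal: energy is injected at only two instants
control = np.zeros(2000)
control[[0, 1000]] = 1.0
audio = run_state_space(A, B, C, D, control)
```

Since the system is linear, the output is the superposition of the impulse responses triggered by each nonzero control sample, which is why a sparse control signal can be a compact description of a performance.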

Other areas of interest include:

- simpler, linear sparse decompositions such as matching pursuit

- perceptually motivated loss functions, inspired by Mallat's scattering transform and the Auditory Image Model

- approximate convolutions for long kernels

  - since many useful kernels in music are highly redundant, can we find a low-rank approximation in the frequency domain and perform the multiplication/convolution there?

- approximate convolutions via hyperdimensional computing
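For reference, here is a compact shift-invariant matching pursuit, as mentioned in the list above: at each step it finds the atom and time offset that best correlate with the residual, records that event, and subtracts it. This is a generic textbook version written with a random unit-norm dictionary for illustration, not code from the repository.

```python
import numpy as np


def matching_pursuit(signal, atoms, n_iterations):
    """Greedy, shift-invariant matching pursuit.

    atoms: (n_atoms, k) array of unit-norm atoms, k <= len(signal).
    Returns (atom_index, position, amplitude) events and the final residual.
    """
    residual = np.asarray(signal, dtype=float).copy()
    k = atoms.shape[1]
    events = []
    for _ in range(n_iterations):
        # correlation of each atom with the residual at every valid offset
        scores = np.array([np.correlate(residual, atom, mode="valid") for atom in atoms])
        index, position = np.unravel_index(np.argmax(np.abs(scores)), scores.shape)
        amplitude = scores[index, position]
        # with unit-norm atoms, the correlation is the least-squares amplitude
        residual[position:position + k] -= amplitude * atoms[index]
        events.append((int(index), int(position), float(amplitude)))
    return events, residual


rng = np.random.default_rng(1)
atoms = rng.normal(size=(8, 64))
atoms /= np.linalg.norm(atoms, axis=1, keepdims=True)
signal = np.zeros(1024)
signal[100:164] += 3.0 * atoms[1]
signal[400:464] -= 2.0 * atoms[3]
events, residual = matching_pursuit(signal, atoms, n_iterations=2)
```

Each iteration costs one correlation per atom over the whole residual, which is exactly where the fast or approximate long-kernel convolutions listed above would matter.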

AUDIO_PATH=

PORT=9999

IMPULSE_RESPONSE_PATH=

S3_BUCKET=

The MusicNet dataset should be downloaded and extracted to a location on your local machine.

You can then update the AUDIO_PATH environment variable to point to the musicnet/train_data directory, wherever that may be on your local machine.

Room impulse responses to support convolution-based reverb can be downloaded here.

You can then update the IMPULSE_RESPONSE_PATH environment variable to point at the directory on your local machine that contains the impulse response audio files.
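One way to read these settings from Python, sketched with a hypothetical `load_settings` helper: it requires `AUDIO_PATH`, defaults `PORT` to 9999 as in the example values above, and treats `IMPULSE_RESPONSE_PATH` and `S3_BUCKET` as optional. Which variables are truly required, and how the repository itself reads them, are assumptions here.

```python
import os
from dataclasses import dataclass
from pathlib import Path
from typing import Mapping, Optional


@dataclass
class Settings:
    audio_path: Path                       # musicnet/train_data directory
    port: int
    impulse_response_path: Optional[Path]  # directory of impulse response files
    s3_bucket: Optional[str]


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read the environment variables listed above (hypothetical helper)."""
    audio_path = env.get("AUDIO_PATH", "").strip()
    if not audio_path:
        raise RuntimeError("AUDIO_PATH must point at the musicnet/train_data directory")
    ir_path = env.get("IMPULSE_RESPONSE_PATH", "").strip()
    bucket = env.get("S3_BUCKET", "").strip()
    return Settings(
        audio_path=Path(audio_path),
        port=int(env.get("PORT", "").strip() or 9999),  # assumed default, from the example above
        impulse_response_path=Path(ir_path) if ir_path else None,
        s3_bucket=bucket or None,
    )
```

Calling `load_settings()` with no argument reads `os.environ`; passing a plain dict instead is convenient for tests.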