- About

- App Architecture

- Project Directories

- How to Start?

- Railway 101

- Railway Development

- Railway Build and Deployment Config

- Railway Build and Deployment Architecture

- High Level Architecture

- Further Challenge

I developed this POC to showcase Railway's capabilities across the development, build, and deployment phases. While there's considerable room for refinement in the POC, the primary intent is to highlight how Railway can improve developer efficiency.

This RSS-based application provides the following API-based functionality and supporting services:

- A healthcheck endpoint for the service.

- User registration and login.

- The ability for users to add feeds to their profiles.

- Redis, which serves as both a cache server and the Celery message broker (queue).

- A Celery worker that runs continuously, monitoring the queue for new tasks.

- Postgres as the persistent data store.

- A basic cron script app that uses Railway's cron jobs. It runs at predefined intervals and queries an internal service through an API endpoint.
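Railway polls the healthcheck endpoint (configured via `healthcheckPath` in railway.toml) and only treats a new deployment as healthy once it answers HTTP 200. The real endpoint lives in backend_api (Bun + Hono); the sketch below is a Python standard-library stand-in that only illustrates the contract we assume: `GET /healthcheck` returns 200 with a small JSON body. The JSON shape, `route`, and `serve` are hypothetical names for illustration.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import urlsplit


def route(path: str) -> tuple[int, dict]:
    """Map a request path (query string ignored) to (HTTP status, JSON payload)."""
    if urlsplit(path).path == "/healthcheck":
        return 200, {"status": "ok"}
    return 404, {"error": "not found"}


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, payload = route(self.path)
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve() -> None:
    # Railway injects PORT at runtime; 3000 is an assumed local fallback.
    port = int(os.environ.get("PORT", "3000"))
    ThreadingHTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

Calling `serve()` blocks and serves requests; keeping the routing in a pure `route` function makes the healthcheck contract easy to test without opening a socket.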

| Directory | Purpose | Readme |
|---|---|---|
| backend_api | API server built with Bun and Hono (TypeScript) | README.md |
| celery_worker | Celery worker written in Python | README.md |
| database | Contains the database schema | ERD Diagram |
| internal_service | A simple internal API server using Bun | README.md |
| refresh_script | Cron script that calls the internal API server | README.md |
| img | Contains the images used in this README | |

For more commands, refer to the package.json of each project.

/backend_api

```shell
railway run bun run --hot src/index.ts
```

/celery_worker

```shell
railway run celery --app=tasks worker -l INFO
```

Or, to run tasks.py directly (you will have to uncomment the `if __name__ == '__main__':` block in tasks.py to use this command):

```shell
railway run python tasks.py
```

/internal_service

```shell
railway run bun run --hot index.ts
```

/refresh_script

```shell
railway run bun run --hot index.ts
```

This diagram provides a clear picture of Railway's different features along with their hierarchy.

- To see all railway cli commands:

railway --help- Navigate to your project root. In this repo, if working on backend_api, navigate to

/backend_api. Then run:

railway link

# Select team > project > environmentAlternatively, you can use the project ID to link the project. Go to your Railway dashboard and click on “Setup project locally” from bottom left.

You can also change the env later if needed using

railway environment- Create a service and add db variable and JWT secret to it in the Railway dashboard. To link the service to your local project, run:

railway service- To get a list of all available environment variables, run:

railway variables-

Deploy Postgres and Redis services and use env variables to connect to them. You will need to add the Database connecion string variable to your service. You can get the connection string from the Railway dashboard.

-

To access the env variables in your local development, run:

railway run bun run --hot src/index.ts- To unlink the project from the Railway service, run:

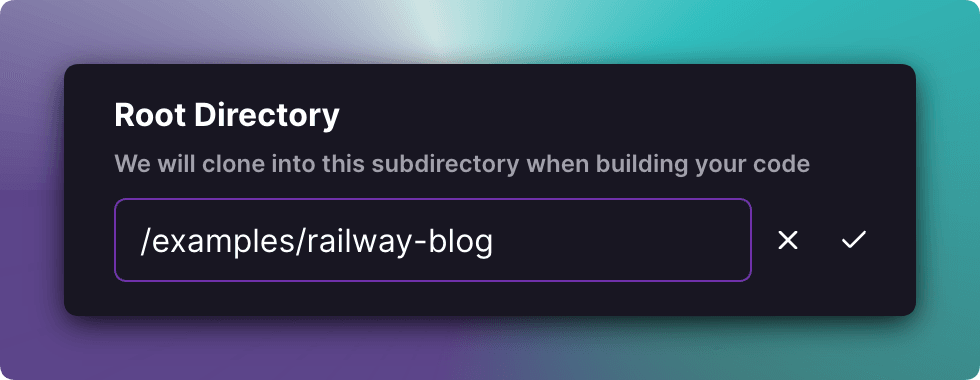

railway unlinkSince this is a monorepo, you will need to define the Root Directory in your Railway dashboard before deployment. Check out Monorepo.

We're using nixpacks.toml and railway.toml to define build configs for our projects. Let's take a look at both files from /backend_api.

In nixpacks.toml, we define the setup and install phases. The setup phase installs Node.js and Bun, and the install phase installs the production dependencies with Bun.

/backend_api/nixpacks.toml

```toml
[phases.setup]
nixPkgs = ['nodejs', 'bun']

[phases.install]
cmds = ['bun install --production']
```

In railway.toml, we define the build and deploy configs. In the build config, we use Nixpacks to build the project. The watch pattern triggers an automatic deployment only when pushed changes touch files in the specified watch path.
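To make the watch-pattern behaviour concrete, here is a rough Python approximation of the "should this push redeploy backend_api?" decision. It assumes changed paths are relative to the service's root directory (`/backend_api`) and uses `fnmatch`, whose `*` also matches `/`. Railway documents its own gitignore-style matching, so treat this as an illustration, not Railway's implementation; `should_redeploy` is a hypothetical name.

```python
from fnmatch import fnmatch

# Mirrors watchPatterns in /backend_api/railway.toml.
WATCH_PATTERNS = ["src/**"]


def should_redeploy(changed_files, patterns=WATCH_PATTERNS) -> bool:
    """Return True if any changed file matches any watch pattern.

    Approximation: fnmatch's "*" matches across "/", so "src/**" covers
    every file under src/ at any depth.
    """
    return any(fnmatch(path, pattern) for path in changed_files for pattern in patterns)
```

Under this model, a commit that only touches README.md or nixpacks.toml would not redeploy the service, while any change under `src/` would.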

In the deploy config, we use Bun to start the server. We've defined a base deploy config and a production environment deploy config; you can add as many environment-specific configs as you need, one for each environment you have created on Railway.
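One way to picture the base and production deploy configs: the environment-specific block overrides matching keys from `[deploy]`, and everything else is inherited. The sketch below models that with plain dictionaries whose values are copied from `/backend_api/railway.toml`. The shallow-merge semantics are our reading of Railway's config-as-code environment overrides, not code from Railway, and `effective_deploy_config` is a hypothetical helper.

```python
# Values copied from /backend_api/railway.toml.
BASE_DEPLOY = {
    "startCommand": "bun run src/index.ts",
    "healthcheckPath": "/healthcheck",
    "healthcheckTimeout": 100,
    "restartPolicyType": "NEVER",
}

# [environments.<name>.deploy] tables, keyed by environment name.
ENVIRONMENT_DEPLOY = {
    "production": {
        "numReplicas": 2,
        "restartPolicyType": "ON_FAILURE",
        "restartPolicyMaxRetries": 3,
    },
}


def effective_deploy_config(environment: str) -> dict:
    """Shallow-merge the environment override (if any) over the base [deploy] table."""
    merged = dict(BASE_DEPLOY)
    merged.update(ENVIRONMENT_DEPLOY.get(environment, {}))
    return merged
```

Under this model, production runs two replicas that restart up to 3 times on failure, while any other environment (for example a hypothetical `staging`) keeps the base `NEVER` restart policy and a single replica.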

Most of the fields are self-explanatory; for more details, you can check out:

/backend_api/railway.toml

```toml
[build]
builder = "nixpacks"
nixpacksConfigPath = "nixpacks.toml"
watchPatterns = ["src/**"]
buildCommand = "echo building!"

[deploy]
startCommand = "bun run src/index.ts"
healthcheckPath = "/healthcheck"
healthcheckTimeout = 100
restartPolicyType = "NEVER"

[environments.production.deploy]
numReplicas = 2
restartPolicyType = "ON_FAILURE"
restartPolicyMaxRetries = 3
```

- The user sends a request to the server to add feeds, with an array of URLs in the JSON body.

- For each feed (URL), the API server looks it up in the Redis cache:
  - If found:
    - Retrieves the cached articles, which contain the feed_id and article_ids.
    - Saves the feed in the user_feeds table (on conflict do nothing).
    - Saves the articles in the user_articles table (on conflict do nothing).
  - If not found:
    - Saves the feed in the feeds table (on conflict do nothing).
    - Saves the feed_id in the user_feeds table (on conflict do nothing).
    - Schedules a task in the Celery queue to fetch the articles for the feed.

- Celery worker (runs asynchronously):
  - Picks up the task.
  - Fetches the articles for the feed.
  - Saves the articles to the articles table (on conflict, gracefully fails the task).
  - Saves the article_ids in the user_articles table (on conflict, gracefully fails the task).
  - Adds the feed_id and articles to the Redis cache with a 3-hour expiration, using `feed:{feed_url}` as the key.
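The cache-first add-feeds flow above can be sketched in Python with `sqlite3` standing in for Postgres (both support `INSERT ... ON CONFLICT DO NOTHING`), a dict standing in for Redis, and a list standing in for the Celery queue. The table columns are a hypothetical minimal schema, not the one in `database/`, and `add_feeds` is an illustrative name; the real handler is TypeScript in backend_api.

```python
import sqlite3

# Hypothetical minimal schema; see database/ for the real one.
SCHEMA = """
CREATE TABLE feeds (id INTEGER PRIMARY KEY, url TEXT UNIQUE NOT NULL);
CREATE TABLE user_feeds (user_id INTEGER, feed_id INTEGER, PRIMARY KEY (user_id, feed_id));
CREATE TABLE user_articles (user_id INTEGER, article_id INTEGER, PRIMARY KEY (user_id, article_id));
"""


def cache_key(feed_url: str) -> str:
    # Key format used by the worker: feed:{feed_url}
    return f"feed:{feed_url}"


def add_feeds(conn, cache, queue, user_id, feed_urls):
    """Cache-first handling of an add-feeds request.

    cache: dict standing in for Redis, values like {"feed_id": int, "article_ids": [int]}
    queue: list standing in for the Celery queue
    """
    for url in feed_urls:
        cached = cache.get(cache_key(url))
        if cached is not None:
            # Cache hit: link the user to the feed and its already-fetched articles.
            conn.execute(
                "INSERT INTO user_feeds (user_id, feed_id) VALUES (?, ?) ON CONFLICT DO NOTHING",
                (user_id, cached["feed_id"]),
            )
            conn.executemany(
                "INSERT INTO user_articles (user_id, article_id) VALUES (?, ?) ON CONFLICT DO NOTHING",
                [(user_id, article_id) for article_id in cached["article_ids"]],
            )
        else:
            # Cache miss: ensure the feed row exists, link it, then defer fetching.
            conn.execute("INSERT INTO feeds (url) VALUES (?) ON CONFLICT DO NOTHING", (url,))
            (feed_id,) = conn.execute("SELECT id FROM feeds WHERE url = ?", (url,)).fetchone()
            conn.execute(
                "INSERT INTO user_feeds (user_id, feed_id) VALUES (?, ?) ON CONFLICT DO NOTHING",
                (user_id, feed_id),
            )
            queue.append({"user_id": user_id, "feed_id": feed_id, "feed_url": url})
    conn.commit()
```

To try it, open `sqlite3.connect(":memory:")`, run `executescript(SCHEMA)`, and call `add_feeds` twice with the same URLs: the second call adds no duplicate rows, because every insert is idempotent.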

```mermaid
sequenceDiagram
    autonumber
    participant User as User
    participant Server as Server
    participant Redis as Redis Cache
    participant Database as Database
    participant CeleryQueue as Celery Queue
    participant CeleryWorker as Celery Worker
    Note over User,CeleryWorker: The user must be logged in to submit feed URLs
    User->>Server: Send feed URLs
    activate Server
    loop for each feed URL
        Server->>Redis: Check if feed exists in cache
        activate Redis
        alt Feed found in Redis
            Redis-->>Server: Return cached feed data
            Server->>Database: Save feed to user_feeds (on conflict do nothing)
            activate Database
            Database-->>Server: Confirm save
            deactivate Database
            Server->>Database: Save articles to user_articles (on conflict do nothing)
            activate Database
            Database-->>Server: Confirm save
            deactivate Database
        else Feed not found in Redis
            Redis-->>Server: Feed not found
            deactivate Redis
            Server->>Database: Save feed to feeds (on conflict do nothing)
            activate Database
            Database-->>Server: Return feed_id
            deactivate Database
            Server->>Database: Save feed_id to user_feeds (on conflict do nothing)
            activate Database
            Database-->>Server: Confirm save
            deactivate Database
            Server->>CeleryQueue: Schedule task to fetch articles
            activate CeleryQueue
        end
    end
    Server-->>User: Confirm feed and articles processing
    deactivate Server
    Note right of CeleryWorker: User can continue using the app <br/>while CeleryWorker fetches articles
    loop for each Task in CeleryQueue
        activate CeleryWorker
        CeleryQueue-->>CeleryWorker: Task to fetch articles
        CeleryWorker->>CeleryWorker: Fetch articles for feed
        CeleryWorker->>Database: Save articles to articles table (on conflict do nothing)
        activate Database
        Database-->>CeleryWorker: Confirm save
        deactivate Database
        CeleryWorker->>Database: Save article_id to user_articles (on conflict do nothing)
        activate Database
        Database-->>CeleryWorker: Confirm save
        deactivate Database
        CeleryWorker->>Redis: Cache feed_id & articles with 3h expiration
        activate Redis
        Redis-->>CeleryWorker: Confirm cache
        deactivate Redis
        deactivate CeleryWorker
        deactivate CeleryQueue
    end
```
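The worker side of the diagram can be sketched the same way. `TTLCache` is a hypothetical in-memory stand-in for Redis `SETEX`/`GET` with the 3-hour expiry, `fetch_entries` stands in for the real RSS fetch-and-parse step, and the schema is again a minimal hypothetical one. This sketch follows the diagram's "on conflict do nothing" behaviour; in the real worker these steps would live inside a Celery task, which we leave out so the logic reads on its own.

```python
import sqlite3
import time

CACHE_TTL_SECONDS = 3 * 60 * 60  # 3-hour expiration

# Hypothetical minimal schema; see database/ for the real one.
SCHEMA = """
CREATE TABLE articles (id INTEGER PRIMARY KEY, feed_id INTEGER, url TEXT UNIQUE NOT NULL, title TEXT);
CREATE TABLE user_articles (user_id INTEGER, article_id INTEGER, PRIMARY KEY (user_id, article_id));
"""


class TTLCache:
    """In-memory stand-in for Redis SETEX/GET; `clock` is injectable for testing."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}

    def setex(self, key, ttl_seconds, value):
        self._data[key] = (self._clock() + ttl_seconds, value)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # lazily evict expired keys
            return None
        return value


def fetch_articles_task(conn, cache, task, fetch_entries):
    """Process one queued task: store articles, link them to the user, cache the result."""
    entries = fetch_entries(task["feed_url"])  # e.g. [{"url": ..., "title": ...}]
    conn.executemany(
        "INSERT INTO articles (feed_id, url, title) VALUES (?, ?, ?) ON CONFLICT DO NOTHING",
        [(task["feed_id"], e["url"], e["title"]) for e in entries],
    )
    article_ids = [
        conn.execute("SELECT id FROM articles WHERE url = ?", (e["url"],)).fetchone()[0]
        for e in entries
    ]
    conn.executemany(
        "INSERT INTO user_articles (user_id, article_id) VALUES (?, ?) ON CONFLICT DO NOTHING",
        [(task["user_id"], article_id) for article_id in article_ids],
    )
    conn.commit()
    cache.setex(
        f"feed:{task['feed_url']}",
        CACHE_TTL_SECONDS,
        {"feed_id": task["feed_id"], "article_ids": article_ids},
    )
    return article_ids
```

The cached value has the same `{"feed_id", "article_ids"}` shape that the API server reads on a cache hit, so both sides agree on the `feed:{feed_url}` entry.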

You can extend refresh_script to support the following workflow:

- Add a `last_polled` column to the `feeds` table.

- In the Celery worker flow above, after the worker picks up the task, add a step that updates `last_polled` in the `feeds` table.

- Independent refresh script (runs every 12 hours):
  - Call the API server to retrieve all feed URLs that need refreshing:
    - The API server queries Redis to get the list of feed URLs currently cached.
    - The API server queries the database to fetch all feed URLs.
    - The API server filters out feed URLs that are already in the Redis cache and returns the remaining URLs to the script.
  - For each feed URL that needs refreshing, the script schedules a task in the Celery queue.

- Celery worker processing:
  - Upon picking up a task, the Celery worker fetches the latest articles for the provided feed URL.
  - Save any new articles to the articles table. Handle conflicts gracefully; for example, if an article with the same unique identifier already exists, skip it or update it as needed.
  - Update `last_polled` in the `feeds` table.
  - Cache the feed ID and its articles in Redis with a key like `feed:{feed_url}` and a 3-hour expiration.
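The refresh rule described above could look like this on the API server side: skip any feed that is still cached in Redis, otherwise re-fetch feeds whose `last_polled` is at least 12 hours old (or that were never polled). Since `last_polled` is part of this proposed challenge, the column semantics and the `feeds_needing_refresh` function are hypothetical.

```python
from datetime import datetime, timedelta, timezone

REFRESH_INTERVAL = timedelta(hours=12)


def feeds_needing_refresh(feeds, cached_keys, now=None, interval=REFRESH_INTERVAL):
    """Return the feed URLs the refresh script should schedule.

    feeds: iterable of (feed_url, last_polled), last_polled being an aware datetime or None
    cached_keys: set of Redis keys currently present, e.g. {"feed:https://..."}
    """
    now = now or datetime.now(timezone.utc)
    due = []
    for url, last_polled in feeds:
        if f"feed:{url}" in cached_keys:
            continue  # still cached in Redis, so it was fetched recently
        if last_polled is None or now - last_polled >= interval:
            due.append(url)  # never polled, or polled at least `interval` ago
    return due
```

The script would then enqueue one Celery task per returned URL. Checking the cache first keeps the refresh from re-fetching feeds that a user added within the last 3 hours.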

```mermaid
sequenceDiagram
    autonumber
    participant Server as Server
    participant Redis as Redis Cache
    participant Database as Database
    participant CeleryQueue as Celery Queue
    participant CeleryWorker as Celery Worker
    participant RefreshScript as Refresh Script
    activate RefreshScript
    RefreshScript->>Server: Request feeds needing refresh (Every 12 hours)
    activate Server
    Server->>Database: Fetch all feed URLs last polled more than 12 hours ago
    activate Database
    Database-->>Server: Return feeds
    deactivate Database
    Server-->>RefreshScript: Return queried feeds
    deactivate Server
    activate CeleryQueue
    loop for each feed URL not in cache
        RefreshScript->>CeleryQueue: Schedule task to fetch articles
    end
    deactivate RefreshScript
    loop for each Task in CeleryQueue
        activate CeleryWorker
        CeleryQueue-->>CeleryWorker: Task to fetch articles
        CeleryWorker->>CeleryWorker: Fetch articles for feed
        CeleryWorker->>Database: Save articles to articles table (on conflict do nothing)
        activate Database
        Database-->>CeleryWorker: Confirm save
        deactivate Database
        CeleryWorker->>Database: Update last_polled in Feeds
        activate Database
        Database-->>CeleryWorker: Confirm update
        deactivate Database
        CeleryWorker->>Redis: Cache feed_id & articles with 3h expiration
        activate Redis
        Redis-->>CeleryWorker: Confirm cache
        deactivate Redis
    end
    deactivate CeleryWorker
    deactivate CeleryQueue
```
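On Railway, the refresh_script service would get a cron schedule for this 12-hour cadence; in standard crontab syntax that is `0 */12 * * *` (minute 0 of hours 0 and 12). As we understand Railway's docs, cron schedules are evaluated in UTC. To sanity-check when the next refresh will fire, here is a small helper specialised to that one expression; it is a hypothetical illustration, not a general cron parser.

```python
from datetime import datetime, timedelta, timezone

CRON_SCHEDULE = "0 */12 * * *"  # every 12 hours, at 00:00 and 12:00 UTC


def next_refresh_run(now: datetime) -> datetime:
    """Next firing time of CRON_SCHEDULE strictly after `now` (an aware datetime)."""
    now = now.astimezone(timezone.utc)
    for hour in (0, 12):
        candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
        if candidate > now:
            return candidate
    # Both slots today have passed: the next run is midnight UTC tomorrow.
    return (now + timedelta(days=1)).replace(hour=0, minute=0, second=0, microsecond=0)
```

Because the schedule and the `last_polled` threshold share the same 12-hour interval, a feed polled at one run will usually be due again at the next one.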