---

title: "Readme"

output:
  github_document:
    toc: true
    html_preview: false

---

```{r setup, include=FALSE}

# Knitr setup

knitr::opts_chunk$set(

echo = FALSE,

warning = FALSE,

message = FALSE,

collapse = TRUE,

comment = "#>",

dpi = 600,

fig.retina = 2

)

# Globals

days_to_year <- 365.2425

file_size <- utils:::format.object_size(file.size('results/drive_dates.csv'), 'Mb', digits=0)

custom_palette <- c(

"#1f78b4", "#ff7f00", "#6a3d9a", "#33a02c", "#e31a1c", "#b15928",

"#a6cee3", "#fdbf6f", "#cab2d6", "#b2df8a", "#fb9a99", "black",

"grey10", "grey20", "grey30", "grey40", "grey50"

)

min_unique_drives <- 50

# Load data

cox_model = readRDS('results/cox_model.rds')

dat = data.table::fread('results/survival.csv')

drive_dates = data.table::fread('results/drive_dates.csv')

drive_dates[,days := as.numeric(max_date - min_date)]

# Drop capacities with low data — they clutter the plots

drives_by_cap <- dat[,list(n_drives=sum(n_unique)), by=capacity_tb][order(-n_drives),]

caps_to_keep <- drives_by_cap[n_drives > min_unique_drives, capacity_tb]

dat <- dat[capacity_tb %in% caps_to_keep,]

drive_dates <- drive_dates[capacity_tb %in% caps_to_keep,]

# Sort capacity factors by size

dat[,capacity_tb := stringi::stri_pad_left(capacity_tb, 2, '0')]

drive_dates[,capacity_tb := stringi::stri_pad_left(capacity_tb, 2, '0')]

# Choose best drive

best_drive_by_size <- dat[!duplicated(capacity_tb),]

best_drive = dat[1, as.character(model)]

best_drive_surv = dat[1, sprintf("%1.2f%%", 100*surv_5yr_lower)]

# Make a dataframe to use later in survival plots

model_list <- best_drive_by_size[, model]

plot_dat <- drive_dates[model %in% model_list,]

```

# Data Sources

I'm buying a hard drive for backups, and I want to buy a drive that's not going to fail. I'm going to use data from [Backblaze](https://www.backblaze.com/b2/hard-drive-test-data.html#downloading-the-raw-hard-drive-test-data) to assess drive reliability. Backblaze [did their own analysis](https://www.backblaze.com/blog/backblaze-drive-stats-for-q2-2024/) of drive failures, but I don't like their approach for two reasons:

1. Their "annualized failure rate", `Drive Failures / (Drive Days / 365)`, assumes that failure rates are constant over time. For example, this assumption means that observing 1 drive for 100 days gives you exactly the same information as observing 100 drives for 1 day. If drives fail at a constant rate over time, this is fine, but I suspect that drives actually fail at a higher rate early in their lives, so their analysis is biased against newer drives.

2. I want to compute a confidence interval, so I can select a drive where we have enough observations to be very confident in a low failure rate. For example, if a drive model has been observed as a single drive for 1 day with 0 failures, I probably don't want to buy it, despite its zero percent failure rate. I'd rather buy a drive model that's been observed across 100 drives for 1000 days with one failure. [This blog post](https://www.evanmiller.org/how-not-to-sort-by-average-rating.html) has some good details on why confidence intervals are useful for sorting things.
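
The two scenarios in the list above can be put into numbers. This is a minimal sketch and not the method used in this analysis: it keeps the constant-failure-rate assumption and adds an exact Poisson interval from base R's `poisson.test`. The helper name `afr_with_ci` is hypothetical, and "100 drives for 1000 days" is read here as 100,000 drive days.

```r
# Hypothetical helper: annualized failure rate plus an exact 95% Poisson
# interval, assuming a constant failure rate (an assumption of this sketch).
afr_with_ci <- function(failures, drive_days) {
  drive_years <- drive_days / 365
  test <- poisson.test(failures, T = drive_years)
  c(
    afr = failures / drive_years,
    lower = test$conf.int[1],
    upper = test$conf.int[2]
  )
}

# One drive observed for one day with no failures:
# the point estimate is 0, but the upper bound is enormous.
one_drive <- afr_with_ci(failures = 0, drive_days = 1)

# 100 drives observed for 1000 days each with one failure:
# the point estimate is higher, but the upper bound is about 2% per year.
many_drives <- afr_with_ci(failures = 1, drive_days = 100 * 1000)

print(round(rbind(one_drive, many_drives), 4))
```

The first drive model has the lower point estimate, yet its interval is so wide that it says almost nothing, which is why the interval, not the point estimate, is the useful quantity for choosing a drive.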

# Results

I chose to order the drives by their expected 5-year survival rate. I calculated a 95% confidence interval on the 5-year survival rate, and I used the lower bound of that interval to sort the drives. Based on this analysis, the `r best_drive` is the most reliable model in our data, with an estimated 5-year survival rate that is at least `r best_drive_surv`. (In other words, we are 95% confident that at least `r best_drive_surv` of the `r best_drive` drives will last at least 5 years.)

Here are the top drives from this analysis, by size. (For example, many manufacturers have drives in the 16TB range that are very reliable, but I'm only showing the single best model in this size range.)
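
As a rough illustration of sorting by the interval rather than the point estimate, here is a small sketch with made-up numbers. The model names and survival values are hypothetical; only the column names `surv_5yr` and `surv_5yr_lower` come from this analysis.

```r
# Made-up example data: drive_a has the best point estimate but very few
# observations, so its lower confidence bound is poor.
drives <- data.frame(
  model = c("drive_a", "drive_b", "drive_c"),
  surv_5yr = c(0.99, 0.97, 0.95),
  surv_5yr_lower = c(0.80, 0.96, 0.93),
  stringsAsFactors = FALSE
)

# Rank by the lower bound of the interval, best first.
ranked <- drives[order(-drives$surv_5yr_lower), ]
print(ranked$model)
# "drive_b" "drive_c" "drive_a"
```

Sorting this way pushes a drive with little supporting data to the bottom, even when its point estimate looks best.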

```{r best_by_size, echo=FALSE}

knitr::kable(

best_drive_by_size[,

list(

model,

capacity_tb,

N=n_unique,

drive_days,

failures=failed,

years_97pct=sprintf("%1.1f", years_97pct),

surv_5yr_lo=sprintf("%1.2f%%", 100*surv_5yr_lower),

surv_5yr=sprintf("%1.2f%%", 100*surv_5yr),

surv_5yr_hi=sprintf("%1.2f%%", 100*surv_5yr_upper)

)]

)

```

Data details:

* **model** is the drive model.

* **capacity_tb** is the capacity of the drive, in terabytes.

* **N** is the number of unique drives in the analysis.

* **drive_days** is the total number of days that we've observed for drives of this model in the sample.

* **failures** is the number of failures observed so far.

* **years_97pct** is the 97th percentile survival time for the drives, in years. 97% of the drives will last at least this long.

* **surv_5yr_lo** is the lower bound of the 95% confidence interval of the 5-year survival rate.

* **surv_5yr** is the 5-year survival rate.

* **surv_5yr_hi** is the upper bound of the 95% confidence interval of the 5-year survival rate.

# Technical Details

Survival analysis is a little weird, because you don't observe the full distribution of the data. This makes some traditional statistics impossible to calculate. For example, until you observe every hard drive in the sample fail, you can't know the mean time to failure: if you have one drive left that hasn't failed, and it becomes an outlier in survival time, that might have a big impact on mean survival time. You won't know the true mean until that last drive fails.

Similarly, to find the median survival time, you need to wait for half of the drives in your sample to fail, which can take a decade or more!

Modern hard drives are **so reliable** that even after 5+ years of observation, we've barely observed the distribution of failures! (This is a good thing, but it makes it hard to choose between drives!)
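
To make the censoring problem concrete, here is a minimal sketch on a made-up sample of ten drives, using the `survival` package that this analysis already relies on. The data frame `toy` and its values are hypothetical.

```r
# Made-up sample: 10 drives, 2 observed failures, 8 still running (censored).
toy <- data.frame(
  days = c(200, 900, 1500, 1800, 1800, 1800, 1800, 1800, 1800, 1800),
  failed = c(1, 1, 0, 0, 0, 0, 0, 0, 0, 0)
)

# Kaplan-Meier estimate of the survival curve for the whole sample.
fit <- survival::survfit(survival::Surv(days, failed) ~ 1, data = toy)

# The estimated curve never drops below 80%, because only 2 of 10 drives failed.
lowest_survival <- min(fit$surv)

# The median survival time is therefore not estimable: it comes back as NA.
median_days <- summary(fit)$table[["median"]]

print(lowest_survival)
print(median_days)
```

With so few failures the curve stops far above 50%, so the median (and likewise the mean) cannot be read off the data.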

To compare models with different observational periods (e.g. 22TB vs 4TB drives), I fit a [Cox proportional hazards model](https://en.wikipedia.org/wiki/Proportional_hazards_model). This enabled me to estimate 5-year survival rates for all of the drives, as well as a confidence interval on that rate. The confidence interval narrows as you observe more drives and as you observe those drives for a longer time.

The Cox model is semi-parametric. It assumes a non-parametric baseline hazard rate that is the same for all drives. It then fits a single parameter for each drive model that is a multiplier on that baseline hazard rate. So every drive model has the same "shape" for its survival curve, but the hazard is multiplied by a fixed coefficient per model that makes that "shape" steeper or shallower.
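
The effect of that multiplier can be sketched with plain arithmetic. Under proportional hazards, a model with hazard ratio `hr` has survival curve `baseline_surv ^ hr`. All numbers below (the yearly baseline hazards and the three hazard ratios) are made up for illustration and are not estimates from this data.

```r
years <- 0:5

# Made-up baseline hazard accumulated in each year (slightly higher in year 1).
baseline_hazard <- c(0, 0.010, 0.004, 0.004, 0.005, 0.006)
baseline_surv <- exp(-cumsum(baseline_hazard))

# One made-up multiplier per drive model; 1 reproduces the baseline curve.
hazard_ratio <- c(reliable = 0.5, baseline = 1, fragile = 3)

# Proportional hazards: S(t | model) = S0(t) ^ hazard_ratio
surv <- sapply(hazard_ratio, function(hr) baseline_surv ^ hr)
rownames(surv) <- paste0("year_", years)

print(round(surv, 4))
```

Every column follows the same baseline shape. The multiplier only stretches or compresses how quickly the curve falls, which is what lets the model extrapolate a young drive model out to 5 years.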

# Plots

Here is a plot of the survival for each of the best drive models.

Each curve ends with the oldest drive we've observed (these are called [Kaplan–Meier](https://en.wikipedia.org/wiki/Kaplan%E2%80%93Meier_estimator) curves):

```{r km_curves, echo=FALSE, warning=FALSE}

plot_model <- plot_dat[, survival::survfit(survival::Surv(time=days, failed) ~ 1 + capacity_tb)]

out <- survminer::ggsurvplot(

plot_model, data=plot_dat,

palette = custom_palette, conf.int = F, legend='top', censor=F,

xlim=days_to_year * c(0, 5), ylim=c(.90, 1.0),

xlab = 'Time (years)', title='Kaplan-Meier Survival Curves',

break.x.by=days_to_year, xscale=days_to_year)

print(out)

```

The "proportional hazards" assumption from the Cox model allows us to extend these curves and estimate survival rates at 5 years for all of the drives:

```{r cox_curves, echo=FALSE, warning=FALSE}

# Plot survival curves for just the best drives by capacity

plot_model <- survival::survfit(cox_model, best_drive_by_size[, list(model = as.character(model))])

colnames(plot_model$surv) <- best_drive_by_size$capacity_tb

# Actually plot

out <- survminer::ggsurvplot(

plot_model, data=best_drive_by_size,

palette = custom_palette, conf.int = F, legend='top', censor=F,

xlim=days_to_year * c(0, 5), ylim=c(.90, 1.0),

xlab = 'Time (years)', title='Cox Proportional Hazards Survival Curves',

break.x.by=days_to_year, xscale=days_to_year)

print(out)

```

Note that the curves all have the same shape, but each model has a different slope. Compare this plot to the Kaplan-Meier plot above: The proportional hazards assumption works pretty well, but isn't perfect.

# Cost effectiveness

I manually gathered some [hard drive prices](prices.csv) from eBay and Amazon. I limited this search to drives with >70% expected 5-year survival, as I want to buy drives that are unlikely to fail on me. I can then use the price data to calculate the cost to store 1TB of data for 5 years for each drive. Note that these prices could be wrong, and also note that only one drive may be available at the given price.

```{r prices, echo=FALSE}

ebay_url <- function(x, text=x){

sapply(seq_along(x), function(i) {

model = rev(stringi::stri_split_fixed(x[i], ' ')[[1]])[1]

sprintf("[%s](https://www.ebay.com/sch/i.html?_from=R40&_nkw=%s&_sacat=0&LH_BIN=1&_sop=15&rt=nc&LH_ItemCondition=1000|1500)", text[i], model)

})

}

prices <- data.table::fread('prices.csv')

prices <- data.table::merge.data.table(prices, dat, by='model', all.x=T)

prices[,cost_per_tb := price / as.numeric(capacity_tb)]

prices[,cost_per_tb_5yr := cost_per_tb / surv_5yr_lower]

prices <- prices[order(cost_per_tb_5yr),]

prices <- prices[,list(model = ebay_url(model), price, capacity_tb, surv_5yr_lower=surv_5yr_lower*100, cost_per_tb, cost_per_tb_5yr)]

best_model <- prices[1,]

knitr::kable(prices, digits=1)

```

According to this analysis, the most cost effective drive is the `r best_model$model`, which costs $`r best_model$price` and has a 5-year survival rate of `r sprintf("%1.1f%%", best_model$surv_5yr_lower)`. This drive costs `r sprintf("$%1.2f", best_model$cost_per_tb_5yr)` to store 1 TB for 5 years (this price is adjusted for the probability of failure).

Again, the price data is probably incorrect, but it's still an interesting analysis.
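
As a worked example of the adjustment in the table above (cost per TB divided by the lower bound of the 5-year survival rate), here is a small sketch with two made-up drives. The model names, prices, and survival rates are hypothetical.

```r
# Two made-up 16TB drives: the cheaper one is also the less reliable one.
drives <- data.frame(
  model = c("drive_a", "drive_b"),
  price = c(200, 180),
  capacity_tb = c(16, 16),
  surv_5yr_lower = c(0.95, 0.80),
  stringsAsFactors = FALSE
)

# Raw cost per TB: 12.50 for drive_a, 11.25 for drive_b.
drives$cost_per_tb <- drives$price / drives$capacity_tb

# Reliability-adjusted cost per TB over 5 years:
# about 13.16 for drive_a and 14.06 for drive_b.
drives$cost_per_tb_5yr <- drives$cost_per_tb / drives$surv_5yr_lower

print(drives[order(drives$cost_per_tb_5yr), ])
```

The drive with the lower sticker price ends up more expensive once the chance of it failing within 5 years is priced in.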

# Full Data

Here are the full results for all drives, excluding drives that are less than 2TB:

```{r all_drives, echo=FALSE}

knitr::kable(

dat[model != "00md00",

list(

model,

capacity_tb,

N=n_unique,

drive_days,

failures=failed,

years_97pct=sprintf("%1.1f", years_97pct),

surv_5yr_lo=sprintf("%1.2f%%", 100*surv_5yr_lower),

surv_5yr=sprintf("%1.2f%%", 100*surv_5yr),

surv_5yr_hi=sprintf("%1.2f%%", 100*surv_5yr_upper)

)]

)

```

Note that some drives have a very low sample size, which gives them a very wide confidence interval. More data are needed for these drives to draw conclusions about their survival rates.

# Replicating my results

[results/drive_dates.csv](results/drive_dates.csv) has the cleaned-up data from Backblaze, with each drive by serial number, model, when it was installed, when it was last observed, and whether it failed.

```{r data, echo=FALSE}

knitr::kable(head(data.table::fread("results/drive_dates.csv")[order(-max_date, min_date),], 10L))

```

I use a [Makefile](Makefile) to automate the analysis. Run `make help` for more info, or just run `make all` to download the data, unzip and combine it, run the survival analysis, and generate the [README.md](README.md). The download is 50+ GB, so it takes a while, but you only need to do it once.

An interesting note about this data: It's 55GB uncompressed, and contains a whole bunch of irrelevant information. It was very interesting to me that I could compress a 55GB dataset to `r file_size`, while still keeping **all** of the relevant information for modeling. (In other words, this dataset was thousands of times larger than it needed to be!). I think this is another example of how good data structures are essential for data science.
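
The idea behind that reduction can be sketched as follows: one row per drive per day collapses to one row per drive. This is a base R illustration on made-up data, not the code used in this repository, and the raw column names (`date`, `serial_number`, `model`, `failure`) are assumptions about the daily files.

```r
# Made-up daily records: one row per drive per day (assumed raw layout).
daily <- data.frame(
  date = as.Date(c("2024-01-01", "2024-01-02", "2024-01-03",
                   "2024-01-01", "2024-01-02")),
  serial_number = c("A", "A", "A", "B", "B"),
  model = c("M1", "M1", "M1", "M2", "M2"),
  failure = c(0, 0, 0, 0, 1),
  stringsAsFactors = FALSE
)

# Collapse to one row per drive: first seen, last seen, and whether it failed.
per_drive <- do.call(rbind, lapply(
  split(daily, daily$serial_number),
  function(d) {
    data.frame(
      serial_number = d$serial_number[1],
      model = d$model[1],
      min_date = min(d$date),
      max_date = max(d$date),
      failed = max(d$failure),
      stringsAsFactors = FALSE
    )
  }
))

# Observation time in days, as used for the survival models.
per_drive$days <- as.numeric(per_drive$max_date - per_drive$min_date)

print(per_drive)
```

Each drive contributes a single row no matter how many days it was observed, which is where the size reduction comes from.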

# Erratum

```{r bad_drives, echo=FALSE}

bad_drive <- dat[n_unique>100,][which.min(surv_5yr_upper),]

```

I'm probably way over-thinking this, but it was fun to analyze the data.

There are some drives in this data I plan to avoid. For example, the `r bad_drive$model` has a 5-year survival rate of `r sprintf("%1.1f%%", 100*bad_drive$surv_5yr)`. I'd be a little nervous to buy this drive. [Backblaze](https://www.backblaze.com/blog/3tb-hard-drive-failure/) has a good analysis of issues with 3TB drives on their blog.

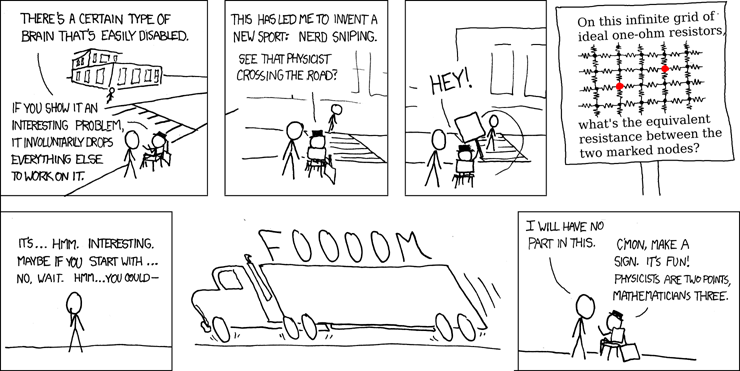

[](https://xkcd.com/356/)