Underlying enum class to support the

-

+

DataType

@@ -467,7 +467,7 @@

In the case that you need to use the

-

+

DataType

@@ -476,7 +476,7 @@

ex. trtorch::DataType type =

-

+

DataType::kFloat

@@ -612,7 +612,7 @@

-

+

DataType

@@ -696,7 +696,7 @@

Get the enum value of the

-

+

DataType

@@ -775,7 +775,7 @@

Comparision operator for

-

+

DataType

@@ -865,7 +865,7 @@

Comparision operator for

-

+

DataType

@@ -951,7 +951,7 @@

Comparision operator for

-

+

DataType

@@ -1041,7 +1041,7 @@

Comparision operator for

-

+

DataType

diff --git a/docs/_cpp_api/classtrtorch_1_1CompileSpec_1_1DeviceType.html b/docs/_cpp_api/classtrtorch_1_1CompileSpec_1_1DeviceType.html

index acb0528810..1d1772a40c 100644

--- a/docs/_cpp_api/classtrtorch_1_1CompileSpec_1_1DeviceType.html

+++ b/docs/_cpp_api/classtrtorch_1_1CompileSpec_1_1DeviceType.html

@@ -392,7 +392,7 @@

This class is a nested type of

-

+

Struct CompileSpec

@@ -435,7 +435,7 @@

This class is compatable with c10::DeviceTypes (but will check for TRT support) but the only applicable value is at::kCUDA, which maps to

-

+

DeviceType::kGPU

@@ -443,7 +443,7 @@

To use the

-

+

DataType

@@ -452,7 +452,7 @@

ex. trtorch::DeviceType type =

-

+

DeviceType::kGPU

@@ -481,7 +481,7 @@

Underlying enum class to support the

-

+

DeviceType

@@ -490,7 +490,7 @@

In the case that you need to use the

-

+

DeviceType

@@ -499,7 +499,7 @@

ex. trtorch::DeviceType type =

-

+

DeviceType::kGPU

@@ -766,7 +766,7 @@

Comparison operator for

-

+

DeviceType

@@ -852,7 +852,7 @@

Comparison operator for

-

+

DeviceType

diff --git a/docs/_cpp_api/classtrtorch_1_1ptq_1_1Int8CacheCalibrator.html b/docs/_cpp_api/classtrtorch_1_1ptq_1_1Int8CacheCalibrator.html

index f043962260..d13bc517fd 100644

--- a/docs/_cpp_api/classtrtorch_1_1ptq_1_1Int8CacheCalibrator.html

+++ b/docs/_cpp_api/classtrtorch_1_1ptq_1_1Int8CacheCalibrator.html

@@ -865,7 +865,7 @@

Convience function to convert to a IInt8Calibrator* to easily be assigned to the ptq_calibrator field in

-

+

CompileSpec

diff --git a/docs/_cpp_api/classtrtorch_1_1ptq_1_1Int8Calibrator.html b/docs/_cpp_api/classtrtorch_1_1ptq_1_1Int8Calibrator.html

index 797c91a841..552fbd4d3d 100644

--- a/docs/_cpp_api/classtrtorch_1_1ptq_1_1Int8Calibrator.html

+++ b/docs/_cpp_api/classtrtorch_1_1ptq_1_1Int8Calibrator.html

@@ -926,7 +926,7 @@

Convience function to convert to a IInt8Calibrator* to easily be assigned to the ptq_calibrator field in

-

+

CompileSpec

diff --git a/docs/_cpp_api/file_cpp_api_include_trtorch_logging.h.html b/docs/_cpp_api/file_cpp_api_include_trtorch_logging.h.html

index 28c3b28c94..e54f3aab7c 100644

--- a/docs/_cpp_api/file_cpp_api_include_trtorch_logging.h.html

+++ b/docs/_cpp_api/file_cpp_api_include_trtorch_logging.h.html

@@ -407,7 +407,7 @@

)

-

+

Contents

diff --git a/docs/_cpp_api/file_cpp_api_include_trtorch_macros.h.html b/docs/_cpp_api/file_cpp_api_include_trtorch_macros.h.html

index ff83ad0cbb..3730db95da 100644

--- a/docs/_cpp_api/file_cpp_api_include_trtorch_macros.h.html

+++ b/docs/_cpp_api/file_cpp_api_include_trtorch_macros.h.html

@@ -392,7 +392,7 @@

)

-

+

Contents

diff --git a/docs/_cpp_api/file_cpp_api_include_trtorch_ptq.h.html b/docs/_cpp_api/file_cpp_api_include_trtorch_ptq.h.html

index e2c6adc7bd..43d78bb1c1 100644

--- a/docs/_cpp_api/file_cpp_api_include_trtorch_ptq.h.html

+++ b/docs/_cpp_api/file_cpp_api_include_trtorch_ptq.h.html

@@ -402,7 +402,7 @@

)

-

+

Contents

diff --git a/docs/_cpp_api/file_cpp_api_include_trtorch_trtorch.h.html b/docs/_cpp_api/file_cpp_api_include_trtorch_trtorch.h.html

index cbb1c8a391..687ea513c9 100644

--- a/docs/_cpp_api/file_cpp_api_include_trtorch_trtorch.h.html

+++ b/docs/_cpp_api/file_cpp_api_include_trtorch_trtorch.h.html

@@ -402,7 +402,7 @@

)

-

+

Contents

@@ -547,7 +547,7 @@

-

-

-

-

-

+

Class CompileSpec::DeviceType

diff --git a/docs/_cpp_api/function_trtorch_8h_1a3eace458ae9122f571fabfc9ef1b9e3a.html b/docs/_cpp_api/function_trtorch_8h_1a3eace458ae9122f571fabfc9ef1b9e3a.html

index a6aa986ac0..1d15d10c2a 100644

--- a/docs/_cpp_api/function_trtorch_8h_1a3eace458ae9122f571fabfc9ef1b9e3a.html

+++ b/docs/_cpp_api/function_trtorch_8h_1a3eace458ae9122f571fabfc9ef1b9e3a.html

@@ -459,7 +459,7 @@

:

-

+

trtorch::CompileSpec

diff --git a/docs/_cpp_api/function_trtorch_8h_1af19cb866b0520fc84b69a1cf25a52b65.html b/docs/_cpp_api/function_trtorch_8h_1af19cb866b0520fc84b69a1cf25a52b65.html

index 143288c087..9513f38991 100644

--- a/docs/_cpp_api/function_trtorch_8h_1af19cb866b0520fc84b69a1cf25a52b65.html

+++ b/docs/_cpp_api/function_trtorch_8h_1af19cb866b0520fc84b69a1cf25a52b65.html

@@ -423,6 +423,9 @@

)

+

+ ¶

+

-

@@ -470,7 +473,7 @@

:

-

+

trtorch::CompileSpec

diff --git a/docs/_cpp_api/namespace_trtorch.html b/docs/_cpp_api/namespace_trtorch.html

index fd747baf1d..65dd616a2b 100644

--- a/docs/_cpp_api/namespace_trtorch.html

+++ b/docs/_cpp_api/namespace_trtorch.html

@@ -378,7 +378,7 @@

-

+

Contents

@@ -440,7 +440,7 @@

-

-

-

-

-

+

Class CompileSpec::DeviceType

diff --git a/docs/_cpp_api/namespace_trtorch__logging.html b/docs/_cpp_api/namespace_trtorch__logging.html

index 28fe619697..6da91d52aa 100644

--- a/docs/_cpp_api/namespace_trtorch__logging.html

+++ b/docs/_cpp_api/namespace_trtorch__logging.html

@@ -373,7 +373,7 @@

-

+

Contents

diff --git a/docs/_cpp_api/namespace_trtorch__ptq.html b/docs/_cpp_api/namespace_trtorch__ptq.html

index 55807907c4..53fb113684 100644

--- a/docs/_cpp_api/namespace_trtorch__ptq.html

+++ b/docs/_cpp_api/namespace_trtorch__ptq.html

@@ -373,7 +373,7 @@

-

+

Contents

diff --git a/docs/_cpp_api/structtrtorch_1_1CompileSpec.html b/docs/_cpp_api/structtrtorch_1_1CompileSpec.html

index 5ab4b8daad..4650623324 100644

--- a/docs/_cpp_api/structtrtorch_1_1CompileSpec.html

+++ b/docs/_cpp_api/structtrtorch_1_1CompileSpec.html

@@ -408,7 +408,7 @@

-

-

-

-

+

Struct CompileSpec::InputRange

@@ -759,7 +759,10 @@

DataType

- ::kFloat

+ ::

+

+ kFloat

+

¶

@@ -865,7 +868,10 @@

DeviceType

- ::kGPU

+ ::

+

+ kGPU

+

¶

@@ -1045,7 +1051,7 @@

-

Underlying enum class to support the

-

+

DataType

@@ -1054,7 +1060,7 @@

In the case that you need to use the

-

+

DataType

@@ -1063,7 +1069,7 @@

ex. trtorch::DataType type =

-

+

DataType::kFloat

@@ -1184,7 +1190,7 @@

-

-

+

DataType

@@ -1262,7 +1268,7 @@

-

Get the enum value of the

-

+

DataType

@@ -1335,7 +1341,7 @@

-

Comparision operator for

-

+

DataType

@@ -1422,7 +1428,7 @@

-

Comparision operator for

-

+

DataType

@@ -1505,7 +1511,7 @@

-

Comparision operator for

-

+

DataType

@@ -1592,7 +1598,7 @@

-

Comparision operator for

-

+

DataType

@@ -1665,7 +1671,7 @@

This class is compatable with c10::DeviceTypes (but will check for TRT support) but the only applicable value is at::kCUDA, which maps to

-

+

DeviceType::kGPU

@@ -1673,7 +1679,7 @@

To use the

-

+

DataType

@@ -1682,7 +1688,7 @@

ex. trtorch::DeviceType type =

-

+

DeviceType::kGPU

@@ -1708,7 +1714,7 @@

-

Underlying enum class to support the

-

+

DeviceType

@@ -1717,7 +1723,7 @@

In the case that you need to use the

-

+

DeviceType

@@ -1726,7 +1732,7 @@

ex. trtorch::DeviceType type =

-

+

DeviceType::kGPU

@@ -1969,7 +1975,7 @@

-

Comparison operator for

-

+

DeviceType

@@ -2052,7 +2058,7 @@

-

Comparison operator for

-

+

DeviceType

diff --git a/docs/_cpp_api/structtrtorch_1_1CompileSpec_1_1InputRange.html b/docs/_cpp_api/structtrtorch_1_1CompileSpec_1_1InputRange.html

index c87755f91e..0620aef0db 100644

--- a/docs/_cpp_api/structtrtorch_1_1CompileSpec_1_1InputRange.html

+++ b/docs/_cpp_api/structtrtorch_1_1CompileSpec_1_1InputRange.html

@@ -392,7 +392,7 @@

This struct is a nested type of

-

+

Struct CompileSpec

diff --git a/docs/_notebooks/Resnet50-example.html b/docs/_notebooks/Resnet50-example.html

index 7bff2ba3f8..2c22d0b157 100644

--- a/docs/_notebooks/Resnet50-example.html

+++ b/docs/_notebooks/Resnet50-example.html

@@ -380,7 +380,7 @@

-

-

+

What’s next

@@ -646,26 +646,26 @@

-# Copyright 2019 NVIDIA Corporation. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-# ==============================================================================

+# Copyright 2019 NVIDIA Corporation. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ==============================================================================

-  +

+

TRTorch Getting Started - ResNet 50

@@ -753,7 +753,7 @@

-!nvidia-smi

+!nvidia-smi

@@ -815,9 +815,13 @@

-

- ## 1. Requirements

-

+

+

+

+ ## 1. Requirements

+

+

+

Follow the steps in

@@ -827,9 +831,13 @@

to prepare a Docker container, within which you can run this notebook.

-

- ## 2. ResNet-50 Overview

-

+

+

+

+ ## 2. ResNet-50 Overview

+

+

+

PyTorch has a model repository called the PyTorch Hub, which is a source for high quality implementations of common models. We can get our ResNet-50 model from there pretrained on ImageNet.

@@ -859,9 +867,9 @@

-import torch

-resnet50_model = torch.hub.load('pytorch/vision:v0.6.0', 'resnet50', pretrained=True)

-resnet50_model.eval()

+import torch

+resnet50_model = torch.hub.load('pytorch/vision:v0.6.0', 'resnet50', pretrained=True)

+resnet50_model.eval()

@@ -1068,7 +1076,7 @@

- All pre-trained models expect input images normalized in the same way, i.e. mini-batches of 3-channel RGB images of shape

+ All pre-trained models expect input images normalized in the same way, i.e. mini-batches of 3-channel RGB images of shape

(3

@@ -1164,13 +1172,13 @@

-!mkdir ./data

-!wget -O ./data/img0.JPG "https://d17fnq9dkz9hgj.cloudfront.net/breed-uploads/2018/08/siberian-husky-detail.jpg?bust=1535566590&width=630"

-!wget -O ./data/img1.JPG "https://www.hakaimagazine.com/wp-content/uploads/header-gulf-birds.jpg"

-!wget -O ./data/img2.JPG "https://www.artis.nl/media/filer_public_thumbnails/filer_public/00/f1/00f1b6db-fbed-4fef-9ab0-84e944ff11f8/chimpansee_amber_r_1920x1080.jpg__1920x1080_q85_subject_location-923%2C365_subsampling-2.jpg"

-!wget -O ./data/img3.JPG "https://www.familyhandyman.com/wp-content/uploads/2018/09/How-to-Avoid-Snakes-Slithering-Up-Your-Toilet-shutterstock_780480850.jpg"

+!mkdir ./data

+!wget -O ./data/img0.JPG "https://d17fnq9dkz9hgj.cloudfront.net/breed-uploads/2018/08/siberian-husky-detail.jpg?bust=1535566590&width=630"

+!wget -O ./data/img1.JPG "https://www.hakaimagazine.com/wp-content/uploads/header-gulf-birds.jpg"

+!wget -O ./data/img2.JPG "https://www.artis.nl/media/filer_public_thumbnails/filer_public/00/f1/00f1b6db-fbed-4fef-9ab0-84e944ff11f8/chimpansee_amber_r_1920x1080.jpg__1920x1080_q85_subject_location-923%2C365_subsampling-2.jpg"

+!wget -O ./data/img3.JPG "https://www.familyhandyman.com/wp-content/uploads/2018/09/How-to-Avoid-Snakes-Slithering-Up-Your-Toilet-shutterstock_780480850.jpg"

-!wget -O ./data/imagenet_class_index.json "https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json"

+!wget -O ./data/imagenet_class_index.json "https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json"

@@ -1251,7 +1259,7 @@

-!pip install pillow matplotlib

+!pip install pillow matplotlib

@@ -1288,25 +1296,25 @@

-from PIL import Image

-from torchvision import transforms

-import matplotlib.pyplot as plt

+from PIL import Image

+from torchvision import transforms

+import matplotlib.pyplot as plt

-fig, axes = plt.subplots(nrows=2, ncols=2)

+fig, axes = plt.subplots(nrows=2, ncols=2)

-for i in range(4):

- img_path = './data/img%d.JPG'%i

- img = Image.open(img_path)

- preprocess = transforms.Compose([

- transforms.Resize(256),

- transforms.CenterCrop(224),

- transforms.ToTensor(),

- transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

-])

- input_tensor = preprocess(img)

- plt.subplot(2,2,i+1)

- plt.imshow(img)

- plt.axis('off')

+for i in range(4):

+ img_path = './data/img%d.JPG'%i

+ img = Image.open(img_path)

+ preprocess = transforms.Compose([

+ transforms.Resize(256),

+ transforms.CenterCrop(224),

+ transforms.ToTensor(),

+ transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

+])

+ input_tensor = preprocess(img)

+ plt.subplot(2,2,i+1)

+ plt.imshow(img)

+ plt.axis('off')

@@ -1328,12 +1336,12 @@

-import json

+import json

-with open("./data/imagenet_class_index.json") as json_file:

- d = json.load(json_file)

+with open("./data/imagenet_class_index.json") as json_file:

+ d = json.load(json_file)

-print("Number of classes in ImageNet: {}".format(len(d)))

+print("Number of classes in ImageNet: {}".format(len(d)))

@@ -1359,36 +1367,36 @@

-import numpy as np

+import numpy as np

-def rn50_preprocess():

- preprocess = transforms.Compose([

- transforms.Resize(256),

- transforms.CenterCrop(224),

- transforms.ToTensor(),

- transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

- ])

- return preprocess

+def rn50_preprocess():

+ preprocess = transforms.Compose([

+ transforms.Resize(256),

+ transforms.CenterCrop(224),

+ transforms.ToTensor(),

+ transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

+ ])

+ return preprocess

-# decode the results into ([predicted class, description], probability)

-def predict(img_path, model):

- img = Image.open(img_path)

- preprocess = rn50_preprocess()

- input_tensor = preprocess(img)

- input_batch = input_tensor.unsqueeze(0) # create a mini-batch as expected by the model

+# decode the results into ([predicted class, description], probability)

+def predict(img_path, model):

+ img = Image.open(img_path)

+ preprocess = rn50_preprocess()

+ input_tensor = preprocess(img)

+ input_batch = input_tensor.unsqueeze(0) # create a mini-batch as expected by the model

- # move the input and model to GPU for speed if available

- if torch.cuda.is_available():

- input_batch = input_batch.to('cuda')

- model.to('cuda')

+ # move the input and model to GPU for speed if available

+ if torch.cuda.is_available():

+ input_batch = input_batch.to('cuda')

+ model.to('cuda')

- with torch.no_grad():

- output = model(input_batch)

- # Tensor of shape 1000, with confidence scores over Imagenet's 1000 classes

- sm_output = torch.nn.functional.softmax(output[0], dim=0)

+ with torch.no_grad():

+ output = model(input_batch)

+ # Tensor of shape 1000, with confidence scores over Imagenet's 1000 classes

+ sm_output = torch.nn.functional.softmax(output[0], dim=0)

- ind = torch.argmax(sm_output)

- return d[str(ind.item())], sm_output[ind] #([predicted class, description], probability)

+ ind = torch.argmax(sm_output)

+ return d[str(ind.item())], sm_output[ind] #([predicted class, description], probability)

@@ -1403,17 +1411,17 @@

-for i in range(4):

- img_path = './data/img%d.JPG'%i

- img = Image.open(img_path)

+for i in range(4):

+ img_path = './data/img%d.JPG'%i

+ img = Image.open(img_path)

- pred, prob = predict(img_path, resnet50_model)

- print('{} - Predicted: {}, Probablility: {}'.format(img_path, pred, prob))

+ pred, prob = predict(img_path, resnet50_model)

+ print('{} - Predicted: {}, Probablility: {}'.format(img_path, pred, prob))

- plt.subplot(2,2,i+1)

- plt.imshow(img);

- plt.axis('off');

- plt.title(pred[1])

+ plt.subplot(2,2,i+1)

+ plt.imshow(img);

+ plt.axis('off');

+ plt.title(pred[1])

@@ -1458,38 +1466,38 @@

-import time

-import numpy as np

+import time

+import numpy as np

-import torch.backends.cudnn as cudnn

-cudnn.benchmark = True

+import torch.backends.cudnn as cudnn

+cudnn.benchmark = True

-def benchmark(model, input_shape=(1024, 1, 224, 224), dtype='fp32', nwarmup=50, nruns=10000):

- input_data = torch.randn(input_shape)

- input_data = input_data.to("cuda")

- if dtype=='fp16':

- input_data = input_data.half()

+def benchmark(model, input_shape=(1024, 1, 224, 224), dtype='fp32', nwarmup=50, nruns=10000):

+ input_data = torch.randn(input_shape)

+ input_data = input_data.to("cuda")

+ if dtype=='fp16':

+ input_data = input_data.half()

- print("Warm up ...")

- with torch.no_grad():

- for _ in range(nwarmup):

- features = model(input_data)

- torch.cuda.synchronize()

- print("Start timing ...")

- timings = []

- with torch.no_grad():

- for i in range(1, nruns+1):

- start_time = time.time()

- features = model(input_data)

- torch.cuda.synchronize()

- end_time = time.time()

- timings.append(end_time - start_time)

- if i%100==0:

- print('Iteration %d/%d, ave batch time %.2f ms'%(i, nruns, np.mean(timings)*1000))

+ print("Warm up ...")

+ with torch.no_grad():

+ for _ in range(nwarmup):

+ features = model(input_data)

+ torch.cuda.synchronize()

+ print("Start timing ...")

+ timings = []

+ with torch.no_grad():

+ for i in range(1, nruns+1):

+ start_time = time.time()

+ features = model(input_data)

+ torch.cuda.synchronize()

+ end_time = time.time()

+ timings.append(end_time - start_time)

+ if i%100==0:

+ print('Iteration %d/%d, ave batch time %.2f ms'%(i, nruns, np.mean(timings)*1000))

- print("Input shape:", input_data.size())

- print("Output features size:", features.size())

- print('Average batch time: %.2f ms'%(np.mean(timings)*1000))

+ print("Input shape:", input_data.size())

+ print("Output features size:", features.size())

+ print('Average batch time: %.2f ms'%(np.mean(timings)*1000))

@@ -1504,9 +1512,9 @@

-# Model benchmark without TRTorch/TensorRT

-model = resnet50_model.eval().to("cuda")

-benchmark(model, input_shape=(128, 3, 224, 224), nruns=1000)

+# Model benchmark without TRTorch/TensorRT

+model = resnet50_model.eval().to("cuda")

+benchmark(model, input_shape=(128, 3, 224, 224), nruns=1000)

@@ -1536,9 +1544,13 @@

-

- ## 3. Creating TorchScript modules

-

+

+

+

+ ## 3. Creating TorchScript modules

+

+

+

To compile with TRTorch, the model must first be in

@@ -1591,8 +1603,8 @@

-model = resnet50_model.eval().to("cuda")

-traced_model = torch.jit.trace(model, [torch.randn((128, 3, 224, 224)).to("cuda")])

+model = resnet50_model.eval().to("cuda")

+traced_model = torch.jit.trace(model, [torch.randn((128, 3, 224, 224)).to("cuda")])

@@ -1610,8 +1622,8 @@

-# This is just an example, and not required for the purposes of this demo

-torch.jit.save(traced_model, "resnet_50_traced.jit.pt")

+# This is just an example, and not required for the purposes of this demo

+torch.jit.save(traced_model, "resnet_50_traced.jit.pt")

@@ -1626,8 +1638,8 @@

-# Obtain the average time taken by a batch of input

-benchmark(traced_model, input_shape=(128, 3, 224, 224), nruns=1000)

+# Obtain the average time taken by a batch of input

+benchmark(traced_model, input_shape=(128, 3, 224, 224), nruns=1000)

@@ -1657,9 +1669,13 @@

-

- ## 4. Compiling with TRTorch

-

+

+

+

+ ## 4. Compiling with TRTorch

+

+

+

TorchScript modules behave just like normal PyTorch modules and are intercompatible. From TorchScript we can now compile a TensorRT based module. This module will still be implemented in TorchScript but all the computation will be done in TensorRT.

@@ -1685,15 +1701,15 @@

-import trtorch

+import trtorch

-# The compiled module will have precision as specified by "op_precision".

-# Here, it will have FP16 precision.

-trt_model_fp32 = trtorch.compile(traced_model, {

- "input_shapes": [(128, 3, 224, 224)],

- "op_precision": torch.float32, # Run with FP32

- "workspace_size": 1 << 20

-})

+# The compiled module will have precision as specified by "op_precision".

+# Here, it will have FP16 precision.

+trt_model_fp32 = trtorch.compile(traced_model, {

+ "input_shapes": [(128, 3, 224, 224)],

+ "op_precision": torch.float32, # Run with FP32

+ "workspace_size": 1 << 20

+})

@@ -1710,8 +1726,8 @@

-# Obtain the average time taken by a batch of input

-benchmark(trt_model_fp32, input_shape=(128, 3, 224, 224), nruns=1000)

+# Obtain the average time taken by a batch of input

+benchmark(trt_model_fp32, input_shape=(128, 3, 224, 224), nruns=1000)

@@ -1757,15 +1773,15 @@

-import trtorch

+import trtorch

-# The compiled module will have precision as specified by "op_precision".

-# Here, it will have FP16 precision.

-trt_model = trtorch.compile(traced_model, {

- "input_shapes": [(128, 3, 224, 224)],

- "op_precision": torch.half, # Run with FP16

- "workspace_size": 1 << 20

-})

+# The compiled module will have precision as specified by "op_precision".

+# Here, it will have FP16 precision.

+trt_model = trtorch.compile(traced_model, {

+ "input_shapes": [(128, 3, 224, 224)],

+ "op_precision": torch.half, # Run with FP16

+ "workspace_size": 1 << 20

+})

@@ -1781,8 +1797,8 @@

-# Obtain the average time taken by a batch of input

-benchmark(trt_model, input_shape=(128, 3, 224, 224), dtype='fp16', nruns=1000)

+# Obtain the average time taken by a batch of input

+benchmark(trt_model, input_shape=(128, 3, 224, 224), dtype='fp16', nruns=1000)

@@ -1812,9 +1828,13 @@

-

- ## 5. Conclusion

-

+

+

+

+ ## 5. Conclusion

+

+

+

In this notebook, we have walked through the complete process of compiling TorchScript models with TRTorch for ResNet-50 model and test the performance impact of the optimization. With TRTorch, we observe a speedup of

@@ -1826,9 +1846,9 @@

with FP16.

-

+

What’s next

-

+

¶

diff --git a/docs/_notebooks/lenet-getting-started.html b/docs/_notebooks/lenet-getting-started.html

index 034ee524aa..99c0d6f8f1 100644

--- a/docs/_notebooks/lenet-getting-started.html

+++ b/docs/_notebooks/lenet-getting-started.html

@@ -326,7 +326,7 @@

-

- ## 1. Requirements

-

+

+

+

+ ## 1. Requirements

+

+

+

Follow the steps in

@@ -835,9 +839,13 @@

to prepare a Docker container, within which you can run this notebook.

-

- ## 2. Creating TorchScript modules

-

+

+

+

+ ## 2. Creating TorchScript modules

+

+

+

Here we create two submodules for a feature extractor and a classifier and stitch them together in a single LeNet module. In this case this is overkill but modules give us granular control over our program including where we decide to optimize and where we don’t. It is also the unit that the TorchScript compiler operates on. So you can decide to only convert/optimize the feature extractor and leave the classifier in standard PyTorch or you can convert the whole thing. When compiling your module

to TorchScript, there are two paths: Tracing and Scripting.

@@ -852,45 +860,45 @@

-import torch

-from torch import nn

-import torch.nn.functional as F

+import torch

+from torch import nn

+import torch.nn.functional as F

-class LeNetFeatExtractor(nn.Module):

- def __init__(self):

- super(LeNetFeatExtractor, self).__init__()

- self.conv1 = nn.Conv2d(1, 6, 3)

- self.conv2 = nn.Conv2d(6, 16, 3)

+class LeNetFeatExtractor(nn.Module):

+ def __init__(self):

+ super(LeNetFeatExtractor, self).__init__()

+ self.conv1 = nn.Conv2d(1, 6, 3)

+ self.conv2 = nn.Conv2d(6, 16, 3)

- def forward(self, x):

- x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))

- x = F.max_pool2d(F.relu(self.conv2(x)), 2)

- return x

+ def forward(self, x):

+ x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))

+ x = F.max_pool2d(F.relu(self.conv2(x)), 2)

+ return x

-class LeNetClassifier(nn.Module):

- def __init__(self):

- super(LeNetClassifier, self).__init__()

- self.fc1 = nn.Linear(16 * 6 * 6, 120)

- self.fc2 = nn.Linear(120, 84)

- self.fc3 = nn.Linear(84, 10)

+class LeNetClassifier(nn.Module):

+ def __init__(self):

+ super(LeNetClassifier, self).__init__()

+ self.fc1 = nn.Linear(16 * 6 * 6, 120)

+ self.fc2 = nn.Linear(120, 84)

+ self.fc3 = nn.Linear(84, 10)

- def forward(self, x):

- x = torch.flatten(x,1)

- x = F.relu(self.fc1(x))

- x = F.relu(self.fc2(x))

- x = self.fc3(x)

- return x

+ def forward(self, x):

+ x = torch.flatten(x,1)

+ x = F.relu(self.fc1(x))

+ x = F.relu(self.fc2(x))

+ x = self.fc3(x)

+ return x

-class LeNet(nn.Module):

- def __init__(self):

- super(LeNet, self).__init__()

- self.feat = LeNetFeatExtractor()

- self.classifer = LeNetClassifier()

+class LeNet(nn.Module):

+ def __init__(self):

+ super(LeNet, self).__init__()

+ self.feat = LeNetFeatExtractor()

+ self.classifer = LeNetClassifier()

- def forward(self, x):

- x = self.feat(x)

- x = self.classifer(x)

- return x

+ def forward(self, x):

+ x = self.feat(x)

+ x = self.classifer(x)

+ return x

@@ -909,39 +917,39 @@

-import time

-import numpy as np

+import time

+import numpy as np

-import torch.backends.cudnn as cudnn

-cudnn.benchmark = True

+import torch.backends.cudnn as cudnn

+cudnn.benchmark = True

-def benchmark(model, input_shape=(1024, 1, 32, 32), dtype='fp32', nwarmup=50, nruns=10000):

- input_data = torch.randn(input_shape)

- input_data = input_data.to("cuda")

- if dtype=='fp16':

- input_data = input_data.half()

+def benchmark(model, input_shape=(1024, 1, 32, 32), dtype='fp32', nwarmup=50, nruns=10000):

+ input_data = torch.randn(input_shape)

+ input_data = input_data.to("cuda")

+ if dtype=='fp16':

+ input_data = input_data.half()

- print("Warm up ...")

- with torch.no_grad():

- for _ in range(nwarmup):

- features = model(input_data)

- torch.cuda.synchronize()

- print("Start timing ...")

- timings = []

- with torch.no_grad():

- for i in range(1, nruns+1):

- start_time = time.time()

- features = model(input_data)

- torch.cuda.synchronize()

- end_time = time.time()

- timings.append(end_time - start_time)

- if i%1000==0:

- print('Iteration %d/%d, ave batch time %.2f ms'%(i, nruns, np.mean(timings)*1000))

+ print("Warm up ...")

+ with torch.no_grad():

+ for _ in range(nwarmup):

+ features = model(input_data)

+ torch.cuda.synchronize()

+ print("Start timing ...")

+ timings = []

+ with torch.no_grad():

+ for i in range(1, nruns+1):

+ start_time = time.time()

+ features = model(input_data)

+ torch.cuda.synchronize()

+ end_time = time.time()

+ timings.append(end_time - start_time)

+ if i%1000==0:

+ print('Iteration %d/%d, ave batch time %.2f ms'%(i, nruns, np.mean(timings)*1000))

- print("Input shape:", input_data.size())

- print("Output features size:", features.size())

+ print("Input shape:", input_data.size())

+ print("Output features size:", features.size())

- print('Average batch time: %.2f ms'%(np.mean(timings)*1000))

+ print('Average batch time: %.2f ms'%(np.mean(timings)*1000))

@@ -962,8 +970,8 @@

-model = LeNet()

-model.to("cuda").eval()

+model = LeNet()

+model.to("cuda").eval()

@@ -1004,7 +1012,7 @@

-benchmark(model)

+benchmark(model)

@@ -1056,8 +1064,8 @@

-traced_model = torch.jit.trace(model, torch.empty([1,1,32,32]).to("cuda"))

-traced_model

+traced_model = torch.jit.trace(model, torch.empty([1,1,32,32]).to("cuda"))

+traced_model

@@ -1100,7 +1108,7 @@

-benchmark(traced_model)

+benchmark(traced_model)

@@ -1149,8 +1157,8 @@

-model = LeNet().to("cuda").eval()

-script_model = torch.jit.script(model)

+model = LeNet().to("cuda").eval()

+script_model = torch.jit.script(model)

@@ -1166,7 +1174,7 @@

-script_model

+script_model

@@ -1209,7 +1217,7 @@

-benchmark(script_model)

+benchmark(script_model)

@@ -1239,9 +1247,13 @@

-

- ## 3. Compiling with TRTorch

-

+

+

+

+ ## 3. Compiling with TRTorch

+

+

+

TorchScript traced model

@@ -1261,28 +1273,28 @@

-import trtorch

+import trtorch

-# We use a batch-size of 1024, and half precision

-compile_settings = {

- "input_shapes": [

- {

- "min" : [1024, 1, 32, 32],

- "opt" : [1024, 1, 33, 33],

- "max" : [1024, 1, 34, 34],

- }

- ],

- "op_precision": torch.half # Run with FP16

-}

+# We use a batch-size of 1024, and half precision

+compile_settings = {

+ "input_shapes": [

+ {

+ "min" : [1024, 1, 32, 32],

+ "opt" : [1024, 1, 33, 33],

+ "max" : [1024, 1, 34, 34],

+ }

+ ],

+ "op_precision": torch.half # Run with FP16

+}

-trt_ts_module = trtorch.compile(traced_model, compile_settings)

+trt_ts_module = trtorch.compile(traced_model, compile_settings)

-input_data = torch.randn((1024, 1, 32, 32))

-input_data = input_data.half().to("cuda")

+input_data = torch.randn((1024, 1, 32, 32))

+input_data = input_data.half().to("cuda")

-input_data = input_data.half()

-result = trt_ts_module(input_data)

-torch.jit.save(trt_ts_module, "trt_ts_module.ts")

+input_data = input_data.half()

+result = trt_ts_module(input_data)

+torch.jit.save(trt_ts_module, "trt_ts_module.ts")

@@ -1297,7 +1309,7 @@

-benchmark(trt_ts_module, input_shape=(1024, 1, 32, 32), dtype="fp16")

+benchmark(trt_ts_module, input_shape=(1024, 1, 32, 32), dtype="fp16")

@@ -1346,28 +1358,28 @@

-import trtorch

+import trtorch

-# We use a batch-size of 1024, and half precision

-compile_settings = {

- "input_shapes": [

- {

- "min" : [1024, 1, 32, 32],

- "opt" : [1024, 1, 33, 33],

- "max" : [1024, 1, 34, 34],

- }

- ],

- "op_precision": torch.half # Run with FP16

-}

+# We use a batch-size of 1024, and half precision

+compile_settings = {

+ "input_shapes": [

+ {

+ "min" : [1024, 1, 32, 32],

+ "opt" : [1024, 1, 33, 33],

+ "max" : [1024, 1, 34, 34],

+ }

+ ],

+ "op_precision": torch.half # Run with FP16

+}

-trt_script_module = trtorch.compile(script_model, compile_settings)

+trt_script_module = trtorch.compile(script_model, compile_settings)

-input_data = torch.randn((1024, 1, 32, 32))

-input_data = input_data.half().to("cuda")

+input_data = torch.randn((1024, 1, 32, 32))

+input_data = input_data.half().to("cuda")

-input_data = input_data.half()

-result = trt_script_module(input_data)

-torch.jit.save(trt_script_module, "trt_script_module.ts")

+input_data = input_data.half()

+result = trt_script_module(input_data)

+torch.jit.save(trt_script_module, "trt_script_module.ts")

@@ -1382,7 +1394,7 @@

-benchmark(trt_script_module, input_shape=(1024, 1, 32, 32), dtype="fp16")

+benchmark(trt_script_module, input_shape=(1024, 1, 32, 32), dtype="fp16")

@@ -1421,9 +1433,9 @@

In this notebook, we have walked through the complete process of compiling TorchScript models with TRTorch and test the performance impact of the optimization.

-

+

What’s next

-

+

¶

diff --git a/docs/_notebooks/ssd-object-detection-demo.html b/docs/_notebooks/ssd-object-detection-demo.html

index 5843220367..2de3efd2d0 100644

--- a/docs/_notebooks/ssd-object-detection-demo.html

+++ b/docs/_notebooks/ssd-object-detection-demo.html

@@ -760,26 +760,26 @@

-# Copyright 2020 NVIDIA Corporation. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-# ==============================================================================

+# Copyright 2020 NVIDIA Corporation. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ==============================================================================

-  +

+

Object Detection with TRTorch (SSD)

@@ -877,9 +877,13 @@

-

- ## 1. Requirements

-

+

+

+

+ ## 1. Requirements

+

+

+

Follow the steps in

@@ -902,18 +906,22 @@

-%%capture

-%%bash

-# Known working versions

-pip install numpy==1.19 scipy==1.5.2 Pillow==6.2.0 scikit-image==0.17.2 matplotlib==3.3.0

+%%capture

+%%bash

+# Known working versions

+pip install numpy==1.19 scipy==1.5.2 Pillow==6.2.0 scikit-image==0.17.2 matplotlib==3.3.0

-

- ## 2. SSD

-

+

+

+

+ ## 2. SSD

+

+

+

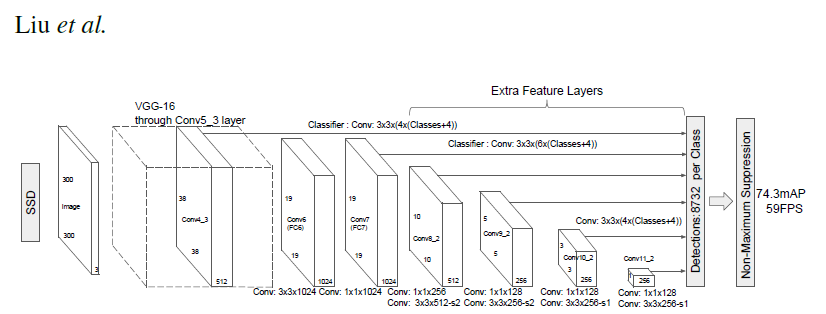

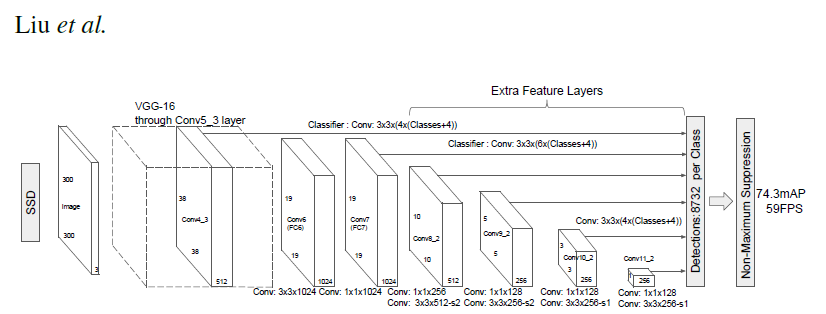

Single Shot MultiBox Detector model for object detection

@@ -922,8 +930,8 @@

-

@@ -943,12 +951,12 @@

-  +

+

-  +

+

@@ -1007,10 +1015,10 @@

-import torch

+import torch

-# List of available models in PyTorch Hub from Nvidia/DeepLearningExamples

-torch.hub.list('NVIDIA/DeepLearningExamples:torchhub')

+# List of available models in PyTorch Hub from Nvidia/DeepLearningExamples

+torch.hub.list('NVIDIA/DeepLearningExamples:torchhub')

@@ -1057,9 +1065,9 @@

-# load SSD model pretrained on COCO from Torch Hub

-precision = 'fp32'

-ssd300 = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_ssd', model_math=precision);

+# load SSD model pretrained on COCO from Torch Hub

+precision = 'fp32'

+ssd300 = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_ssd', model_math=precision);

@@ -1103,23 +1111,23 @@

-# Sample images from the COCO validation set

-uris = [

- 'http://images.cocodataset.org/val2017/000000397133.jpg',

- 'http://images.cocodataset.org/val2017/000000037777.jpg',

- 'http://images.cocodataset.org/val2017/000000252219.jpg'

-]

+# Sample images from the COCO validation set

+uris = [

+ 'http://images.cocodataset.org/val2017/000000397133.jpg',

+ 'http://images.cocodataset.org/val2017/000000037777.jpg',

+ 'http://images.cocodataset.org/val2017/000000252219.jpg'

+]

-# For convenient and comprehensive formatting of input and output of the model, load a set of utility methods.

-utils = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_ssd_processing_utils')

+# For convenient and comprehensive formatting of input and output of the model, load a set of utility methods.

+utils = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_ssd_processing_utils')

-# Format images to comply with the network input

-inputs = [utils.prepare_input(uri) for uri in uris]

-tensor = utils.prepare_tensor(inputs, False)

+# Format images to comply with the network input

+inputs = [utils.prepare_input(uri) for uri in uris]

+tensor = utils.prepare_tensor(inputs, False)

-# The model was trained on COCO dataset, which we need to access in order to

-# translate class IDs into object names.

-classes_to_labels = utils.get_coco_object_dictionary()

+# The model was trained on COCO dataset, which we need to access in order to

+# translate class IDs into object names.

+classes_to_labels = utils.get_coco_object_dictionary()

@@ -1157,15 +1165,15 @@

-# Next, we run object detection

-model = ssd300.eval().to("cuda")

-detections_batch = model(tensor)

+# Next, we run object detection

+model = ssd300.eval().to("cuda")

+detections_batch = model(tensor)

-# By default, raw output from SSD network per input image contains 8732 boxes with

-# localization and class probability distribution.

-# Let’s filter this output to only get reasonable detections (confidence>40%) in a more comprehensive format.

-results_per_input = utils.decode_results(detections_batch)

-best_results_per_input = [utils.pick_best(results, 0.40) for results in results_per_input]

+# By default, raw output from SSD network per input image contains 8732 boxes with

+# localization and class probability distribution.

+# Let’s filter this output to only get reasonable detections (confidence>40%) in a more comprehensive format.

+results_per_input = utils.decode_results(detections_batch)

+best_results_per_input = [utils.pick_best(results, 0.40) for results in results_per_input]

@@ -1186,25 +1194,25 @@

-from matplotlib import pyplot as plt

-import matplotlib.patches as patches

+from matplotlib import pyplot as plt

+import matplotlib.patches as patches

-# The utility plots the images and predicted bounding boxes (with confidence scores).

-def plot_results(best_results):

- for image_idx in range(len(best_results)):

- fig, ax = plt.subplots(1)

- # Show original, denormalized image...

- image = inputs[image_idx] / 2 + 0.5

- ax.imshow(image)

- # ...with detections

- bboxes, classes, confidences = best_results[image_idx]

- for idx in range(len(bboxes)):

- left, bot, right, top = bboxes[idx]

- x, y, w, h = [val * 300 for val in [left, bot, right - left, top - bot]]

- rect = patches.Rectangle((x, y), w, h, linewidth=1, edgecolor='r', facecolor='none')

- ax.add_patch(rect)

- ax.text(x, y, "{} {:.0f}%".format(classes_to_labels[classes[idx] - 1], confidences[idx]*100), bbox=dict(facecolor='white', alpha=0.5))

- plt.show()

+# The utility plots the images and predicted bounding boxes (with confidence scores).

+def plot_results(best_results):

+ for image_idx in range(len(best_results)):

+ fig, ax = plt.subplots(1)

+ # Show original, denormalized image...

+ image = inputs[image_idx] / 2 + 0.5

+ ax.imshow(image)

+ # ...with detections

+ bboxes, classes, confidences = best_results[image_idx]

+ for idx in range(len(bboxes)):

+ left, bot, right, top = bboxes[idx]

+ x, y, w, h = [val * 300 for val in [left, bot, right - left, top - bot]]

+ rect = patches.Rectangle((x, y), w, h, linewidth=1, edgecolor='r', facecolor='none')

+ ax.add_patch(rect)

+ ax.text(x, y, "{} {:.0f}%".format(classes_to_labels[classes[idx] - 1], confidences[idx]*100), bbox=dict(facecolor='white', alpha=0.5))

+ plt.show()

@@ -1220,8 +1228,8 @@

-# Visualize results without TRTorch/TensorRT

-plot_results(best_results_per_input)

+# Visualize results without TRTorch/TensorRT

+plot_results(best_results_per_input)

@@ -1263,40 +1271,40 @@

-import time

-import numpy as np

+import time

+import numpy as np

-import torch.backends.cudnn as cudnn

-cudnn.benchmark = True

+import torch.backends.cudnn as cudnn

+cudnn.benchmark = True

-# Helper function to benchmark the model

-def benchmark(model, input_shape=(1024, 1, 32, 32), dtype='fp32', nwarmup=50, nruns=1000):

- input_data = torch.randn(input_shape)

- input_data = input_data.to("cuda")

- if dtype=='fp16':

- input_data = input_data.half()

+# Helper function to benchmark the model

+def benchmark(model, input_shape=(1024, 1, 32, 32), dtype='fp32', nwarmup=50, nruns=1000):

+ input_data = torch.randn(input_shape)

+ input_data = input_data.to("cuda")

+ if dtype=='fp16':

+ input_data = input_data.half()

- print("Warm up ...")

- with torch.no_grad():

- for _ in range(nwarmup):

- features = model(input_data)

- torch.cuda.synchronize()

- print("Start timing ...")

- timings = []

- with torch.no_grad():

- for i in range(1, nruns+1):

- start_time = time.time()

- pred_loc, pred_label = model(input_data)

- torch.cuda.synchronize()

- end_time = time.time()

- timings.append(end_time - start_time)

- if i%100==0:

- print('Iteration %d/%d, avg batch time %.2f ms'%(i, nruns, np.mean(timings)*1000))

+ print("Warm up ...")

+ with torch.no_grad():

+ for _ in range(nwarmup):

+ features = model(input_data)

+ torch.cuda.synchronize()

+ print("Start timing ...")

+ timings = []

+ with torch.no_grad():

+ for i in range(1, nruns+1):

+ start_time = time.time()

+ pred_loc, pred_label = model(input_data)

+ torch.cuda.synchronize()

+ end_time = time.time()

+ timings.append(end_time - start_time)

+ if i%100==0:

+ print('Iteration %d/%d, avg batch time %.2f ms'%(i, nruns, np.mean(timings)*1000))

- print("Input shape:", input_data.size())

- print("Output location prediction size:", pred_loc.size())

- print("Output label prediction size:", pred_label.size())

- print('Average batch time: %.2f ms'%(np.mean(timings)*1000))

+ print("Input shape:", input_data.size())

+ print("Output location prediction size:", pred_loc.size())

+ print("Output label prediction size:", pred_label.size())

+ print('Average batch time: %.2f ms'%(np.mean(timings)*1000))

@@ -1319,9 +1327,9 @@

-# Model benchmark without TRTorch/TensorRT

-model = ssd300.eval().to("cuda")

-benchmark(model, input_shape=(128, 3, 300, 300), nruns=1000)

+# Model benchmark without TRTorch/TensorRT

+model = ssd300.eval().to("cuda")

+benchmark(model, input_shape=(128, 3, 300, 300), nruns=1000)

@@ -1353,9 +1361,13 @@

-

- ## 3. Creating TorchScript modules

-

+

+

+

+ ## 3. Creating TorchScript modules

+

+

+

To compile with TRTorch, the model must first be in

@@ -1385,8 +1397,8 @@

-model = ssd300.eval().to("cuda")

-traced_model = torch.jit.trace(model, [torch.randn((1,3,300,300)).to("cuda")])

+model = ssd300.eval().to("cuda")

+traced_model = torch.jit.trace(model, [torch.randn((1,3,300,300)).to("cuda")])

@@ -1404,8 +1416,8 @@

-# This is just an example, and not required for the purposes of this demo

-torch.jit.save(traced_model, "ssd_300_traced.jit.pt")

+# This is just an example, and not required for the purposes of this demo

+torch.jit.save(traced_model, "ssd_300_traced.jit.pt")

@@ -1420,8 +1432,8 @@

-# Obtain the average time taken by a batch of input with Torchscript compiled modules

-benchmark(traced_model, input_shape=(128, 3, 300, 300), nruns=1000)

+# Obtain the average time taken by a batch of input with Torchscript compiled modules

+benchmark(traced_model, input_shape=(128, 3, 300, 300), nruns=1000)

@@ -1453,9 +1465,13 @@

-

- ## 4. Compiling with TRTorch TorchScript modules behave just like normal PyTorch modules and are intercompatible. From TorchScript we can now compile a TensorRT based module. This module will still be implemented in TorchScript but all the computation will be done in TensorRT.

-

+

+

+

+ ## 4. Compiling with TRTorch TorchScript modules behave just like normal PyTorch modules and are intercompatible. From TorchScript we can now compile a TensorRT based module. This module will still be implemented in TorchScript but all the computation will be done in TensorRT.

+

+

+

@@ -1466,23 +1482,27 @@

-import trtorch

+import trtorch

-# The compiled module will have precision as specified by "op_precision".

-# Here, it will have FP16 precision.

-trt_model = trtorch.compile(traced_model, {

- "input_shapes": [(3, 3, 300, 300)],

- "op_precision": torch.half, # Run with FP16

- "workspace_size": 1 << 20

-})

+# The compiled module will have precision as specified by "op_precision".

+# Here, it will have FP16 precision.

+trt_model = trtorch.compile(traced_model, {

+ "input_shapes": [(3, 3, 300, 300)],

+ "op_precision": torch.half, # Run with FP16

+ "workspace_size": 1 << 20

+})

-

- ## 5. Running Inference

-

+

+

+

+ ## 5. Running Inference

+

+

+

Next, we run object detection

@@ -1496,14 +1516,14 @@

-# using a TRTorch module is exactly the same as how we usually do inference in PyTorch i.e. model(inputs)

-detections_batch = trt_model(tensor.to(torch.half)) # convert the input to half precision

+# using a TRTorch module is exactly the same as how we usually do inference in PyTorch i.e. model(inputs)

+detections_batch = trt_model(tensor.to(torch.half)) # convert the input to half precision

-# By default, raw output from SSD network per input image contains 8732 boxes with

-# localization and class probability distribution.

-# Let’s filter this output to only get reasonable detections (confidence>40%) in a more comprehensive format.

-results_per_input = utils.decode_results(detections_batch)

-best_results_per_input_trt = [utils.pick_best(results, 0.40) for results in results_per_input]

+# By default, raw output from SSD network per input image contains 8732 boxes with

+# localization and class probability distribution.

+# Let’s filter this output to only get reasonable detections (confidence>40%) in a more comprehensive format.

+results_per_input = utils.decode_results(detections_batch)

+best_results_per_input_trt = [utils.pick_best(results, 0.40) for results in results_per_input]

@@ -1521,8 +1541,8 @@

-# Visualize results with TRTorch/TensorRT

-plot_results(best_results_per_input_trt)

+# Visualize results with TRTorch/TensorRT

+plot_results(best_results_per_input_trt)

@@ -1571,16 +1591,16 @@

-batch_size = 128

+batch_size = 128

-# Recompiling with batch_size we use for evaluating performance

-trt_model = trtorch.compile(traced_model, {

- "input_shapes": [(batch_size, 3, 300, 300)],

- "op_precision": torch.half, # Run with FP16

- "workspace_size": 1 << 20

-})

+# Recompiling with batch_size we use for evaluating performance

+trt_model = trtorch.compile(traced_model, {

+ "input_shapes": [(batch_size, 3, 300, 300)],

+ "op_precision": torch.half, # Run with FP16

+ "workspace_size": 1 << 20

+})

-benchmark(trt_model, input_shape=(batch_size, 3, 300, 300), nruns=1000, dtype="fp16")

+benchmark(trt_model, input_shape=(batch_size, 3, 300, 300), nruns=1000, dtype="fp16")

diff --git a/docs/genindex.html b/docs/genindex.html

index 49024a4473..d8854460f3 100644

--- a/docs/genindex.html

+++ b/docs/genindex.html

@@ -677,37 +677,21 @@

trtorch::CompileGraph (C++ function)

- ,

-

- [1]

-

trtorch::CompileSpec (C++ struct)

- ,

-

- [1]

-

trtorch::CompileSpec::allow_gpu_fallback (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::capability (C++ member)

- ,

-

- [1]

-

@@ -721,34 +705,14 @@

[2]

- ,

-

- [3]

-

- ,

-

- [4]

-

- ,

-

- [5]

-

trtorch::CompileSpec::DataType (C++ class)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -764,40 +728,16 @@

[2]

,

-

- [3]

-

- ,

-

- [4]

-

- ,

-

- [5]

-

- ,

- [6]

+ [3]

,

- [7]

+ [4]

,

- [8]

-

- ,

-

- [9]

-

- ,

-

- [10]

-

- ,

-

- [11]

+ [5]

@@ -805,16 +745,8 @@

trtorch::CompileSpec::DataType::operator bool (C++ function)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -822,16 +754,8 @@

trtorch::CompileSpec::DataType::operator Value (C++ function)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -843,28 +767,12 @@

[1]

,

-

- [2]

-

- ,

-

- [3]

-

- ,

- [4]

+ [2]

,

- [5]

-

- ,

-

- [6]

-

- ,

-

- [7]

+ [3]

@@ -876,28 +784,12 @@

[1]

,

-

- [2]

-

- ,

-

- [3]

-

- ,

- [4]

+ [2]

,

- [5]

-

- ,

-

- [6]

-

- ,

-

- [7]

+ [3]

@@ -905,16 +797,8 @@

trtorch::CompileSpec::DataType::Value (C++ enum)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -922,16 +806,8 @@

trtorch::CompileSpec::DataType::Value::kChar (C++ enumerator)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -939,16 +815,8 @@

trtorch::CompileSpec::DataType::Value::kFloat (C++ enumerator)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -956,51 +824,27 @@

trtorch::CompileSpec::DataType::Value::kHalf (C++ enumerator)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

trtorch::CompileSpec::debug (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::device (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::DeviceType (C++ class)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -1016,40 +860,16 @@

[2]

,

-

- [3]

-

- ,

-

- [4]

-

- ,

-

- [5]

-

- ,

- [6]

+ [3]

,

- [7]

+ [4]

,

- [8]

-

- ,

-

- [9]

-

- ,

-

- [10]

-

- ,

-

- [11]

+ [5]

@@ -1057,16 +877,8 @@

trtorch::CompileSpec::DeviceType::operator bool (C++ function)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -1074,16 +886,8 @@

trtorch::CompileSpec::DeviceType::operator Value (C++ function)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -1091,16 +895,8 @@

trtorch::CompileSpec::DeviceType::operator!= (C++ function)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -1108,16 +904,8 @@

trtorch::CompileSpec::DeviceType::operator== (C++ function)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -1125,16 +913,8 @@

trtorch::CompileSpec::DeviceType::Value (C++ enum)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -1142,16 +922,8 @@

trtorch::CompileSpec::DeviceType::Value::kDLA (C++ enumerator)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

@@ -1159,62 +931,34 @@

trtorch::CompileSpec::DeviceType::Value::kGPU (C++ enumerator)

,

-

- [1]

-

- ,

- [2]

-

- ,

-

- [3]

+ [1]

trtorch::CompileSpec::EngineCapability (C++ enum)

- ,

-

- [1]

-

trtorch::CompileSpec::EngineCapability::kDEFAULT (C++ enumerator)

- ,

-

- [1]

-

trtorch::CompileSpec::EngineCapability::kSAFE_DLA (C++ enumerator)

- ,

-

- [1]

-

trtorch::CompileSpec::EngineCapability::kSAFE_GPU (C++ enumerator)

- ,

-

- [1]

-

trtorch::CompileSpec::input_ranges (C++ member)

- ,

-

- [1]

-

@@ -1224,14 +968,6 @@

[1]

- ,

-

- [2]

-

- ,

-

- [3]

-

@@ -1265,38 +1001,6 @@

[7]

- ,

-

- [8]

-

- ,

-

- [9]

-

- ,

-

- [10]

-

- ,

-

- [11]

-

- ,

-

- [12]

-

- ,

-

- [13]

-

- ,

-

- [14]

-

- ,

-

- [15]

-

@@ -1306,14 +1010,6 @@

[1]

- ,

-

- [2]

-

- ,

-

- [3]

-

@@ -1323,14 +1019,6 @@

[1]

- ,

-

- [2]

-

- ,

-

- [3]

-

@@ -1340,32 +1028,16 @@

[1]

- ,

-

- [2]

-

- ,

-

- [3]

-

trtorch::CompileSpec::max_batch_size (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::num_avg_timing_iters (C++ member)

- ,

-

- [1]

-

@@ -1375,63 +1047,35 @@

trtorch::CompileSpec::num_min_timing_iters (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::op_precision (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::ptq_calibrator (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::refit (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::strict_types (C++ member)

- ,

-

- [1]

-

trtorch::CompileSpec::workspace_size (C++ member)

- ,

-

- [1]

-

-

- trtorch::ConvertGraphToTRTEngine (C++ function)

-

- ,

- [1]

+ trtorch::ConvertGraphToTRTEngine (C++ function)

diff --git a/docs/objects.inv b/docs/objects.inv

index 5b9856090f..15f76af02c 100644

Binary files a/docs/objects.inv and b/docs/objects.inv differ

diff --git a/docs/searchindex.js b/docs/searchindex.js

index 9eda3beb46..879b329c9c 100644

--- a/docs/searchindex.js

+++ b/docs/searchindex.js

@@ -1 +1 @@