# `tomodachi` ⌁ *a lightweight µservice lib* ⌁ *for Python 3*

<p align="left">

<sup><i>tomodachi</i> [<b>友達</b>] <i>means friends — 🦊🐶🐻🐯🐮🐸🐍 — a suitable name for microservices working together.</i> ✨✨</sup>

<br/>

<sup><tt>events</tt> <tt>messaging</tt> <tt>api</tt> <tt>pubsub</tt> <tt>sns+sqs</tt> <tt>amqp</tt> <tt>http</tt> <tt>queues</tt> <tt>handlers</tt> <tt>scheduling</tt> <tt>tasks</tt> <tt>microservice</tt> <tt>tomodachi</tt></sup>

</p>


------------------------------------------------------------------------

`tomodachi` *is a library designed to make it easy for devs to build microservices using* `asyncio` *on Python.*

It includes ready-made implementations to support handlers built for HTTP requests, websockets, AWS SNS+SQS and RabbitMQ / AMQP for 🚀 event based messaging, 🔗 intra-service communication and 🐶 watchdog handlers.

- HTTP request handlers (API endpoints) receive requests via the `aiohttp` server library. 🪢

- Event and message handlers are hooked into a message bus, such as a queue, from for example AWS (Amazon Web Services) SNS+SQS (`aiobotocore`), RabbitMQ / AMQP (`aioamqp`), etc. 📡

Using the provided handler managers, devs should rarely need to interface with low-level libs directly, making it more of a breeze to focus on building the business logic. 🪄

`tomodachi` has a feature set to meet most basic needs, for example...

- `🦸` ⋯ Graceful termination of consumers, listeners and tasks to ensure smooth deployments.

- `⏰` ⋯ Scheduled function execution (cron notation / time interval) for building watchdog handlers.

- `🍔` ⋯ Execution middleware interface for incoming HTTP requests and received messages.

- `💌` ⋯ Simple envelope building and parsing for both receiving and publishing messages.

- `📚` ⋯ Logging support via `structlog` with template loggers for both "dev console" and JSON output.

- `⛑️` ⋯ Loggers and handler managers built to support exception tracing, from for example Sentry.

- `📡` ⋯ SQS queues with filter policies for SNS topic subscriptions filtering messages on message attributes.

- `📦` ⋯ Supports SQS dead-letter queues via redrive policy -- infra orchestration from service optional.

- `🌱` ⋯ Designed to be extendable -- most kinds of transport layers or event sources can be added.

------------------------------------------------------------------------

## Quicklinks to the documentation 📖

*This documentation README includes information on how to get started with services, what built-in functionality exists in this library, lists of available configuration parameters and a few examples of service code.*

Visit [https://tomodachi.dev/](https://tomodachi.dev/docs) for additional documentation. 📔

- [Getting started / installation](https://tomodachi.dev/docs)

Handler types / endpoint built-ins. 🛍️

- [HTTP and WebSocket endpoints](https://tomodachi.dev/docs/http)

- [AWS SNS+SQS event messaging](https://tomodachi.dev/docs/aws-sns-sqs)

- [RabbitMQ / AMQP messaging](https://tomodachi.dev/docs/amqp-rabbitmq)

- [Scheduled functions and cron](https://tomodachi.dev/docs/scheduled-functions-cron)

Service options to tweak handler managers. 🛠️

- [Options and configuration parameters](https://tomodachi.dev/docs/options)

Use the features you need. 🌮

- [Middleware functionality](https://tomodachi.dev/docs/middlewares)

- [Function signature keywords](https://tomodachi.dev/docs/function-keywords)

- [Logging and log formatters](https://tomodachi.dev/docs/using-the-tomodachi-logger)

- [OpenTelemetry instrumentation](https://tomodachi.dev/docs/opentelemetry)

Recommendations and examples. 🧘

- [Good practices for running services in production](https://tomodachi.dev/docs/running-a-service-in-production)

- [Example code and template services](https://tomodachi.dev/docs/examples)

------------------------------------------------------------------------

**Please note -- this library is a work in progress.** 🐣

Consider `tomodachi` as beta software. This library follows an irregular release schedule. There may be breaking changes between `0.x` versions.

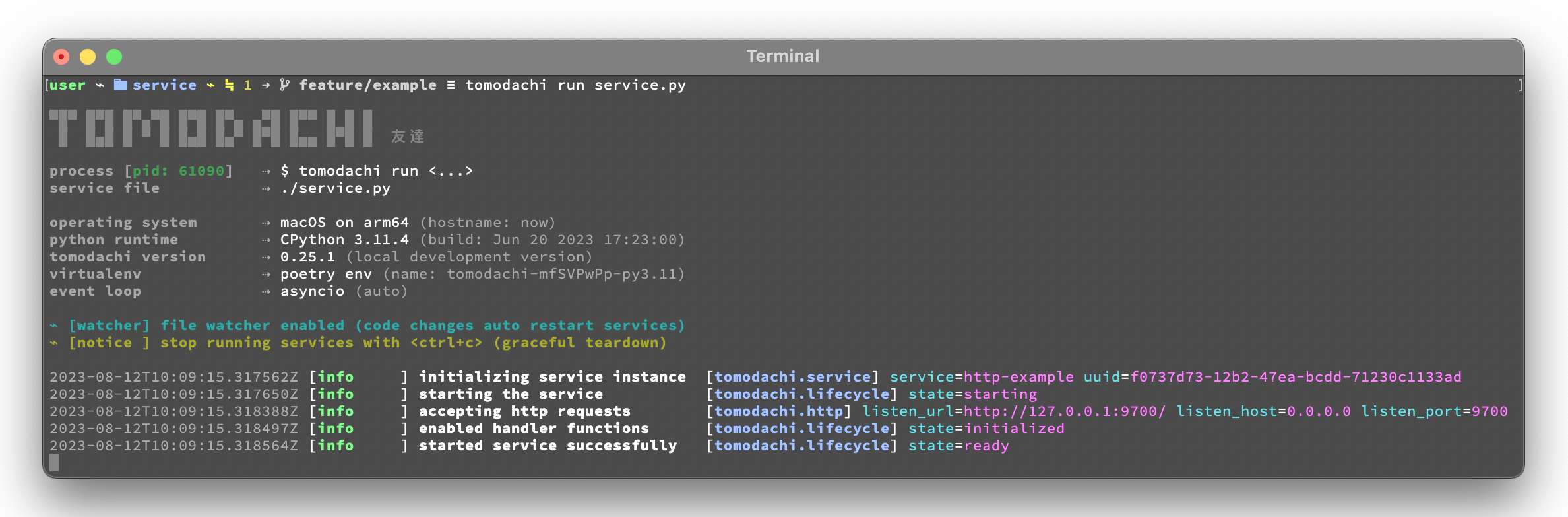

## Usage

The `tomodachi` CLI is used to execute service code from the command line or within container images. The CLI is installed automatically when the package is installed in the environment.

The CLI endpoint `tomodachi` is then used to run services defined as `tomodachi` service classes.

<img src="https://raw.githubusercontent.com/kalaspuff/tomodachi/53dfc4d2b3a8f9df16995aa61541afa2412b1074/docs/assets/tomodachi-usage.png" width="65%" align="right">

Start a service whose class is defined in `./service/app.py` by running `tomodachi run service/app.py`. Stop the service with the keyboard interrupt `<ctrl+c>`.

The `run` command has some options available that can be specified as arguments to the CLI.

Most options can also be set as an environment variable value. For example, setting the environment variable `TOMODACHI_LOGGER=json` yields the same change to the logger as running the service with the argument `--logger json`.

<br clear="right"/>

<table align="left">

<thead>

<tr vertical-align="center">

<th align="center" width="50px">🧩</th>

<th align="left" width="440px"><tt>--loop [auto|asyncio|uvloop]</tt></th>

</tr>

<tr vertical-align="center">

<th align="center" width="50px">🖥️</th>

<th align="left" width="440px"><tt>TOMODACHI_LOOP=...</tt></th>

</tr>

</thead>

</table>

<br clear="left"/>

The value for `--loop` can be set to `asyncio`, `uvloop` or `auto`. The `uvloop` value can only be used if uvloop is installed in the execution environment. Note that the default `auto` value will currently use the event loop implementation preferred by the Python interpreter, which in most cases will be `asyncio`.

<table align="left">

<thead>

<tr vertical-align="center">

<th align="center" width="50px">🧩</th>

<th align="left" width="440px"><tt>--production</tt></th>

</tr>

<tr vertical-align="center">

<th align="center" width="50px">🖥️</th>

<th align="left" width="440px"><tt>TOMODACHI_PRODUCTION=1</tt></th>

</tr>

</thead>

</table>

<br clear="left"/>

Use `--production` to disable the file watcher that restarts the service on file changes and to hide the startup info banner.

⇢ *recommendation* ✨👀 \
⇢ *Enabling this option is highly recommended for built docker images and for builds of services that are to be released to any environment. The only time you should run without the* `--production` *option is during development and in local development environments.*

<table align="left">

<thead>

<tr vertical-align="center">

<th align="center" width="50px">🧩</th>

<th align="left" width="440px"><tt>--log-level [debug|info|warning|error|critical]</tt></th>

</tr>

<tr vertical-align="center">

<th align="center" width="50px">🖥️</th>

<th align="left" width="440px"><tt>TOMODACHI_LOG_LEVEL=...</tt></th>

</tr>

</thead>

</table>

<br clear="left"/>

Set the minimum log level for which the loggers will emit logs to their handlers with the `--log-level` option. By default the minimum log level is set to `info` (which includes `info`, `warning`, `error` and `critical`, so only `debug` log records are filtered out).
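The filtering follows the usual threshold semantics of log levels. As a stdlib-only illustration (plain `logging`, not the tomodachi loggers themselves), a logger whose level is set to `INFO` passes `info` and everything above it on to its handlers and drops `debug`. `emitted_levels` and `ListHandler` are hypothetical names used only for this example:

```python
import logging
from typing import List


class ListHandler(logging.Handler):
    """Collects emitted records in memory so they can be inspected."""

    def __init__(self) -> None:
        super().__init__()
        self.records: List[logging.LogRecord] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)


def emitted_levels(minimum_level: int) -> List[str]:
    # Use a dedicated logger (not logging.root) so global config is untouched.
    logger = logging.getLogger("level-filter-example")
    logger.setLevel(minimum_level)
    logger.propagate = False
    handler = ListHandler()
    logger.addHandler(handler)
    try:
        logger.debug("debug message")
        logger.info("info message")
        logger.warning("warning message")
        logger.error("error message")
        logger.critical("critical message")
    finally:
        logger.removeHandler(handler)
    return [record.levelname.lower() for record in handler.records]


print(emitted_levels(logging.INFO))  # ['info', 'warning', 'error', 'critical']
```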

<table align="left">

<thead>

<tr vertical-align="center">

<th align="center" width="50px">🧩</th>

<th align="left" width="440px"><tt>--logger [console|json|python|disabled]</tt></th>

</tr>

<tr vertical-align="center">

<th align="center" width="50px">🖥️</th>

<th align="left" width="440px"><tt>TOMODACHI_LOGGER=...</tt></th>

</tr>

</thead>

</table>

<br clear="left"/>

Apply the `--logger` option to change the log formatter that is used by the library. The default value `console` is mostly suited for local development environments, as it provides a structured and colorized view of log records. The console colors can be disabled by setting the env value `NO_COLOR=1`.

⇢ *recommendation* ✨👀 \
⇢ *For released services / images it's recommended to use the* `json` *option so that you can set up structured log collection via for example Logstash, Fluentd, Fluent Bit, Vector, etc.*

If you prefer to disable log output from the library you can use `disabled` (and presumably add a log handler with another implementation).

The `python` option isn't recommended, but is available if you need to use the loggers from Python's built-in `logging` module. Note that the built-in `logging` module will be used anyway, as the library's loggers are added as handlers to `logging.root` and also propagate records through to `logging`.

<table align="left">

<thead>

<tr vertical-align="center">

<th align="center" width="50px">🧩</th>

<th align="left" width="440px"><tt>--custom-logger <module.attribute|module></tt></th>

</tr>

<tr vertical-align="center">

<th align="center" width="50px">🖥️</th>

<th align="left" width="440px"><tt>TOMODACHI_CUSTOM_LOGGER=...</tt></th>

</tr>

</thead>

</table>

<br clear="left"/>

If the template loggers from the option above don't cut it, or if you already have your own logger (preferably a `structlog` logger) and processor chain set up, you can specify a `--custom-logger`, which will make `tomodachi` use your logger setup as well. This is also suitable if your app uses a custom logging setup whose output differs from what the `tomodachi` loggers output.

If your logger is initialized in, for example, the module `yourapp.logging` and the initialized (`structlog`) logger is aptly named `logger`, then use `--custom-logger yourapp.logging.logger` (or set the env value `TOMODACHI_CUSTOM_LOGGER=yourapp.logging.logger`).

The logger attribute at the module path you're specifying must implement `debug`, `info`, `warning`, `error`, `exception`, `critical` and preferably also `new(context: Dict[str, Any]) -> Logger` (as that is primarily what will be called to create (or get) a logger).

Although non-native `structlog` loggers can be used as custom loggers, it's highly recommended to specify a path that has been assigned a value from `structlog.wrap_logger` or `structlog.get_logger`.
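As noted above, a `structlog` logger is the recommended choice. Purely to illustrate the required methods, here is a hypothetical non-`structlog` logger built on the stdlib `logging` module. `ContextLogger` is an illustrative name, the way context is rendered into the message is an assumption, and this is not how tomodachi itself wraps loggers:

```python
import logging
from typing import Any, Dict, Optional


class ContextLogger:
    """Hypothetical logger exposing the methods a custom logger must provide.

    Calls are forwarded to a stdlib logger, with the bound context appended
    to the event text as key=value pairs.
    """

    def __init__(self, name: str = "yourapp", context: Optional[Dict[str, Any]] = None) -> None:
        self._logger = logging.getLogger(name)
        self._context: Dict[str, Any] = dict(context or {})

    def new(self, context: Optional[Dict[str, Any]] = None) -> "ContextLogger":
        # Return a fresh logger bound to only the given context; this logger is unchanged.
        return ContextLogger(self._logger.name, context)

    def _format(self, event: str, fields: Dict[str, Any]) -> str:
        merged = {**self._context, **fields}
        pairs = " ".join(f"{key}={value!r}" for key, value in merged.items())
        return f"{event} {pairs}" if pairs else event

    def debug(self, event: str, **fields: Any) -> None:
        self._logger.debug(self._format(event, fields))

    def info(self, event: str, **fields: Any) -> None:
        self._logger.info(self._format(event, fields))

    def warning(self, event: str, **fields: Any) -> None:
        self._logger.warning(self._format(event, fields))

    def error(self, event: str, **fields: Any) -> None:
        self._logger.error(self._format(event, fields))

    def exception(self, event: str, **fields: Any) -> None:
        # Like error(), but also attaches the traceback of the exception being handled.
        self._logger.exception(self._format(event, fields))

    def critical(self, event: str, **fields: Any) -> None:
        self._logger.critical(self._format(event, fields))


# The module-level attribute that --custom-logger would point at,
# e.g. yourapp.logging.logger in the example above.
logger = ContextLogger()
```

With this sketch, `logger.new({"request_id": "abc"}).info("request received", path="/sheep")` would log `request received request_id='abc' path='/sheep'`, provided stdlib logging is configured to show `info` records (for example via `logging.basicConfig(level=logging.INFO)`).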

<table align="left">

<thead>

<tr vertical-align="center">

<th align="center" width="50px">🧩</th>

<th align="left" width="440px"><tt>--opentelemetry-instrument</tt></th>

</tr>

<tr vertical-align="center">

<th align="center" width="50px">🖥️</th>

<th align="left" width="440px"><tt>TOMODACHI_OPENTELEMETRY_INSTRUMENT=1</tt></th>

</tr>

</thead>

</table>

<br clear="left"/>

Use `--opentelemetry-instrument` to enable OpenTelemetry auto instrumentation of the service and of the libraries for which instrumentors are installed in the environment.

If `tomodachi` is installed in the environment, using the argument `--opentelemetry-instrument` (or setting the `TOMODACHI_OPENTELEMETRY_INSTRUMENT=1` env variable value) is mostly equivalent to starting the service using the `opentelemetry-instrument` CLI -- OTEL distros, configurators and instrumentors will be loaded automatically and `OTEL_*` environment values will be processed in the same way.

------------------------------------------------------------------------

## Getting started 🏃

### First off -- installation using `poetry` is fully supported and battle-tested (`pip` works just as well)

Install `tomodachi` in your preferred way, whether it be `poetry`, `pip`, `pipenv`, etc. Installing the distribution will give your environment access to the `tomodachi` package for imports as well as a shortcut to the CLI alias, which is later used to run the microservices you build.

```bash
local ~$ pip install tomodachi
> ...
> Installing collected packages: ..., ..., ..., tomodachi
> Successfully installed ... ... ... tomodachi-x.x.xx

local ~$ tomodachi --version
> tomodachi x.xx.xx
```

`tomodachi` can be installed together with a set of "extras" that will install a set of dependencies that are useful for different purposes. The extras are:

- `uvloop`: for the possibility to start services with the `--loop uvloop` option.

- `protobuf`: for protobuf support in envelope transformation and message serialization.

- `aiodns`: to use `aiodns` as the DNS resolver for `aiohttp`.

- `brotli`: to use `brotli` compression in `aiohttp`.

- `opentelemetry`: for OpenTelemetry instrumentation support.

- `opentelemetry-exporter-prometheus`: to use the experimental OTEL meter provider for Prometheus.

Services and their dependencies, together with runtime utilities like `tomodachi`, should preferably always be installed and run in isolated environments like Docker containers or virtual environments.

### Building blocks for a service class and microservice entrypoint

1. `import tomodachi` and create a class that inherits `tomodachi.Service`, it can be called anything... or just `Service` to keep it simple.

2. Add a `name` attribute to the class and give it a string value. Having a `name` attribute isn't required, but good practice.

3. Define an awaitable function in the service class -- in this example we'll use it as an entrypoint to trigger code in the service by decorating it with one of the available invoker decorators. Note that a service class must have at least one decorated function available to even be recognized as a service by `tomodachi run`.

4. Decide on how to trigger the function -- for example using HTTP, pub/sub or on a timed interval, then decorate your function with one of these trigger / subscription decorators, which also determines what capabilities the service initially has.

*Further down you'll find a description of how each of the built-in invoker decorators works and which keywords and parameters you can use to change their behaviour.*

*Note: Publishing and subscribing to events and messages may require user credentials or hosting configuration to be able to access queues and topics.*

**For simplicity, let's do HTTP:**

- On each POST request to `/sheep`, the service will wait for up to one whole second (pretend that it's performing I/O -- waiting for response on a slow sheep counting database modification, for example) and then issue a 200 OK with some data.

- It's also possible to query the number of times the POST task has run by doing a `GET` request to the same url, `/sheep`.

- By using `@tomodachi.http` an HTTP server backed by `aiohttp` will be started on service start. `tomodachi` will act as a middleware to route requests to the correct handlers, upgrade websocket connections and then also gracefully await connections with still executing tasks, when the service is asked to stop -- up until a configurable amount of time has passed.

```python
import asyncio
import random

import tomodachi


class Service(tomodachi.Service):
    name = "sleepy-sheep-counter"

    _sheep_count = 0

    @tomodachi.http("POST", r"/sheep")
    async def add_to_sheep_count(self, request):
        await asyncio.sleep(random.random())
        self._sheep_count += 1
        return 200, str(self._sheep_count)

    @tomodachi.http("GET", r"/sheep")
    async def return_sheep_count(self, request):
        return 200, str(self._sheep_count)
```

Run services with:

```bash
local ~/code/service$ tomodachi run service.py
```

------------------------------------------------------------------------

Besides the currently existing built-in ways of interfacing with a service, it's possible to build additional function decorators to suit the use-cases one may have.

To give a few possible examples / ideas of functionality that could be coded to call functions with data in similar ways:

- Using Redis as a task queue with configurable keys to push or pop onto.

- Subscribing to Kinesis or Kafka event streams and act on the data received.

- An abstraction around otherwise complex functionality or to unify API design.

- As an example of the above: GraphQL resolver functionality with built-in traceability and authentication management, with a unified API to application devs.

------------------------------------------------------------------------

## Additional examples will follow with different ways to trigger functions in the service

Of course the different ways can be used within the same class, for example the very common use-case of having a service listening on HTTP while also performing some kind of async pub/sub tasks.

### Basic HTTP based service 🌟

Code for a simple service which serves data over HTTP; pretty similar, but with a few more concepts added.

```python
import tomodachi


class Service(tomodachi.Service):
    name = "http-example"

    # Request paths are specified as regex for full flexibility
    @tomodachi.http("GET", r"/resource/(?P<id>[^/]+?)/?")
    async def resource(self, request, id):
        # Returning a string value normally means 200 OK
        return f"id = {id}"

    @tomodachi.http("GET", r"/health")
    async def health_check(self, request):
        # Return can also be a tuple, dict or even an aiohttp.web.Response
        # object for more complex responses - for example if you need to
        # send byte data, set your own status code or define own headers
        return {
            "body": "Healthy",
            "status": 200,
        }

    # Specify custom 404 catch-all response
    @tomodachi.http_error(status_code=404)
    async def error_404(self, request):
        return "error 404"
```
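To make the two comments in the example concrete: a route's regex is matched against the request path and its named groups become keyword arguments to the handler, and the handler's return value is turned into a status and a body. The following stdlib-only sketch is a simplified model of that behaviour, not tomodachi's router. It assumes the pattern has to match the whole path and covers only the string, tuple and dict return shapes shown above; `dispatch` and `normalize_response` are hypothetical names:

```python
import asyncio
import re
from typing import Any, Awaitable, Callable, Dict, Tuple

Handler = Callable[..., Awaitable[Any]]


def normalize_response(value: Any) -> Tuple[int, str]:
    # A plain string is treated as a 200 OK body.
    if isinstance(value, str):
        return 200, value
    # A tuple is read as (status, body); any extra items (e.g. headers) are ignored here.
    if isinstance(value, tuple):
        return int(value[0]), str(value[1])
    # A dict may carry "status" and "body" keys.
    if isinstance(value, dict):
        return int(value.get("status", 200)), str(value.get("body", ""))
    raise TypeError(f"unsupported return type: {type(value).__name__}")


async def dispatch(routes: Dict[str, Handler], path: str) -> Tuple[int, str]:
    for pattern, handler in routes.items():
        match = re.fullmatch(pattern, path)
        if match:
            # Named groups, e.g. (?P<id>...), are passed as keyword arguments.
            result = await handler(request=None, **match.groupdict())
            return normalize_response(result)
    # No route matched: fall back to the catch-all 404 response.
    return 404, "error 404"


async def resource(request: Any, id: str) -> str:
    return f"id = {id}"


async def health_check(request: Any) -> Dict[str, Any]:
    return {"body": "Healthy", "status": 200}


routes = {
    r"/resource/(?P<id>[^/]+?)/?": resource,
    r"/health": health_check,
}

print(asyncio.run(dispatch(routes, "/resource/1234")))  # (200, 'id = 1234')
print(asyncio.run(dispatch(routes, "/missing")))  # (404, 'error 404')
```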

### RabbitMQ or AWS SNS+SQS event based messaging service 🐰

Example of a service that calls a function when messages are published on an AMQP topic exchange.

```python
import tomodachi


class Service(tomodachi.Service):
    name = "amqp-example"

    # The "message_envelope" attribute can be set on the service class to build / parse data.
    # message_envelope = ...

    # A route / topic to which the service will subscribe via RabbitMQ / AMQP
    @tomodachi.amqp("example.topic")
    async def example_func(self, message):
        # Received message, forwarding the same message as response on another route / topic
        await tomodachi.amqp_publish(self, message, routing_key="example.response")
```

#### AMQP – Publish to exchange / routing key – `tomodachi.amqp_publish`

```python
await tomodachi.amqp_publish(service, message, routing_key=routing_key, exchange_name=...)
```

- `service` is the instance of the service class (from within a handler, use `self`)

- `message` is the message to publish before any potential envelope transformation

- `routing_key` is the routing key to use when publishing the message

- `exchange_name` is the exchange name for publishing the message (default: "amq.topic")

For more advanced workflows, it's also possible to specify overrides for the routing key prefix or message enveloping class.
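In RabbitMQ, topic exchanges such as the default `amq.topic` deliver a message to every queue whose binding key matches the message's routing key: keys are dot-separated words, `*` in a binding key matches exactly one word and `#` matches zero or more words. The following stdlib sketch of those matching rules can help when reasoning about which subscriptions receive a published message; `topic_matches` is a hypothetical helper and is not how RabbitMQ or tomodachi implement routing internally:

```python
from functools import lru_cache


def topic_matches(binding_key: str, routing_key: str) -> bool:
    """Return True if routing_key matches an AMQP topic binding_key.

    Both keys are dot-separated words; in the binding key "*" matches
    exactly one word and "#" matches zero or more words.
    """
    pattern = binding_key.split(".")
    words = routing_key.split(".")

    @lru_cache(maxsize=None)
    def match(i: int, j: int) -> bool:
        # i indexes the binding pattern, j indexes the routing key words.
        if i == len(pattern):
            return j == len(words)
        token = pattern[i]
        if token == "#":
            # Let "#" swallow zero, one, two, ... of the remaining words.
            return any(match(i + 1, k) for k in range(j, len(words) + 1))
        if j == len(words):
            return False
        if token == "*" or token == words[j]:
            return match(i + 1, j + 1)
        return False

    return match(0, 0)


print(topic_matches("example.topic", "example.topic"))  # True
print(topic_matches("example.*", "example.response"))  # True
print(topic_matches("example.*", "example.a.b"))  # False
print(topic_matches("example.#", "example"))  # True
```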

### AWS SNS+SQS event based messaging service 📡

Example of a service using AWS SNS+SQS managed pub/sub messaging. AWS SNS and AWS SQS together bring managed message queues for microservices, distributed systems, and serverless applications hosted on AWS. `tomodachi` services can customize their enveloping functionality to both unwrap incoming messages and/or to produce enveloped messages for published events / messages. Pub/sub patterns are great for scalability in distributed architectures, for example when hosted in Docker on Kubernetes.

```python
import tomodachi


class Service(tomodachi.Service):
    name = "aws-example"

    # The "message_envelope" attribute can be set on the service class to build / parse data.
    # message_envelope = ...

    # Using the @tomodachi.aws_sns_sqs decorator to make the service create an AWS SNS topic,
    # an AWS SQS queue and to make a subscription from the topic to the queue as well as start
    # receiving messages from the queue using SQS.ReceiveMessage.
    @tomodachi.aws_sns_sqs("example-topic", queue_name="example-queue")
    async def example_func(self, message):
        # Received message, forwarding the same message as response on another topic
        await tomodachi.aws_sns_sqs_publish(self, message, topic="another-example-topic")
```
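Enveloping, as described above, wraps outgoing payloads in metadata when publishing and unwraps incoming payloads before the data reaches the handler. The following is a minimal stdlib sketch of that idea using JSON. The `JsonEnvelope` class, its `build` / `parse` methods and the field names are all hypothetical, and the sketch does not reproduce the interface tomodachi expects from a class assigned to `message_envelope`:

```python
import json
import time
import uuid
from typing import Any, Dict, Tuple


class JsonEnvelope:
    """Hypothetical envelope: JSON with a uuid, a timestamp and the topic."""

    @staticmethod
    def build(topic: str, data: Any) -> str:
        # Wrap the payload in metadata before it is published.
        envelope = {
            "uuid": str(uuid.uuid4()),
            "timestamp": time.time(),
            "topic": topic,
            "data": data,
        }
        return json.dumps(envelope)

    @staticmethod
    def parse(payload: str) -> Tuple[Any, Dict[str, Any]]:
        # Unwrap a received payload into (data, metadata).
        envelope = json.loads(payload)
        data = envelope.pop("data")
        return data, envelope


raw = JsonEnvelope.build("example-topic", {"sheep": 3})
data, metadata = JsonEnvelope.parse(raw)
print(data)  # {'sheep': 3}
print(metadata["topic"])  # example-topic
```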

#### AWS – Publish message to SNS – `tomodachi.aws_sns_sqs_publish`

```python
await tomodachi.aws_sns_sqs_publish(service, message, topic=topic)
```

- `service` is the instance of the service class (from within a handler, use `self`)

- `message` is the message to publish before any potential envelope transformation

- `topic` is the non-prefixed name of the SNS topic used to publish the message

Additional function arguments can be supplied to also include `message_attributes`, and / or `group_id` + `deduplication_id`.

For more advanced workflows, it's also possible to specify overrides for the SNS topic name prefix or message enveloping class.

#### AWS – Send message to SQS – `tomodachi.sqs_send_message`

```python
await tomodachi.sqs_send_message(service, message, queue_name=queue_name)
```

- `service` is the instance of the service class (from within a handler, use `self`)

- `message` is the message to publish before any potential envelope transformation

- `queue_name` is the SQS queue url, queue ARN or non-prefixed queue name to be used

Additional function arguments can be supplied to also include `message_attributes`, and / or `group_id` + `deduplication_id`.

For more advanced workflows, it's also possible to set delay seconds, define a custom message body formatter, or to specify overrides for the SNS topic name prefix or message enveloping class.
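The `queue_name` argument accepts three different forms. The hypothetical helper below (not part of tomodachi, which resolves these forms itself) only illustrates how they differ, by extracting the queue name from a queue URL of the shape `https://sqs.<region>.amazonaws.com/<account-id>/<queue-name>` or a queue ARN of the shape `arn:aws:sqs:<region>:<account-id>:<queue-name>`. The region and account ID used in the examples are made up:

```python
from urllib.parse import urlparse


def queue_name_from_identifier(identifier: str) -> str:
    """Return the queue name part of an SQS queue URL, ARN or plain name."""
    if identifier.startswith("arn:"):
        # arn:aws:sqs:<region>:<account-id>:<queue-name>
        parts = identifier.split(":")
        if len(parts) != 6 or parts[2] != "sqs":
            raise ValueError(f"not an SQS queue ARN: {identifier}")
        return parts[5]
    if identifier.startswith(("https://", "http://")):
        # https://sqs.<region>.amazonaws.com/<account-id>/<queue-name>
        path = urlparse(identifier).path.strip("/").split("/")
        if len(path) != 2:
            raise ValueError(f"unexpected SQS queue URL: {identifier}")
        return path[1]
    # Anything else is treated as a (non-prefixed) queue name.
    return identifier


print(queue_name_from_identifier("arn:aws:sqs:eu-west-1:123456789012:example-queue"))  # example-queue
```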

### Scheduling, inter-communication between services, etc. ⚡️

There are other examples available with code of how to use services with self-invoking methods called on a specified interval or at specific times / days, as well as additional examples for inter-communication pub/sub between different services over either AMQP or AWS SNS+SQS as shown above. See more at the [examples folder](https://github.com/kalaspuff/tomodachi/blob/master/examples/).
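Conceptually, a function scheduled on an interval is a loop that runs the handler, waits, and stops starting new runs once the service is told to stop, while a run already in progress is allowed to finish. A rough asyncio-only sketch of that idea, assuming the interval is measured from the end of one run to the start of the next; `run_every` is a hypothetical helper, and tomodachi's own scheduling (including cron notation) is more elaborate:

```python
import asyncio
from typing import Awaitable, Callable


async def run_every(
    interval: float,
    func: Callable[[], Awaitable[None]],
    stop: asyncio.Event,
) -> None:
    """Call func repeatedly, waiting `interval` seconds between runs, until stop is set."""
    while not stop.is_set():
        # A started run is always awaited to completion, so runs never overlap.
        await func()
        try:
            # Sleep for the interval, but wake up early if stop is set.
            await asyncio.wait_for(stop.wait(), timeout=interval)
        except asyncio.TimeoutError:
            pass


async def main() -> int:
    runs = 0
    stop = asyncio.Event()

    async def heartbeat() -> None:
        nonlocal runs
        runs += 1

    task = asyncio.create_task(run_every(0.01, heartbeat, stop))
    await asyncio.sleep(0.055)
    # Termination: no new runs are started after this point.
    stop.set()
    await task
    return runs


print(asyncio.run(main()))
```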

------------------------------------------------------------------------

## Run the service 😎

```bash
# cli alias is set up automatically on installation
local ~/code/service$ tomodachi run service.py

# alternatively using the tomodachi.run module
local ~/code/service$ python -m tomodachi.run service.py
```

*Defaults to output startup banner on stdout and log output on stderr.*

*HTTP service acts like a normal web server.*

```bash
local ~$ curl -v "http://127.0.0.1:9700/resource/1234"
# > HTTP/1.1 200 OK
# > Content-Type: text/plain; charset=utf-8
# > Server: tomodachi
# > Content-Length: 9
# > Date: Sun, 16 Oct 2022 13:38:02 GMT
# >
# > id = 1234
```

## Getting an instance of a service

If a Service instance is needed outside the Service class itself, it can be acquired with `tomodachi.get_service`. If multiple Service instances exist within the same event loop, the name of the Service can be used to get the correct one.

```python
import tomodachi

# Get the instance of the active Service.
service = tomodachi.get_service()

# Get the instance of the Service by service name, for example "http-example".
service = tomodachi.get_service("http-example")
```

## Stopping the service

Stopping a service can be achieved by either sending a `SIGINT` \<ctrl+c\> or `SIGTERM` signal to the `tomodachi` Python process, or by invoking the `tomodachi.exit()` function, which will initiate the termination processing flow. The `tomodachi.exit()` call can additionally take an optional exit code as an argument, which otherwise defaults to exit code 0.

- `SIGINT` signal (equivalent to using \<ctrl+c\>)

- `SIGTERM` signal

- `tomodachi.exit()` or `tomodachi.exit(exit_code)`

The process' exit code can also be altered by changing the value of `tomodachi.SERVICE_EXIT_CODE`, however using `tomodachi.exit` with an integer argument will override any previous value set to `tomodachi.SERVICE_EXIT_CODE`.
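An asyncio process typically reacts to `SIGINT` / `SIGTERM` by registering signal handlers on the event loop that flip a stop flag, so that a graceful shutdown can run instead of the process being killed outright. A minimal Unix-only sketch of that pattern with the stdlib (`loop.add_signal_handler` is not available on Windows and must be called from the main thread); `wait_for_stop_signal` is a hypothetical helper, not tomodachi's implementation:

```python
import asyncio
import os
import signal
from typing import List


async def wait_for_stop_signal(simulate_sigterm: bool = False) -> str:
    """Block until SIGINT or SIGTERM is received; return the signal's name."""
    loop = asyncio.get_running_loop()
    stop = asyncio.Event()
    received: List[str] = []

    def on_signal(sig: signal.Signals) -> None:
        # Record which signal arrived and wake up the awaiting code,
        # which is where a graceful shutdown would begin.
        received.append(sig.name)
        stop.set()

    for sig in (signal.SIGINT, signal.SIGTERM):
        loop.add_signal_handler(sig, on_signal, sig)
    try:
        if simulate_sigterm:
            # For demonstration only: send SIGTERM to this very process.
            loop.call_later(0.01, os.kill, os.getpid(), signal.SIGTERM)
        await stop.wait()
    finally:
        # Restore the default signal handling.
        for sig in (signal.SIGINT, signal.SIGTERM):
            loop.remove_signal_handler(sig)
    return received[0]


print(asyncio.run(wait_for_stop_signal(simulate_sigterm=True)))  # SIGTERM
```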

All above mentioned ways of initiating the termination flow of the service will perform a graceful shutdown of the service, which will try to await open HTTP handlers and await currently running tasks using tomodachi's scheduling functionality, as well as await tasks processing messages from queues such as AWS SQS or RabbitMQ.

Some tasks may time out during termination according to the configuration in use (see options such as `http.termination_grace_period_seconds`) if they are long running tasks. Additionally, container handlers may impose additional timeouts for how long termination is allowed to take. If no ongoing tasks are to be awaited and the service lifecycle can be cleanly terminated, the shutdown usually happens within milliseconds.

## Function hooks for service lifecycle changes

To be able to initialize connections to external resources or to perform graceful shutdown of connections made by a service, there are a few functions a service can specify to hook into lifecycle changes of a service.

| **Magic function name** | **When is the function called?** | **What is suitable to put here** |
|:---|:---|:---|
| `_start_service` | Called before invokers / servers have started. | Initialize connections to databases, etc. |
| `_started_service` | Called after invokers / servers have started. | Start reporting or start tasks to run once. |
| `_stopping_service` | Called on termination signal. | Cancel eventual internal long-running tasks. |
| `_stop_service` | Called after tasks have gracefully finished. | Close connections to databases, etc. |

Changes to a service's settings / configuration (by for example modifying the `options` values) should be done in the `__init__` function instead of in any of the lifecycle function hooks.

Good practice -- in general, make use of the `_start_service` (for setting up connections) in addition to the `_stop_service` (to close connections) lifecycle hooks. The other hooks may be used for more uncommon use-cases.

**Lifecycle functions are defined as class functions and will be called by the tomodachi process on lifecycle changes:**

```python
import tomodachi


class Service(tomodachi.Service):
    name = "example"

    async def _start_service(self):
        # The _start_service function is called during initialization,
        # before consumers or an eventual HTTP server has started.
        # It's suitable to setup or connect to external resources here.
        return

    async def _started_service(self):
        # The _started_service function is called after invoker
        # functions have been set up and the service is up and running.
        # The service is ready to process messages and requests.
        return

    async def _stopping_service(self):
        # The _stopping_service function is called the moment the
        # service is instructed to terminate - usually this happens
        # when a termination signal is received by the service.
        # This hook can be used to cancel ongoing tasks or similar.
        # Note that some tasks may be processing during this time.
        return

    async def _stop_service(self):
        # Finally the _stop_service function is called after HTTP server,
        # scheduled functions and consumers have gracefully stopped.
        # Previously ongoing tasks have been awaited for completion.
        # This is the place to close connections to external services and
        # clean up eventual tasks you may have started previously.
        return
```

Exceptions raised in `_start_service` or `_started_service` will gracefully terminate the service.
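The order in which the hooks run, and the effect of a startup exception, can be modelled without tomodachi at all. The sketch below is a simplified, stdlib-only illustration, not tomodachi's implementation: `Service` is a hypothetical stand-in class that records which hooks were called, and `run_lifecycle` is a hypothetical driver.

```python
import asyncio
from typing import List


class Service:
    """Hypothetical stand-in for a tomodachi service that records hook calls."""

    def __init__(self, fail_on_start: bool = False) -> None:
        self.calls: List[str] = []
        self.fail_on_start = fail_on_start

    async def _start_service(self) -> None:
        self.calls.append("_start_service")
        if self.fail_on_start:
            raise RuntimeError("could not connect to the database")

    async def _started_service(self) -> None:
        self.calls.append("_started_service")

    async def _stopping_service(self) -> None:
        self.calls.append("_stopping_service")

    async def _stop_service(self) -> None:
        self.calls.append("_stop_service")


async def run_lifecycle(service: Service) -> bool:
    """Simplified model of the hook order; returns False if startup failed."""
    try:
        await service._start_service()
        # (invokers such as the HTTP server and consumers would start here)
        await service._started_service()
    except Exception:
        # An exception during startup terminates the service instead of running it.
        return False
    # (the service runs until a termination signal is received)
    await service._stopping_service()
    # (in-flight handlers would be awaited here)
    await service._stop_service()
    return True
```

Which cleanup hooks tomodachi itself invokes after a failed startup is not modelled by this sketch.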

## Graceful termination of a service (`SIGINT` / `SIGTERM`)

When the service process receives a `SIGINT` or `SIGTERM` signal (or `tomodachi.exit()` is called), the service begins graceful termination, which in practice means:

- The service's `_stopping_service` method, if implemented, is called immediately upon the received signal.

- The service stops accepting new HTTP connections and closes keep-alive HTTP connections as soon as possible.

- Already established HTTP connections for which a handler call is being awaited are allowed to finish their work before the service stops (up to `options.http.termination_grace_period_seconds` seconds, after which the open TCP connections for those HTTP requests are forcefully closed if still not completed).

- Any AWS SQS / AMQP handlers (decorated with `@aws_sns_sqs` or `@amqp`) stop receiving new messages. However, handlers already processing a received message are awaited until they return their result. Unlike the HTTP handler connections, there is no grace period for these queue consuming handlers.

- Currently running scheduled handlers are also awaited until they have fully completed their execution before the service terminates. No new scheduled handlers are started.

- When all HTTP connections are closed, all scheduled handlers have completed and all pub-sub handlers have been awaited, the service's `_stop_service` method is finally called (if implemented), where for example database connections can be closed. When the `_stop_service` method returns (or immediately after all handler invocations have completed, if no `_stop_service` is implemented), the service terminates.
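The HTTP part of this sequence, "wait for in-flight work up to a grace period, then force-close whatever is left", can be sketched with plain `asyncio`. This is a simplified model, not tomodachi's implementation: `drain` is a hypothetical helper, and the tasks stand in for open HTTP handler calls. Queue consuming handlers, by contrast, are awaited without any time limit.

```python
import asyncio
from typing import Iterable, Set, Tuple


async def drain(
    in_flight: Iterable[asyncio.Task], grace_period: float
) -> Tuple[Set[asyncio.Task], Set[asyncio.Task]]:
    """Await in-flight handler tasks; cancel those outliving the grace period."""
    tasks = set(in_flight)
    if not tasks:
        return set(), set()
    done, pending = await asyncio.wait(tasks, timeout=grace_period)
    for task in pending:
        # Models forcefully closing the connections that did not finish in time.
        task.cancel()
    # Let the cancellations be processed before returning.
    await asyncio.gather(*pending, return_exceptions=True)
    return done, pending
```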

It's recommended to use an `http.termination_grace_period_seconds` options value of around 30 seconds to allow for the graceful termination of HTTP connections. This value can be adjusted based on the expected time it takes for the service to complete the processing of incoming requests.

Make sure that the orchestration engine (such as Kubernetes) waits at least 30 seconds after sending the `SIGTERM` before removing the pod. For extra compatibility when operating services in k8s, and to get around most kinds of edge cases with intermittent timeouts and problems with ingress connections (unless your setup includes long-running queue consuming handler calls, which require an even longer grace period), set the pod spec `terminationGracePeriodSeconds` to `90` seconds and use a `preStop` lifecycle hook of 20 seconds.

Keep the `http.termination_grace_period_seconds` options value lower than the pod spec's `terminationGracePeriodSeconds` value, as the latter is a hard limit for how long the pod will be allowed to run after receiving a `SIGTERM` signal.
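The timing advice can be checked with simple arithmetic. In Kubernetes, the `terminationGracePeriodSeconds` countdown starts before the `preStop` hook runs, and `SIGTERM` is only sent once the hook has finished, so the hook's duration and tomodachi's HTTP grace period both draw on the same budget. The helper below is a hypothetical sketch of that check; `stop_service` is an assumed estimate of the time spent in `_stop_service`.

```python
def shutdown_headroom(
    pre_stop: float,
    http_grace: float,
    pod_grace: float,
    stop_service: float = 0.0,
) -> float:
    """Seconds left before the pod's hard limit once the known phases have run.

    pre_stop:     duration of the preStop hook (runs before SIGTERM is sent)
    http_grace:   options.http.termination_grace_period_seconds
    pod_grace:    the pod spec's terminationGracePeriodSeconds (hard limit)
    stop_service: assumed time needed by _stop_service
    A negative result means the pod may be killed before shutdown completes.
    """
    return pod_grace - (pre_stop + http_grace + stop_service)
```

With the recommended values (`shutdown_headroom(20, 30, 90)`), 40 seconds remain for queue consuming handlers and `_stop_service`.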

In a setup where long-running queue consuming handler calls commonly occur, any grace period the orchestration engine uses has to take that into account. It's generally advised to split work up into reasonably sized chunks that complete quickly or, if handlers are idempotent, to cancel long-running handlers as part of the `_stopping_service` implementation.
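Cancelling idempotent long-running work from `_stopping_service` could look roughly like the sketch below. It is stdlib-only and illustrative: `ConsumerService` is a hypothetical stand-in that does not inherit from `tomodachi.Service`, `handler` is a hypothetical handler, and it assumes that a message whose handler did not finish is not acknowledged and will be redelivered by the queue later.

```python
import asyncio
from typing import Set


class ConsumerService:
    """Hypothetical stand-in for a tomodachi service (tomodachi is not imported)."""

    def __init__(self) -> None:
        self._running: Set[asyncio.Task] = set()

    async def handler(self, seconds: float) -> None:
        # Run the long, idempotent piece of work as a tracked task so that it
        # can be found and cancelled later.
        task = asyncio.ensure_future(asyncio.sleep(seconds))
        self._running.add(task)
        try:
            await task
        finally:
            self._running.discard(task)

    async def _stopping_service(self) -> None:
        # Cancel tracked long-running work so shutdown is not held up. Only safe
        # for idempotent handlers, assuming the unfinished message is redelivered.
        for task in list(self._running):
            task.cancel()
```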

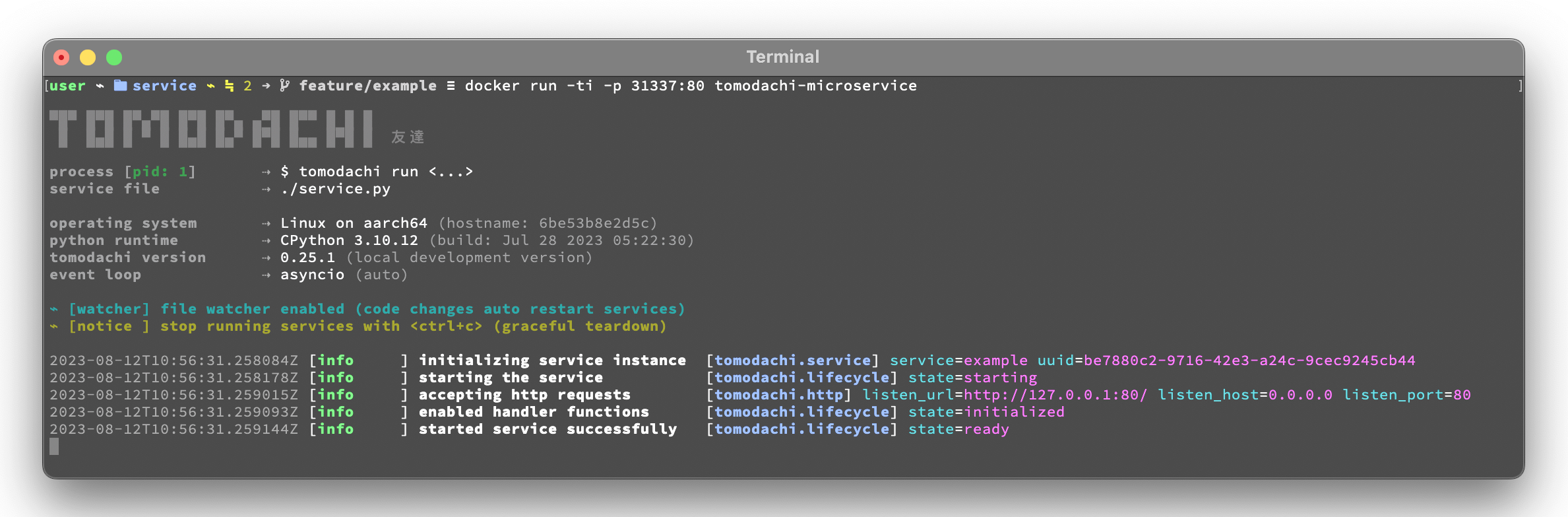

## Example of a microservice containerized in Docker 🐳

A great way to distribute and operate microservices is usually to run them in containers or, even more interestingly, in clusters of compute nodes. Here follows an example of getting a `tomodachi` based service up and running in Docker.

We're building the service's container image using just two small files, the `Dockerfile` and the actual code for the microservice, `service.py`. In reality a service would probably not be quite this small, but this works as a template to get started.

**Dockerfile**

```dockerfile
FROM python:3.10-bullseye
RUN pip install tomodachi
RUN mkdir /app
WORKDIR /app
COPY service.py .
ENV PYTHONUNBUFFERED=1
CMD ["tomodachi", "run", "service.py"]
```

**service.py**

```python
import json

import tomodachi


class Service(tomodachi.Service):
    name = "example"
    options = tomodachi.Options(
        http=tomodachi.Options.HTTP(
            port=80,
            content_type="application/json; charset=utf-8",
        ),
    )
    _healthy = True

    @tomodachi.http("GET", r"/")
    async def index_endpoint(self, request):
        # tomodachi.get_execution_context() can be used for
        # debugging purposes or to add additional service context
        # in logs or alerts.
        execution_context = tomodachi.get_execution_context()

        return json.dumps({
            "data": "hello world!",
            "execution_context": execution_context,
        })

    @tomodachi.http("GET", r"/health/?", ignore_logging=True)
    async def health_check(self, request):
        if self._healthy:
            return 200, json.dumps({"status": "healthy"})
        else:
            return 503, json.dumps({"status": "not healthy"})

    @tomodachi.http_error(status_code=400)
    async def error_400(self, request):
        return json.dumps({"error": "bad-request"})

    @tomodachi.http_error(status_code=404)
    async def error_404(self, request):
        return json.dumps({"error": "not-found"})

    @tomodachi.http_error(status_code=405)
    async def error_405(self, request):
        return json.dumps({"error": "method-not-allowed"})
```

### Building and running the container, forwarding host's port 31337 to port 80

```bash
local ~/code/service$ docker build . -t tomodachi-microservice
# > Sending build context to Docker daemon 9.216kB
# > Step 1/7 : FROM python:3.10-bullseye
# > 3.10-bullseye: Pulling from library/python
# > ...
# > ---> 3f7f3ab065d4
# > Step 7/7 : CMD ["tomodachi", "run", "service.py"]
# > ---> Running in b8dfa9deb243
# > Removing intermediate container b8dfa9deb243
# > ---> 8f09a3614da3
# > Successfully built 8f09a3614da3
# > Successfully tagged tomodachi-microservice:latest
```

```bash
local ~/code/service$ docker run -ti -p 31337:80 tomodachi-microservice
```

### Making requests to the running container

```bash
local ~$ curl http://127.0.0.1:31337/ | jq
# {
#   "data": "hello world!",
#   "execution_context": {
#     "tomodachi_version": "x.x.xx",
#     "python_version": "3.x.x",
#     "system_platform": "Linux",
#     "process_id": 1,
#     "init_timestamp": "2022-10-16T13:38:01.201509Z",
#     "event_loop": "asyncio",
#     "http_enabled": true,
#     "http_current_tasks": 1,
#     "http_total_tasks": 1,
#     "aiohttp_version": "x.x.xx"
#   }
# }
```

```bash
local ~$ curl http://127.0.0.1:31337/health -i
# > HTTP/1.1 200 OK
# > Content-Type: application/json; charset=utf-8
# > Server: tomodachi
# > Content-Length: 21
# > Date: Sun, 16 Oct 2022 13:40:44 GMT
# >
# > {"status": "healthy"}
```

```bash
local ~$ curl http://127.0.0.1:31337/no-route -i
# > HTTP/1.1 404 Not Found
# > Content-Type: application/json; charset=utf-8
# > Server: tomodachi
# > Content-Length: 22
# > Date: Sun, 16 Oct 2022 13:41:18 GMT
# >
# > {"error": "not-found"}
```

**It's actually as easy as that to get something spinning. The hard part is usually to figure out (or decide) what to build next.**

Other popular ways of running microservices are, of course, to run them as serverless functions with the ability to scale to zero (Lambda, Cloud Functions, Knative, etc. may come to mind). Currently `tomodachi` works best in a container setup, and until proper support for a serverless execution context is available in the library, it's advisable to hold off and use other tech for those kinds of deployments.

------------------------------------------------------------------------

## Available built-ins used as endpoints 🚀

As shown, there are different ways to trigger your microservice functions, the most common being either directly via HTTP or via event-based messaging (for example AMQP or AWS SNS+SQS). Here's a list of the currently available built-ins you may use to decorate your service functions.

## HTTP endpoints

### `@tomodachi.http`

```python
@tomodachi.http(method, url, ignore_logging=[200])
def handler(self, request, *args, **kwargs):
    ...
```

Sets up an **HTTP endpoint** for the specified `method` (`GET`, `PUT`, `POST`, `DELETE`) on the regexp `url`. Optionally specify `ignore_logging` as a list or tuple containing the status codes for which you do not wish to log access. It can also be set to `True` to ignore everything except status code 500.
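The documented `ignore_logging` semantics can be expressed as a small predicate. This is a sketch of the behaviour described above, not tomodachi's own code; `should_log_access` is a hypothetical name.

```python
from typing import Sequence, Union

IgnoreLogging = Union[bool, Sequence[int]]


def should_log_access(status_code: int, ignore_logging: IgnoreLogging = False) -> bool:
    """Return True if an access log line would be written for this status code."""
    if ignore_logging is True:
        # Everything is ignored except status code 500.
        return status_code == 500
    if ignore_logging is False:
        # Nothing is ignored.
        return True
    # A list or tuple of status codes whose access should not be logged.
    return status_code not in ignore_logging
```

For example, with `ignore_logging=[200]` successful health probes stay out of the access log while a `404` is still logged.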

------------------------------------------------------------------------

### `@tomodachi.http_static`

```python
@tomodachi.http_static(path, url)
def handler(self, request, *args, **kwargs):
    # noop
    pass
```

Sets up an **HTTP endpoint for static content**, available via `GET` and `HEAD`, serving files from the `path` on disk on the base regexp `url`.

------------------------------------------------------------------------

### `@tomodachi.websocket`

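```python
from typing import Union


@tomodachi.websocket(url)
def handler(self, websocket, *args, **kwargs):
    async def _receive(data: Union[str, bytes]) -> None:
        # Called for every frame received from the client.
        ...

    async def _close() -> None:
        # Called when the websocket connection is closed.
        ...

    return _receive, _close
```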

Sets up a **websocket endpoint** on the regexp `url`. The invoked function is called upon websocket connection and should return a two-value tuple containing callables: a function receiving frames (first callable) and a function called on websocket close (second callable).

The arguments passed to the function, besides the class object, are first the `websocket` response connection, which can be used to send frames to the client, and optionally also the `request` object.
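The two-callable contract can be exercised without a real socket. In this stdlib-only sketch, `example_handler` is a hypothetical handler (in tomodachi it would also receive `self` and the `websocket` connection) and `drive` is a hypothetical stand-in for the framework: it feeds each incoming frame to the first callable and then calls the second one on close.

```python
import asyncio
from typing import Awaitable, Callable, List, Tuple, Union

Frame = Union[str, bytes]
Callbacks = Tuple[Callable[[Frame], Awaitable[None]], Callable[[], Awaitable[None]]]


def example_handler(received: List[Frame]) -> Callbacks:
    # Hypothetical handler: records frames instead of replying over a websocket.
    async def _receive(data: Frame) -> None:
        received.append(data)

    async def _close() -> None:
        received.append("<closed>")

    return _receive, _close


async def drive(callbacks: Callbacks, frames: List[Frame]) -> None:
    """Feed each incoming frame to the receive callable, then signal the close."""
    receive, close = callbacks
    for frame in frames:
        await receive(frame)
    await close()
```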

------------------------------------------------------------------------

### `@tomodachi.http_error`

```python
@tomodachi.http_error(status_code)
def handler(self, request, *args, **kwargs):
    ...
```

A function which will be called if the **HTTP request would result in a 4XX** `status_code`. You may use this, for example, to set up a custom handler for "404 Not Found" or "403 Forbidden" responses.

------------------------------------------------------------------------

## AWS SNS+SQS messaging

### `@tomodachi.aws_sns_sqs`

```python
@tomodachi.aws_sns_sqs(
    topic=None,
    competing=True,
    queue_name=None,
    filter_policy=FILTER_POLICY_DEFAULT,
    visibility_timeout=VISIBILITY_TIMEOUT_DEFAULT,
    dead_letter_queue_name=DEAD_LETTER_QUEUE_DEFAULT,
    max_receive_count=MAX_RECEIVE_COUNT_DEFAULT,
    fifo=False,
    max_number_of_consumed_messages=MAX_NUMBER_OF_CONSUMED_MESSAGES,
    **kwargs,
)
def handler(self, data, *args, **kwargs):
    ...
```

#### Topic and Queue

This sets up an **AWS SQS queue**, subscribing to messages on the **AWS SNS topic** `topic` (if a `topic` is specified), after which it starts consuming messages from the queue. The `topic` value can be omitted in order to make the service consume messages from an existing queue, without setting up an SNS topic subscription.

The `competing` value is used when the same queue name should be used for several services of the same type, which thus "compete" for who should consume each message. Since `tomodachi` version 0.19.x this value defaults to `True`, as this is the most likely use-case for pub/sub in distributed architectures.
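A toy model of the difference, assuming (as a simplification) that competing instances share one queue while instances with `competing=False` each consume from their own queue subscribed to the topic. `deliver` is a hypothetical helper; round-robin stands in for the race between real consumers, and SQS's at-least-once redelivery is ignored.

```python
from collections import deque
from typing import Dict, List


def deliver(messages: List[str], instances: List[str], competing: bool) -> Dict[str, List[str]]:
    """Which messages each running instance of the same service would handle."""
    received: Dict[str, List[str]] = {name: [] for name in instances}
    if competing:
        # One shared queue: each message is consumed by a single instance.
        queue = deque(messages)
        turn = 0
        while queue:
            received[instances[turn % len(instances)]].append(queue.popleft())
            turn += 1
    else:
        # One queue per instance, each subscribed to the topic: every
        # instance gets its own copy of every message.
        for name in instances:
            received[name].extend(messages)
    return received
```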

Unless `queue_name` is specified, an auto-generated queue name is used. Additional prefixes to both `topic` and `queue_name` can be assigned by setting the `options.aws_sns_sqs.topic_prefix` and `options.aws_sns_sqs.queue_name_prefix` values.

#### FIFO queues + max number of consumed messages

AWS supports two types of queues and topics, namely `standard` and `FIFO`. The major difference between them is that the latter guarantees strict message ordering and exactly-once processing, whereas standard queues provide at-least-once delivery with best-effort ordering. By default, tomodachi creates `standard` queues and topics. To create them as `FIFO` instead, set `fifo` to `True`.

The `max_number_of_consumed_messages` setting determines how many messages should be pulled from the queue at once. This is useful if you have a resource-intensive task that you don't want other messages to compete for. The default value is 10 for `standard` queues and 1 for `FIFO` queues. The minimum value is 1, and the maximum value is 10.
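The documented defaults and bounds can be summarized as a small helper. `resolve_max_messages` is hypothetical, and this sketch rejects out-of-range values with `ValueError`; whether tomodachi raises, clamps or otherwise handles such values is not stated here. The upper bound of 10 matches the per-call limit of the SQS `ReceiveMessage` API.

```python
from typing import Optional


def resolve_max_messages(fifo: bool, requested: Optional[int] = None) -> int:
    """Effective number of messages to pull per receive call."""
    if requested is None:
        # Documented defaults: 1 for FIFO queues, 10 for standard queues.
        return 1 if fifo else 10
    if not 1 <= requested <= 10:
        raise ValueError("max_number_of_consumed_messages must be between 1 and 10")
    return requested
```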

#### Filter policy

If specified as a keyword argument, the `filter_policy` value is applied to the SNS subscription (for the specified topic and queue) as the `"FilterPolicy"` attribute. This filters SNS messages on the chosen "message attributes" and/or their values specified in the filter. Note that the filter policy dict structure differs somewhat from the actual message attributes, as the values of the keys in the filter policy must be a dict (object) or list (array).

Example: A filter policy value of `{"event": ["order_paid"], "currency": ["EUR", "USD"]}` would set up the SNS subscription to receive messages on the topic only where the message attribute `"event"` is `"order_paid"` and the `"currency"` value is either `"EUR"` or `"USD"`.
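The matching rule in this example (AND across keys, OR within each key's list) can be sketched for exact string values. This is a simplified local model of SNS-side filtering, not an AWS or tomodachi API; `matches` is a hypothetical helper, and the numeric, prefix, anything-but and exists operators of real filter policies are not modelled.

```python
from typing import Dict, List


def matches(filter_policy: Dict[str, List[str]], attributes: Dict[str, str]) -> bool:
    """True if the message attributes satisfy an exact-match filter policy.

    Every key in the policy must be present among the message attributes, and
    the attribute value must be one of the values listed for that key.
    """
    return all(
        key in attributes and attributes[key] in allowed
        for key, allowed in filter_policy.items()
    )
```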

If `filter_policy` is not specified as an argument (the default), the queue receives messages on the topic according to the filter policy already set on an existing subscription, or all messages on the topic if a new subscription is set up. Changing the `filter_policy` on an existing subscription may take several minutes to propagate.

Read more about the filter policy format on AWS:

- <https://docs.aws.amazon.com/sns/latest/dg/sns-subscription-filter-policies.html>

Related to the above mentioned filter policy, the `tomodachi.aws_sns_sqs_publish` (which is used for publishing messages to SNS) and `tomodachi.sqs_send_message` (which sends messages directly to SQS) functions, can specify "message