The Human-Computer Interaction Bot is a humanoid bot and the second version of the previous year's Reaction Bot. It reacts to its environment, can be used as a friendly pet, and can be extended for home automation and indoor security. It reads a person's facial emotion and acts on it. This year we added many new features to the bot: 3D motion for its face, removal of the mobile interference, use of a cam reaction API, sound source localization, and more.

We would like to express our special thanks to our mentor, Mr. Nikhil Kumar (ECE 3rd year), who gave us the opportunity to do this project. It led us to do a lot of research and to learn many new things.

Humanoid Robotics

For a human being, it is easy to react to something and give a specific output, because our mind is trained on a very large dataset. For a machine, this becomes difficult when the dataset is limited or does not exist at all. In that case, the only thing you can rely on is web APIs.

Locate a Sound Source

Localizing a sound source is not an easy task, because the sound carries a lot of noise. The input first has to be filtered, and it also has to be timed very precisely before any calculation can be applied, but in a real-world scenario this is not the case. This makes locating the sound source very challenging.

The mechanical design of the bot includes:

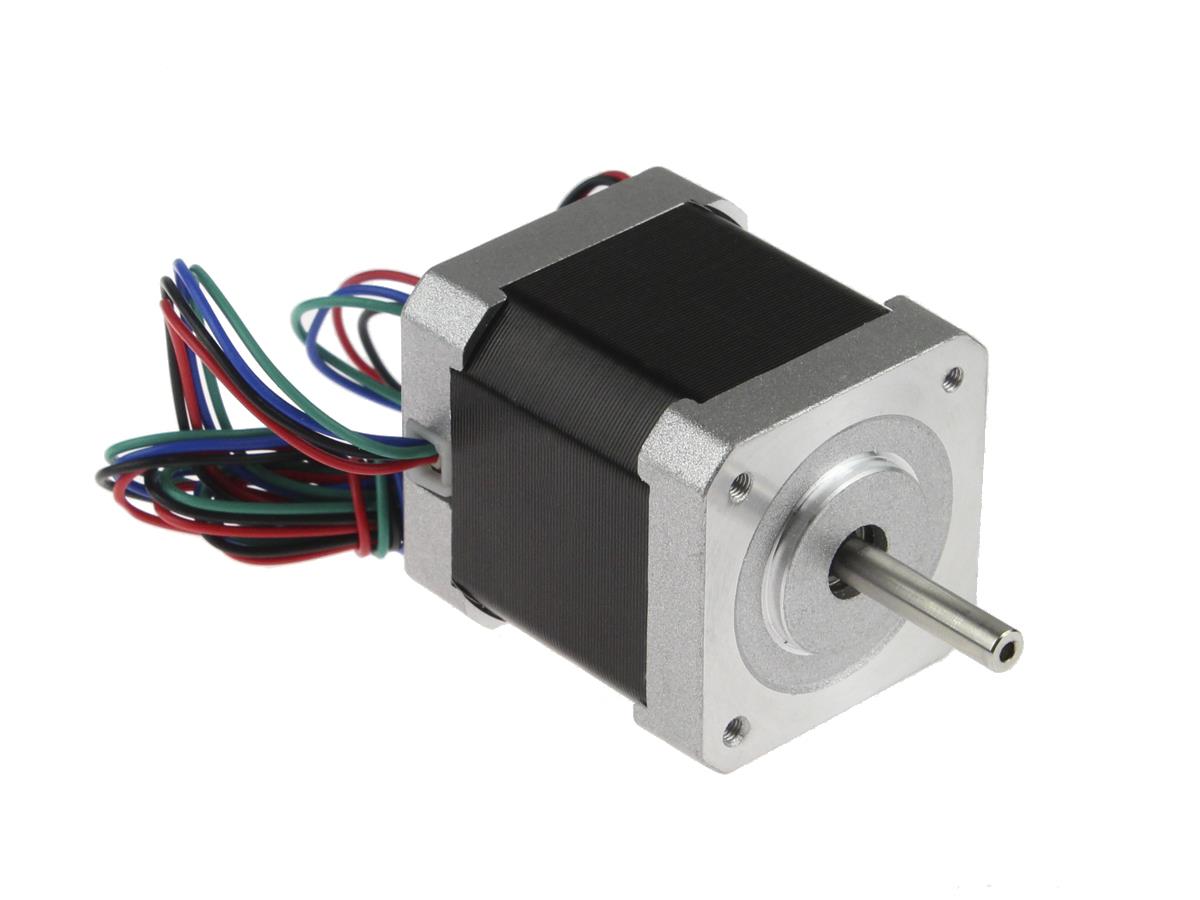

- Stepper Motor (12 V) (2):

- Servo Motor (6 V) (3):

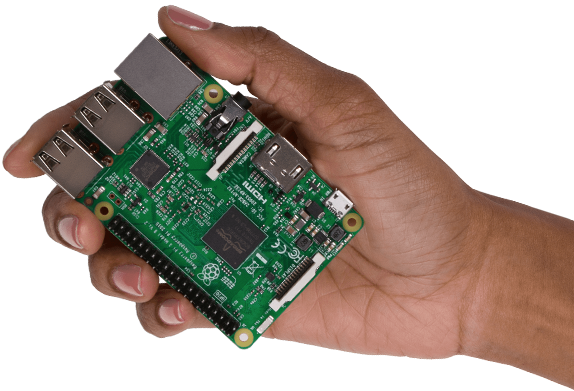

- Raspberry Pi 3 (1):

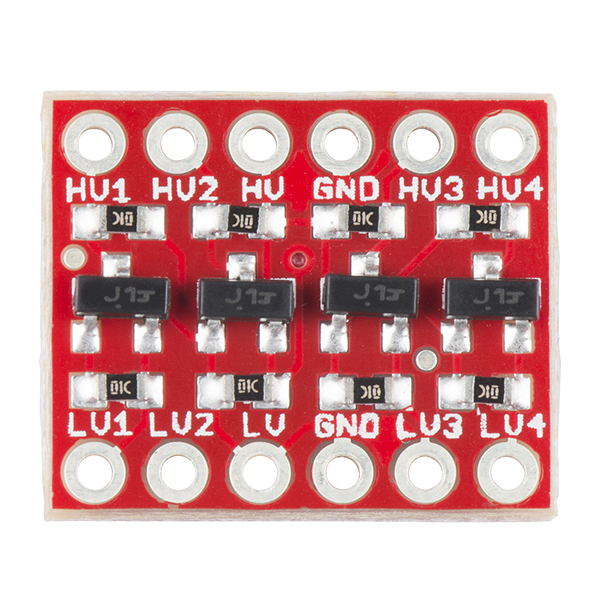

- Logic Level Converter:

- L298 Motor Driver (1):

- Gears:

- Combination of LM317 and potentiometer for voltage regulation:

- Castor Wheels (3):
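For the LM317 and potentiometer combination listed above, the output voltage follows the standard LM317 relation `Vout = Vref * (1 + R2/R1) + Iadj * R2`, with `Vref` around 1.25 V. The sketch below only illustrates that relation; the resistor values and the 6 V target (chosen to match the servo rating) are assumptions, not values taken from the bot's actual circuit.

```python
def lm317_output_voltage(r1_ohms, r2_ohms, v_ref=1.25, i_adj=50e-6):
    """Output voltage of an LM317 with R1 between OUT and ADJ
    and R2 (the potentiometer) between ADJ and ground."""
    return v_ref * (1 + r2_ohms / r1_ohms) + i_adj * r2_ohms


def potentiometer_setting(v_out, r1_ohms, v_ref=1.25):
    """Approximate R2 needed for a target output, ignoring the small
    adjust-pin current term."""
    return r1_ohms * (v_out / v_ref - 1)


if __name__ == "__main__":
    r1 = 240.0  # assumed value, a common choice for R1
    r2 = potentiometer_setting(6.0, r1)  # assumed 6 V target
    print(f"R2 = {r2:.0f} ohm, Vout = {lm317_output_voltage(r1, r2):.2f} V")
```

The adjust-pin current adds only a few tens of millivolts, so in practice the potentiometer is trimmed while measuring the output.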

Description of Mechanical Part:

The face of the bot is built with a 3D printer, and the other parts are made of plyboard and rod.

Description of the code is as follows:

The code is divided into 3 parts:

- Sound - Kanish

- Camera - Anant Singh

- Motion - Anand Kumar

Explanation

The sound part is divided into 2 parts. The first is to get the sentiment of the sound input and act on it. For this, we use the Google API to convert the sound into text. After that, we use the Google Translate API to translate the input into English text. Then, using the 'IBM Watson Tone Analyzer', we get the sentiment. We implemented this in the file `tone.py` inside the `speech` folder.

The second is to locate the sound source. We first find the time gap between two sound inputs (multiplying it by the speed of sound gives the distance gap). Together with the amplitude ratio (amplitude is inversely proportional to distance), this can give the angle of the sound source with respect to the bot. It is implemented in `code2.py` inside the `speech` folder.
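The time-gap idea described above can be sketched as follows. This is not the contents of `code2.py`; it is a minimal illustration that assumes two microphones a known distance apart, a far-away source, and already filtered samples. It uses only the time gap, not the amplitude ratio. The function names, the 343 m/s speed of sound, and the microphone spacing are all assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at room temperature (assumed)


def estimate_delay(left, right, sample_rate, max_lag):
    """Delay in seconds of `right` relative to `left`, found by
    brute-force cross-correlation over lags in [-max_lag, max_lag]."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(len(left)):
            j = i + lag
            if 0 <= j < len(right):
                score += left[i] * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate


def angle_from_delay(delay_s, mic_spacing_m):
    """Angle in degrees from straight ahead; positive means the sound
    reached the left microphone first."""
    path_difference = delay_s * SPEED_OF_SOUND  # the "distance gap"
    ratio = max(-1.0, min(1.0, path_difference / mic_spacing_m))
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    # Toy signals: the same click arrives 2 samples later on the right.
    left = [0, 0, 1, 0, 0, 0, 0, 0]
    right = [0, 0, 0, 0, 1, 0, 0, 0]
    delay = estimate_delay(left, right, sample_rate=48000, max_lag=3)
    print(angle_from_delay(delay, mic_spacing_m=0.10))
```

The ratio is clamped to the range -1 to 1 because noise or timing jitter can produce a path difference larger than the microphone spacing, which would otherwise make `asin` fail.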

Camera - The camera used in the bot is a picam. It takes pictures of the person, which are sent to the Sightcorp face API to get the person's emotion.
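Once an emotion result comes back, the bot needs a single label to react to. The sketch below shows one possible way to pick it. The score dictionary is a hypothetical, simplified shape and not the actual Sightcorp response format; the `dominant_emotion` helper and its threshold are likewise assumptions.

```python
def dominant_emotion(scores, min_confidence=0.5):
    """Return the highest-scoring emotion label, or "neutral" when
    nothing is confident enough (or no face was scored)."""
    if not scores:
        return "neutral"
    label, value = max(scores.items(), key=lambda item: item[1])
    return label if value >= min_confidence else "neutral"


if __name__ == "__main__":
    # Hypothetical scores in the range 0..1 for one detected face.
    print(dominant_emotion({"happiness": 0.82, "sadness": 0.05, "anger": 0.02}))
```

Falling back to "neutral" keeps the bot from reacting to weak or ambiguous readings.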

Motion - The face and the body of the bot move according to the environment. The face uses 3 servo motors to show the bot's reaction, and the body uses 2 stepper motors to walk.
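As an illustration of how a reaction could be turned into servo commands, the sketch below maps an emotion label to three servo angles and converts each angle to a PWM duty cycle. The pose table, the 1 to 2 ms pulse range, and the 20 ms period are assumptions typical of hobby servos, not values measured on the bot.

```python
# Hypothetical face poses: one angle in degrees per face servo.
POSES = {
    "neutral": (90, 90, 90),
    "happiness": (90, 120, 60),
    "sadness": (90, 60, 120),
}


def angle_to_duty_cycle(angle_deg, min_pulse_ms=1.0, max_pulse_ms=2.0,
                        period_ms=20.0):
    """Convert a servo angle (0..180 degrees) to a duty cycle in percent."""
    angle = max(0.0, min(180.0, angle_deg))
    pulse_ms = min_pulse_ms + (max_pulse_ms - min_pulse_ms) * angle / 180.0
    return 100.0 * pulse_ms / period_ms


def pose_duty_cycles(emotion):
    """Duty cycles for the three face servos; unknown labels fall back
    to the neutral pose."""
    angles = POSES.get(emotion, POSES["neutral"])
    return [angle_to_duty_cycle(a) for a in angles]


if __name__ == "__main__":
    print(pose_duty_cycles("happiness"))
```

On a Raspberry Pi, values like these could be passed to a 50 Hz PWM output, for example through `ChangeDutyCycle` in RPi.GPIO. The real pulse limits differ between servo models and should be calibrated.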

We can connect this bot to a mobile phone, so that the user is notified if the bot finds something unusual happening at their place.

There are many issues with the mechanical model; for example, the neck motor is not perfectly coupled. Because of this, we ran into a lot of problems during the exhibition: the bot was localizing the sound source, but the neck was not rotating according to that value.

The bot can be used as a music player, for home automation, and for indoor security, as it can take videos and pictures, understand the environment, and act accordingly.

- Shivam Srivastava [Models And Robotics Section Secretary 2017-2018.]