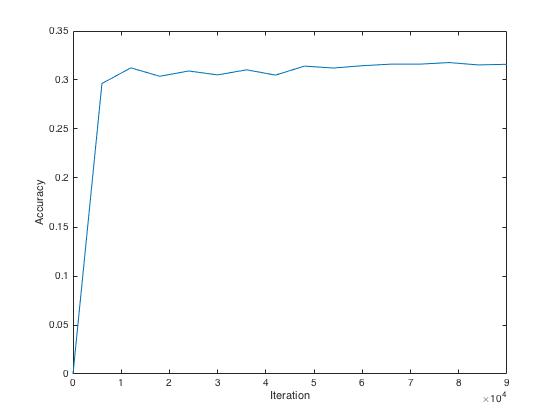

The accuracy is weird #3

Comments

There is no `image_model` folder. Thanks.

Thanks for the reply. By the way, is there code for attention visualization?

It seems like there is a bug in the model, since it almost always produces "yes" as the answer. Not sure what the bug is yet. Edit: remove the line that restricts the number of samples to 5000 in vqa prepro.
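For anyone who wants to confirm the "almost always yes" symptom on their own run, here is a minimal sketch that tallies how often each answer is predicted. It assumes the predictions are available as a list of dicts with an `answer` key (the usual VQA results layout). The function name `answer_distribution` and the sample data are hypothetical and not part of this repo.

```python
from collections import Counter


def answer_distribution(predictions):
    """Return (answer, fraction) pairs, most frequent answer first.

    `predictions` is assumed to be a list of dicts with an "answer" key.
    """
    counts = Counter(p["answer"] for p in predictions)
    total = sum(counts.values())
    return [(answer, n / total) for answer, n in counts.most_common()]


# Hypothetical predictions standing in for the model's real output.
sample_predictions = [
    {"question_id": 1, "answer": "yes"},
    {"question_id": 2, "answer": "yes"},
    {"question_id": 3, "answer": "yes"},
    {"question_id": 4, "answer": "2"},
]

for answer, fraction in answer_distribution(sample_predictions):
    print(f"{answer}: {fraction:.2%}")
```

If a single answer takes up nearly all of the predictions, that points to a training or preprocessing problem (such as the 5000-sample limit mentioned above) rather than an evaluation problem.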


Hi all, I ran this code with alternating attention and VGG features, and the output accuracy is weird.

Here is what it looks like:

It is supposed to be 60.5 according to the paper.

By the way, in the step for downloading the image model, I didn't see an `image_model` folder.