Add a client stream WriteSpan method in grpc storage plugin #3636

Comments


A couple of comments:

(1) Adding a new method to the existing interface is a breaking change, so our approach so far was to add different interfaces and expose the support for those via the Capabilities API. Following this methodology, you could add a new interface

(2) It is not clear to me how the error path will be communicated back to Jaeger with a streaming approach. You are showing

The error from the storage is a peculiar thing. We tend not to do much with it in the Jaeger collector because the processing is async (with a queue in between), so we cannot propagate the error back to the exporter. Even in the Kafka ingester I think we will drop the span if it returns an error from write, since we don't want to hold up the rest of the Kafka stream. So it is possible that your approach might work fine, but the error handling and dropping of spans will be happening lower in the stack. That might be OK, but it would make the drop metrics misleading, since they are produced at a higher level in the stack.
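To illustrate point (1), here is a minimal Go sketch of the "separate interface, detected at runtime" pattern. All names in it (`Span`, `SpanWriter`, `StreamingSpanWriter`, `WriteSpanStream`, `writeAll`) are hypothetical and are not Jaeger's actual API, and a plain type assertion stands in for the Capabilities call, which in the real plugin would be a remote query.

```go
package main

import "fmt"

// Span is a stand-in for Jaeger's span model (hypothetical, for illustration only).
type Span struct{ TraceID string }

// SpanWriter plays the role of the existing interface, which stays unchanged.
type SpanWriter interface {
	WriteSpan(s Span) error
}

// StreamingSpanWriter is a hypothetical, separate interface for the new capability.
type StreamingSpanWriter interface {
	WriteSpanStream(spans []Span) error
}

// unaryOnlyPlugin implements only the original interface.
type unaryOnlyPlugin struct{ written int }

func (p *unaryOnlyPlugin) WriteSpan(Span) error { p.written++; return nil }

// streamingPlugin implements both the original and the optional interface.
type streamingPlugin struct {
	unaryOnlyPlugin
	streamed int
}

func (p *streamingPlugin) WriteSpanStream(spans []Span) error {
	p.streamed += len(spans)
	return nil
}

// writeAll uses the streaming path when the plugin supports it and
// falls back to one WriteSpan call per span otherwise.
func writeAll(w SpanWriter, spans []Span) (string, error) {
	if sw, ok := w.(StreamingSpanWriter); ok {
		return "stream", sw.WriteSpanStream(spans)
	}
	for _, s := range spans {
		if err := w.WriteSpan(s); err != nil {
			return "unary", err
		}
	}
	return "unary", nil
}

func main() {
	spans := []Span{{TraceID: "a"}, {TraceID: "b"}}
	for _, w := range []SpanWriter{&unaryOnlyPlugin{}, &streamingPlugin{}} {
		path, err := writeAll(w, spans)
		fmt.Println(path, err)
	}
}
```

Existing plugins keep compiling and keep working through the fallback path, which is the point of not touching the original interface.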


I am also curious how any sort of backpressure could be done via gRPC stream. What would prevent the collector from just writing spans to the stream much faster than the storage plugin can process them?
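For intuition about what backpressure on a stream means, here is a small Go sketch in which a bounded channel stands in for a transport-level flow-control window. This is an analogy under stated assumptions, not how gRPC is implemented: the window size of 4 and the helper names `trySend` and `acceptedBeforeBlocking` are made up for illustration.

```go
package main

import "fmt"

// trySend attempts a non-blocking send. A false result means a real
// (blocking) sender would have had to wait for the receiver.
func trySend(ch chan<- int, v int) bool {
	select {
	case ch <- v:
		return true
	default:
		return false
	}
}

// acceptedBeforeBlocking reports how many of n items fit into the window
// before it fills up, assuming nothing is being consumed meanwhile.
func acceptedBeforeBlocking(ch chan<- int, n int) int {
	accepted := 0
	for i := 0; i < n; i++ {
		if !trySend(ch, i) {
			break
		}
		accepted++
	}
	return accepted
}

func main() {
	window := make(chan int, 4) // hypothetical window of 4 in-flight items

	fmt.Println(acceptedBeforeBlocking(window, 10)) // prints 4: the window is full

	<-window                         // the receiver processes one item
	fmt.Println(trySend(window, 99)) // prints true: room for exactly one more
}
```

The writer can only run ahead of the reader by the size of the window; once it is full, every further write has to wait until the reader consumes something.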


@yurishkuro hi Yuri

gRPC stream's flow control is based on http2 flow control, so there will be backpressure if the server

my idea here is that when using the grpc plugin, the

Hi, thanks for this outstanding project. I have a performance improvement suggestion for the grpc storage plugin.

Requirement - what kind of business use case are you trying to solve?

When saving traces with the jaeger-clickhouse storage plugin, I found that the all-in-one process's CPU utilization is relatively high, while the write throughput is not high enough. Profiling showed that gRPC communication accounts for a relatively large share of CPU time, and this can be optimized.

Problem - what in Jaeger blocks you from solving the requirement?

In the gRPC service definition of Jaeger's gRPC storage plugin, the `WriteSpan` method is a unary RPC method.

Proposal - what do you suggest to solve the problem or improve the existing situation?

Write to storage with a streaming RPC. Specifically, we may add a client-streaming method `WriteSpanStreamingly`.

In my test, using a client-streaming RPC instead of a unary RPC, CPU utilization decreased by 35% under the same throughput pressure, and the maximum throughput increased by 76% under the same hardware and software configuration. Below is the detailed data.
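To make the unary versus client-stream distinction concrete, here is a self-contained Go sketch that counts message exchanges against an in-memory fake. It does not use gRPC at all: `fakeStorage`, `clientStream`, `writeUnary` and `writeStream` are hypothetical names, and the `Send` / `CloseAndRecv` pair only mirrors the shape that grpc-go generates for client-streaming calls. It shows the difference in call pattern only and says nothing about the measured CPU numbers.

```go
package main

import "fmt"

// Span is a stand-in for a span message (hypothetical).
type Span struct{ ID int }

// fakeStorage counts the spans it received and the responses it sent back.
type fakeStorage struct {
	spans     int
	responses int
}

// WriteSpan models a unary call: one request and one response per span.
func (s *fakeStorage) WriteSpan(Span) error {
	s.spans++
	s.responses++
	return nil
}

// clientStream models a client-streaming call: many requests, one response.
type clientStream struct{ storage *fakeStorage }

func (s *fakeStorage) OpenStream() *clientStream { return &clientStream{storage: s} }

// Send delivers one span without waiting for a per-span response.
func (c *clientStream) Send(Span) error {
	c.storage.spans++
	return nil
}

// CloseAndRecv ends the stream and returns the single final result.
func (c *clientStream) CloseAndRecv() error {
	c.storage.responses++
	return nil
}

func writeUnary(s *fakeStorage, spans []Span) error {
	for _, sp := range spans {
		if err := s.WriteSpan(sp); err != nil {
			return err
		}
	}
	return nil
}

func writeStream(s *fakeStorage, spans []Span) error {
	stream := s.OpenStream()
	for _, sp := range spans {
		if err := stream.Send(sp); err != nil {
			return err
		}
	}
	// Any storage error would only surface here, at the end of the stream.
	return stream.CloseAndRecv()
}

func main() {
	spans := make([]Span, 1000)

	unary, streaming := &fakeStorage{}, &fakeStorage{}
	_ = writeUnary(unary, spans)
	_ = writeStream(streaming, spans)

	fmt.Println(unary.spans, unary.responses)         // 1000 1000
	fmt.Println(streaming.spans, streaming.responses) // 1000 1
}
```

Note that in this shape the write result is reported once per stream rather than once per span, which is where the error-path question raised in the comments comes from.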

test result

environment

data summary

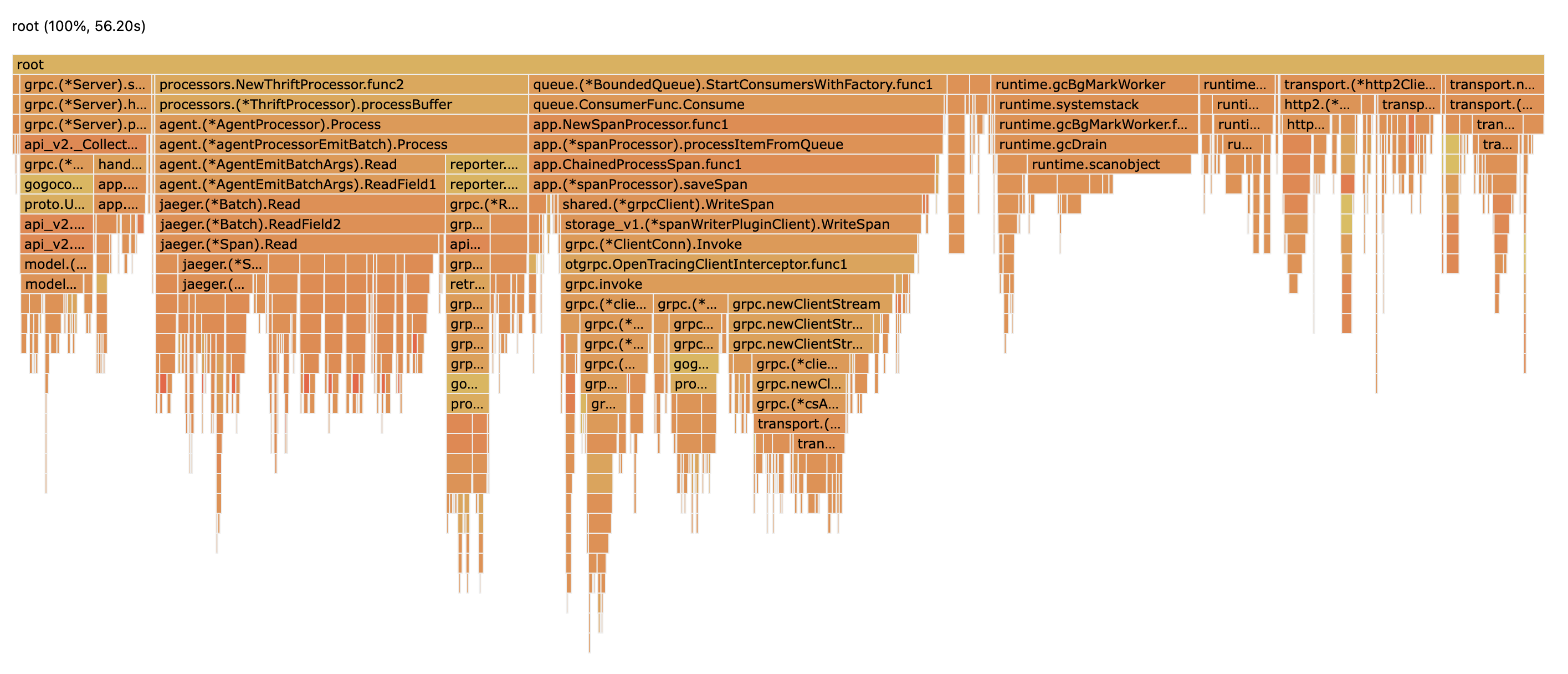

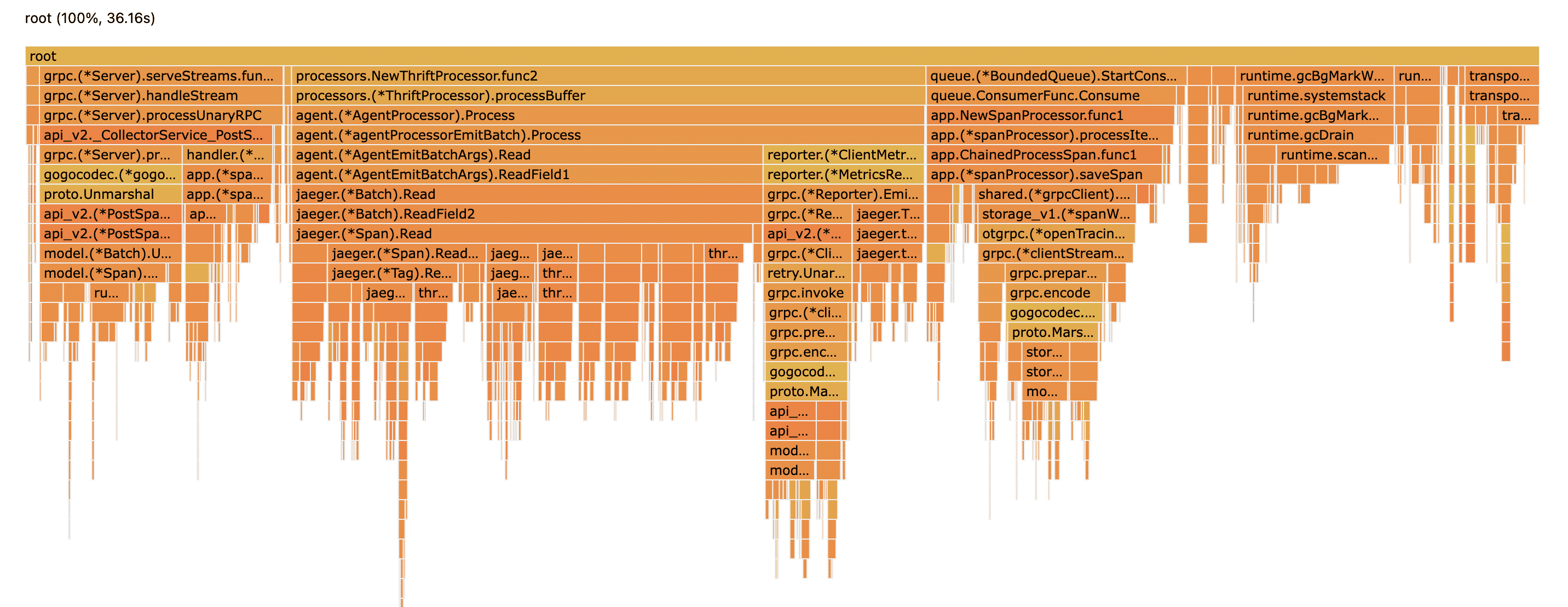

pprof flame graph

unary

stream

Any open questions to address

If there is no problem, I am willing to open a PR to complete it.
