-

Notifications

You must be signed in to change notification settings - Fork 283

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Investigate ways to improve basic primitive speed #198

Comments

|

See #199 I think rust-lang/stdarch#666 also affects addition and subtraction, so we end up with non-optimal assembly: |

|

Hmm, although this (adcx, adox etc) should affect mul due to requiring two carry chains, in theory, llvm can deal with single carry chains (as with adc). However, there are many questionable instructions here, such as I may also investigate if it is worth implementing cmp_less and cmp_greater functions to reduce the generality bloat required to define cmp. |

|

In that case, maybe we can just directly use the intrinsic? https://doc.rust-lang.org/core/arch/x86_64/fn._addcarryx_u64.html |

|

Possibly. However, since we are already using an assembly framework, it may pay to have greater control over the assembly that is produced. For instance for |

|

I think I will revamp the assembly framework so there are less weird artifacts from the time I went a little crazy with the proc macro stuff and trying to have a DSL syntax. Overall, I think the framework is an ok alternative to generating .S, but now it's rather chaotic and ad hoc. |

|

For sub_noborrow, we have https://doc.rust-lang.org/core/arch/x86_64/fn._subborrow_u64.html |

|

Hmm fair enough. I guess it would be worth investigating both options. |

|

I get similar results for addition: pub fn add_nocarry2(a: &mut [u64; 4], b: &[u64; 4]) -> bool {

let mut carry = 0;

for i in 0..4 {

unsafe {

carry = core::arch::x86_64::_addcarry_u64(carry, a[i], b[i], &mut a[i]);

}

}

carry != 0

}produces this: |

|

hmm, did you do this with unroll? If not, it's nice that the loops are unrolled by llvm in that case. I think it might not have worked for the mul/square case as the loops were nested. |

|

This is without unroll: https://godbolt.org/z/zv5435 |

|

Opened a PR for this: #202 |

|

With new benchmarks using intrinsics, fq::sub_assign and fq::add_assign are blazingly fast (~1.1-1.2ns), whereas fq::double is still pitifully slow (6.3ns). I have feature gated the use of intrinsics behind Now I will focus attention on double. |

This is ill-conceived as comparing greater than in a limb can also help shortcut a less than comparison. So current impl is optimal imo. |

|

Here are the changes to Scaled to the https://hackmd.io/@zkteam/eccbench results, our full pairing would take 1.03ms, which is better but still can be improved via algorithmic improvements. |

|

Unfortunately, mul2 using self addition results in ugly assembly code, hence I think we need to resort to using assembly |

|

Actually, here is a comparison between Fp add_assign and double's assemblies: double_in_place: add_assign They are virtually identical, but there is a discrepancy in their benchmarks. Notice however that there is already inefficiency in moving data to and from cache/registers. This furthers the conviction that one should move towards a full .S style ASM system, and convert more functions into pure ASM. |

|

I've decided to stop trusting the benchmarks for particular field/biginteger methods, and trust more the overall benchmarks for big functions, there seems to be some weird discrepancy, and I can't pinpoint the source. |

|

Here are some comparisons with gurvy on my Ryzen 4800H (4.2GHz boost clock): So it appears we are not too far away as a matter of fact, and the gap is at most 10%. In fact, some of these results seem to suggest that the benchmarks here https://hackmd.io/@zkteam/eccbench are made erroneously and may have used the wrong timings (extended jacobian rather than jacobian repr). (this fact has now been confirmed) However, we are still lagging significantly in G2 timings. This is at least partly because Fp towers are still laggard. |

|

Something worth investigating is why is there such a high discrepancy between Maybe inlining isn't functioning properly here |

|

Some comments:

You can benchmark Constantine the following way, assuming you have Nim 1.4.2 installed. git clone https://github.com/mratsim/constantine

cd constantine

nimble bench_fp_clang

nimble bench_fp2_clang

nimble bench_fp12_clang

nimble bench_ec_g1_clang

nimble bench_ec_g2_clang

nimble bench_pairing_bls12_381_clangAt one point I will add a per curve recap as well. There will be inline assembly generated in the nimcache folder

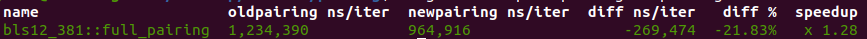

In my case I had a significant issue with Nim parameter passing with concepts, after upstream fix nim-lang/Nim#16897 or dropping concepts altogether (so that I don't need to wait for a new compiler, mratsim/constantine#153) I improved pairing perf by 28%. |

|

btw @mratsim this is a great resource, thanks! https://github.com/mratsim/constantine/blob/master/docs/optimizations.md |

With some simple changes converting biginteger primitive iter ops to their unrolled counterparts, one obtains some decent results.

Hence, the hypothesis is that implementing assembly for these functions could improve these results further.

Here are the results (with some anomalies I believe due to overly aggressive optimisations in the benchmark code):

My guess is that currently, gurvy is still not as good as mcl were mcl to improve its field mul and squaring.

This is certainly interesting and I don't have enough knowledge to say how one can obtain optimisations to the point of MCL's speed, since the improvements above do not account for the full difference in speeds.

Further, our field mul and squaring is more or less on par with gurvy's (however, they now have an update, which is apparently faster than before, but I have not seen a benchmark showing how much faster).

However, apart from the above changes, I will leave investigating further low-level optimisations to someone else who may be interested.

One interesting observation is that with 11 M/S, if the cost of a G1 mixed add were truly dominated by M/S, at 28ns per M/S, the time required would be ~ 300ns, however, this is clearly not the case, and shows that there is something wrong with the additions, subtractions and doublings, of which there are many.

In theory, they should cost [1/(2 * limbs), 1/limbs] as much as M/S. They are approximately as dense as M/S, so their cost should be, according to estimation, ~1/8 for 6 limbs, leading to a total time of 340ns. But due to the inefficient implementation, their total cost is > 1/4 for 6 limbs, leading to a total time of 415ns. That's because presently, the costs are in [1/limbs, 2/limbs] instead.

A timing of 340ns (relative to my processor speed) would put us as a contender for top place alongside mcl and constantine for this particular function.

Thus, it is my view that much of the discrepancy with other libraries for group ops can be accounted for with more efficient additive and mul by const ops.

The text was updated successfully, but these errors were encountered: