forked from databricks-industry-solutions/hls-tcga

00-data-download.py

# Databricks notebook source

# MAGIC %md

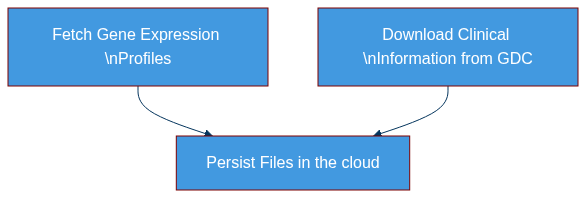

# MAGIC # Download Data from the Genomic Data Commons (GDC)

# MAGIC

# MAGIC In this notebook, we will:

# MAGIC

# MAGIC - Download clinical information from GDC.

# MAGIC - Fetch the corresponding gene expression profiles.

# MAGIC - Persist the files in a specified cloud path for future access.

# MAGIC

# MAGIC [](https://mermaid.live/edit#pako:eNplkk1rwzAMhv-K8Ai5dNBtLVtzGDRfZYdBYWWXegc3lleDYwfbYS2l_31OQteU-WDL0vNiWdKJVIYjSUgUnagGkFr6BHoTIPZ7rDFOIN4xh_Fk7P1kVrKdQhf_4SHUWFkze8yMMrbT3c0eF4tiepFeiQ0e_JUSQvxHUmM52iv0nE3DGnFKaryGp7On-TwfhR1WRvObbF6W2bIsR4xH6-UNki6LhzKLB-LcHWE7RxHV35Y1e9ikVKfb3PxoZRiHLCQhK6aAUv2mhbE189JoENbUsMqzL6qzbYm-2sMKNUJxaCw61yFBsbZGyFDDjoL7-1fIt2u0TjoPZecP7YBQbaiUaXmA0gGimkxIjeEtyUPr-vJT0reFkiSYHAVrlackpB7QtuHMY8GlN5Yk3rY4Iaz15uOoq8t9YHLJwjdrkgimXPBir3kfRqSflPMvKLewvw)

# COMMAND ----------

# MAGIC %md

# MAGIC ## 0. Initial configurations

# COMMAND ----------

# DBTITLE 1,make function definitions accessible

# MAGIC %run ./util/configurations

# COMMAND ----------

FORCE_DOWNLOAD = False  # Set to True to re-download even if the files already exist

# COMMAND ----------

# MAGIC %md

# MAGIC ## 1. Download data from GDC

# COMMAND ----------

# DBTITLE 1,download files list

import logging
import os

import pandas as pd

# Fields requested from the GDC files endpoint
file_fields = [
    "access",
    "data_format",
    "data_type",
    "file_id",
    "cases.project.project_id",
    "data_category",
    "experimental_strategy",
    "file_name",
    "file_size",
    "type",
    "cases.case_id",
]

# Keep only open-access TCGA gene expression quantification files in TSV format
files_filters = [
    ('data_format', 'in', ['TSV']),
    ('data_type', 'in', ['Gene Expression Quantification']),
    ('cases.project.program.name', 'in', ['TCGA']),
    ('access', 'in', ['open']),
]

path = f"{STAGING_PATH}/expressions_info.tsv"
_flag = not os.path.isfile(path) or FORCE_DOWNLOAD

if _flag:
    logging.info(f'Downloading expressions_info.tsv to {path}')
    download_table(files_endpt, file_fields, path, size=20000, filters=files_filters)
else:
    logging.info(f'file {path} already exists')

files_list_pdf = pd.read_csv(path, sep='\t')
print(f'loaded {files_list_pdf.shape[0]} records')
files_list_pdf.head()
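
# COMMAND ----------

# MAGIC %md
# MAGIC `download_table` is defined in `./util/configurations` and is not shown in this notebook. As a rough sketch only, the `(field, op, values)` tuples above could be translated into the nested `op`/`content` JSON that the GDC API expects in its `filters` parameter. The helper names `build_gdc_filters` and `build_query_params` are hypothetical and are not taken from this repository; the actual helper may work differently.

# COMMAND ----------

```python
import json


def build_gdc_filters(filters):
    """Translate (field, op, values) tuples into a GDC-style filter dict.

    Hypothetical helper for illustration; not the repository's implementation.
    """
    clauses = [
        {"op": op, "content": {"field": field, "value": values}}
        for field, op, values in filters
    ]
    # Several conditions are combined under a single top-level "and"
    return {"op": "and", "content": clauses}


def build_query_params(fields, filters, size):
    """Assemble query parameters for a tabular (TSV) request."""
    return {
        "filters": json.dumps(build_gdc_filters(filters)),
        "fields": ",".join(fields),
        "format": "TSV",
        "size": str(size),
    }


# Small example with two of the filters used in this notebook
example_filters = [
    ("data_format", "in", ["TSV"]),
    ("access", "in", ["open"]),
]
params = build_query_params(["file_id", "file_name"], example_filters, size=10)
print(params["fields"])
print(params["filters"])
```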

# COMMAND ----------

# MAGIC %md

# MAGIC Next, we use the list of files to download the expression profiles. Note that this step downloads ~11,000 files and can take some time, even when running concurrently on 32 cores.

# COMMAND ----------

# DBTITLE 1,download expressions

path = EXPRESSION_FILES_PATH
# Download if the target directory is missing or empty, or if a re-download is forced
_flag = not os.path.isdir(path) or not os.listdir(path) or FORCE_DOWNLOAD
uuids = files_list_pdf.file_id.to_list()

if _flag:
    logging.info(f'Downloading expression files to {path}')
    download_expressions(path, uuids)
else:
    logging.info('expression files already downloaded.')
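
# COMMAND ----------

# MAGIC %md
# MAGIC `download_expressions` is also defined in `./util/configurations`. The sketch below shows one way that many per-file downloads could be run concurrently with a thread pool while keeping track of failures. It is an assumption about the general approach, not the repository's implementation: `download_all` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a real per-file download call.

# COMMAND ----------

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_all(uuids, fetch_one, max_workers=32):
    """Run fetch_one(uuid) for every uuid concurrently.

    Returns (succeeded, failed), where failed maps each uuid to its exception.
    """
    succeeded, failed = [], {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch_one, uuid): uuid for uuid in uuids}
        for future in as_completed(futures):
            uuid = futures[future]
            try:
                future.result()  # re-raises any exception from fetch_one
                succeeded.append(uuid)
            except Exception as exc:
                failed[uuid] = exc
    return succeeded, failed


def fake_fetch(uuid):
    """Placeholder for a real download; fails for one made-up id."""
    if uuid == "bad":
        raise IOError("simulated failure")


ok, errors = download_all(["a", "b", "bad"], fake_fetch, max_workers=4)
print(sorted(ok), list(errors))
```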

# COMMAND ----------

# DBTITLE 1,take a look at a sample expression file

sample_file=dbutils.fs.ls(f"{EXPRESSION_FILES_PATH}")[0].path

df=spark.read.csv(sample_file,sep='\t',comment="#",header=True)

print(f"n_records in {sample_file} is {df.count()}")

display(df)

# COMMAND ----------

# DBTITLE 1,download cases

demographic_fields = [
    "demographic.ethnicity",
    "demographic.gender",
    "demographic.race",
    "demographic.year_of_birth",
    "demographic.year_of_death",
]

diagnoses_fields = [
    "diagnoses.classification_of_tumor",
    "diagnoses.diagnosis_id",
    "diagnoses.primary_diagnosis",
    "diagnoses.tissue_or_organ_of_origin",
    "diagnoses.tumor_grade",
    "diagnoses.tumor_stage",
    "diagnoses.treatments.therapeutic_agents",
    "diagnoses.treatments.treatment_id",
    "diagnoses.treatments.updated_datetime",
]

exposures_fields = [
    "exposures.alcohol_history",
    "exposures.alcohol_intensity",
    "exposures.bmi",
    "exposures.cigarettes_per_day",
    "exposures.height",
    "exposures.updated_datetime",
    "exposures.weight",
    "exposures.years_smoked",
]

fields = ['case_id'] + demographic_fields + diagnoses_fields + exposures_fields

cases_filters = [
    ('cases.project.program.name', 'in', ['TCGA']),
]

path = f"{STAGING_PATH}/cases.tsv"
_flag = not os.path.isfile(path) or FORCE_DOWNLOAD

if _flag:
    logging.info(f'Downloading cases.tsv to {path}')
    download_table(cases_endpt, fields, path, size=100000, filters=cases_filters)
else:
    logging.info(f'file {path} already exists')

df = spark.read.csv(path, sep='\t', header=True)
print(f"n_records in cases is {df.count()}")
display(df)
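
# COMMAND ----------

# MAGIC %md
# MAGIC Each download cell in this notebook repeats the same check: download when the target is missing (or the directory is empty) or when `FORCE_DOWNLOAD` is set. A minimal sketch of how that check could be factored into one helper is shown below. The name `needs_download` is hypothetical and does not exist in this repository.

# COMMAND ----------

```python
import os
import tempfile


def needs_download(path, force=False):
    """Return True if path is missing, is an empty directory, or force is set."""
    if force:
        return True
    if os.path.isdir(path):
        return not os.listdir(path)
    return not os.path.isfile(path)


# Exercise the helper against a throwaway directory
with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "cases.tsv")
    print(needs_download(tmp))                 # empty directory
    print(needs_download(target))              # file does not exist yet
    open(target, "w").close()
    print(needs_download(target))              # file now exists
    print(needs_download(target, force=True))  # forced re-download
```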