SimTacLS: Toward a Platform for Simulation and Learning of Vision-based Tactile Sensing at Large Scale

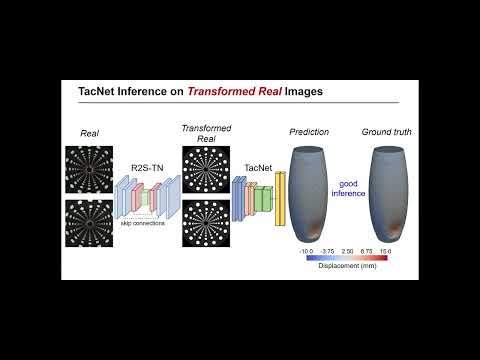

Open-source simulation tool and sim2real method for large-scale vision-based tactile (ViTac) sensing devices, based on a SOFA-GAZEBO-GAN pipeline.

A video demonstration is at:

The overview of the pipeline is at:

Run:

$ python simtacls_run_realtime.py

Please note that the following dependencies are required:

- pytorch

- opencv-python

- pyvista

- pyvistaqt
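Before launching the real-time script, it can help to confirm that the listed packages are importable. The following is a minimal sketch and not part of the repository: the helper name missing_modules is hypothetical, and the mapping from package names to import names (torch for pytorch, cv2 for opencv-python) is an assumption based on the usual import names of these packages.

```python
import importlib.util

# Package name -> import name. This mapping is an assumption based on the
# usual import names of these packages; it is not taken from the repository.
REQUIRED = {
    "pytorch": "torch",
    "opencv-python": "cv2",
    "pyvista": "pyvista",
    "pyvistaqt": "pyvistaqt",
}


def missing_modules(required=REQUIRED):
    """Return the package names whose import name cannot be located."""
    return [
        package
        for package, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing dependencies:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```

The check only locates the modules and does not import them, so it says nothing about GPU support or version compatibility.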

For the pre-trained R2S-TN and TacNet models, please contact quan-luu@jaist.ac.jp for more information.

The program was tested with a couple of fisheye-lens cameras (ELP USB2.0 Webcam Board, 2 Mega Pixels, 1080P, OV2710 CMOS Camera Module), which optionally provide up to 120 fps. Processing speed depends heavily on the available computing resources, e.g., the GPU (NVIDIA RTX8000, RTX3090, and GTX 1070Ti have been confirmed to be compatible).

- Move to Catkin workspace (ROS) working directory.

$ cd [home/username]/catkin_ws/src

Then build the catkin workspace:

$ catkin_make

Next, move to the home directory of the module to avoid unresolved reference paths:

$ cd [home/username]/catkin_ws/src/sofa_gazebo_interface

- Start Gazebo simulation environment for TacLink.

$ roslaunch vitaclink_gazebo vitaclink_world.launch

- Add a path for the Gazebo models (model URI), if necessary. This is helpful if the skin/marker states (.STL files) are not in the default Gazebo model directory.

$ nano ~/.bashrc

Then append the following commands to the end of the .bashrc file, assuming the skin/marker states are in the skin_state directory.

source /usr/share/gazebo/setup.sh

export GAZEBO_MODEL_PATH=[path/to/data]/skin_state:$GAZEBO_MODEL_PATH
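After opening a new terminal, the exported variable can be inspected to confirm that the skin_state directory is actually on the Gazebo model path. The snippet below is a minimal sketch under stated assumptions: the helper names model_path_entries and has_skin_state are hypothetical, and it only inspects the environment variable without querying Gazebo itself.

```python
import os


def model_path_entries(value):
    """Split a GAZEBO_MODEL_PATH-style string into its non-empty entries."""
    return [entry for entry in value.split(os.pathsep) if entry]


def has_skin_state(value, dirname="skin_state"):
    """Return True if any entry's last path component equals `dirname`."""
    return any(
        os.path.basename(os.path.normpath(entry)) == dirname
        for entry in model_path_entries(value)
    )


if __name__ == "__main__":
    current = os.environ.get("GAZEBO_MODEL_PATH", "")
    # Report each entry and whether it exists on disk.
    for entry in model_path_entries(current):
        status = "ok" if os.path.isdir(entry) else "missing"
        print(f"{status:8s}{entry}")
    if not has_skin_state(current):
        print("No skin_state directory found in GAZEBO_MODEL_PATH.")
```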

- Run the Python program (.py) that acquires the tactile image dataset.

- Single contact dataset: run the following Python program to start the acquisition process (single-touch data).

$ rosrun vitaclink_gazebo single_point_tactile_image_acquisition.py

- Multiple contact dataset:

The skin and marker state files (.STL) exported from SOFA should be named as follows:

skin{group:04}_{depth:02}.stl and marker{group:04}_{depth:02}.stl; e.g., skin0010_15.stl is the skin state of data group 10 and contact depth 15.

The output tactile images will be named {group}_{depth}.jpg. (For our demonstration, we have 500 contact groups, each consisting of 20 incremental contact depths.)

Run the following Python program to start the acquisition process (multi-touch data):

$ rosrun vitaclink_gazebo multiple_point_tactile_image_acquisition.py
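The naming convention above can be checked before starting a long acquisition run. The following is a minimal sketch, not code from the repository: all function names are hypothetical, the image name is assumed to use no zero padding (as the pattern {group}_{depth}.jpg is written), and the 1-based start index for groups and depths is an assumption that may need to be changed to match the SOFA export.

```python
import re

# Matches e.g. "skin0010_15.stl" or "marker0010_15.stl".
STATE_RE = re.compile(r"^(skin|marker)(\d{4})_(\d{2})\.stl$")


def state_filename(kind, group, depth):
    """Build a state file name, e.g. ("skin", 10, 15) -> "skin0010_15.stl"."""
    if kind not in ("skin", "marker"):
        raise ValueError(f"unknown kind: {kind!r}")
    return f"{kind}{group:04}_{depth:02}.stl"


def image_filename(group, depth):
    """Build the output image name; no zero padding is assumed."""
    return f"{group}_{depth}.jpg"


def parse_state_filename(name):
    """Return (kind, group, depth) for a valid name, else raise ValueError."""
    match = STATE_RE.match(name)
    if match is None:
        raise ValueError(f"not a valid state file name: {name!r}")
    kind, group, depth = match.groups()
    return kind, int(group), int(depth)


def expected_state_files(n_groups=500, n_depths=20, start=1):
    """List every skin/marker file name for the given dataset size.

    `start` is the first group/depth index (assumed to be 1 here).
    """
    return [
        state_filename(kind, group, depth)
        for group in range(start, start + n_groups)
        for depth in range(start, start + n_depths)
        for kind in ("skin", "marker")
    ]
```

Comparing the result of expected_state_files() against the contents of the skin_state directory is one way to spot missing or misnamed files; with the demonstration sizes (500 groups, 20 depths) the list contains 20000 names, one skin and one marker file per contact state.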

For TacNet training, run:

$ python tacnet_train_mutitouch_dataset.py

Note that the full simulation dataset is required to run this program. To obtain the full dataset, please contact the corresponding authors.

Distributed under the MIT License. See LICENSE for more information.

- Project Manager

- Ho Anh Van - van-ho[at]jaist.ac.jp

- Developers

- Nguyen Huu Nhan - nhnha[at]jaist.ac.jp

- Luu Khanh Quan - quan-luu[at]jaist.ac.jp

This work was supported in part by JSPS KAKENHI under Grant 18H01406 and JST Precursory Research for Embryonic Science and Technology PRESTO under Grant JPMJPR2038.

Since we are an academic lab, the software provided here is not as computationally robust or efficient as possible and is intended for research purposes only. There is no bug-free guarantee, and we do not have personnel devoted to regular maintenance.