
Getting started in Swift

This Quick Start guide will get you up and running with scanning as quickly as possible. All steps described in this guide are required for the integration.

This guide sets up basic machine-readable travel document (MRTD) and US Driver's License (USDL) scanning functionality. It closely follows the BlinkId-sample app. We highly recommend that you try running the sample app; it should compile and run both on your device and in the iOS Simulator.

The source code of the sample app can be used as a reference during the integration.

- If you wish to use version v1.4.0 or above, you need to install Git Large File Storage by running these commands:

```shell
brew install git-lfs
git lfs install
```

Be sure to restart your console after installing Git LFS.

- Project dependencies managed by CocoaPods are specified in a file called `Podfile`. Create this file in the same directory as your Xcode project (`.xcodeproj`) file.

- Copy and paste the following lines into the Podfile (replace `TargetName` with the name of your app target):

```ruby
platform :ios, '8.0'

target 'TargetName' do
  pod 'PPBlinkID', '~> 2.18.2'
end
```

- Install the dependencies in your project:

```shell
pod install
```

- From now on, be sure to always open the generated Xcode workspace (`.xcworkspace`) instead of the project file when building your project:

```shell
open <YourProjectName>.xcworkspace
```

Alternatively, you can integrate the SDK manually:

- Download the latest release (download the .zip or .tar.gz file whose name starts with BlinkID). DO NOT download the Source Code archive, as GitHub does not fully support Git LFS.

OR

Clone this git repository:

- If you wish to clone version v1.4.0 or above, you need to install Git Large File Storage by running these commands:

```shell
brew install git-lfs
git lfs install
```

Be sure to restart your console after installing Git LFS.

- To clone, run the following shell command:

```shell
git clone git@github.com:BlinkOCR/blinkocr-ios.git
```

- Copy MicroBlink.framework and MicroBlink.bundle to your project folder.

-

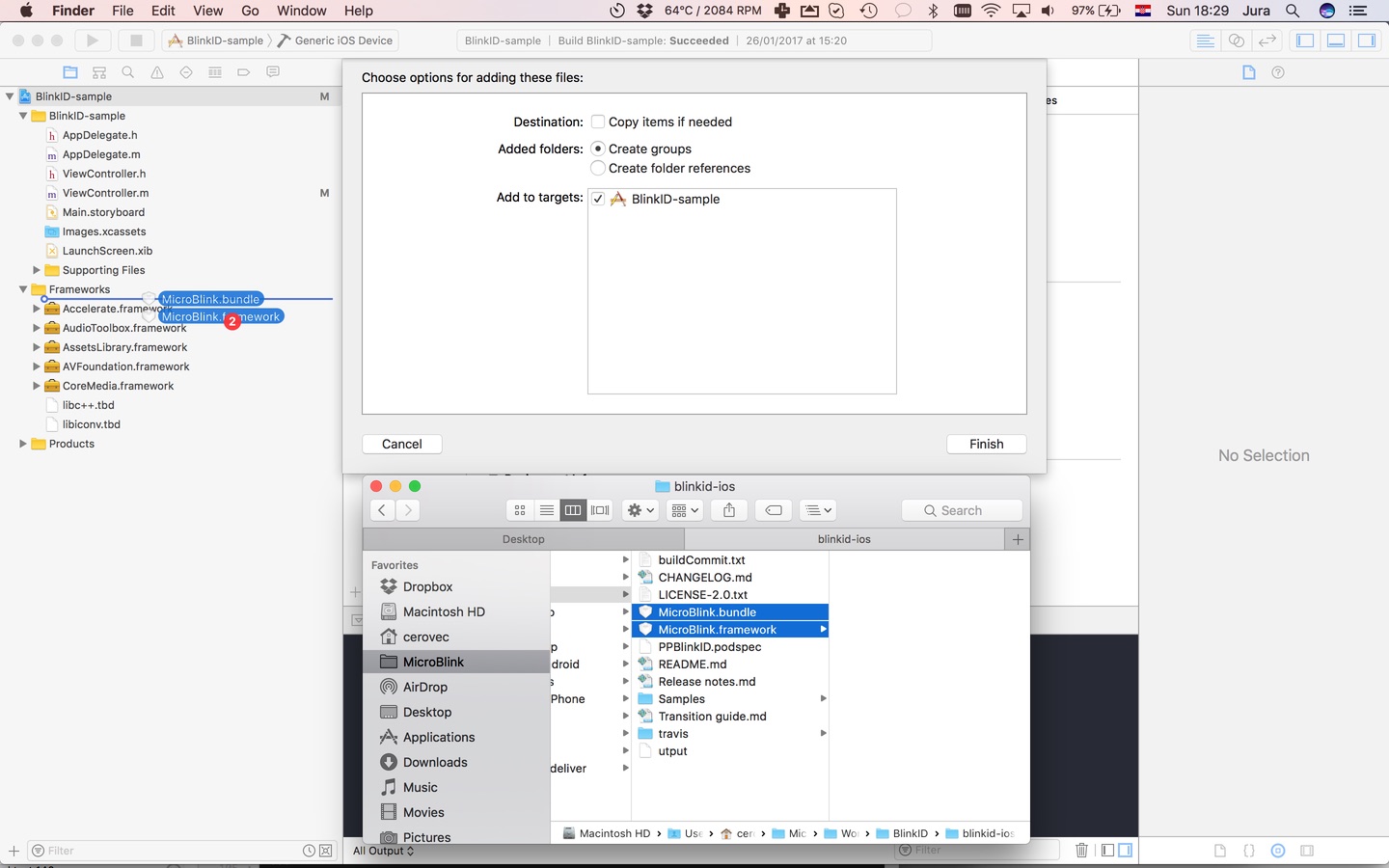

In your Xcode project, open the Project navigator. Drag MicroBlink.framework and MicroBlink.bundle files to your project, ideally in the Frameworks group, together with other frameworks you're using. When asked, choose "Create groups", instead of the "Create folder references" option.

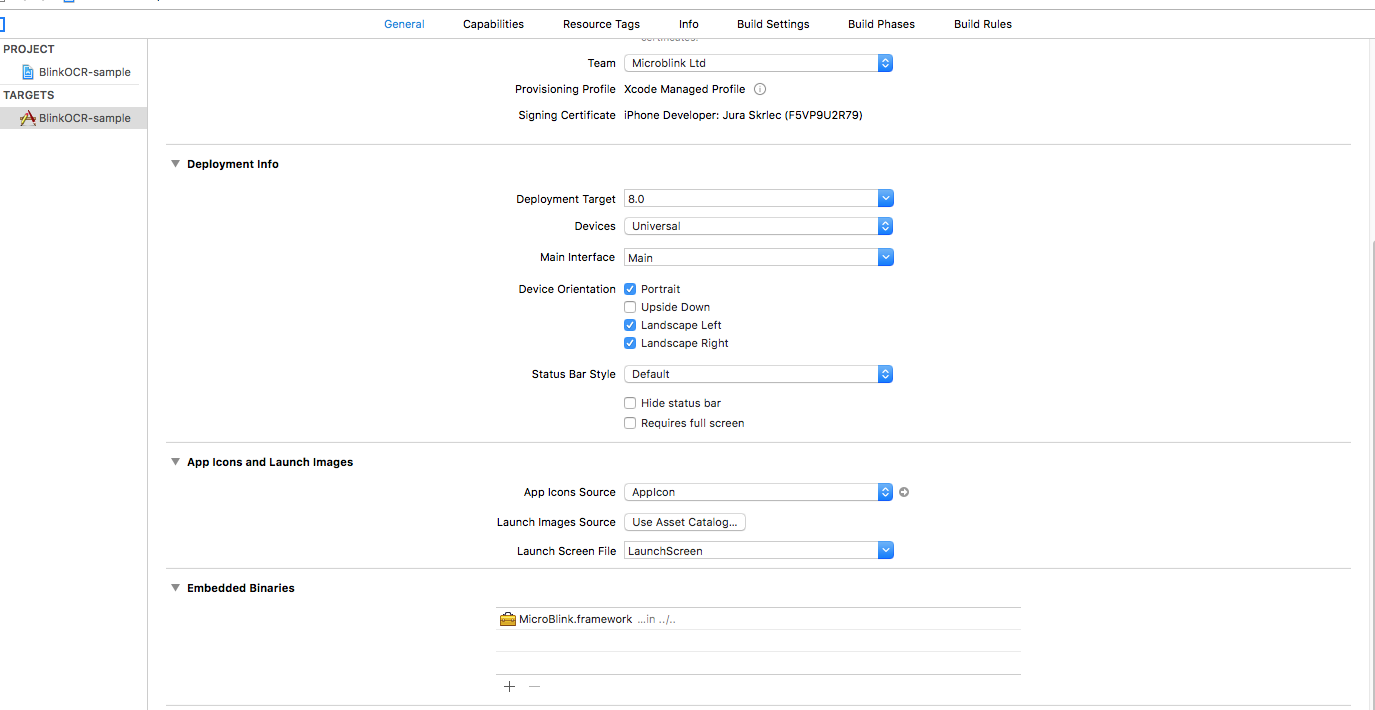

- Since Microblink.framework is a dynamic framework, you also need to add it to embedded binaries section in General settings of your target.

-

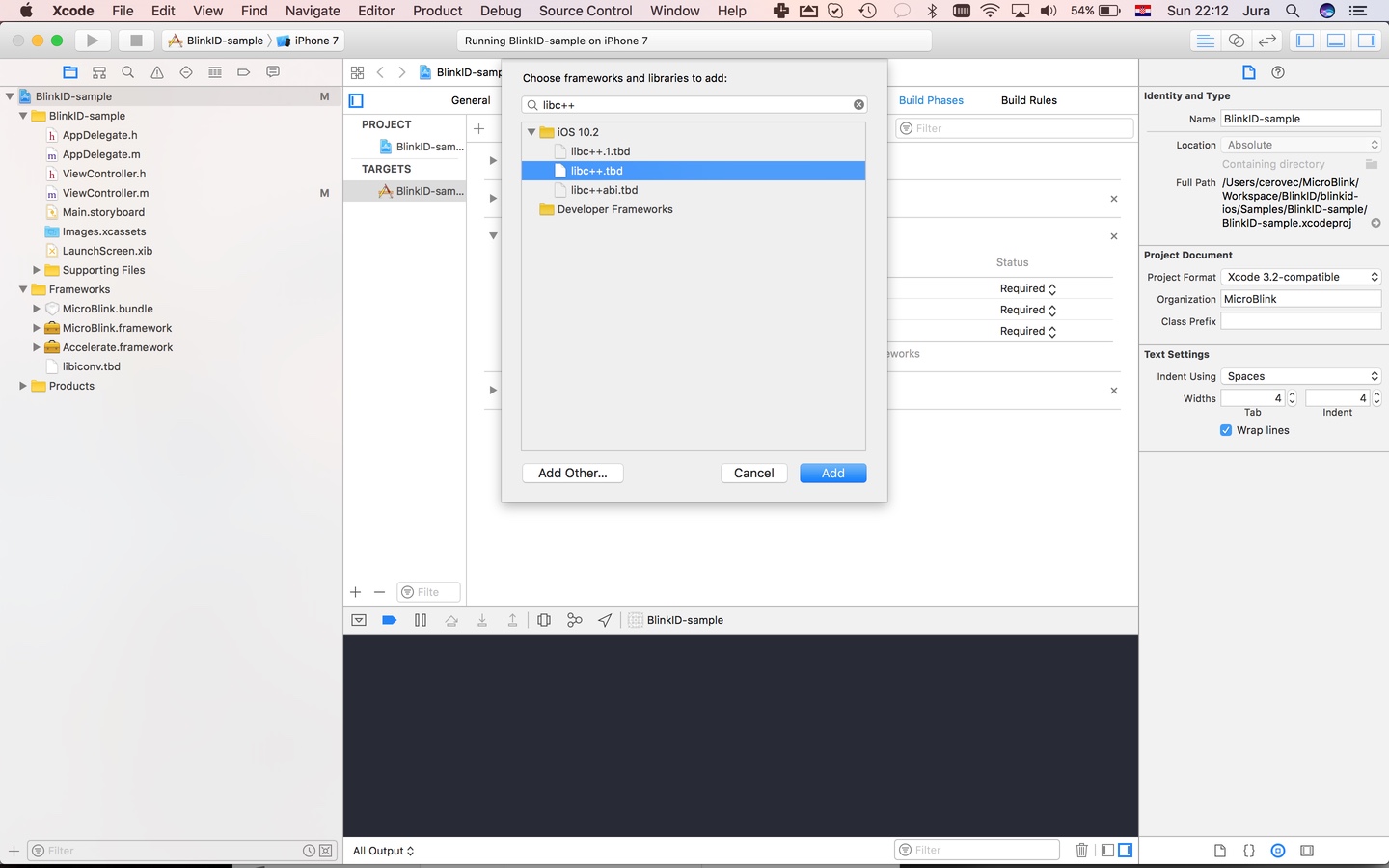

Include the additional frameworks and libraries into your project in the "Linked frameworks and libraries" section of your target settings.

- libc++.tbd

- libz.tbd

- libiconv.tbd

- Accelerate.framework

- AVFoundation.framework

- AudioToolbox.framework

- CoreMedia.framework
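If the project fails to link, or the framework binary is suspiciously small, it may be a Git LFS pointer file instead of the real framework. This happens when the repository was cloned without Git LFS installed, or when the Source Code archive was downloaded. The sketch below is one way to check; it assumes the binary lives at `MicroBlink.framework/MicroBlink` (the usual layout for an iOS framework, adjust the path to your checkout), and the helper names are hypothetical, not part of the SDK.

```swift
import Foundation

/// First line of every Git LFS pointer file, as defined by the Git LFS pointer spec.
let lfsPointerPrefix = "version https://git-lfs.github.com/spec/v1"

/// Hypothetical helper: returns true if `contents` looks like a Git LFS pointer
/// file rather than real binary data.
func isGitLFSPointer(_ contents: Data) -> Bool {
    // Pointer files are small UTF-8 text files. A real Mach-O binary is not valid
    // UTF-8, so decoding its first bytes fails and we return false.
    guard let text = String(data: contents.prefix(200), encoding: .utf8) else {
        return false
    }
    return text.hasPrefix(lfsPointerPrefix)
}

/// Hypothetical helper: checks the file at `path`, for example
/// "MicroBlink.framework/MicroBlink". Returns false if the file can't be read.
func frameworkBinaryIsPointer(atPath path: String) -> Bool {
    guard let data = FileManager.default.contents(atPath: path) else {
        return false
    }
    return isGitLFSPointer(data)
}
```

For example, `frameworkBinaryIsPointer(atPath: "MicroBlink.framework/MicroBlink")` returning `true` suggests installing Git LFS and fetching the files again.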

In each file in which you want to use the scanning functionality, add the import directive:

```swift
import MicroBlink
```

To initiate the scanning process, first decide where in your app you want to add scanning functionality. Usually, apps using the scanning library have a button which, when tapped, starts the scanning process. The initialization code is then placed in the touch handler for that button. Here, the initialization code is listed as it looks in a touch handler method.

```swift
func coordinatorWithError(error: NSErrorPointer) -> PPCameraCoordinator? {

    /** 0. Check if scanning is supported */
    if PPCameraCoordinator.isScanningUnsupported(for: PPCameraType.Back, error: error) {
        return nil
    }

    /** 1. Initialize the scanning settings */
    // Initialize the scanner settings object. This initializes the settings with all default values.
    let settings: PPSettings = PPSettings()

    /** 2. Set up the license key */
    // Visit www.microblink.com to get the license key for your app
    settings.licenseSettings.licenseKey = "<your license key here>"

    /**
     * 3. Set up what is being scanned. See detailed guides for specific use cases.
     * Remove undesired recognizers (added below) for optimal performance.
     */
    do { // Remove this if you're not using MRTD recognition
        // To perform MRTD (machine-readable travel document) recognition, initialize the MRTD recognizer settings
        let mrtdRecognizerSettings = PPMrtdRecognizerSettings()

        /** You can modify the properties of mrtdRecognizerSettings to suit your use case */

        // Tell the library to get the full image of the document. Setting this to true makes sense only if
        // settings.metadataSettings.dewarpedImage = true; otherwise it wastes CPU time.
        mrtdRecognizerSettings.dewarpFullDocument = false

        // Add the MRTD recognizer settings to the list of used recognizer settings
        settings.scanSettings.add(mrtdRecognizerSettings)
    }

    do { // Remove this if you're not using USDL recognition
        // To perform USDL (US Driver's License) recognition, initialize the USDL recognizer settings
        let usdlRecognizerSettings = PPUsdlRecognizerSettings()

        /** You can modify the properties of usdlRecognizerSettings to suit your use case */

        // Add the USDL recognizer settings to the list of used recognizer settings
        settings.scanSettings.add(usdlRecognizerSettings)
    }

    /** 4. Initialize the scanning coordinator object */
    return PPCameraCoordinator(settings: settings)
}

@IBAction func didTapScan(sender: AnyObject) {

    /** Instantiate the scanning coordinator */
    // Pass the address of a real NSError? variable. A nil NSErrorPointer has no
    // storage, so the reason scanning is unsupported could never be reported back.
    var error: NSError? = nil
    guard let coordinator = self.coordinatorWithError(error: &error) else {
        /** If scanning isn't supported, present an error */
        let messageString = error?.localizedDescription ?? ""
        let alert = UIAlertController(title: "Warning", message: messageString, preferredStyle: .alert)
        // Without an action, the alert would have no button to dismiss it
        alert.addAction(UIAlertAction(title: "OK", style: .default, handler: nil))
        self.present(alert, animated: true, completion: nil)
        return
    }

    /** Allocate and present the scanning view controller */
    let scanningViewController: UIViewController = PPViewControllerFactory.cameraViewControllerWithDelegate(self, coordinator: coordinator, error: nil)

    /** You can use other presentation methods as well */
    self.present(scanningViewController, animated: true, completion: nil)
}
```

In the previous step, you instantiated a PPScanningViewController object with a delegate object. This object gets notified of certain events in the scanning lifecycle; in this example, we set it to `self`. The delegate has to implement the PPScanningDelegate protocol. You can use the following default implementation of the protocol to get you started.

```swift
func scanningViewControllerUnauthorizedCamera(scanningViewController: UIViewController) {
    // Add any logic which handles the UI when the app user doesn't allow usage of the phone's camera
}

func scanningViewController(scanningViewController: UIViewController, didFindError error: NSError) {
    // Can be ignored. See the description of the method
}

func scanningViewControllerDidClose(scanningViewController: UIViewController) {
    // As the scanning view controller is presented full screen and modally, dismiss it
    self.dismiss(animated: true, completion: nil)
}

func scanningViewController(scanningViewController: UIViewController?, didOutputResults results: [PPRecognizerResult]) {
    // Here you process scanning results. Scanning results are given in the array of PPRecognizerResult objects.

    // First, pause scanning until we process all the results
    if let scanController = scanningViewController as? PPScanningViewController {
        scanController.pauseScanning()
    }

    var message: String = ""
    var title: String = ""

    // Collect data from the results
    for result in results {
        if let mrtdResult = result as? PPMrtdRecognizerResult {
            title = "MRTD"
            message = mrtdResult.description
        }
        if let usdlResult = result as? PPUsdlRecognizerResult {
            title = "USDL"
            message = usdlResult.description
        }
    }

    // Present an alert with the scanned results. UIAlertView is deprecated, so use
    // UIAlertController, presented on top of the scanning view controller.
    let alert = UIAlertController(title: title, message: message, preferredStyle: .alert)
    alert.addAction(UIAlertAction(title: "OK", style: .default) { _ in
        // Dismiss the scanning view controller once the user acknowledges the results
        self.dismiss(animated: true, completion: nil)
    })
    scanningViewController?.present(alert, animated: true, completion: nil)
}
```

With this in place, you've successfully integrated the scanning functionality into your app.
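The results handler overwrites `title` and `message` on every loop iteration, so when both the MRTD and USDL recognizers return a result, only the last one is shown. If you want to show all of them, one option is to collect the pieces and join them. The sketch below uses hypothetical stand-in classes (`RecognizerResult`, `MrtdResult`, `UsdlResult`, with a `summary` property) in place of `PPRecognizerResult` and its subclasses so it compiles on its own; in your app you would cast to the SDK classes and use their `description` instead.

```swift
// Hypothetical stand-ins for the SDK's result classes, so this sketch is self-contained.
class RecognizerResult {}

final class MrtdResult: RecognizerResult {
    let summary: String
    init(summary: String) { self.summary = summary }
}

final class UsdlResult: RecognizerResult {
    let summary: String
    init(summary: String) { self.summary = summary }
}

/// Builds an alert title and message that cover every result, not just the last one.
func summarize(_ results: [RecognizerResult]) -> (title: String, message: String) {
    var titles: [String] = []
    var messages: [String] = []
    for result in results {
        // Downcast with `as?` to find out which recognizer produced the result.
        if let mrtd = result as? MrtdResult {
            titles.append("MRTD")
            messages.append(mrtd.summary)
        } else if let usdl = result as? UsdlResult {
            titles.append("USDL")
            messages.append(usdl.summary)
        }
        // Results from other recognizers are ignored in this sketch.
    }
    return (titles.joined(separator: " + "), messages.joined(separator: "\n\n"))
}
```

The returned tuple can be passed straight to `UIAlertController(title:message:preferredStyle:)`.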

- Learn more about obtaining scanning results in general.

- Jump directly to using different recognizers:

  - Using the MRTD recognizer for scanning machine-readable travel documents like passports, visas and ID cards
  - Using the USDL recognizer for scanning and parsing US Driver's Licenses
  - Using the EUDL recognizer for scanning UK Driving Licenses
  - Using the MyKad recognizer for scanning Malaysian ID documents
  - Using the PDF417 recognizer for scanning PDF417 barcodes
  - Using the BarDecoder recognizer for Code 39 and Code 128 1D barcodes
  - Using the ZXing recognizer for all other 1D and 2D barcode types

- Learn more about customizing the camera UI.

- Learn about the Direct Processing API, which you can use to process UIImage objects directly, without using the SDK's camera management.

For additional help, contact us directly at help.microblink.com.
