"Your Azure credentials have not been set up or have expired, please run Connect-AzAccount to set up your Azure credentials" when passing Azure context to Start-Job #9448

Comments

|

I am unable to reproduce this, but I did experience this some time ago (maybe a year), but found a workaround, using This was mostly in an Azure Function App that uses runspaces, so your mileage may vary. I actually just yesterday wanted to write a blog post on this and found that I did not need to use I worked with runspaces the entire day and did not see this error once. Try the code below and see if that makes a difference.

$Global:ErrorActionPreference = 'Stop'

$DebugPreference = 'SilentlyContinue'

$AzContext = Get-AzContext

$Jobs = @()

$Jobs += Start-Job -ArgumentList $AzContext -ScriptBlock {

param($AzContext)

# $DebugPreference = 'Continue'

try {

$null = Get-AzVm -DefaultProfile $AzContext

}

catch {

$_

}

}

$Jobs += Start-Job -ArgumentList $AzContext -ScriptBlock {

param($AzContext)

# $DebugPreference = 'Continue'

try {

$null = Get-AzVm -DefaultProfile $AzContext

}

catch {

$_

}

}

$Jobs | Wait-Job | Receive-Job

|

@spaelling I actually am using -DefaultProfile (technically -AzContext, but that is an alias for -DefaultProfile) in the actual script that I observed this issue on and it still fails in the same way. I can consistently reproduce this issue both in my actual production script and in my example script above. My steps for reproduction are just a simpler way to illustrate what I'm doing in the actual script (which is using Set-AzVmExtension to join a VM to a domain).

EDIT: Looking at this again, since I am using -DefaultProfile in my actual script, it was an oversight leaving it out in my reproduction steps above. So much so, it's pointless passing in $AzContext if I'm not referencing it anywhere! :) I'm going to try reproducing this again using -DefaultProfile, but I suspect the results will be the same since I can reproduce the same issue in my actual production script (which already uses -DefaultProfile).

EDIT2: I've added -AzContext to the Get-AzVm commands in the reproduction steps above. |

I would assume that if Maybe someone has an idea on how to troubleshoot this. Can you reproduce on a vanilla VM (some Windows image from the Azure Marketplace)? |

|

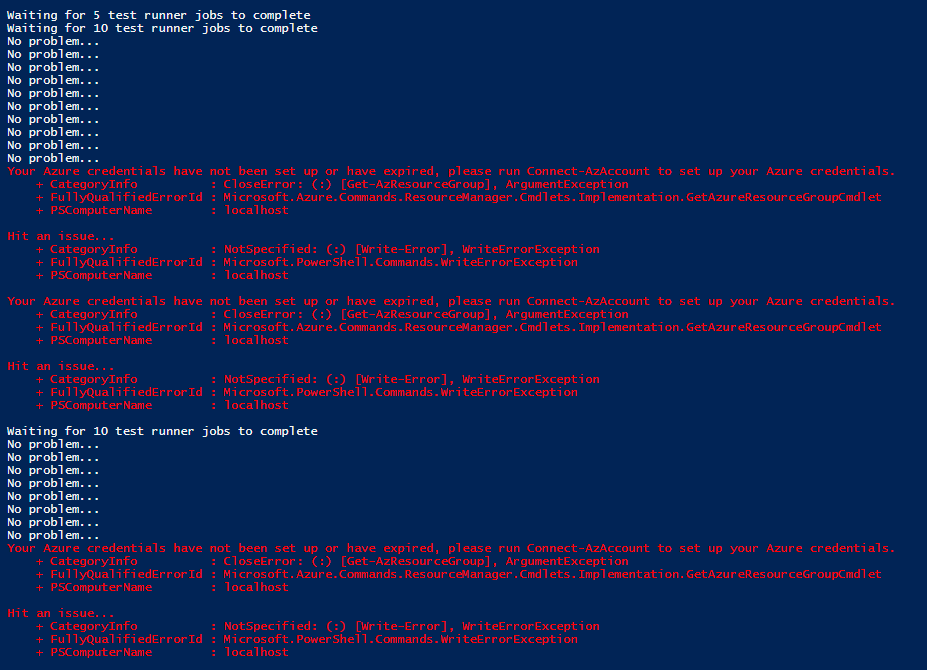

I have a repro for this I think... Here's my code: When I run that code - I get the following results: It basically means that there is some intermittent issue in retrieving the profile. I'm seeing this running Pester tests in parallel (with Start-Job) that rely on the Az module. Anytime I use "-AsJob" in a cmdlet I see intermittent failures. |

|

Can't seem to get my code formatted correctly in the prior comment, so attaching it here... |

|

Put the code within

$scriptBlock = {

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ScriptBlock {

$rg = $(Get-AzResourceGroup).Count

if (-not $rg) {

Write-Error "Hit an issue..."

}

else {

Write-Output "No problem..."

}

}

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

}

$jobs = @()

for ($i = 0; $i -lt 5; $i++) {

$jobs += Start-Job -ScriptBlock $scriptBlock

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

So what you are doing is basically this:

(1..5 | % {Start-Job -ScriptBlock {

(1..10 | % {Start-Job -ScriptBlock {

$null = Get-AzResourceGroup -ErrorAction Stop

}}) | Wait-Job | Receive-Job

}}) | Wait-Job | Receive-Job

That is nesting jobs in jobs. The above runs the same test 50 times (5 outer jobs × 10 nested jobs), which fits fairly well with my observed error rate of 2%, i.e. 1 in 50 will fail. |
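To put a number on an intermittent failure like this, one option is to count how many jobs end in the `Failed` state. The following is only a minimal sketch: `Measure-JobFailureRate` is a hypothetical helper name, not part of the Az module, and it relies on the script block raising a terminating error (for example via `-ErrorAction Stop`) so that the job state reflects the failure.

```powershell
# Hypothetical helper (not part of Az): runs a script block in $Count
# background jobs and reports how many of them ended in the 'Failed' state.
function Measure-JobFailureRate {
    param(
        [Parameter(Mandatory)] [scriptblock] $ScriptBlock,
        [int] $Count = 50
    )

    # Start all jobs first so they run concurrently, then wait for them.
    $jobs = 1..$Count | ForEach-Object { Start-Job -ScriptBlock $ScriptBlock }
    $null = $jobs | Wait-Job

    # A job whose script block throws a terminating error ends as 'Failed'.
    $failed = @($jobs | Where-Object { $_.State -eq 'Failed' }).Count
    $jobs | Remove-Job

    [pscustomobject]@{
        Total       = $Count
        Failed      = $failed
        FailureRate = $failed / $Count
    }
}
```

It could be called as `Measure-JobFailureRate -Count 50 -ScriptBlock { $null = Get-AzResourceGroup -ErrorAction Stop }`. This version does not nest jobs, so the rate it reports may differ from the nested case described above.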

|

You can get a debug trace from when it fails like this:

(1..5 | % {Start-Job -ScriptBlock {

(1..10 | % {Start-Job -ScriptBlock {

$DebugPreference = 'Continue'

$Path = "$($env:TEMP)\20062019_$([guid]::NewGuid().Guid).txt"

try {

$null = Get-AzResourceGroup -ErrorAction Stop 5>&1 > $Path

# Remove file if it did not fail

Remove-Item $Path

}

catch {

Write-Host "Failed, written debug to $Path. Error was $_"

notepad $Path

}

$DebugPreference = 'SilentlyContinue'

}}) | Wait-Job | Receive-Job

}}) | Wait-Job | Receive-Job

Maybe it is just me, but it seems to fail more often when done like this. The debug trace I am getting is this |

This is fantastic, I can reproduce the issue with this consistently as well. I did modify it slightly to include passing the context as a parameter to the job, and then passing the context to the Get-AzResourceGroup command. This matches how I was encountering the issue originally, but I can get it to fail both ways, so it may not be important. I've included the full code below with my changes for reference. But overall, this is absolutely perfect--this is definitely the best way to reproduce this issue at this point.

Seems my suspicion it was time-related is unfounded. I do find it interesting though that I would generally encounter it on the first job of the day after having not run anything for 24-48 hours. Seems immaterial now, but I do find it odd.

Should I replace my reproduction steps in the original post with the code @petehauge has provided? As referenced above, here's the full code with my small modifications:

$AzContext = Get-AzContext

$scriptBlock = {

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ArgumentList $AzContext -ScriptBlock {

param($AzContext)

$rg = $(Get-AzResourceGroup -AzContext $AzContext).Count

if (-not $rg) {

Write-Error "Hit an issue..."

}

else {

Write-Output "No problem..."

}

}

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

}

$jobs = @()

for ($i = 0; $i -lt 5; $i++) {

$jobs += Start-Job -ScriptBlock $scriptBlock

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

|

The code I provided shows the debug trace, but they will read the entire thread and work from that. Maybe @markcowl or @cormacpayne can comment on this? And just to repeat a comment I made earlier, this seems not to be a problem when using runspaces, but perhaps similar testing should be done before clearing runspaces entirely. |

|

I see the repro randomly in my code.

We randomly see the error below at step 3. The error goes away when the function is rerun. Your Azure credentials have not been set up or have expired, please run Connect-AzAccount to set up your Azure credentials Until it gets fixed, is there any workaround for this error? |
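Since the error reportedly disappears on a rerun, a simple retry wrapper is one possible stopgap. This is a sketch under assumptions, not a fix for the underlying problem: `Invoke-WithRetry` and its parameters are hypothetical names, and the wrapper only retries terminating errors, so Az cmdlets inside the script block would need `-ErrorAction Stop`.

```powershell
# Hypothetical helper (not part of Az): re-invokes a script block until it
# succeeds or the attempt limit is reached.
function Invoke-WithRetry {
    param(
        [Parameter(Mandatory)] [scriptblock] $ScriptBlock,
        [int] $MaxAttempts = 3,
        [int] $DelaySeconds = 2
    )

    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            # Return the block's output from the first successful attempt.
            return & $ScriptBlock
        }
        catch {
            # Out of attempts: rethrow the last error to the caller.
            if ($attempt -eq $MaxAttempts) { throw }
            Write-Warning "Attempt $attempt failed: $_"
            Start-Sleep -Seconds $DelaySeconds
        }
    }
}
```

For example, `Invoke-WithRetry -ScriptBlock { Get-AzResourceGroup -ErrorAction Stop }` would try the call up to three times. Retrying hides the symptom rather than removing the cause, so it is best treated as temporary.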

|

@bingbing8 @spaelling @arpparker The likely culprit here is an issue with the type converter in the job. The type converter is used in this case because the type of the cmdlet parameter is To work around the issue, you can pass in an IAzureContextContainer. In a typical Azure environment, this would mean passing the results of running so

$context = Connect-AzAccount -Subscription "My Subscription" -Tenant xxxx-xxxxxx-xxxxxx-yyyyy

$job = Start-Job -ArgumentList $context -ScriptBlock {param($AzContext) Get-AzVm -AzContext $AzContext ...}

|

@markcowl, below is the code we run. I didn't pass in the result of Get-AzContext. It fails randomly. Note that it failed randomly when run concurrently in multiple instances of a queue-triggered Azure PowerShell function. Most of the time, the first trigger fails (either after pushing new changes or after not running it for a long time, like 24 hours); the second run after the first failure works fine no matter how many instances are running. |

I tried this with running 5 jobs, each with 10 nested jobs, and still got an error. I think even more than usual, but could just be coincidental.

$AzContext = Connect-AzAccount -Tenant 'TENANTID' -Subscription 'SUBSCRIPTIONID'

$scriptBlock = {

param($AzContext)

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ArgumentList $AzContext -ScriptBlock {

param($AzContext)

# make sure this is not $null (will then grab from Get-AzContext)

if($null -eq $AzContext)

{

throw "Azure context is '`$null'"

}

$rg = $(Get-AzResourceGroup -AzContext $AzContext).Count

if (-not $rg) {

Write-Error "Hit an issue..."

}

else {

Write-Output "No problem..."

}

}

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs (NESTED) to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

}

$jobs = @()

for ($i = 0; $i -lt 5; $i++) {

$jobs += Start-Job -ScriptBlock $scriptBlock -ArgumentList $AzContext

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

|

Ha, you beat me to this by like 10 minutes! :) Was just coming to post the same, I'm getting the same results. I think I have found a workaround though, based on one that was posted in a similar issue that I linked in the original post. More to come in a few minutes... |

|

The following, based almost entirely on this post, has worked 100% of the time for me:

Save-AzContext -Path "C:\Temp\AzContext.json" -Force

$scriptBlock = {

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ScriptBlock {

#Clear-AzContext -Force | Out-Null

Disable-AzContextAutosave -Scope Process | Out-Null

Import-AzContext -Path "C:\Temp\AzContext-Empty.json" | Out-Null

Import-AzContext -Path "C:\Temp\AzContext.json" | Out-Null

$AzContext = Get-AzContext

$rg = $(Get-AzResourceGroup -AzContext $AzContext).Count

if (-not $rg) {

Write-Error "Hit an issue..."

}

else {

Write-Output "No problem..."

}

}

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

}

$jobs = @()

for ($i = 0; $i -lt 5; $i++) {

$jobs += Start-Job -ScriptBlock $scriptBlock

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

A couple notes:

I don't really understand why this works, but it does seem to work. What are the potential security implications of saving the context to disk? |

The implication is that you are committing the access token to disk. That can be problematic, as someone could potentially elevate their access in Azure by having access to this file. I find it odd that you have to import an empty context. I have done the same as you, but passing the access token and an account ID along. But this still fails, which is odd. It should be pretty much equivalent to logging in as a service principal.

<#

.SYNOPSIS

Get cached access token

.DESCRIPTION

Get cached access token

.EXAMPLE

An example

.NOTES

This will fail if multiple accounts are logged in (to the same tenant?), check with Get-AzContext -ListAvailable, there should be only one listed

Remove accounts using Disconnect-AzAccount

#>

function Get-AzCachedAccessToken()

{

$ErrorActionPreference = 'Stop'

if(-not (Get-Module Az.Accounts)) {

Import-Module Az.Accounts

}

$azProfile = [Microsoft.Azure.Commands.Common.Authentication.Abstractions.AzureRmProfileProvider]::Instance.Profile

if(-not $azProfile.Accounts.Count) {

Write-Error "Ensure you have logged in before calling this function."

}

$currentAzureContext = Get-AzContext

$profileClient = New-Object Microsoft.Azure.Commands.ResourceManager.Common.RMProfileClient($azProfile)

Write-Debug ("Getting access token for tenant " + $currentAzureContext.Tenant.TenantId)

$token = $profileClient.AcquireAccessToken($currentAzureContext.Tenant.TenantId)

$token.AccessToken

}

$Token = Get-AzCachedAccessToken

$AccountId = (Get-AzContext).Account.Id

#Connect-AzAccount -AccessToken $Token -AccountId $AccountId

cls

$scriptBlock = {

param($Token, $AccountId)

$jobs = @()

for ($i = 0; $i -lt 5; $i++) {

$jobs += Start-Job -ArgumentList $Token, $AccountId -ScriptBlock {

param($Token, $AccountId)

Disable-AzContextAutosave -Scope Process | Out-Null

$AzContext = Connect-AzAccount -AccessToken $Token -AccountId $AccountId -Scope Process -ErrorAction SilentlyContinue

if($null -eq $Token -or $null -eq $AzContext)

{

throw "Azure Token/Context is '`$null'"

}

$rg = $(Get-AzResourceGroup -AzContext $AzContext -ErrorAction SilentlyContinue).Count

if (-not $rg) {

Write-Error "Hit an issue..."

}

else {

Write-Output "No problem..."

}

}

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs (NESTED) to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

}

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ScriptBlock $scriptBlock -ArgumentList $Token, $AccountId

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

|

@spaelling, it looks like your workaround works when the logins in the different jobs use the same context, so you can write/read the context from disk. In my case, we log in to different tenants with different subscriptions in different functions, so it does not work that way. |

|

I tried all the workarounds mentioned here, but none of them seems to work. |

|

I've been struggling with this for a week trying to upgrade our existing deployment scripts from AzureRM to Az. None of the workarounds posted here are working for us. Neither exporting/importing the context to a file nor passing the context to the scriptblock works. We make multiple calls to azure endpoints within the scriptblocks in parallel and we won't be able to finish migrating to Az until this works. |

|

The |

|

@arpparker , could you check if the latest Az can reproduce the problem? |

|

@dingmeng-xue - I just upgraded to the latest version of Azure PowerShell with this command (admin window): And retried my repro from above and still see the same issue. Here's the code:

$scriptBlock = {

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ScriptBlock {

$rg = $(Get-AzResourceGroup).Count

if (-not $rg) {

Write-Error "Hit an issue..."

}

else {

Write-Output "No problem..."

}

}

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

}

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ScriptBlock $scriptBlock

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

|

I'll give my reproduction steps a go tomorrow morning. But I can confirm that reproduction steps from @petehauge do indeed still fail for me. If I recall correctly, we aren't passing the Azure context into the script block in quite the same way though, so I'll definitely see if I can reproduce using my original steps. Stay tuned. EDIT: Also, just to confirm, the latest version of the Az module should be v4.3.0, correct? That's what I'm seeing after running the Update-Module command for Az. |

@dingmeng-xue, unfortunately the issue is not resolved. I was able to replicate the problem again using both my reproduction steps above and in the original script where I first discovered the issue. As mentioned above, the version of Az installed is v4.3. |

|

I believe this might be related to locks. Some of the jobs cannot get access to the token cache file, so they fall back to in-memory mode, which of course is empty and contains no access tokens, hence the error. I'm trying to figure out a solution. Will keep updating. |

|

Yes, I agree that it's probably related to locks. I was able to develop a workaround that seems to always work using a mutex before making any calls to Azure in each thread - the code is below. This tells me that as long as no jobs access the token cache at the same time they don't fail...

$scriptBlock = {

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ScriptBlock {

# WORKAROUND: https://github.com/Azure/azure-powershell/issues/9448

$Mutex = New-Object -TypeName System.Threading.Mutex -ArgumentList $false, "Global\AzDtlLibrary"

$Mutex.WaitOne() | Out-Null

Enable-AzContextAutosave -Scope Process | Out-Null

$rg = Get-AzResourceGroup | Out-Null

$Mutex.ReleaseMutex() | Out-Null

$rg = $(Get-AzResourceGroup).Count

if (-not $rg) {

Write-Error "Hit an issue..."

}

else {

Write-Output "No problem..."

}

}

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

}

$jobs = @()

for ($i = 0; $i -lt 10; $i++) {

$jobs += Start-Job -ScriptBlock $scriptBlock

}

if($jobs.Count -ne 0)

{

Write-Output "Waiting for $($jobs.Count) test runner jobs to complete"

foreach ($job in $jobs){

$result = Receive-Job $job -Wait

Write-Output $result

}

Remove-Job -Job $jobs

}

|

@isra-fel Thanks for the update! Looking forward to what you find.

@petehauge This sounds very promising, but this might extend beyond my level of expertise. Can you explain what exactly this is doing, I'm not sure I'm following. How would I (if possible) incorporate into my initial reproduction script in the original post considering I'm passing the Azure context as a parameter to the script block? |

|

@arpparker - sure! Basically, the code is ensuring that the first line of code that needs to get a context runs only one at a time, by using a single mutex. I.e., you could incorporate this into your code this way:

$context = Connect-AzAccount -Subscription "My Subscription" -Tenant xxxx-xxxxxx-xxxxxx-yyyyy

$job = Start-Job -ArgumentList $context -ScriptBlock {

param($AzContext)

# WORKAROUND: https://github.com/Azure/azure-powershell/issues/9448

$Mutex = New-Object -TypeName System.Threading.Mutex -ArgumentList $false, "Global\MyCustomMutex"

$Mutex.WaitOne() | Out-Null

# Put only the first line of AZ powershell code here

# this ensures that the first time on the thread we check tokens only one at a time

$vms = Get-AzVm -AzContext $AzContext

$Mutex.ReleaseMutex() | Out-Null

# Additional code goes here

}

NOTE: I didn't test the code, but this is about what you would need... The first Az command in a script block needs to be guarded by a mutex so it executes only one at a time across all the threads. |
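One hardening step worth considering for the mutex approach is releasing the mutex in a `finally` block, so that a failing Az call cannot leave it held and stall the other jobs. The sketch below illustrates that idea only; `Invoke-WithGlobalMutex` is a hypothetical helper name, and the mutex name passed to it is arbitrary.

```powershell
# Hypothetical helper (not part of Az): runs a script block while holding a
# named, machine-wide mutex and always releases the mutex afterwards.
function Invoke-WithGlobalMutex {
    param(
        [Parameter(Mandatory)] [string] $Name,
        [Parameter(Mandatory)] [scriptblock] $ScriptBlock
    )

    $mutex = New-Object -TypeName System.Threading.Mutex -ArgumentList $false, "Global\$Name"
    $held = $false
    try {
        try {
            # Blocks until no other process or thread holds the mutex.
            $held = $mutex.WaitOne()
        }
        catch [System.Threading.AbandonedMutexException] {
            # A previous owner exited without releasing the mutex;
            # ownership has still been transferred to this thread.
            $held = $true
        }
        & $ScriptBlock
    }
    finally {
        # Runs even if the script block throws.
        if ($held) { $mutex.ReleaseMutex() }
        $mutex.Dispose()
    }
}
```

Because `Start-Job` runs each job in a separate process, the function would have to be defined inside the job's script block before use, for example `Invoke-WithGlobalMutex -Name 'MyCustomMutex' -ScriptBlock { Get-AzVm -AzContext $AzContext }` as the first Az call.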

|

Hi all, |

|

This is such a relief! I ran through my repro above and it works, the issue looks like it's fixed! I really appreciate getting this fixed, I'm going to go throw away all my workaround code... :-) Thanks! |

|

All right, I'll close the issue. |

|

I can also confirm this appears to be fixed for me as well. @petehauge thanks for the explanation of the workaround a few weeks ago, I never even got a chance to try it. This is even better! :) Thanks everyone! |

|

This issue re-appeared since yesterday (23rd of July 2020). Upgrading Az.Accounts to 1.9.1 doesn't help |

|

@PavelPikat, many things can lead to this error. Could you raise a new issue so we can continue following up? If you can describe your steps in more detail, that would be great. |

|

It seems to be Azure DevOps pipeline specific, so I raised microsoft/azure-pipelines-tasks#13348 with them |

Description

This issue is very similar to several previous issues here, here, and here. When passing the current Azure context to the Start-Job command, the first job that completes will often fail with the error message, "Your Azure credentials have not been set up or have expired, please run Connect-AzAccount to set up your Azure credentials". Subsequent commands complete successfully.

Steps to reproduce

Finding a way to consistently reproduce this has nearly driven me mad. I fully realize the steps below may seem oddly specific, but what I've outlined is the only way I've been able to reliably and consistently reproduce the issue. There may very well be a better way to reproduce it (or a way with fewer steps), but this method works for me every time.

Environment data

Module versions

Debug output

NOTE: Any potentially private information has been blanked out with 'xxx'. If anything that was blanked out is needed, please contact me privately.

Error output
