| layout | background-class | body-class | category | title | summary | image | author | tags | github-link | github-id | featured_image_1 | featured_image_2 | accelerator |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| hub_detail | hub-background | hub | researchers | YOLOv5 | YOLOv5 in PyTorch > ONNX > CoreML > TFLite | ultralytics_yolov5_img0.jpg | Ultralytics LLC | | | ultralytics/yolov5 | ultralytics_yolov5_img1.jpg | ultralytics_yolov5_img2.png | cuda-optional |

Start from a working Python environment with Python>=3.8 and PyTorch>=1.6 installed, as well as PyYAML>=5.3 for reading YOLOv5 configuration files. To install PyTorch, see https://pytorch.org/get-started/locally/. To install dependencies:

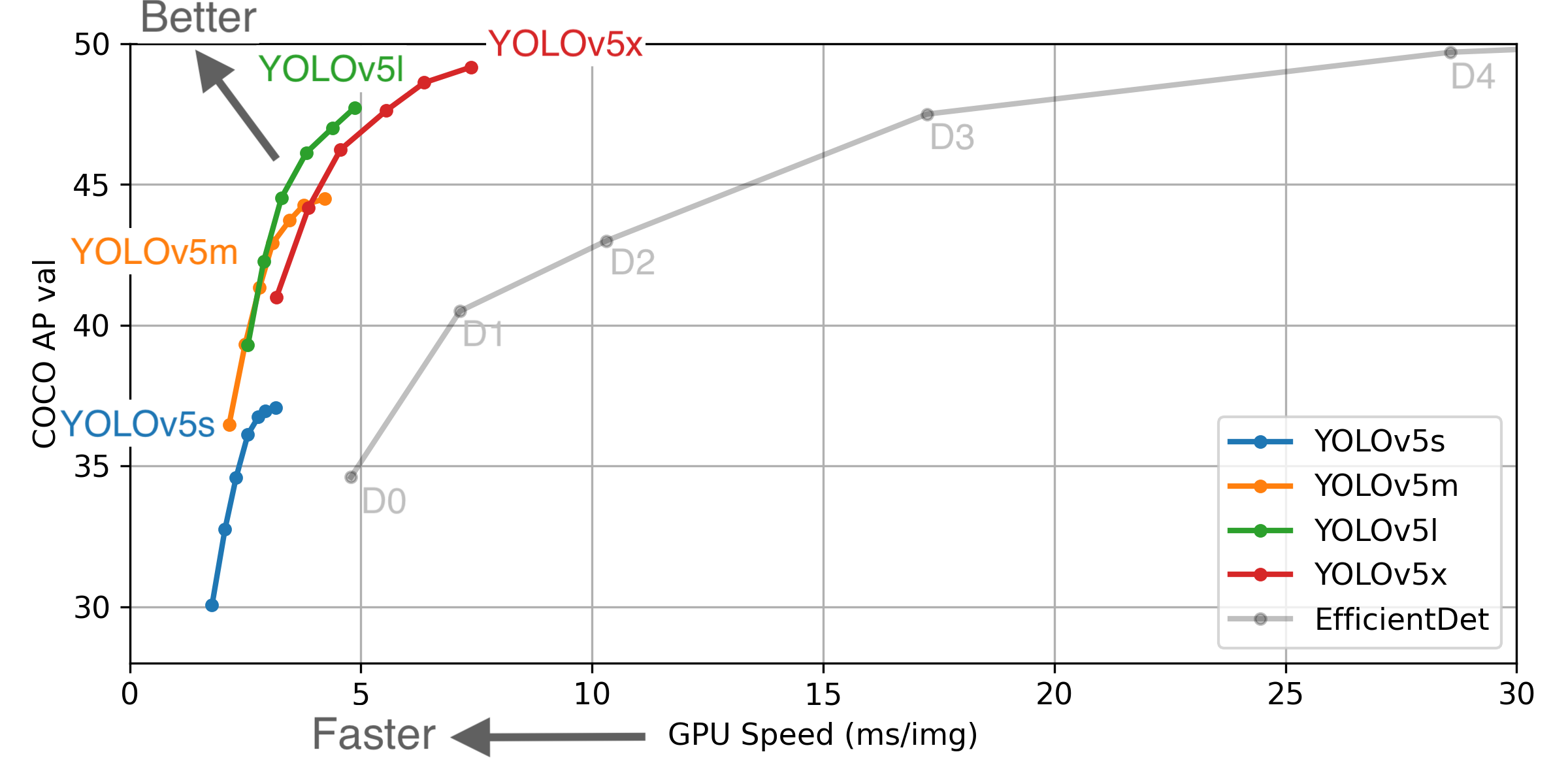

pip install -U PyYAML # install dependenciesYOLOv5 is a family of compound-scaled object detection models trained on COCO 2017, and includes built-in functionality for Test Time Augmentation (TTA), Model Ensembling, Rectangular Inference, Hyperparameter Evolution.

| Model | AP (val) | AP (test) | AP50 | Speed (GPU) | FPS (GPU) | Params | FLOPS |
|---|---|---|---|---|---|---|---|
| YOLOv5s | 37.0 | 37.0 | 56.2 | 2.4ms | 416 | 7.5M | 13.2B |
| YOLOv5m | 44.3 | 44.3 | 63.2 | 3.4ms | 294 | 21.8M | 39.4B |
| YOLOv5l | 47.7 | 47.7 | 66.5 | 4.4ms | 227 | 47.8M | 88.1B |
| YOLOv5x | 49.2 | 49.2 | 67.7 | 6.9ms | 145 | 89.0M | 166.4B |
| YOLOv5x + TTA | 50.8 | 50.8 | 68.9 | 25.5ms | 39 | 89.0M | 354.3B |
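The speed and FPS columns appear to be two views of the same measurement: frames per second is roughly 1000 divided by the per-image latency in milliseconds. The following is a minimal sketch of that arithmetic using the values from the table; the helper name `fps_from_latency_ms` and the `TABLE` dictionary are illustrative and not part of YOLOv5.

```python
# Latency (ms per image) and FPS as listed in the table above.
TABLE = {
    "YOLOv5s": (2.4, 416),
    "YOLOv5m": (3.4, 294),
    "YOLOv5l": (4.4, 227),
    "YOLOv5x": (6.9, 145),
    "YOLOv5x + TTA": (25.5, 39),
}


def fps_from_latency_ms(latency_ms: float) -> float:
    """Convert per-image latency in milliseconds to images per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Compare the reciprocal estimate with the FPS value listed in the table.
for name, (latency_ms, listed_fps) in TABLE.items():
    estimate = fps_from_latency_ms(latency_ms)
    print(f"{name:14s} {latency_ms:5.1f} ms -> {estimate:6.1f} FPS (table: {listed_fps})")
```

The estimates land within one frame per second of the listed values; the small differences are consistent with the latencies having been rounded to one decimal place.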

To load YOLOv5 from PyTorch Hub for inference with PIL, OpenCV, NumPy or PyTorch inputs:

```python
import cv2
import torch
from PIL import Image

# Model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True).fuse().autoshape()  # for PIL/cv2/np inputs and NMS

# Images
for f in ['zidane.jpg', 'bus.jpg']:  # download 2 images
    print(f'Downloading {f}...')
    torch.hub.download_url_to_file('https://github.com/ultralytics/yolov5/releases/download/v1.0/' + f, f)
img1 = Image.open('zidane.jpg')  # PIL image
img2 = cv2.imread('bus.jpg')[:, :, ::-1]  # OpenCV image (BGR to RGB)
imgs = [img1, img2]  # batched list of images

# Inference
results = model(imgs, size=640)  # includes NMS

# Results
results.print()  # print results to screen
results.show()  # display results
results.save()  # save as results1.jpg, results2.jpg... etc.

# Data
print('\n', results.xyxy[0])  # print img1 predictions
#          x1 (pixels)  y1 (pixels)  x2 (pixels)  y2 (pixels)   confidence        class
# tensor([[7.47613e+02, 4.01168e+01, 1.14978e+03, 7.12016e+02, 8.71210e-01, 0.00000e+00],
#         [1.17464e+02, 1.96875e+02, 1.00145e+03, 7.11802e+02, 8.08795e-01, 0.00000e+00],
#         [4.23969e+02, 4.30401e+02, 5.16833e+02, 7.20000e+02, 7.77376e-01, 2.70000e+01],
#         [9.81310e+02, 3.10712e+02, 1.03111e+03, 4.19273e+02, 2.86850e-01, 2.70000e+01]])
```

Issues should be raised directly in the repository. For business inquiries or professional support requests please visit https://www.ultralytics.com or email Glenn Jocher at glenn.jocher@ultralytics.com.
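Each row of the prediction tensor printed in the inference example holds `x1, y1, x2, y2, confidence, class`. As a rough sketch of how such rows might be post-processed, the snippet below filters them by confidence and derives box sizes in plain Python. The `Detection` type, the `summarize` helper, the 0.5 threshold, and the two-entry `CLASS_NAMES` mapping (an assumed subset of the COCO class list) are all illustrative and not part of the YOLOv5 API.

```python
from typing import Dict, List, NamedTuple, Sequence


class Detection(NamedTuple):
    label: str
    confidence: float
    width: float   # box width in pixels
    height: float  # box height in pixels


# Rows copied from the printed tensor: x1, y1, x2, y2, confidence, class
ROWS = [
    [747.613, 40.1168, 1149.78, 712.016, 0.871210, 0.0],
    [117.464, 196.875, 1001.45, 711.802, 0.808795, 0.0],
    [423.969, 430.401, 516.833, 720.000, 0.777376, 27.0],
    [981.310, 310.712, 1031.11, 419.273, 0.286850, 27.0],
]

# Assumption: class indices 0 and 27 map to these COCO names.
CLASS_NAMES: Dict[int, str] = {0: "person", 27: "tie"}


def summarize(rows: Sequence[Sequence[float]],
              names: Dict[int, str],
              min_conf: float = 0.5) -> List[Detection]:
    """Keep rows at or above min_conf and convert corners to box sizes."""
    detections = []
    for x1, y1, x2, y2, conf, cls in rows:
        if conf < min_conf:
            continue  # drop low-confidence predictions
        label = names.get(int(cls), f"class_{int(cls)}")
        detections.append(Detection(label, conf, x2 - x1, y2 - y1))
    return detections


for det in summarize(ROWS, CLASS_NAMES):
    print(f"{det.label:8s} conf={det.confidence:.2f} size={det.width:.0f}x{det.height:.0f}px")
```

With live results, the same helper could presumably be fed `results.xyxy[0].tolist()` in place of the hard-coded `ROWS`.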